Looking for the best Gemini API alternatives with free tier in 2025? You're in the right place. After Google's December 2025 rate limit cuts that slashed free tier quotas by up to 92%, developers worldwide need reliable alternatives. This guide covers 15+ free LLM API options with detailed rate limits, setup code, and honest recommendations.

Quick answer: The best free Gemini API alternatives are Groq (fastest, 14,400 requests/day), OpenRouter (30+ free models), and Mistral AI (1 billion tokens/month). All three require no credit card and offer OpenAI-compatible APIs.

Why You Need Gemini API Alternatives in December 2025

Google's Gemini API has been a popular choice for developers due to its generous free tier and impressive 1M token context window. However, recent changes have made alternatives more attractive than ever.

Google's December 2025 Rate Limit Changes

On December 6, 2025, Google made significant changes to Gemini's free tier without prior notice:

| Change | Before | After | Reduction |
|---|---|---|---|
| Gemini 2.5 Flash daily requests | 250 | 20 | 92% |
| Gemini 2.5 Pro access | Free | Removed | 100% |
| Rate limits (RPM) | 15-30 | 5-15 | 50-67% |
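The reduction column can be checked with a few lines of arithmetic. This is only a sketch: `reductionPercent` is a helper name made up for illustration, and the inputs are the before/after figures from the table.

```typescript
// Percentage reduction between an old and a new quota.
// (reductionPercent is a hypothetical helper, not part of any SDK.)
function reductionPercent(before: number, after: number): number {
  if (before <= 0) throw new RangeError("before must be positive");
  return ((before - after) / before) * 100;
}

// Gemini 2.5 Flash daily requests: 250 -> 20
console.log(reductionPercent(250, 20).toFixed(0) + "%"); // 92%

// RPM range endpoints: 15 -> 5 and 30 -> 15
console.log(reductionPercent(15, 5).toFixed(0) + "%");   // 67%
console.log(reductionPercent(30, 15).toFixed(0) + "%");  // 50%
```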

These sudden cuts left many developers scrambling. Apps that relied on the free tier started throwing "429 quota exceeded" errors, breaking production systems overnight.
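One common way to soften 429 errors is to retry with exponential backoff. The sketch below assumes the thrown error carries a numeric `status` field (as HTTP client errors typically do); `withBackoff`, the retry count, and the delays are illustrative choices, not values recommended by any provider.

```typescript
// Any error object that may carry an HTTP status code.
type HttpishError = { status?: number };

const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Retries `call` on HTTP 429 with exponential backoff plus a little jitter.
// maxRetries and baseDelayMs are assumptions; tune them for your provider.
async function withBackoff<T>(
  call: () => Promise<T>,
  maxRetries = 4,
  baseDelayMs = 500
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await call();
    } catch (err) {
      const status = (err as HttpishError)?.status;
      // Only rate-limit errors are retried; everything else propagates.
      if (status !== 429 || attempt >= maxRetries) throw err;
      const delay = baseDelayMs * 2 ** attempt + Math.random() * 100;
      await sleep(delay);
    }
  }
}

// Usage (hypothetical): wrap any API call that may be rate limited.
// const reply = await withBackoff(() => callYourProvider("Hello"));
```

Backoff helps with short per-minute limits. It will not rescue an app that has exhausted a daily quota, which is where switching providers comes in.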

Impact on Developers

The rate limit changes particularly affected:

- Indie developers building AI-powered side projects

- Students learning to work with LLM APIs

- Startups validating product ideas before securing funding

- Open-source projects that can't afford API costs

According to GitHub's 2025 Octoverse report, indie developers contribute 40% of open-source AI projects. These free tier restrictions create significant barriers to innovation.

When to Consider Switching

Consider alternatives when:

- You need more than 20 requests per day

- You want predictable, stable rate limits

- You prefer providers with transparent pricing changes

- You need faster inference speeds (Groq offers 300+ tokens/second)

- You want access to different model architectures

Top 15 Free Gemini API Alternatives Compared

Here's a comprehensive comparison of all major free LLM API options available in December 2025:

Quick Comparison Table

| Provider | Rate Limit | Token Limit / Credits | Credit Card | Best For |
|---|---|---|---|---|
| Groq | 14,400 req/day | 70K TPM | No | Speed-critical apps |
| Mistral AI | 1 req/sec | 1B tokens/month | No | Code generation |
| OpenRouter | 20 req/min, 50 req/day | Varies | No | Model variety |
| Google AI Studio | 5-30 req/min | 250K TPM | No | Long context |
| Cohere | 20 req/min | 1K requests/month | No | RAG applications |
| HuggingFace | Moderate | $0.10/month in credits | No | Experimentation |
| GitHub Models | Tier-based | Low | No | GitHub users |
| Together AI | - | $25 credits | No | Llama 4 access |
| Baseten | - | $30 credits | No | Custom models |
| AI21 Labs | - | $10 credits | No | Text analysis |
| Cloudflare | Varies | 10K neurons/day | No | Edge deployment |
| SambaNova | - | $5 credits | No | Enterprise testing |
| Replicate | - | $5 credits | No | Image/video |
| NVIDIA NIM | 40 req/min | Limited | No | NVIDIA ecosystem |
| Cerebras | Free tier | Varies | No | Research |

Best for Beginners

If you're new to LLM APIs, start with these:

- Google AI Studio - Despite the cuts, still has the easiest setup

- Groq - Simple API, excellent documentation

- OpenRouter - One API for multiple models

Best for Production

For production workloads on a budget:

- Mistral AI - 1 billion tokens/month is substantial

- Groq - 14,400 daily requests with consistent performance

- Together AI - $25 credits go far with efficient models

Free Tier Providers (No Credit Card Required)

Let's dive deep into each provider that offers truly free access without requiring payment information.

Google AI Studio (Remaining Free Tier)

Despite the December cuts, Google AI Studio still offers value:

Current limits (December 2025):

- Gemini 2.5 Flash: 5-15 RPM, 250K TPM

- Gemini 2.5 Flash-Lite: 30 RPM, 250K TPM

- Context window: 1 million tokens

Caveats:

- Data used for model training (outside EU/UK/CH)

- Unpredictable limit changes

- No Gemini 2.5 Pro on free tier

```typescript
// Google AI Studio setup
import { GoogleGenerativeAI } from "@google/generative-ai";

const genAI = new GoogleGenerativeAI(process.env.GOOGLE_API_KEY ?? "");
// Gemini 2.5 Flash is the free-tier model listed above
const model = genAI.getGenerativeModel({ model: "gemini-2.5-flash" });

const result = await model.generateContent("Explain quantum computing");
console.log(result.response.text());
```

Groq - Fastest Free Option

Groq stands out with custom LPU hardware delivering 300+ tokens per second:

Free tier limits:

- Llama 3.3 70B: 14,400 requests/day, 70K tokens/min

- Mixtral 8x7B: 14,400 requests/day, 32K tokens/min

- Whisper (audio): 7,200 requests/day
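To put these limits in perspective, it helps to convert the daily request cap into a sustained per-minute rate. The sketch below is plain arithmetic on the Llama 3.3 70B figures above; the function names are made up for illustration.

```typescript
const MINUTES_PER_DAY = 24 * 60; // 1,440

// Average requests per minute if a daily cap is spread evenly over the day.
function sustainedRpm(requestsPerDay: number): number {
  return requestsPerDay / MINUTES_PER_DAY;
}

// Average token budget per request when both limits are fully used.
function tokensPerRequest(tokensPerMinute: number, rpm: number): number {
  return tokensPerMinute / rpm;
}

const rpm = sustainedRpm(14_400);
console.log(rpm);                           // 10
console.log(tokensPerRequest(70_000, rpm)); // 7000
```

In other words, 14,400 requests/day works out to about 10 requests per minute around the clock, with roughly 7,000 tokens available per request before the 70K TPM limit becomes the bottleneck.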

Why choose Groq:

- Fastest inference in the industry

- OpenAI-compatible API

- No data training on your prompts

- Excellent for real-time applications

```typescript
// Groq setup
import OpenAI from 'openai';

const groq = new OpenAI({
  baseURL: 'https://api.groq.com/openai/v1',
  apiKey: process.env.GROQ_API_KEY
});

const completion = await groq.chat.completions.create({
  model: 'llama-3.3-70b-versatile',
  messages: [{ role: 'user', content: 'Hello!' }],
  temperature: 0.7
});
```

OpenRouter - Most Model Variety

OpenRouter aggregates multiple providers through one API:

Free tier includes:

- 30+ free models

- 20 requests/minute

- 50 requests/day base (1,000/day with $10 lifetime topup)

- Models: Llama, Mistral, Qwen, Gemma variants

Unique advantage: Try different models without switching providers.

```typescript
// OpenRouter setup
import OpenAI from 'openai';

const openrouter = new OpenAI({
  baseURL: 'https://openrouter.ai/api/v1',
  apiKey: process.env.OPENROUTER_API_KEY,
  defaultHeaders: {
    'HTTP-Referer': 'https://your-app.com',
    'X-Title': 'Your App Name'
  }
});

const response = await openrouter.chat.completions.create({
  model: 'meta-llama/llama-3.1-8b-instruct:free',
  messages: [{ role: 'user', content: 'Explain REST APIs' }]
});
```
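With a base quota of 50 requests/day, it can be worth counting requests on the client so you stop before the provider starts rejecting them. The sketch below is one possible approach: `DailyQuota` is a hypothetical class, and resetting at UTC midnight is an assumption, since the provider's actual reset window may differ.

```typescript
// Client-side counter for a per-day request quota.
class DailyQuota {
  private readonly limit: number;
  private readonly now: () => Date;
  private day = "";
  private used = 0;

  // `now` is injectable so the clock can be faked in tests.
  constructor(limit: number, now: () => Date = () => new Date()) {
    this.limit = limit;
    this.now = now;
  }

  // Reset the counter when the UTC date changes (assumed reset boundary).
  private rollOver(): void {
    const today = this.now().toISOString().slice(0, 10); // "YYYY-MM-DD"
    if (today !== this.day) {
      this.day = today;
      this.used = 0;
    }
  }

  // Counts one request and returns true, or returns false if the quota is spent.
  tryConsume(): boolean {
    this.rollOver();
    if (this.used >= this.limit) return false;
    this.used++;
    return true;
  }

  // Requests left for the current UTC day.
  remaining(): number {
    this.rollOver();
    return this.limit - this.used;
  }
}

const openrouterQuota = new DailyQuota(50);
if (openrouterQuota.tryConsume()) {
  // Safe to send a request here.
}
console.log(openrouterQuota.remaining()); // 49
```

The counter lives in memory, so it resets when the process restarts. A long-running service would need to persist it somewhere.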

For more on API pricing and options, check our ChatGPT API pricing guide.

Trial Credit Providers

These providers offer free credits to get started:

Together AI ($25 Credits)

Together AI provides access to cutting-edge models:

What you get:

- $25 free credits (one-time)

- Llama 4 Scout and Maverick access

- Competitive per-token pricing after credits

```typescript
// Together AI setup
import OpenAI from 'openai';

const together = new OpenAI({
  baseURL: 'https://api.together.xyz/v1',
  apiKey: process.env.TOGETHER_API_KEY
});

const response = await together.chat.completions.create({
  model: 'meta-llama/Llama-4-Scout-17B-16E-Instruct',
  messages: [{ role: 'user', content: 'Write a poem about AI' }]
});
```

Baseten ($30 Credits)

Baseten specializes in model deployment:

- $30 free credits

- Deploy custom models

- Good for ML engineers testing infrastructure

AI21 Labs ($10 Credits)

AI21's Jurassic and Jamba models:

- $10 credits valid for 3 months

- Strong at text analysis and summarization

- Unique model architecture

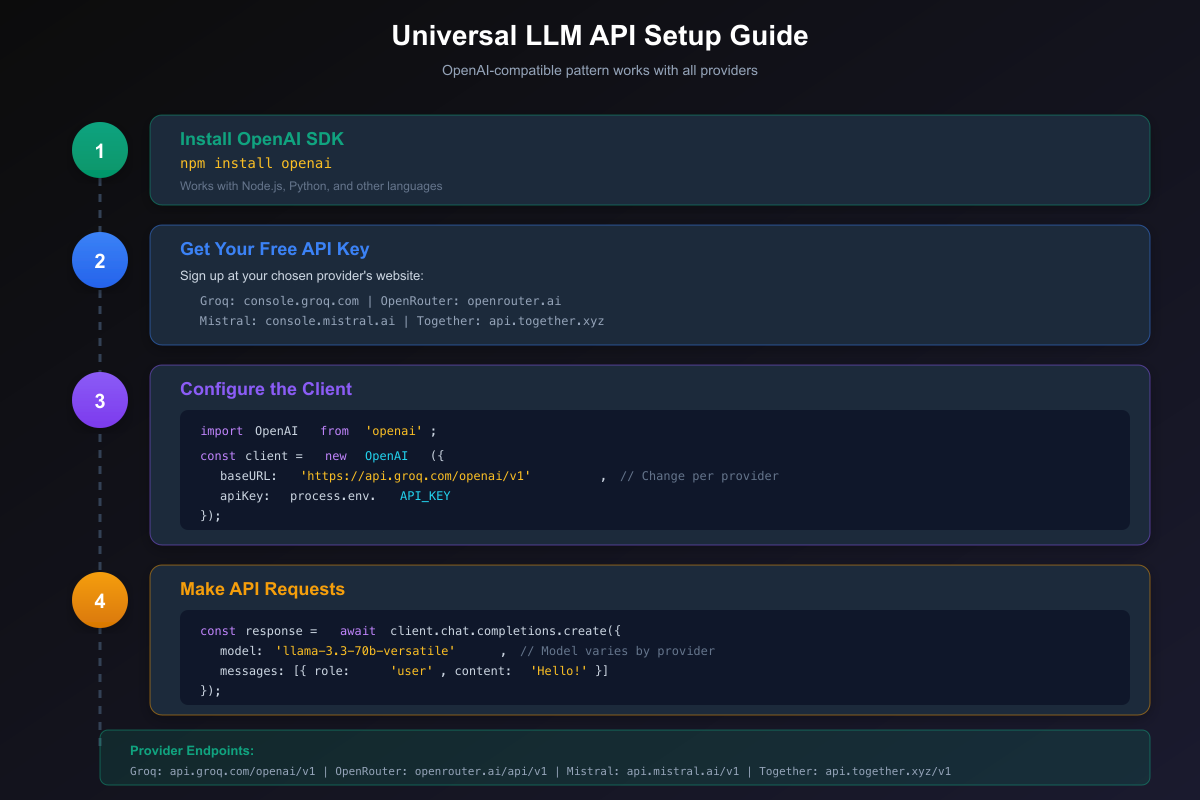

Setup Guide with Code Examples

Most of the providers above expose an OpenAI-compatible endpoint. In practice, that means you can switch providers by changing three values (the base URL, the API key, and the model name) while the rest of your code stays the same.

Universal OpenAI-Compatible Pattern

```typescript
import OpenAI from 'openai';

// Configuration object - change these for different providers
const config = {
  groq: {
    baseURL: 'https://api.groq.com/openai/v1',
    apiKey: process.env.GROQ_API_KEY,
    model: 'llama-3.3-70b-versatile'
  },
  openrouter: {
    baseURL: 'https://openrouter.ai/api/v1',
    apiKey: process.env.OPENROUTER_API_KEY,
    model: 'meta-llama/llama-3.1-8b-instruct:free'
  },
  mistral: {
    baseURL: 'https://api.mistral.ai/v1',
    apiKey: process.env.MISTRAL_API_KEY,
    model: 'mistral-small-latest'
  },
  together: {
    baseURL: 'https://api.together.xyz/v1',
    apiKey: process.env.TOGETHER_API_KEY,
    model: 'meta-llama/Llama-3.1-8B-Instruct-Turbo'
  }
};

// Select your provider
const provider = config.groq;

const client = new OpenAI({
  baseURL: provider.baseURL,
  apiKey: provider.apiKey
});

async function chat(message: string) {
  const response = await client.chat.completions.create({
    model: provider.model,
    messages: [{ role: 'user', content: message }],
    temperature: 0.7,
    max_tokens: 1000
  });
  return response.choices[0].message.content;
}
```

Groq Setup Example

Complete Groq integration with error handling:

```typescript
import OpenAI from 'openai';

const groq = new OpenAI({
  baseURL: 'https://api.groq.com/openai/v1',
  apiKey: process.env.GROQ_API_KEY
});

async function groqChat(prompt: string) {
  try {
    const response = await groq.chat.completions.create({
      model: 'llama-3.3-70b-versatile',
      messages: [
        { role: 'system', content: 'You are a helpful assistant.' },
        { role: 'user', content: prompt }
      ],
      temperature: 0.7,
      max_tokens: 2000,
      stream: false
    });
    return {
      content: response.choices[0].message.content,
      usage: response.usage
    };
  } catch (error) {
    // The SDK throws OpenAI.APIError with the HTTP status attached
    if (error instanceof OpenAI.APIError && error.status === 429) {
      console.error('Rate limit exceeded. Wait before retrying.');
    }
    throw error;
  }
}

// Usage
const result = await groqChat('What is machine learning?');
console.log(result.content);
// usage is optional in the response type, so guard the access
console.log(`Tokens used: ${result.usage?.total_tokens}`);
```

OpenRouter Setup Example

OpenRouter with model fallback:

```typescript
import OpenAI from 'openai';

const openrouter = new OpenAI({
  baseURL: 'https://openrouter.ai/api/v1',
  apiKey: process.env.OPENROUTER_API_KEY,
  defaultHeaders: {
    'HTTP-Referer': 'https://your-site.com',
    'X-Title': 'Your App'
  }
});

// Free models available on OpenRouter
const freeModels = [
  'meta-llama/llama-3.1-8b-instruct:free',
  'google/gemma-2-9b-it:free',
  'mistralai/mistral-7b-instruct:free',
  'qwen/qwen-2-7b-instruct:free'
];

async function openrouterChat(prompt: string, modelIndex = 0): Promise<string | null> {
  try {
    const response = await openrouter.chat.completions.create({
      model: freeModels[modelIndex],
      messages: [{ role: 'user', content: prompt }]
    });
    return response.choices[0].message.content;
  } catch (error) {
    // Fallback to next model if rate limited
    const rateLimited = error instanceof OpenAI.APIError && error.status === 429;
    if (rateLimited && modelIndex < freeModels.length - 1) {
      return openrouterChat(prompt, modelIndex + 1);
    }
    throw error;
  }
}
```

International Access Solutions

Many developers face regional restrictions when accessing LLM APIs. Here's how to handle international access challenges.

Regional Availability Matrix

| Provider | US | EU | China | China Access Notes |
|---|---|---|---|---|
| Groq | Full | Full | Blocked | VPN needed |
| OpenRouter | Full | Full | Blocked | VPN needed |
| Mistral | Full | Full | Blocked | VPN needed |
| Google AI Studio | Full | Full | Blocked | VPN needed |
| HuggingFace | Full | Full | Partial | Some models work |
| DeepSeek | Full | Full | Full | Native access |

China Developer Solutions

Developers in China face unique challenges. Here are practical solutions:

Option 1: API Relay Services

API relay services provide stable access without VPN:

```typescript
// Example using API relay service
import OpenAI from 'openai';

const client = new OpenAI({
  baseURL: 'https://api.laozhang.ai/v1', // Relay endpoint
  apiKey: process.env.RELAY_API_KEY
});

// Same code works with relay
const response = await client.chat.completions.create({
  model: 'gpt-4o-mini',
  messages: [{ role: 'user', content: 'Hello!' }]
});
```

Benefits of relay services:

- No VPN required

- Stable connections

- Multiple model access

- Often includes free credits (laozhang.ai offers $10 free for new users)

Option 2: Self-hosted Solutions

For complete control, consider local deployment:

```bash
curl -fsSL https://ollama.com/install.sh | sh
ollama pull llama3.1:8b
# API available at localhost:11434
```
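Once the model is pulled, the local server can be called much like the hosted providers. The sketch below assumes Ollama's OpenAI-compatible route at `/v1/chat/completions` on the default port and uses plain `fetch`. `buildOllamaRequest` and `ollamaChat` are illustrative names, not part of Ollama itself.

```typescript
// Builds the HTTP request for a local Ollama chat completion.
// Assumes the default port (11434) and the OpenAI-compatible /v1 route.
function buildOllamaRequest(prompt: string, model = "llama3.1:8b") {
  return {
    url: "http://localhost:11434/v1/chat/completions",
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

// Sends the prompt to the local server and returns the reply text.
async function ollamaChat(prompt: string): Promise<string> {
  const { url, init } = buildOllamaRequest(prompt);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const data = (await res.json()) as {
    choices: { message: { content: string } }[];
  };
  return data.choices[0].message.content;
}

// Usage (requires a running Ollama server):
// console.log(await ollamaChat("What is machine learning?"));
```

Because the route is OpenAI-compatible, pointing the OpenAI SDK's `baseURL` at `http://localhost:11434/v1` should work as well.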

Option 3: DeepSeek API

DeepSeek is accessible from China without restrictions:

```typescript
import OpenAI from 'openai';

const deepseek = new OpenAI({
  baseURL: 'https://api.deepseek.com/v1',
  apiKey: process.env.DEEPSEEK_API_KEY
});

const response = await deepseek.chat.completions.create({
  model: 'deepseek-chat',
  messages: [{ role: 'user', content: '你好!' }]
});
```


Use Case Recommendations

Different APIs excel at different tasks. Here's a detailed breakdown.

Best for Coding Assistance

For code generation and debugging:

| Provider | Model | Strength | Speed |
|---|---|---|---|
| Mistral | Codestral | 30 req/min free | Fast |
| Groq | Llama 3.3 70B | General coding | Very fast |
| DeepSeek | DeepSeek Coder | Specialized | Medium |

Recommended setup for coding:

```typescript
import OpenAI from 'openai';

const codingAssistant = new OpenAI({
  baseURL: 'https://codestral.mistral.ai/v1',
  apiKey: process.env.MISTRAL_API_KEY
});

const codeResponse = await codingAssistant.chat.completions.create({
  model: 'codestral-latest',
  messages: [
    { role: 'system', content: 'You are an expert programmer.' },
    { role: 'user', content: 'Write a Python function to merge two sorted lists' }
  ]
});
```

Best for Chatbots

For conversational AI applications:

- Groq + Llama 3.3 - Fast responses, natural conversation

- OpenRouter + Multiple Models - Personality variety

- Together AI + Llama 4 - Most capable free option

Best for Data Analysis

For processing and analyzing data:

- Google AI Studio - 1M context for large documents

- Cohere - Specialized for RAG applications

- AI21 Labs - Strong text comprehension

Frequently Asked Questions

What are the truly free LLM APIs?

The following providers offer genuinely free tiers without requiring payment:

- Groq: 14,400 requests/day, no credit card

- Mistral AI: 1 billion tokens/month, no credit card (phone verification required)

- OpenRouter: 50 requests/day base, no credit card

- Google AI Studio: 5-30 RPM depending on model, no credit card

- Cohere: 1,000 requests/month, no credit card

- HuggingFace: $0.10/month in credits, no credit card

Which APIs don't require credit cards?

All providers listed above work without credit cards. However, some require:

- Phone verification: Mistral, NVIDIA NIM, NLP Cloud

- GitHub account: GitHub Models

- Google account: Google AI Studio

What are the rate limits for free tiers?

Current rate limits (December 2025):

| Provider | Requests | Tokens | Period |
|---|---|---|---|
| Groq | 14,400 | 70K TPM | Daily |
| Mistral | 1/sec | 1B | Monthly |
| OpenRouter | 20/min | Varies | - |
| Google AI Studio | 5-30/min | 250K TPM | - |
| Cohere | 20/min, 1,000 total | - | Monthly |
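Per-minute limits like these can be respected on the client with a small sliding-window limiter. The following is a minimal sketch of that technique, not any provider's official client behavior. `SlidingWindowLimiter` is a hypothetical class, and the 20 requests/minute in the usage example is taken from the table.

```typescript
// Tracks request timestamps and reports how long to wait before the next one.
class SlidingWindowLimiter {
  private timestamps: number[] = [];
  private readonly maxRequests: number;
  private readonly windowMs: number;

  constructor(maxRequests: number, windowMs = 60_000) {
    this.maxRequests = maxRequests;
    this.windowMs = windowMs;
  }

  // Milliseconds until another request fits in the window (0 means go now).
  msUntilAllowed(now: number = Date.now()): number {
    // Drop timestamps that have aged out of the window.
    this.timestamps = this.timestamps.filter((t) => now - t < this.windowMs);
    if (this.timestamps.length < this.maxRequests) return 0;
    // The oldest request must leave the window before a new one fits.
    return this.timestamps[0] + this.windowMs - now;
  }

  // Call this right after sending a request.
  record(now: number = Date.now()): void {
    this.timestamps.push(now);
  }
}

// Usage: stay under 20 requests per minute.
const limiter = new SlidingWindowLimiter(20);
const waitMs = limiter.msUntilAllowed();
if (waitMs === 0) {
  limiter.record();
  // Send the request here.
}
```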

Can I use these APIs in China?

Most providers are blocked in China. Recommended solutions:

- API relay services like laozhang.ai (no VPN needed, $10 free credits)

- DeepSeek - Works natively in China

- Local deployment with Ollama

- VPN for direct access (reliability varies)

Which is best for production use?

For production on a budget, consider:

- Mistral AI - 1B tokens/month is substantial for most apps

- Groq - Most reliable free tier with fast inference

- Together AI - $25 credits + reasonable paid rates

Avoid relying solely on Google AI Studio due to unpredictable limit changes.

How do I migrate from Gemini?

Migration is straightforward since most providers use OpenAI-compatible APIs:

- Install the OpenAI SDK: `npm install openai`

- Change the `baseURL` to your new provider

- Update the `apiKey` environment variable

- Adjust the `model` name

Most code remains unchanged.
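One way to keep a migration down to those few values is to read them from the environment, so switching providers becomes a configuration change. This is a sketch under assumptions: the variable names `LLM_BASE_URL`, `LLM_API_KEY`, and `LLM_MODEL` are invented for illustration, and `loadProviderConfig` is a hypothetical helper.

```typescript
type ProviderConfig = { baseURL: string; apiKey: string; model: string };

// Reads provider settings from an env-like object and fails fast if any is missing.
// The variable names are hypothetical; pick whatever fits your project.
function loadProviderConfig(
  env: Record<string, string | undefined>
): ProviderConfig {
  const baseURL = env["LLM_BASE_URL"];
  const apiKey = env["LLM_API_KEY"];
  const model = env["LLM_MODEL"];
  if (!baseURL || !apiKey || !model) {
    throw new Error("Set LLM_BASE_URL, LLM_API_KEY, and LLM_MODEL");
  }
  return { baseURL, apiKey, model };
}

// Example with Groq's values from the setup section (placeholder key).
const cfg = loadProviderConfig({
  LLM_BASE_URL: "https://api.groq.com/openai/v1",
  LLM_API_KEY: "your-api-key",
  LLM_MODEL: "llama-3.3-70b-versatile",
});
console.log(cfg.model); // llama-3.3-70b-versatile
```

In a real app you would pass `process.env` instead of a literal object, then hand `cfg.baseURL` and `cfg.apiKey` to the OpenAI client constructor.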

Are there any hidden costs?

Watch out for:

- Data training: Google AI Studio uses your data for training (outside the EU, UK, and Switzerland)

- Phone verification: Mistral, NVIDIA require phone numbers

- Credit expiration: Trial credits often expire (AI21: 3 months)

- Rate limit changes: Google cut limits without warning

Which has the best documentation?

Documentation quality ranking:

- Groq - Excellent, clear examples

- OpenAI (for reference) - Industry standard

- Mistral - Good, improving

- Google AI Studio - Comprehensive but complex

- OpenRouter - Basic but sufficient

Conclusion and Recommendations

Summary Table

| Need | Best Choice | Why |
|---|---|---|
| Maximum free requests | Groq | 14,400/day |
| Most tokens | Mistral | 1B/month |
| Model variety | OpenRouter | 30+ free models |
| Long context | Google AI Studio | 1M tokens |
| Speed | Groq | 300+ tokens/sec |
| China access | DeepSeek / Relay | No VPN needed |
| Coding | Mistral Codestral | Specialized |
| Production | Mistral + Groq | Reliable + Fast |

Final Recommendations

For hobbyists and learners: Start with Groq for its speed and generous limits. The documentation is excellent for beginners.

For indie developers: Combine Groq (speed) with Mistral (volume) for a robust free stack. Use OpenRouter for model experimentation.

For startups: Begin with Together AI's $25 credits to validate your product. Scale to paid tiers as needed.

For China-based developers: Use API relay services like laozhang.ai for easy access to multiple models with free starting credits. DeepSeek is also a strong native option.

Next Steps

- Create accounts at Groq, Mistral, and OpenRouter

- Test each provider with your specific use case

- Implement fallback logic to handle rate limits

- Monitor usage to stay within free tier limits

- Plan for scaling when your project grows
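For the fallback step, one possible shape is a function that tries providers in order and moves on only when one is rate limited. The sketch below assumes each provider is wrapped in a simple `chat` function and that rate-limit errors carry `status === 429`. `chatWithFallback` and the `Provider` type are illustrative names.

```typescript
type ChatFn = (prompt: string) => Promise<string>;
type Provider = { name: string; chat: ChatFn };

// Tries each provider in order; falls through only on HTTP 429.
async function chatWithFallback(
  prompt: string,
  providers: Provider[]
): Promise<{ provider: string; content: string }> {
  let lastError: unknown = new Error("no providers configured");
  for (const p of providers) {
    try {
      return { provider: p.name, content: await p.chat(prompt) };
    } catch (err) {
      const status = (err as { status?: number })?.status;
      // Anything other than a rate limit is a real failure, so surface it.
      if (status !== 429) throw err;
      lastError = err;
    }
  }
  // Every provider was rate limited.
  throw lastError;
}

// Usage (hypothetical wrappers around your Groq and Mistral clients):
// const reply = await chatWithFallback("Hello", [
//   { name: "groq", chat: groqChatText },
//   { name: "mistral", chat: mistralChatText },
// ]);
```

Ordering the list by preference (for example, the fastest provider first and the highest-volume one second) keeps the common case fast while still absorbing rate limits.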

The LLM API landscape is evolving rapidly. Google's December 2025 changes remind us that free tiers can change without notice. Build with flexibility in mind, and you'll be prepared for whatever comes next.

Last updated: December 14, 2025. All rate limits and pricing verified against official documentation.

![Best Gemini API Alternatives with Free Tier [2025 Complete Guide]](/posts/en/best-gemini-api-alternative-free-tier/img/cover.png)