The democratization of AI through ChatGPT's free tier has transformed how millions interact with artificial intelligence daily. Yet beneath this revolutionary accessibility lies a complex system of usage limits that can frustrate users mid-conversation or derail important work sessions. Understanding these restrictions isn't just about avoiding interruptions – it's about maximizing the incredible value OpenAI provides at no cost while knowing exactly when paid alternatives become necessary.

Figures reported for July 2025 put the number of active free-tier ChatGPT users above 180 million, making it one of the most widely adopted AI services in history. However, user forums are filled with confusion about how these limits work, why they seem inconsistent, and which strategies can stretch free access further. This comprehensive guide demystifies ChatGPT's free tier restrictions, providing actionable insights based on the latest 2025 updates and real-world user experiences.

The Reality of ChatGPT Free Tier Limits in 2025

ChatGPT's free tier operates on a sophisticated throttling system that balances user access with computational resources. Unlike traditional software with fixed feature sets, ChatGPT's limits dynamically adjust based on server load, query complexity, and usage patterns. This creates an experience where two users might encounter different restrictions at the same time, leading to widespread confusion about what the "real" limits are.

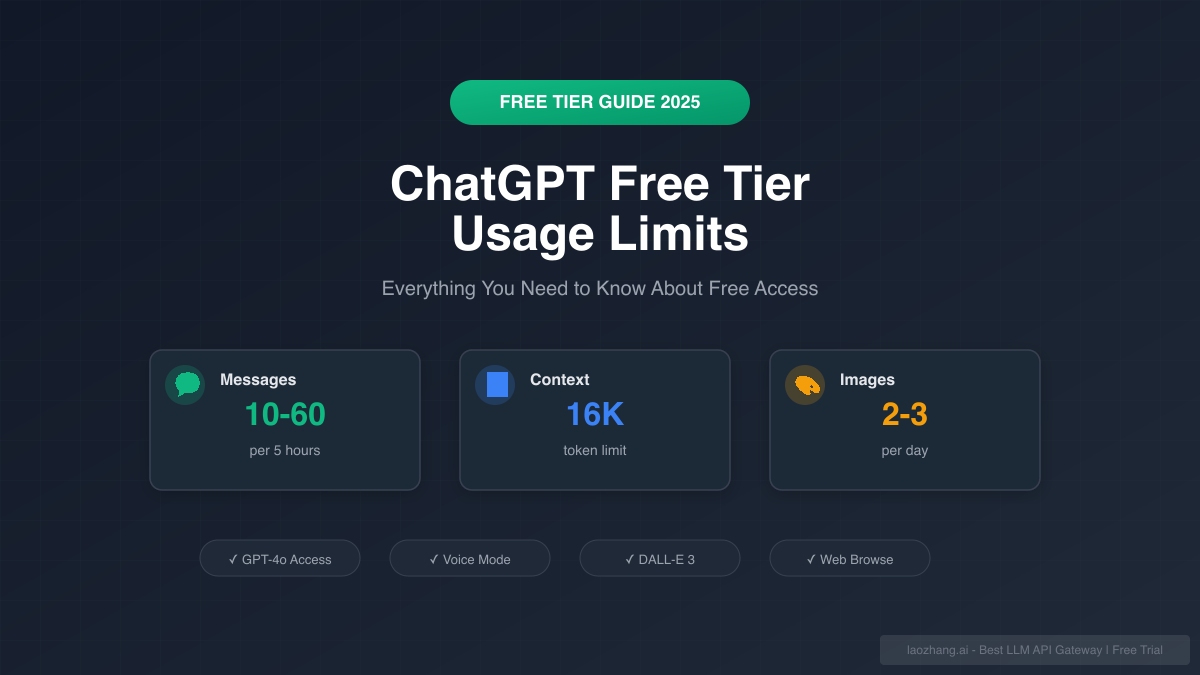

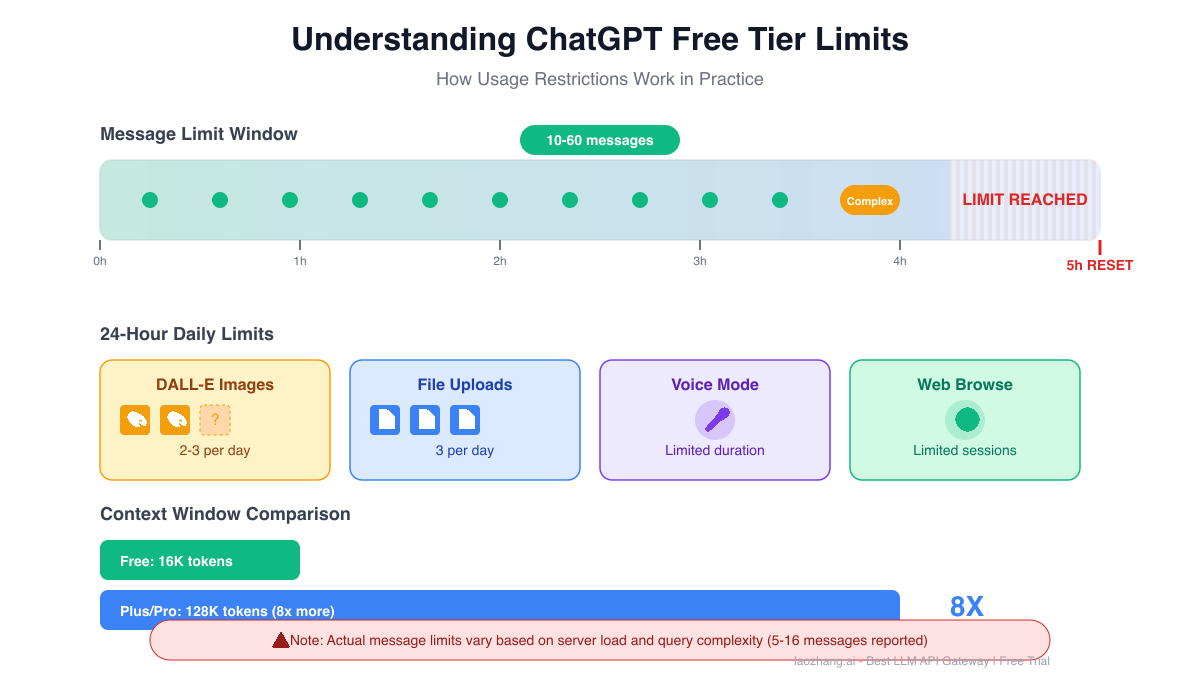

The primary restriction revolves around GPT-4o, OpenAI's flagship model behind ChatGPT's most capable features. Free users receive roughly 10-60 GPT-4o messages within a rolling five-hour window, and OpenAI does not publish a fixed number. Many users report hitting the limit after just 5-16 messages, particularly during complex tasks like code generation, data analysis, or detailed creative writing. This variability appears to stem from OpenAI's resource management system, which seems to give computationally intensive queries more "weight" against the allowance.
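To see how one fixed allowance could produce such different message counts, here is a toy model in which each query draws a different amount from a shared budget. The budget size and the per-query weights are invented for illustration; OpenAI has not published how, or whether, it scores individual queries.

```python
# Toy model of a weighted message allowance. The budget and weights below
# are hypothetical: OpenAI does not publish how it scores individual queries.
WINDOW_BUDGET = 60  # assumed units available per five-hour window

QUERY_WEIGHTS = {
    "simple": 1,    # e.g. a short factual question
    "moderate": 2,  # e.g. rewriting a few paragraphs
    "complex": 4,   # e.g. code generation or data analysis
}


def messages_before_limit(query_mix: list[str], budget: int = WINDOW_BUDGET) -> int:
    """Return how many queries from query_mix are answered before the budget runs out."""
    used = 0
    answered = 0
    for kind in query_mix:
        cost = QUERY_WEIGHTS[kind]
        if used + cost > budget:
            break  # the next query would exceed the window's budget
        used += cost
        answered += 1
    return answered


print(messages_before_limit(["simple"] * 100))   # 60
print(messages_before_limit(["complex"] * 100))  # 15
```

Under these made-up weights, a session of light questions reaches the top of the reported 10-60 range, while a session of heavy tasks stops at 15.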

Beyond message counts, free tier users face a 16,000 token context window limitation – roughly equivalent to 12,000 words of conversation history. This means lengthy discussions may lose earlier context, forcing users to restart conversations or carefully manage their interaction length. When compared to the 128,000 token window available to Plus subscribers, this limitation becomes particularly constraining for professional use cases requiring extensive context retention.
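The words-to-tokens conversion and the way older turns fall out of view can be sketched with a simplified model. It assumes the 16K figure stated here, the common rule of thumb of about 0.75 English words per token, and that the oldest messages are dropped first; real tokenizers and ChatGPT's own context handling may differ.

```python
# Simplified model of a fixed context window. Assumptions: the 16K figure is
# the one stated in this article, ~0.75 English words per token is only a
# rule of thumb, and real tokenizers (and ChatGPT's own truncation) differ.
CONTEXT_TOKENS = 16_000
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Rough token estimate derived from the word count."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


def visible_history(messages: list[str], budget: int = CONTEXT_TOKENS) -> list[str]:
    """Keep the newest messages that fit in the budget; older ones drop out first."""
    kept: list[str] = []
    total = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg)
        if total + cost > budget:
            break
        kept.append(msg)
        total += cost
    kept.reverse()  # restore chronological order
    return kept


print(CONTEXT_TOKENS * WORDS_PER_TOKEN)  # 12000.0 words

# Twenty turns of ~1,000 words each: only the most recent ones still fit.
conversation = [f"turn{i} " + "word " * 999 for i in range(20)]
print(len(visible_history(conversation)))  # 12
```

In this model the first eight turns are no longer visible, which is why a long conversation can "forget" its opening instructions.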

Understanding the Five-Hour Reset Window

The five-hour reset window is one of the most misunderstood aspects of ChatGPT's free tier. Contrary to popular belief, it isn't a fixed timer that resets at midnight. Instead, it's a rolling window that begins counting from your first GPT-4o message, not from the moment you hit the limit. This means if you start using ChatGPT at 9 AM and hit your limit at 10 AM, the window has been running since 9 AM, so you'll need to wait until approximately 2-3 PM before regaining full access to GPT-4o.
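The 9 AM example works out as follows. This sketch assumes, as described here, that the window is anchored at the first GPT-4o message; the function name is illustrative only.

```python
from datetime import datetime, timedelta

# Assumption taken from the text above: the window is anchored at the first
# GPT-4o message, not at the moment the limit is reached.
WINDOW = timedelta(hours=5)


def access_returns_at(first_message: datetime, window: timedelta = WINDOW) -> datetime:
    """Earliest time the GPT-4o allowance should refresh under that assumption."""
    return first_message + window


started = datetime(2025, 7, 1, 9, 0)     # first GPT-4o message at 9 AM
limit_hit = datetime(2025, 7, 1, 10, 0)  # limit reached at 10 AM

reset = access_returns_at(started)
print(reset.strftime("%H:%M"))  # 14:00, i.e. 2 PM
print(reset - limit_hit)        # 4:00:00 of waiting after hitting the limit
```

Because users report access sometimes returning closer to 3 PM, treat the computed time as the earliest plausible reset rather than a guarantee.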

During peak usage hours – typically 9 AM to 5 PM in major time zones – the system becomes more restrictive. Server load directly impacts available message allocations, with some users reporting limits as low as 5 messages during extreme demand periods. OpenAI's infrastructure prioritizes paid subscribers during these peaks, ensuring they maintain consistent access while free tier availability fluctuates.

The limit itself is enforced with a "soft landing" approach. Rather than abruptly cutting off access, ChatGPT warns you as you approach the limit and then automatically switches to GPT-4o mini, a lighter model that keeps the conversation going, albeit with reduced capabilities. This fallback lets users continue their work, though complex reasoning tasks may suffer from the downgrade.

Daily Restrictions Beyond Message Limits

While the five-hour message window captures most attention, ChatGPT's free tier includes several daily restrictions that significantly impact power users. These 24-hour limits operate independently from message caps, creating a multi-layered restriction system that requires careful resource management.

DALL-E 3 image generation is the most visible daily restriction, limiting free users to just 2-3 images per day. This constraint particularly affects creative professionals, educators, and content creators who rely on AI-generated visuals. The limit applies regardless of image complexity or size, so a simple icon consumes the same allocation as a detailed artistic creation. Users report that image generation limits reset at midnight local time, a predictable refresh cycle unlike the rolling window used for messages.
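The difference between a calendar-day reset and a rolling window is easiest to see in code. The class below is a hypothetical helper modelling the midnight reset users report; the limit of 3 is the upper end of the reported 2-3 images.

```python
from datetime import date, datetime
from typing import Optional


class DailyQuota:
    """Fixed daily allowance that resets when the local calendar date changes.

    Hypothetical helper modelling the midnight reset users report for image
    generation, in contrast to the rolling window used for messages.
    """

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.day: Optional[date] = None
        self.used = 0

    def try_use(self, now: datetime) -> bool:
        """Consume one unit if any remain today; return whether it succeeded."""
        today = now.date()
        if today != self.day:  # first request after local midnight: start fresh
            self.day = today
            self.used = 0
        if self.used >= self.limit:
            return False
        self.used += 1
        return True


images = DailyQuota(limit=3)  # upper end of the reported 2-3 images per day
late_evening = datetime(2025, 7, 1, 23, 50)
print([images.try_use(late_evening) for _ in range(4)])  # [True, True, True, False]
print(images.try_use(datetime(2025, 7, 2, 0, 5)))        # True: a new day began
```

Only fifteen minutes separate the refused request and the accepted one; under a rolling 24-hour window the user would have waited almost a full day.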

File upload capabilities face similar daily constraints, with free tier users limited to 3 file uploads per day. This restriction encompasses all file types – PDFs, images, spreadsheets, and code files – making it challenging for users working on document-heavy projects. The system counts each upload individually, so uploading multiple files simultaneously still consumes multiple slots from your daily allocation.

Voice conversations feel strikingly natural, but they come with undisclosed duration limits. Users consistently report sessions ending after 15-20 minutes of continuous conversation, with cooldown periods required before starting new voice sessions. Web browsing faces comparable restrictions, with a limited number of search sessions per day, though OpenAI hasn't published specific numbers for these features.

Free vs Plus: A Comprehensive Feature Comparison

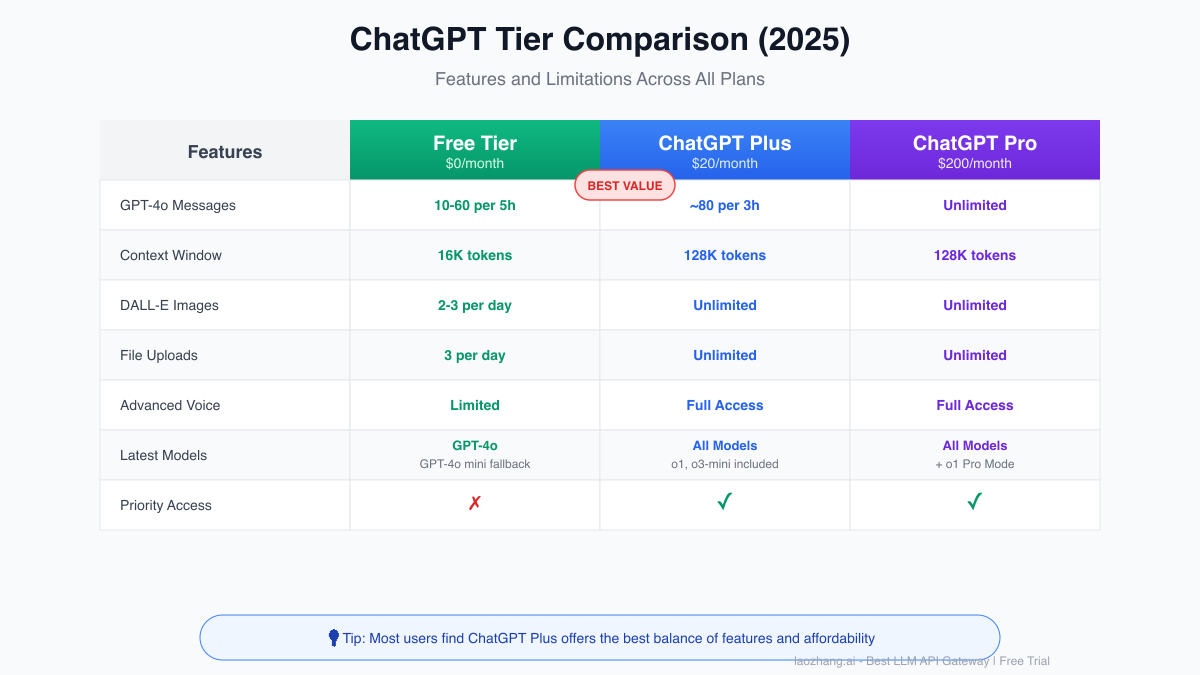

The decision between staying on ChatGPT's free tier versus upgrading to Plus ($20/month) or Pro ($200/month) often comes down to specific use patterns and professional requirements. Understanding the precise differences helps users make informed decisions about when upgrading becomes economically justified.

ChatGPT Plus transforms the user experience primarily through expanded access rather than exclusive features. Where free users receive 10-60 GPT-4o messages per five hours (2-12 per hour), Plus subscribers get approximately 80 messages every three hours (about 27 per hour), roughly 2-13 times the free allowance depending on where a free user falls in that range. This expanded access proves crucial during work hours, when consistent AI assistance can significantly boost productivity. Plus subscribers also get priority access during peak times, largely avoiding the frustrating "ChatGPT is at capacity" messages that free users encounter during high-demand periods.
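Because the two tiers use different window lengths, a fair comparison normalizes both to messages per hour. The figures below are the ones quoted in this article, not official numbers.

```python
# Normalize both allowances (figures as quoted in this article) to messages per hour.
def per_hour(messages: float, hours: float) -> float:
    return messages / hours


free_low = per_hour(10, 5)   # 2.0 messages/hour at the tight end of the free range
free_high = per_hour(60, 5)  # 12.0 messages/hour at the generous end
plus = per_hour(80, 3)       # about 26.7 messages/hour on Plus

print(f"{plus / free_high:.1f}x")  # 2.2x versus the generous free allowance
print(f"{plus / free_low:.1f}x")   # 13.3x versus the tight free allowance
```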

The context window expansion from 16K to 128K tokens represents perhaps the most transformative upgrade for professional users. This eight-fold increase enables Plus subscribers to maintain lengthy technical discussions, analyze substantial documents, and preserve conversation context across extended sessions. For software developers debugging complex code, researchers analyzing papers, or writers developing long-form content, this expanded context often justifies the subscription cost alone.

Model access also differentiates the tiers. While free users get limited GPT-4o access, Plus subscribers can use a broader model family, including specialized variants like o1 for complex reasoning tasks and o3-mini for efficient processing, each with its own usage cap. Pro tier users ($200/month) receive unlimited GPT-4o access and the exclusive o1 pro mode, designed for the most demanding tasks. This tier primarily serves power users and AI researchers who need consistent, effectively unlimited access to cutting-edge capabilities.

Maximizing Your Free Tier Experience

Strategic usage patterns can dramatically extend the value extracted from ChatGPT's free tier. Understanding how the system allocates resources enables users to accomplish more within existing constraints while avoiding common pitfalls that prematurely exhaust limits.

Query optimization stands as the most impactful strategy. Complex, multi-part questions consume significantly more resources than focused, specific queries. Instead of asking ChatGPT to "analyze this entire codebase and suggest improvements," breaking requests into targeted questions like "identify potential memory leaks in this function" preserves message allocations while often yielding better results. This approach leverages ChatGPT's strength in focused analysis while respecting system constraints.

Timing usage around off-peak hours provides another significant advantage. Early morning hours (4-8 AM local time) and late evenings (10 PM-2 AM) typically offer the most generous message allocations as server load decreases. Users report receiving 40-60 messages during these periods compared to 10-20 during business hours. For non-urgent tasks, scheduling ChatGPT sessions during these windows maximizes available resources.

External composition dramatically improves efficiency. Rather than iterating on content inside ChatGPT, drafting initial versions in an external text editor avoids spending messages on minor revisions. This particularly benefits writers and programmers who might otherwise consume dozens of messages refining output. Submitting only polished prompts for final processing can extend effective usage by 200-300% in favorable cases.

When Upgrading Becomes Essential

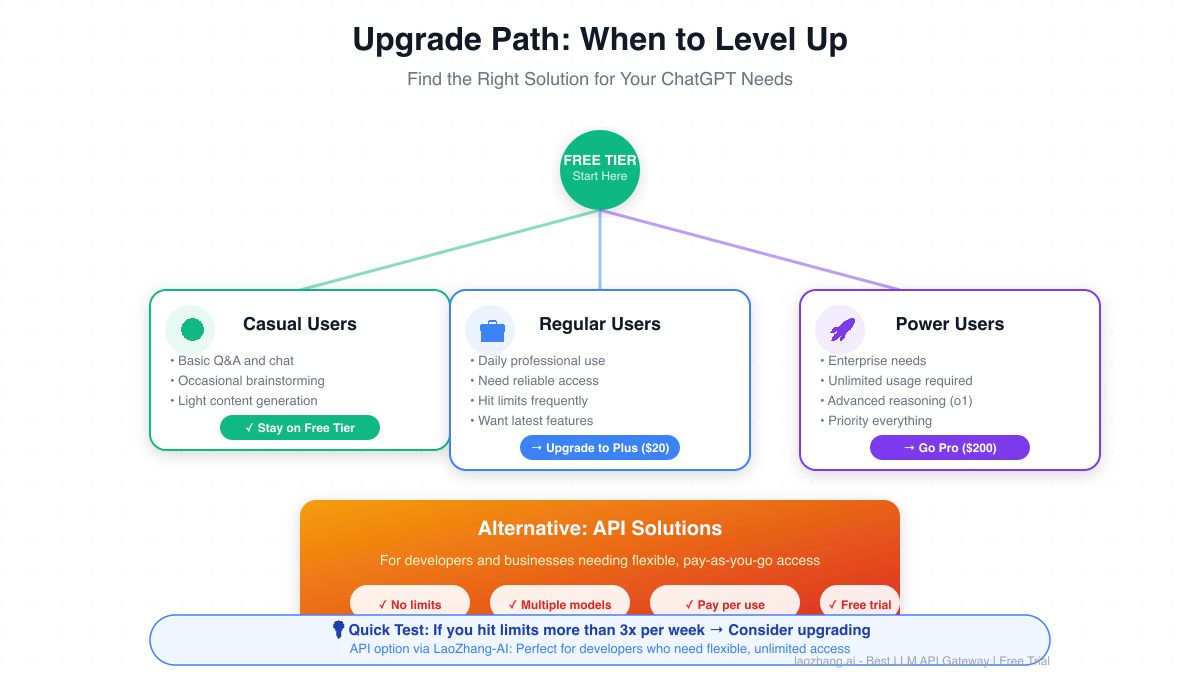

Recognizing when free tier limitations genuinely hinder productivity helps users make timely upgrade decisions. Several clear indicators suggest when transitioning to paid tiers becomes economically justified based on usage patterns and professional requirements.

The "three strikes rule" provides a simple heuristic: if you hit usage limits more than three times per week, the productivity loss likely exceeds the cost of Plus. For professionals billing $50-100 per hour, losing even 30 minutes a week to limit-related interruptions costs $25-50 weekly, several times the $20 monthly fee. The case becomes more compelling once you account for the compounding effects of interrupted workflow and context switching.
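The break-even arithmetic behind this heuristic is short enough to write out. The hourly rates, the 30 minutes per week and the $20 price come from the text; averaging a month as 52/12 weeks is an assumption.

```python
# Break-even arithmetic for the "three strikes" heuristic. The hourly rates,
# the 30 minutes per week and the $20 Plus price come from the text above;
# 52/12 weeks per month is an averaging assumption.
WEEKS_PER_MONTH = 52 / 12
PLUS_MONTHLY = 20.0


def monthly_interruption_cost(hourly_rate: float, minutes_lost_per_week: float) -> float:
    """Value of billable time lost to limit interruptions in an average month."""
    return hourly_rate * (minutes_lost_per_week / 60) * WEEKS_PER_MONTH


def break_even_minutes(hourly_rate: float, subscription: float = PLUS_MONTHLY) -> float:
    """Minutes lost per week at which interruptions cost as much as the subscription."""
    return subscription / (hourly_rate / 60 * WEEKS_PER_MONTH)


for rate in (50, 100):
    cost = monthly_interruption_cost(rate, 30)
    print(f"${rate}/h: ~${cost:.0f}/month lost; break-even at {break_even_minutes(rate):.1f} min/week")
# $50/h: ~$108/month lost; break-even at 5.5 min/week
# $100/h: ~$217/month lost; break-even at 2.8 min/week
```

On these assumptions, a few minutes of lost time per week already matches the subscription price.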

Specific use cases inherently demand paid tier capabilities. Software developers working on complex systems quickly exhaust free tier message limits during debugging sessions, and the expanded context window is essential for keeping code from several files in view at once. Similarly, researchers analyzing multiple papers or datasets find the 16K token limit severely constraining, making Plus or Pro tiers necessary for serious academic work.

Business users face unique considerations around reliability and professionalism. Client-facing work demands consistent access without unexpected interruptions. The embarrassment of explaining to clients that "ChatGPT limits prevented completion" can damage professional relationships. For consultants, agencies, and freelancers, Plus subscriptions become basic business infrastructure rather than optional upgrades.

Alternative Solutions: The API Ecosystem

For users requiring programmatic access or facing budget constraints around ChatGPT's subscription model, the broader API ecosystem offers compelling alternatives. These solutions provide different pricing models and capabilities that may better align with specific use cases.

Direct OpenAI API access operates on a pay-per-token model, charging $2.50 per million input tokens and $10.00 per million output tokens for GPT-4o. This usage-based pricing benefits users with sporadic high-volume needs, as costs directly correlate with consumption rather than fixed monthly fees. Developers can implement precise cost controls, setting spending limits and monitoring usage programmatically.
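Per-token pricing turns into per-request costs with one formula, and a simple guard can enforce a budget on the client side. The sketch uses the GPT-4o prices quoted here (check current pricing before relying on them); `SpendTracker` is a hypothetical helper, not part of any SDK. In practice the token counts would come from the usage data the API returns with each response, such as `usage.prompt_tokens` and `usage.completion_tokens` in Chat Completions.

```python
# GPT-4o prices as quoted above, in USD per million tokens; check OpenAI's
# current pricing before relying on them.
INPUT_PER_M = 2.50
OUTPUT_PER_M = 10.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request given its input and output token counts."""
    return (input_tokens * INPUT_PER_M + output_tokens * OUTPUT_PER_M) / 1_000_000


class SpendTracker:
    """Hypothetical client-side budget guard; not part of any OpenAI SDK."""

    def __init__(self, monthly_cap: float) -> None:
        self.cap = monthly_cap
        self.spent = 0.0

    def can_afford(self, input_tokens: int, output_tokens: int) -> bool:
        return self.spent + request_cost(input_tokens, output_tokens) <= self.cap

    def record(self, input_tokens: int, output_tokens: int) -> None:
        self.spent += request_cost(input_tokens, output_tokens)


print(f"${request_cost(2_000, 500):.4f}")  # $0.0100 for 2,000 input + 500 output tokens

tracker = SpendTracker(monthly_cap=20.0)
if tracker.can_afford(2_000, 500):
    tracker.record(2_000, 500)  # in practice, record the token counts the API reports
```

The account-level spending limits OpenAI offers remain the authoritative control; a local guard like this only stops a client before it sends a request.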

API aggregation services have emerged to simplify multi-model deployments while offering competitive pricing. Services like LaoZhang-AI provide unified access to GPT-4o alongside competing models like Claude and Gemini through a single API endpoint. With free trial credits and volume-based discounts, these platforms can bring costs below direct API access while reducing integration complexity. The ability to route each request dynamically to the most cost-effective model adds further room for optimization.
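Cost-based routing can be sketched as "pick the cheapest model that is good enough for this task." Every model name, price and quality score below is a placeholder invented for illustration, not a real catalogue or any aggregator's actual API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str            # placeholder identifier, not a real model ID
    input_per_m: float   # placeholder USD price per million input tokens
    output_per_m: float  # placeholder USD price per million output tokens
    quality: int         # assumed capability score: 1 (basic) to 3 (strongest)


# Hypothetical catalogue: every name, price and score here is illustrative.
CATALOGUE = [
    ModelOption("small-model", 0.20, 0.80, 1),
    ModelOption("mid-model", 1.00, 4.00, 2),
    ModelOption("large-model", 3.00, 12.00, 3),
]


def estimated_cost(model: ModelOption, input_tokens: int, output_tokens: int) -> float:
    return (input_tokens * model.input_per_m + output_tokens * model.output_per_m) / 1_000_000


def cheapest_capable(min_quality: int, input_tokens: int, output_tokens: int) -> ModelOption:
    """Route to the lowest-cost model whose quality score meets the task's needs."""
    candidates = [m for m in CATALOGUE if m.quality >= min_quality]
    if not candidates:
        raise ValueError(f"no model meets quality level {min_quality}")
    return min(candidates, key=lambda m: estimated_cost(m, input_tokens, output_tokens))


print(cheapest_capable(1, 2_000, 500).name)  # small-model: simple task, cheapest option
print(cheapest_capable(3, 2_000, 500).name)  # large-model: only it meets quality 3
```

The hard part in a real deployment is assigning the quality requirement per task; the selection step itself stays this simple.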

The API approach particularly benefits applications with predictable usage patterns. Chatbots serving hundreds of users, content generation systems, and automated analysis tools often achieve better economics through usage-based pricing than per-seat subscriptions. However, API access requires technical implementation and lacks the refined interface of ChatGPT's web platform, making it unsuitable for non-technical users.
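Whether usage-based pricing beats per-seat pricing depends on volume. This sketch compares a hypothetical 300-user chatbot against $20 seats, using the GPT-4o token prices quoted earlier; the workload figures are invented, and the comparison only matters where seats and API calls are genuinely interchangeable.

```python
# Usage-based vs per-seat pricing for a hypothetical chatbot. The seat price
# mirrors the $20 Plus fee and the token prices the GPT-4o figures quoted
# earlier; the workload numbers are invented for illustration.
SEAT_PRICE = 20.0
INPUT_PER_M, OUTPUT_PER_M = 2.50, 10.00


def per_request_cost(input_tokens: int, output_tokens: int) -> float:
    return (input_tokens * INPUT_PER_M + output_tokens * OUTPUT_PER_M) / 1_000_000


def monthly_api_cost(users: int, requests_per_user: int,
                     input_tokens: int, output_tokens: int) -> float:
    return users * requests_per_user * per_request_cost(input_tokens, output_tokens)


def break_even_requests(input_tokens: int, output_tokens: int) -> float:
    """Requests per user per month at which API spend equals one seat."""
    return SEAT_PRICE / per_request_cost(input_tokens, output_tokens)


users = 300
api = monthly_api_cost(users, 100, 2_000, 500)
print(f"API ${api:,.0f} vs seats ${users * SEAT_PRICE:,.0f} per month")
# API $300 vs seats $6,000 per month
print(f"{break_even_requests(2_000, 500):,.0f} requests/user/month to break even")
# 2,000 requests/user/month to break even
```

With these numbers, usage-based pricing wins until the average user sends about 2,000 such requests a month.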

Hidden Costs and Considerations

While ChatGPT's free tier provides remarkable value, users should understand the hidden costs and limitations that impact real-world usage. These considerations extend beyond simple message counts to encompass broader workflow implications.

Context loss represents a significant hidden cost for free tier users. The 16K token limit means lengthy conversations lose earlier context, forcing users to repeatedly re-explain problems or provide background information. This invisible overhead can double or triple the time required for complex tasks, creating efficiency losses that dwarf subscription costs. Professional users often discover that apparent free tier savings evaporate when accounting for these productivity impacts.

Feature limitations create workflow disruptions that compound over time. The 3-file daily upload limit might seem adequate until you're collaborating on a project requiring multiple document revisions. Similarly, 2-3 daily images suffice for casual use but severely constrain content creators developing visual-heavy materials. These restrictions force users to distribute work across multiple days or seek alternative tools, fragmenting workflows and reducing efficiency.

Learning curve investments deserve consideration when evaluating tier options. Users who master prompt engineering and ChatGPT's capabilities within free tier constraints may find upgrading unnecessary. Conversely, those struggling with limits might benefit more from investing time in optimization strategies than immediately upgrading. The skills developed while maximizing free tier usage often transfer to more efficient paid tier utilization.

Future Outlook: The Evolution of Free Access

The trajectory of ChatGPT's free tier reflects broader tensions in AI democratization. As computational costs decrease and competition intensifies, we're likely to see continued evolution in how OpenAI balances accessibility with sustainability.

Historical patterns suggest gradual expansion of free tier capabilities. When GPT-4 first launched, free tier access was non-existent. The introduction of limited GPT-4o access for free users in 2024 marked a significant shift toward broader accessibility. This trend will likely continue as newer models emerge and computational efficiency improves, with today's premium features potentially becoming tomorrow's free tier baselines.

Competitive pressure from Google's Gemini, Anthropic's Claude, and emerging open-source alternatives forces OpenAI to maintain attractive free tier offerings. The risk of user migration to more generous platforms creates natural pressure toward expanding free capabilities. However, the massive computational costs of serving hundreds of millions of free users establish firm limits on how generous OpenAI can afford to be.

The integration of efficiency improvements like GPT-4o mini demonstrates OpenAI's strategy for expanding access while managing costs. These optimized models provide capable AI assistance at a fraction of full model costs, enabling more generous usage limits. Future architectural improvements and hardware advances will likely enable further expansion of free tier capabilities without proportional cost increases.

Making the Right Choice for Your Needs

Navigating ChatGPT's tier system ultimately requires honest assessment of your usage patterns, professional requirements, and budget constraints. The free tier provides remarkable capabilities that satisfy many users' needs, while paid tiers offer transformative improvements for power users.

For students, casual users, and those exploring AI capabilities, the free tier often provides sufficient access. The key lies in understanding and working within its constraints rather than fighting against them. Strategic usage, proper timing, and efficient prompting can extend free tier capabilities far beyond apparent limits. Many users discover that perceived limitations stem more from inefficient usage than actual constraints.

Professionals and businesses should evaluate upgrade decisions in terms of return on investment rather than sticker price alone. The $20 monthly Plus investment often pays for itself within hours through improved productivity and fewer interruptions. For organizations where AI supports revenue generation or critical operations, Pro tier or API access becomes an infrastructure investment rather than an expense.

The ecosystem of alternatives provides additional flexibility for users with specific requirements. Whether through direct API access for programmatic needs or aggregation services like LaoZhang-AI for unified multi-model access, options exist beyond ChatGPT's traditional tiers. These alternatives particularly benefit developers and businesses requiring predictable costs and programmatic control.

Conclusion: Embracing Informed AI Access

ChatGPT's free tier represents one of the most generous AI access programs available, providing millions with powerful capabilities at no cost. Understanding its limitations transforms frustration into strategic usage, maximizing value while recognizing when upgrades become necessary.

The five-hour message windows, daily restrictions, and token limits create a framework that balances accessibility with sustainability. Rather than arbitrary restrictions, these limits reflect real computational costs and resource constraints. Working within these boundaries develops skills valuable regardless of tier choice, encouraging efficient communication and focused problem-solving.

As AI continues evolving from luxury to necessity, understanding access options becomes crucial for personal and professional development. Whether maximizing free tier capabilities, investing in subscriptions, or exploring API alternatives, informed decisions ensure optimal AI utilization. The key lies not in finding ways around limits but in understanding how to work productively within them while recognizing when expansion becomes necessary.

The future promises continued evolution in AI accessibility, with today's premium features likely becoming tomorrow's standard offerings. By understanding current limitations and mastering efficient usage, users position themselves to maximize value regardless of how access models evolve. Whether you remain on the free tier or upgrade to paid options, the skills developed in understanding and optimizing AI interactions will prove invaluable as these tools become increasingly central to work and creativity.

For those ready to explore beyond traditional tier limitations, services like LaoZhang-AI offer flexible API access to multiple AI models with free trial credits to get started. The choice between free tier optimization, subscription upgrades, or API alternatives ultimately depends on your specific needs, usage patterns, and growth trajectory in the AI-augmented future.