The world of AI image generation has been revolutionized by the introduction of FLUX models, and ComfyUI stands as the most powerful interface for harnessing their capabilities. Whether you're a digital artist, developer, or AI enthusiast, mastering the ComfyUI-FLUX combination opens doors to unprecedented creative possibilities. This comprehensive guide will walk you through everything from basic setup to advanced optimization techniques, ensuring you can generate stunning images regardless of your hardware limitations.

Understanding FLUX Models: The Next Generation of AI Image Generation

FLUX.1, developed by Black Forest Labs (the creators behind Stable Diffusion), represents a significant leap forward in text-to-image generation technology. With its 12 billion parameter architecture, FLUX delivers exceptional image quality, prompt adherence, and creative flexibility that surpasses many existing models.

The FLUX Model Family

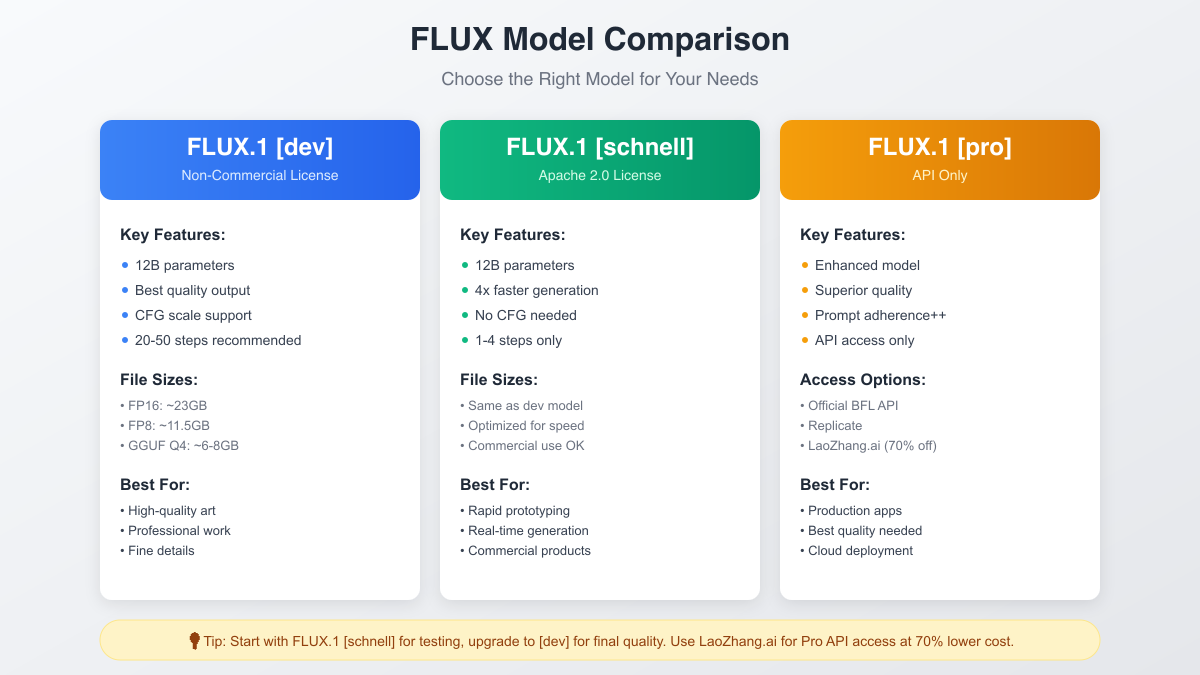

The FLUX ecosystem consists of three primary models, each designed for specific use cases:

FLUX.1 [dev] stands as the flagship model for quality-focused generation. Operating under a non-commercial license, this model excels at producing highly detailed, photorealistic images with exceptional prompt adherence. It requires 20-50 generation steps but delivers unparalleled quality, making it ideal for professional artwork and detailed creative projects.

FLUX.1 [schnell] prioritizes speed without sacrificing too much quality. Licensed under Apache 2.0 for commercial use, this model generates images 4x faster than the dev version, requiring only 1-4 steps. It's perfect for rapid prototyping, real-time applications, and production environments where speed matters.

FLUX.1 [pro] represents the premium tier, available exclusively through API access. This enhanced model offers superior quality and prompt adherence compared to the dev version, making it suitable for enterprise applications and professional production workflows. For cost-conscious users, LaoZhang.ai provides access to FLUX pro at 70% lower prices than official channels.

Why Choose FLUX Over Other Models?

FLUX models bring several advantages to the table. The transformer-based architecture handles complex prompts better than traditional U-Net models, understanding nuanced descriptions and maintaining consistency across generated elements. The models also demonstrate superior performance in generating text within images, human anatomy, and architectural details – areas where many models struggle.

System Requirements and Preparation

Before diving into ComfyUI setup, it's crucial to understand the hardware requirements for running FLUX models effectively. The good news is that with recent optimizations and quantized models, FLUX is more accessible than ever.

Minimum Hardware Requirements

For basic FLUX operation, you'll need at least 8GB of VRAM. This configuration allows you to run GGUF quantized models (Q4 or Q5) with acceptable quality. Your system should have at least 16GB of RAM to handle model loading and processing. An NVIDIA GPU with CUDA support is essential for reasonable generation speeds, though AMD GPUs can work with reduced performance.

Recommended Configuration

For optimal performance and quality, consider a setup with 16GB or more VRAM. This allows you to run FP8 models without compromising quality significantly. Pair this with 32GB of system RAM for smooth operation when working with multiple models or large batches. An RTX 3080, 4070, or better provides the sweet spot of performance and value.

Storage Considerations

FLUX models require substantial storage space. Plan for at least 50GB free space to accommodate:

- FLUX model files (11-23GB depending on precision)

- CLIP models (5-10GB for T5 and CLIP_L)

- VAE models (300MB-1GB)

- Generated images and temporary files

Detailed Installation Guide

Setting up ComfyUI with FLUX requires careful attention to detail, but following these steps ensures a smooth installation process.

Step 1: Install ComfyUI Manager

First, ensure you have ComfyUI installed. If starting fresh, clone the repository:

bashgit clone https://github.com/comfyanonymous/ComfyUI.git cd ComfyUI

Next, install the ComfyUI Manager for easier model management:

bashcd custom_nodes git clone https://github.com/ltdrdata/ComfyUI-Manager.git cd ..

The Manager provides a user-friendly interface for downloading models and managing dependencies, crucial for FLUX setup.

Step 2: Download FLUX Models

FLUX models come in various formats and precisions. For most users, the FP8 version offers the best balance:

- Navigate to Hugging Face:

https://huggingface.co/black-forest-labs - Download

flux1-dev-fp8.safetensors(approximately 11GB) - Place the file in

ComfyUI/models/unet/

For lower VRAM systems, consider GGUF quantized versions from city96's repository, which offer various quality-size trade-offs.

Step 3: Install CLIP Models

FLUX requires two CLIP models for text encoding:

T5-XXL Model: Download either t5xxl_fp16.safetensors (full quality, 10GB) or t5xxl_fp8_e4m3fn.safetensors (half size, 5GB) based on your VRAM availability.

CLIP-L Model: Download clip_l.safetensors (standard CLIP model, 2GB).

Place both files in ComfyUI/models/clip/

Step 4: Add VAE Model

The VAE (Variational Autoencoder) decodes latent images to pixels:

- Download

ae.safetensorsfrom the FLUX repository - Rename it to

flux_ae.safetensorsfor clarity - Place in

ComfyUI/models/vae/

Alternatively, SDXL VAE models work with FLUX, though the official VAE provides slightly better results.

Verification and Troubleshooting

After installation, launch ComfyUI:

bashpython main.py --lowvram --use-pytorch-cross-attention

If you encounter memory errors, add --cpu-vae to offload VAE processing to CPU. The interface should load at http://localhost:8188.

Creating Your First FLUX Workflow

Understanding ComfyUI's node-based system is key to leveraging FLUX effectively. Let's build a basic text-to-image workflow step by step.

Essential Nodes Setup

Start by clearing the default workflow and adding these essential nodes:

-

Load Checkpoint Node: This won't work for FLUX. Instead, use "Load Diffusion Model" or "UNETLoader" node. Set it to load your FLUX model from the unet folder.

-

Dual CLIP Loader: FLUX requires both T5 and CLIP encoders. Add this node and load both models you downloaded earlier.

-

CLIP Text Encode: Add two of these – one for positive prompt, one for negative (though FLUX works best without negative prompts).

-

Empty Latent Image: Set your desired resolution. FLUX performs best at 1024x1024 or similar square/near-square ratios.

-

KSampler: The heart of generation. Connect your model, positive conditioning, and latent image here.

-

VAE Decode: Converts the latent result to a viewable image.

-

Save Image: Stores your generated masterpiece.

Connecting the Workflow

Connect nodes in this order:

- UNET Loader → KSampler (model input)

- Dual CLIP Loader → CLIP Text Encode → KSampler (positive conditioning)

- Empty Latent → KSampler (latent image)

- KSampler → VAE Decode → Save Image

Optimal Settings for FLUX

FLUX requires specific settings for best results:

Sampler: Use "euler" with "simple" or "normal" scheduler Steps: 20-30 for dev model, 1-4 for schnell CFG Scale: Keep low at 3.5-4.0 (FLUX performs poorly with high CFG) Denoise: 1.0 for text-to-image

Your first prompt might be: "A majestic mountain landscape at sunset, highly detailed, professional photography"

Click "Queue Prompt" and watch FLUX create magic!

Advanced Settings and Optimization

Once comfortable with basic workflows, diving into advanced settings unlocks FLUX's full potential while optimizing performance for your hardware.

Sampler Optimization

FLUX responds differently to samplers compared to traditional models. While many prefer DPM++ variants for Stable Diffusion, FLUX achieves best results with simpler samplers:

Euler: Provides consistent, high-quality results with good convergence. This is the recommended default for most use cases.

Euler Ancestral (euler_a): Adds more variation between seeds, useful for exploring different interpretations of the same prompt.

DDIM: Faster convergence with fewer steps, though sometimes at the cost of detail. Useful for quick iterations.

The key insight: FLUX's architecture means complex samplers often overcomplicate the generation process rather than improving it.

CFG Scale Deep Dive

Unlike Stable Diffusion models that often benefit from CFG scales of 7-12, FLUX operates optimally at much lower values. Here's why:

FLUX's training methodology already incorporates strong prompt adherence, making high CFG values redundant and often detrimental. Settings between 3.0-4.0 provide the sweet spot where the model follows prompts closely without introducing artifacts or oversaturation.

For specific use cases:

- Photorealistic images: CFG 3.5

- Artistic/stylized content: CFG 4.0

- Maximum creativity: CFG 3.0

Memory Optimization Strategies

Running FLUX on limited VRAM requires strategic optimization:

Sequential Processing: The --lowvram flag processes model components sequentially rather than simultaneously, trading speed for memory efficiency.

CPU Offloading: Adding --cpu-vae moves VAE operations to system RAM, freeing 1-2GB VRAM at the cost of slower final decoding.

Attention Optimization: The --use-pytorch-cross-attention flag enables memory-efficient attention mechanisms, reducing VRAM usage by 20-30% with minimal speed impact.

Resolution Scaling: Start generation at lower resolutions (512x512) and upscale using FLUX img2img workflows for memory-efficient high-resolution outputs.

GGUF Quantized Models: Democratizing FLUX

GGUF quantization represents a breakthrough in making FLUX accessible to users with modest hardware. By reducing precision intelligently, GGUF models maintain surprisingly high quality while dramatically reducing VRAM requirements.

Understanding Quantization Levels

GGUF offers various quantization levels, each with distinct trade-offs:

Q2_K (Extreme Quantization): Reduces model to ~3GB, enabling FLUX on 4GB GPUs. Quality loss is noticeable but outputs remain coherent and usable for drafts or concepts.

Q4_K_S (Balanced Performance): At ~6GB, this hits the sweet spot for 8GB GPUs. Quality remains good for most applications, with only subtle differences from full precision.

Q5_K_S (Quality Focused): Requiring ~8GB, this level maintains excellent quality while still offering significant VRAM savings. Recommended for 12GB GPUs seeking to run additional LoRAs or control networks.

Q6_K and Q8_0 (Minimal Compromise): These higher quantization levels maintain near-perfect quality while still providing 30-50% VRAM savings compared to FP16.

Implementing GGUF in ComfyUI

Using GGUF models requires the ComfyUI-GGUF custom node:

bashcd ComfyUI/custom_nodes git clone https://github.com/city96/ComfyUI-GGUF.git

After installation, GGUF models load through specialized nodes that handle quantized formats. The workflow remains largely identical, with the UNETLoader (gguf) node replacing the standard loader.

Performance Characteristics

GGUF models exhibit interesting performance characteristics:

- Initial loading is slightly slower due to decompression

- Generation speed matches or exceeds standard models due to reduced memory bandwidth requirements

- Quality scaling is non-linear – Q4 offers 90% of full quality at 50% size

- Quantization affects fine details more than overall composition

Troubleshooting Common Issues

Even with careful setup, users encounter various challenges with FLUX. Here's how to resolve the most common issues:

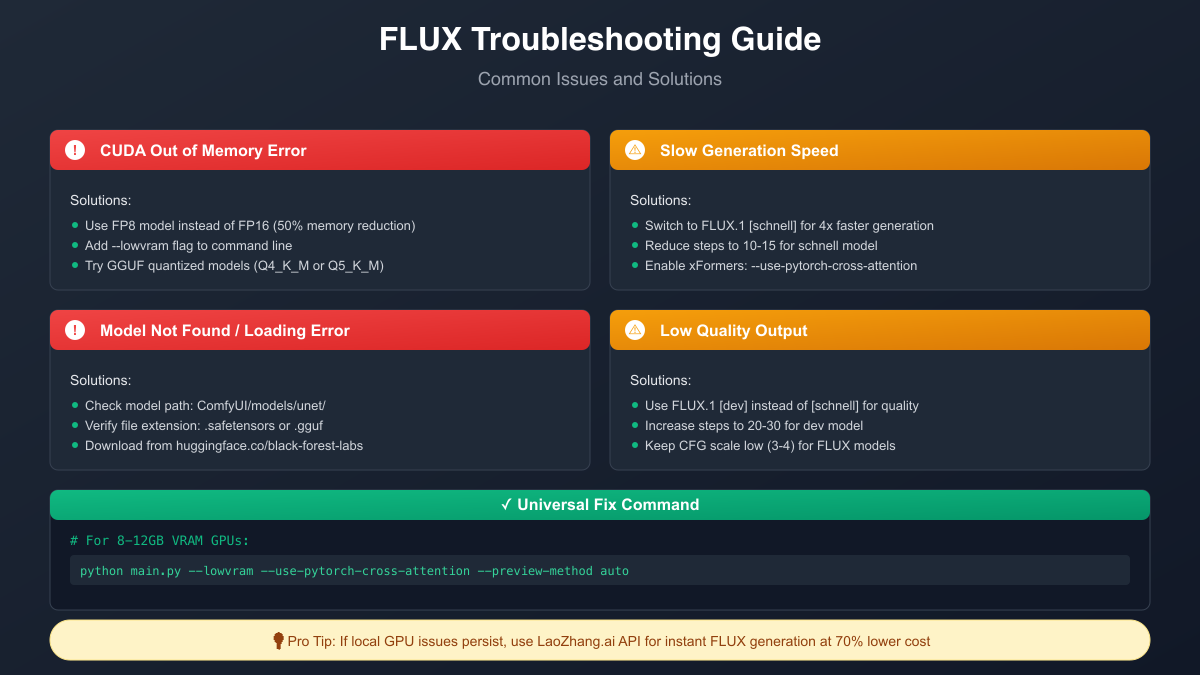

CUDA Out of Memory Errors

This frustrating error appears when VRAM requirements exceed availability. Solutions progress from simple to complex:

- Immediate Fix: Add

--lowvramto your launch command - Model Downgrade: Switch from FP16 to FP8 or GGUF quantized models

- Workflow Optimization: Reduce batch size to 1, lower resolution to 896x896

- Advanced: Enable tiled VAE processing for high resolutions

If errors persist, consider using LaoZhang.ai's API service for instant generation without hardware limitations.

Slow Generation Speed

FLUX generation shouldn't take more than 30-60 seconds on modern GPUs. If experiencing slowness:

Check GPU Utilization: Use nvidia-smi to ensure ComfyUI uses your GPU, not CPU. Low utilization indicates configuration issues.

Optimize Settings:

- Switch to schnell model for 4x speed improvement

- Reduce steps (schnell needs only 4)

- Disable preview:

--preview-method none - Enable xformers optimization

System-Level Fixes:

- Close other GPU applications

- Ensure adequate cooling (thermal throttling kills performance)

- Update GPU drivers to latest stable version

Model Loading Failures

When models fail to load, systematic debugging helps:

-

Verify File Integrity: Check model file sizes match expected values. Incomplete downloads are common.

-

Path Confirmation: Ensure models are in correct folders:

- FLUX models:

ComfyUI/models/unet/ - CLIP models:

ComfyUI/models/clip/ - VAE:

ComfyUI/models/vae/

- FLUX models:

-

Format Compatibility: FLUX requires .safetensors format. Convert .ckpt files if necessary.

-

Memory During Loading: Loading requires more VRAM than generation. Restart ComfyUI with

--lowvramif loading fails.

Quality Issues

Poor output quality often stems from incorrect settings rather than model limitations:

Blurry/Low Detail Results:

- Increase steps to 20-30 for dev model

- Ensure using correct VAE (FLUX VAE, not SD 1.5)

- Check resolution isn't being downscaled

Oversaturated/Artificial Colors:

- Reduce CFG scale to 3.5 or lower

- Avoid negative prompts (FLUX doesn't need them)

- Use "euler" sampler instead of advanced variants

Prompt Ignorance:

- Ensure both CLIP models loaded correctly

- Check text encoding connections

- Verify using FLUX-compatible text encoders

Recent 2025-Specific Issues

The December 2024/January 2025 ComfyUI updates introduced new compatibility challenges:

API Workflow Crashes: The web interface works but API calls fail. Current workaround involves using web interface or reverting to pre-December builds.

Multi-LoRA Memory Leaks: Using multiple FLUX LoRAs causes excessive VRAM usage. Limit to 1-2 LoRAs simultaneously until patches arrive.

Mac Metal Performance: FP8 models crash on Apple Silicon. Use FP16 versions or GGUF Q6_K for Mac systems.

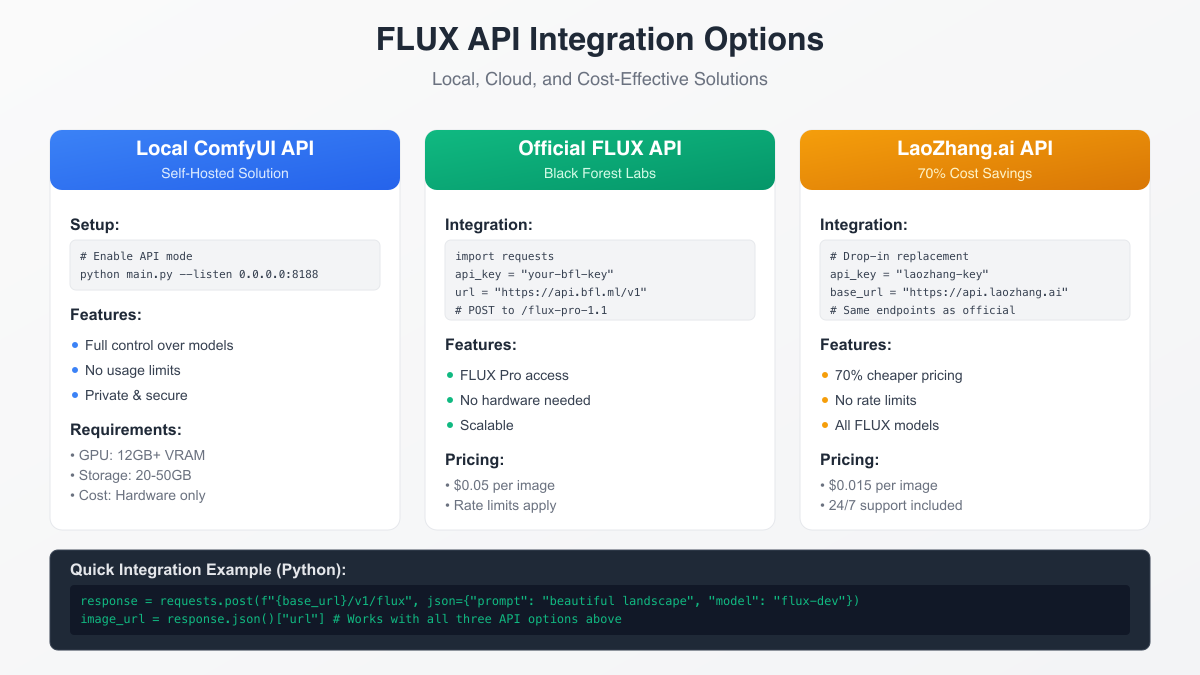

API Integration Options

While local generation offers maximum control, API solutions provide scalability and eliminate hardware constraints. Understanding each option helps choose the right approach for your needs.

Local ComfyUI API

Transform your ComfyUI installation into an API server:

bashpython main.py --listen 0.0.0.0:8188 --enable-cors-header

This enables programmatic access to your workflows:

pythonimport json import requests with open('flux_workflow.json', 'r') as f: workflow = json.load(f) # Modify prompt workflow["6"]["inputs"]["text"] = "Your prompt here" # Submit job response = requests.post("http://localhost:8188/prompt", json={"prompt": workflow})

Benefits include full control, no usage limits, and data privacy. However, you're limited by local hardware and responsible for maintenance.

Official FLUX API

Black Forest Labs offers direct API access to FLUX Pro:

pythonimport requests headers = { "Authorization": f"Bearer {BFL_API_KEY}", "Content-Type": "application/json" } data = { "prompt": "Beautiful landscape", "model": "flux-pro-1.1", "width": 1024, "height": 1024 } response = requests.post("https://api.bfl.ml/v1/flux", headers=headers, json=data)

At $0.05 per image, costs accumulate quickly for production use. Rate limits and availability issues can impact reliability.

LaoZhang.ai: Cost-Effective Alternative

LaoZhang.ai provides a compelling alternative with 70% cost savings:

python# Drop-in replacement for OpenAI/BFL APIs import openai openai.api_key = "your-laozhang-key" openai.api_base = "https://api.laozhang.ai/v1" # Same code structure, 70% lower cost response = openai.Image.create( model="flux-dev", prompt="Stunning sunset over mountains", n=1, size="1024x1024" )

Key advantages:

- $0.015 per image vs $0.05 official pricing

- No rate limits or throttling

- Support for all FLUX models (dev, schnell, pro)

- 24/7 technical support

- Simple integration with existing codebases

For teams generating hundreds or thousands of images, the savings are substantial while maintaining full quality and reliability.

Performance Optimization Best Practices

Maximizing FLUX performance requires understanding both hardware and software optimization techniques.

Batch Processing Strategies

While batch generation seems efficient, FLUX's memory requirements make single-image processing often faster:

python# Optimal for FLUX for prompt in prompts: generate_single(prompt) # Better memory management # Rather than generate_batch(prompts) # Can cause OOM errors

This counterintuitive approach prevents memory fragmentation and allows consistent generation times.

Resolution Optimization

FLUX trained on 1024x1024 images, making this the optimal resolution. However, intelligent resolution choices improve efficiency:

- Draft Generation: Start at 512x512 for rapid iteration

- Final Output: Generate at 1024x1024 or use img2img upscaling

- Aspect Ratios: Maintain total pixel count near 1 megapixel (e.g., 1152x896)

Hardware Acceleration

Beyond basic GPU usage, several optimizations boost performance:

Enable TensorFloat-32: On Ampere+ GPUs, TF32 provides free performance gains:

pythontorch.backends.cuda.matmul.allow_tf32 = True

Persistent Cache: Keep models in memory between generations:

bashpython main.py --cache-models

Multi-GPU Setup: Distribute model components across GPUs:

bashpython main.py --multi-gpu

Workflow Optimization

Efficient workflows significantly impact generation speed:

- Pre-encode Prompts: Cache CLIP encodings for repeated prompts

- Reuse Latents: For variations, modify existing latents rather than generating new

- Progressive Enhancement: Generate low-res then enhance, rather than direct high-res

- Selective Processing: Use regional prompting to regenerate only specific areas

Future Outlook and Best Practices

The FLUX ecosystem continues evolving rapidly. Staying informed about developments ensures you leverage new capabilities as they emerge.

Upcoming Developments

Black Forest Labs roadmap hints at exciting additions:

- FLUX 2.0: Improved architecture with better prompt understanding

- Video Models: FLUX-based video generation entering beta

- Control Mechanisms: Advanced ControlNet implementations

- Mobile Optimization: Quantized models for edge devices

ComfyUI development focuses on:

- Native FLUX support without custom nodes

- Improved memory management

- Real-time preview capabilities

- Enhanced workflow sharing

Community Resources

The FLUX community provides invaluable resources:

Official Channels:

- ComfyUI GitHub for updates and issue tracking

- Black Forest Labs Discord for model announcements

- Reddit r/comfyui for workflow sharing

Learning Resources:

- ComfyUI-Wiki.com for comprehensive tutorials

- YouTube channels demonstrating advanced techniques

- GitHub repositories with pre-built workflows

Best Practices Summary

Success with FLUX in ComfyUI comes from:

- Start Simple: Master basic workflows before adding complexity

- Monitor Resources: Use task manager to understand bottlenecks

- Iterate Quickly: Use schnell for drafts, dev for finals

- Document Workflows: Save and annotate successful configurations

- Stay Updated: Regular updates bring performance improvements

- Consider APIs: For production, APIs like LaoZhang.ai offer reliability

Conclusion

ComfyUI and FLUX represent the cutting edge of AI image generation, offering unprecedented quality and control. Whether running locally on high-end hardware, optimizing for modest GPUs with GGUF models, or leveraging cost-effective API solutions like LaoZhang.ai, the tools exist to bring your creative visions to life.

The key to success lies in understanding your specific needs and choosing the right approach. Local generation offers maximum control and privacy, while API solutions provide scalability and eliminate hardware constraints. GGUF quantization bridges the gap, making FLUX accessible to a broader audience without sacrificing too much quality.

As the ecosystem continues evolving, staying engaged with the community and experimenting with new techniques ensures you remain at the forefront of AI-generated art. The combination of ComfyUI's flexibility and FLUX's capabilities opens creative possibilities limited only by imagination.

Remember, whether generating locally or through APIs, the goal remains the same: creating stunning images that realize your creative vision. Choose the tools that best support your workflow, and don't hesitate to leverage services like LaoZhang.ai when scalability and cost-effectiveness matter.

The future of AI image generation is bright, and with ComfyUI and FLUX, you're equipped to be part of this exciting creative revolution.