Google's Gemini API offers a free tier that requires no credit card and provides developers with 5-15 requests per minute and 100-1,000 requests per day, depending on the model. As of January 2026, the free tier includes access to the Gemini 2.5 Pro, 2.5 Flash, and 2.5 Flash-Lite models with a 1 million token context window, roughly eight times ChatGPT's 128K limit. Following the December 2025 quota reductions that caught many developers off guard, the free tier is now best suited for development, testing, and low-volume production rather than high-traffic applications. This guide covers how to use Gemini's free tier effectively in 2026.

What Changed in December 2025 (And Why It Matters)

The Gemini API landscape shifted dramatically on December 7, 2025, when Google adjusted free tier quotas. The changes caught many developers off guard, with unexpected 429 errors disrupting applications that had been running smoothly for months. Understanding what happened, and why, is crucial for anyone building on the Gemini API in 2026.

Google quietly reduced rate limits by 50-80% across most free tier models in early December. The most dramatic reduction hit the Gemini 2.5 Flash model, which saw its daily request limit plummet from approximately 250 requests per day to just 20-50 requests per day. Similarly, Gemini 2.5 Pro limits dropped from around 50 requests per day to roughly 25, while per-minute limits decreased from 15 RPM to just 5 RPM for the Pro model.

The timing of these changes is significant. Google rolled them out without major announcements, leaving developers to discover the new limits through runtime errors rather than documentation updates. Many production applications that had been running within comfortable margins suddenly started hitting rate limits, causing service disruptions and frustrated users. The lesson here is clear: free tier limits can change without warning, and building critical production systems on free quotas carries inherent risk.

Why did Google make these changes? While the company hasn't provided detailed explanations, several factors likely contributed to the decision. First, the rapid adoption of Gemini's free tier may have exceeded Google's infrastructure allocations for non-paying users. Second, the December timing suggests these adjustments were part of year-end resource planning. Third, the introduction of newer models like Gemini 3 Pro (which has no free API access at all) indicates Google's strategy is shifting toward monetization of their most capable offerings.

January 5, 2026 marked another important date: Google began charging for Grounding with Google Search on Gemini 3 models. This continues the trend of monetizing advanced features while maintaining basic free access. For developers, the message is clear: the free tier remains valuable for development and testing, but the window for free production usage is narrowing.

Current Free Tier Limits - January 2026 Verified

Understanding the exact rate limits for each model is essential for building reliable applications. The following data has been verified against Google's official documentation as of January 16, 2026. Note that Google may adjust these limits at any time, so always check the official pricing page for the most current information.

| Model | RPM (Requests/Min) | TPM (Tokens/Min) | RPD (Requests/Day) | Best For |
|---|---|---|---|---|
| Gemini 2.5 Pro | 5 | 250,000 | 100 | Complex reasoning, analysis |
| Gemini 2.5 Flash | 15 | 250,000 | 500 | Balanced speed and quality |
| Gemini 2.5 Flash-Lite | 15 | 250,000 | 1,000 | High-throughput tasks |

Understanding These Limits

The rate limiting system operates across three dimensions simultaneously, and exceeding any single limit triggers throttling. Requests per minute (RPM) controls how frequently you can call the API within a 60-second window. Tokens per minute (TPM) limits the total input data you can process, regardless of how many requests you make. Requests per day (RPD) caps your total daily usage, resetting at midnight Pacific Time.

These limits are applied per project, not per API key. This means creating multiple API keys within the same Google Cloud project won't increase your available quota—all keys share the same pool. However, creating separate projects does give you independent quotas, which can be a legitimate strategy for separating development, staging, and production environments.
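To make the three dimensions concrete, here is a minimal client-side sketch that mirrors them before a request is sent. The class name `SlidingWindowLimiter` and its methods are hypothetical, the per-request token count is assumed to be an estimate supplied by the caller, and the daily counter is assumed to be reset elsewhere at the daily rollover. It only approximates the server's accounting, so real 429 responses still need handling.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Illustrative client-side mirror of RPM, TPM, and RPD limits."""

    def __init__(self, rpm: int, tpm: int, rpd: int, clock=time.monotonic):
        self.rpm, self.tpm, self.rpd = rpm, tpm, rpd
        self.clock = clock  # injectable so the limiter can be tested
        self.minute_events = deque()  # (timestamp, tokens) from the last 60 s
        self.requests_today = 0  # assumption: caller resets this once per day

    def _prune(self, now: float) -> None:
        # Drop events that have left the 60-second window
        while self.minute_events and now - self.minute_events[0][0] >= 60:
            self.minute_events.popleft()

    def try_acquire(self, tokens: int) -> bool:
        """Return True and record the request if no limit would be exceeded."""
        now = self.clock()
        self._prune(now)
        tokens_in_window = sum(t for _, t in self.minute_events)
        if len(self.minute_events) >= self.rpm:
            return False  # requests-per-minute limit
        if tokens_in_window + tokens > self.tpm:
            return False  # tokens-per-minute limit
        if self.requests_today >= self.rpd:
            return False  # requests-per-day limit
        self.minute_events.append((now, tokens))
        self.requests_today += 1
        return True
```

With the Gemini 2.5 Flash figures from the table, this would be constructed as `SlidingWindowLimiter(rpm=15, tpm=250_000, rpd=500)`, and a request would be sent only when `try_acquire(estimated_tokens)` returns `True`.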

What the Free Tier Includes

Beyond rate limits, the free tier provides access to genuinely impressive capabilities. The 1 million token context window is particularly notable—you can process entire codebases, lengthy documents, or extensive conversation histories in a single request. Multimodal support means you can send text, images, audio, and video as inputs, enabling rich applications that would require multiple APIs from other providers. Commercial use is explicitly permitted, though with important caveats about data usage that we'll discuss in the regional restrictions section.

What Free Tier Doesn't Include

Certain features require paid access. Gemini 3 Pro, Google's newest and most capable model, has no free API quota—you can only test it through the AI Studio chat interface. Fine-tuning capabilities are restricted to paid tiers. Perhaps most importantly, there's no guaranteed service level agreement (SLA) for free tier users, meaning Google makes no uptime commitments and can change limits or availability without notice.

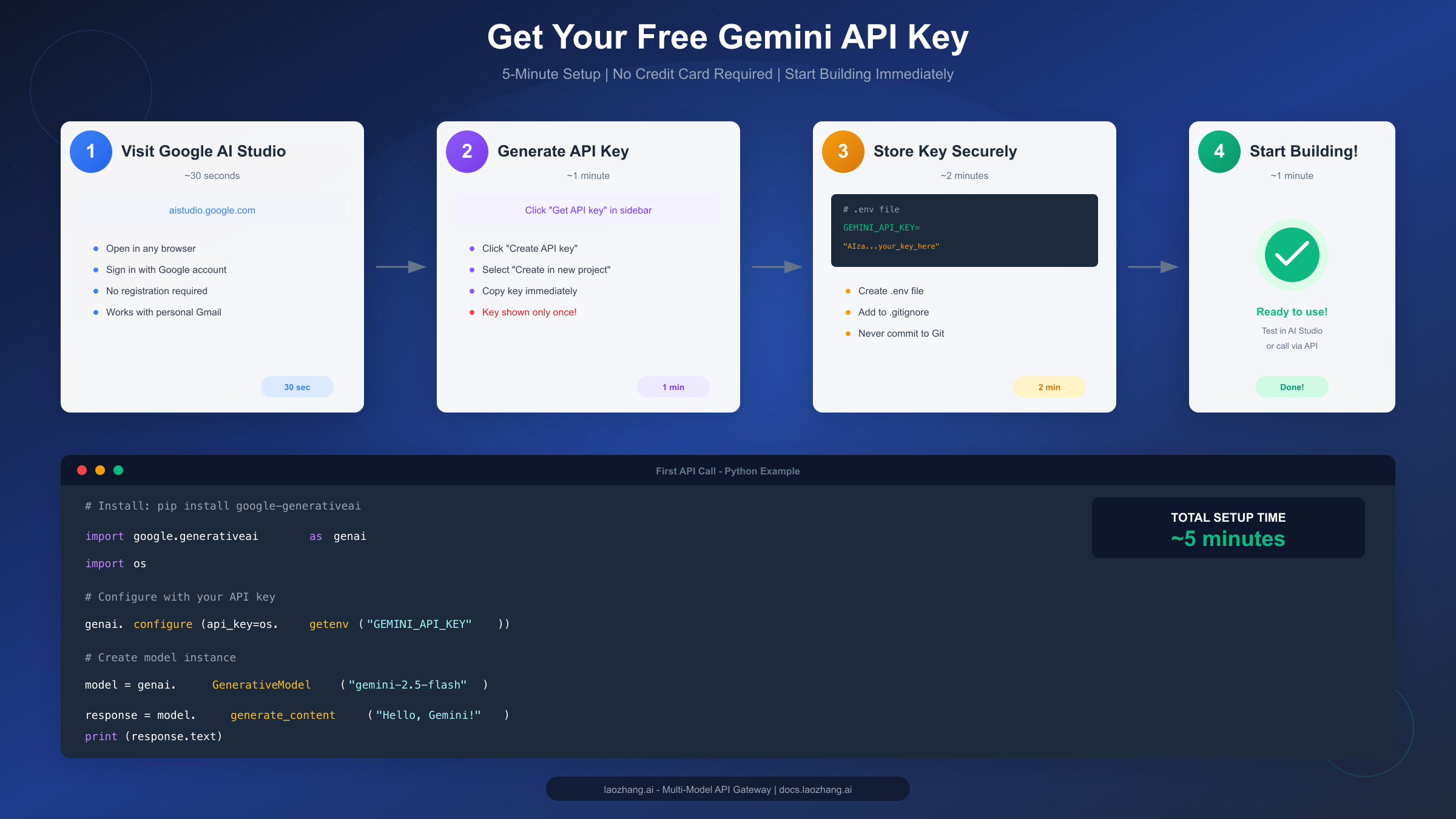

Getting Your Free API Key (5-Minute Setup)

Setting up Gemini API access is remarkably straightforward compared to other AI providers. You don't need to provide a credit card, wait for approval, or navigate complex enterprise onboarding. Here's how to get started in about five minutes.

Step 1: Visit Google AI Studio

Navigate to aistudio.google.com in any modern browser. Sign in with your existing Google account—this can be a personal Gmail account, a Google Workspace account, or any Google identity. No separate registration is required.

Step 2: Generate Your API Key

Once logged in, look for the "Get API key" option in the left sidebar. Click "Create API key" and select "Create API key in new project" for the simplest setup. Google will automatically create a new Google Cloud project to host your API key.

After a few seconds, your API key will be displayed. This is the only time you'll see the complete key, so copy it immediately and store it securely. Treat this key like a password—anyone with access to it can make API calls charged to your account (or consuming your free quota).

Step 3: Store Your Key Securely

Never hardcode API keys directly in your source code. Instead, use environment variables:

```bash
echo 'GEMINI_API_KEY="your_api_key_here"' > .env

# Add .env to .gitignore to prevent accidental commits
echo '.env' >> .gitignore
```

For production deployments, use your platform's secrets management system—Vercel environment variables, AWS Secrets Manager, Google Secret Manager, or similar services.

Step 4: Make Your First API Call

Install the official Python SDK and test your setup:

```python
# Install the SDK first: pip install google-generativeai
import os

import google.generativeai as genai

# Configure with your API key
genai.configure(api_key=os.getenv("GEMINI_API_KEY"))

# Create model instance
model = genai.GenerativeModel("gemini-2.5-flash")

# Generate content
response = model.generate_content("Explain quantum computing in one paragraph.")
print(response.text)
```

If you see a coherent response about quantum computing, congratulations—your setup is complete. If you encounter errors, double-check that your environment variable is set correctly and that you haven't exceeded any rate limits.

Troubleshooting Common Setup Issues

The most frequent setup problem is the API key not being recognized. Verify that you've exported the environment variable in your current terminal session, or that your IDE/runtime is loading the .env file. Another common issue is region restrictions—if you're in China, Russia, or certain other regions, API access may be blocked or limited.
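One reason the key may not be recognized is that `os.getenv` reads only the process environment and does not read a `.env` file on its own. The following is a minimal standard-library sketch of a loader; the function name `load_env_file` is hypothetical, and it handles only simple `KEY=VALUE` lines with optional surrounding quotes.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict:
    """Parse simple KEY=VALUE lines and export them through os.environ."""
    loaded = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and anything that is not KEY=VALUE
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip('"').strip("'")
        # Variables already set in the environment take precedence
        os.environ.setdefault(key, value)
        loaded[key] = value
    return loaded
```

Calling `load_env_file()` before `genai.configure(...)` would make the key written in Step 3 visible to `os.getenv("GEMINI_API_KEY")`. The python-dotenv package provides a more complete parser if the file format grows beyond simple pairs.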

Choosing the Right Model for Your Project

With three main models available in the free tier, selecting the appropriate one for your use case significantly impacts both the quality of results and how efficiently you use your quota. Each model represents a different trade-off between capability and throughput.

Gemini 2.5 Pro: Maximum Reasoning Power

Gemini 2.5 Pro delivers the highest reasoning capabilities available in the free tier. It excels at complex analytical tasks, sophisticated code generation, detailed explanations, and problems requiring multi-step reasoning. However, with only 5 RPM and 100 RPD on the free tier, you need to be strategic about when to use it.

Best applications for Pro include: complex debugging sessions where you need the model to understand large codebases, research tasks requiring nuanced analysis, and situations where accuracy matters more than speed. Reserve Pro for your most demanding use cases and route simpler tasks to Flash models.

Gemini 2.5 Flash: The Balanced Choice

Flash offers the sweet spot between capability and quota. With 15 RPM and 500 RPD, you have substantially more headroom for iterative development and moderate-traffic applications. The model handles most common tasks—content generation, summarization, basic code assistance, and conversational interactions—with quality that's often indistinguishable from Pro for straightforward queries.

For many developers, Flash should be the default choice. Only escalate to Pro when you encounter tasks that Flash handles poorly, and consider Flash-Lite for high-volume scenarios where absolute quality isn't critical.

Gemini 2.5 Flash-Lite: Maximum Throughput

Flash-Lite is optimized for high-throughput scenarios where you need to process many requests with acceptable (but not exceptional) quality. With 1,000 RPD available, it's ideal for batch processing, initial filtering or classification tasks, and applications where you can tolerate occasional lower-quality responses.

Consider Flash-Lite for: pre-processing pipelines that filter content before passing it to more capable models, bulk content classification, simple extraction tasks, and development/testing where you want to iterate quickly without burning through your Pro or Flash quota.

Implementing Model Selection in Code

A practical approach is building a routing layer that selects models based on task complexity:

```python
import google.generativeai as genai


def select_model(task_complexity: str) -> str:
    """Route to appropriate model based on task complexity."""
    model_map = {
        "simple": "gemini-2.5-flash-lite",
        "standard": "gemini-2.5-flash",
        "complex": "gemini-2.5-pro",
    }
    # Unknown complexity labels fall back to Flash
    return model_map.get(task_complexity, "gemini-2.5-flash")


# Usage
model_name = select_model("complex")
model = genai.GenerativeModel(model_name)
```

This pattern lets you maximize your effective daily capacity by reserving powerful models for genuinely complex tasks.
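A possible extension is to fall back to a lighter model once the preferred model's daily quota is spent. The sketch below is illustrative only: `select_with_budget`, the fallback ordering, and the `used_today` dictionary of per-model request counts are all assumptions, and the daily limits are copied from the table earlier in this guide.

```python
from typing import Dict, Optional

# Free tier requests per day, taken from the limits table in this guide
FREE_TIER_RPD = {
    "gemini-2.5-pro": 100,
    "gemini-2.5-flash": 500,
    "gemini-2.5-flash-lite": 1000,
}

# Preferred model first, then fallbacks (an assumed ordering)
FALLBACK_ORDER = {
    "complex": ["gemini-2.5-pro", "gemini-2.5-flash", "gemini-2.5-flash-lite"],
    "standard": ["gemini-2.5-flash", "gemini-2.5-flash-lite"],
    "simple": ["gemini-2.5-flash-lite", "gemini-2.5-flash"],
}


def select_with_budget(task_complexity: str, used_today: Dict[str, int]) -> Optional[str]:
    """Return the first candidate model with daily quota left, or None."""
    candidates = FALLBACK_ORDER.get(task_complexity, FALLBACK_ORDER["standard"])
    for name in candidates:
        if used_today.get(name, 0) < FREE_TIER_RPD[name]:
            return name
    return None  # every candidate has exhausted its daily quota
```

A `None` result signals that the request should be queued or deferred until the daily reset rather than sent.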

Regional Restrictions & Commercial Use

One of the most overlooked aspects of Gemini's free tier involves geographic restrictions and data usage policies. These limitations can significantly impact whether the free tier is even available for your project, so understanding them before you build is essential.

EEA, UK, and Switzerland Restrictions

Google's terms of service contain a critical restriction that many developers miss: if your application serves users in the European Economic Area (EEA), United Kingdom, or Switzerland, you must use paid services only. The free tier is not available for applications serving these regions, regardless of where you as the developer are located.

This restriction applies based on where your users are, not where you are. A developer in the United States building an application used by customers in Germany must use paid tiers. The key determining factor is whether your application "serves" users in these regions—if your app is accessible to EU users and they use it, you're subject to this requirement.

Why does this restriction exist? It likely relates to GDPR compliance and data processing requirements. On paid tiers, Google commits to not using your data to improve their models and provides additional data processing protections. The free tier's data usage policy (where your prompts may be used for model improvement) may not meet EU data protection standards.

Understanding "Commercial Use Allowed"

The good news is that Gemini's free tier explicitly permits commercial applications in regions where it's available. Unlike some competitor APIs that restrict free tier usage to personal or non-commercial projects, you can legally build and monetize products using Gemini's free API access.

However, "commercial use allowed" comes with the important caveat that your prompts and responses may be used by Google to improve their products. For applications handling sensitive business data, this may not be acceptable. The paid tier provides assurance that "prompts and responses are not used to improve Google's models," which is essential for many business applications.

Practical Implications

For developers building global applications, the regional restrictions create a decision point. You can either use paid tiers from the start (ensuring compliance regardless of user location), implement geo-blocking to prevent EU/UK access (limiting your market), or run a hybrid approach with free tier for some regions and paid for others (adding operational complexity).

If you're building an internal tool for a US-based company with no EU operations, the free tier remains fully available. If you're building a consumer application that might attract international users, planning for paid tiers from the beginning prevents compliance issues later.
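For the hybrid approach, the routing decision comes down to a country check. The sketch below assumes the user's ISO 3166-1 alpha-2 country code is already known (how it is obtained, for example from an account profile or IP geolocation, is outside its scope), and the function name `requires_paid_tier` is hypothetical.

```python
# EU member states plus the remaining EEA countries, the UK, and Switzerland
PAID_ONLY_REGIONS = frozenset({
    # EU member states
    "AT", "BE", "BG", "HR", "CY", "CZ", "DK", "EE", "FI", "FR", "DE", "GR",
    "HU", "IE", "IT", "LV", "LT", "LU", "MT", "NL", "PL", "PT", "RO", "SK",
    "SI", "ES", "SE",
    # EEA countries outside the EU
    "IS", "LI", "NO",
    # United Kingdom and Switzerland
    "GB", "CH",
})


def requires_paid_tier(country_code: str) -> bool:
    """True if a user in this country must be served from a paid-tier project."""
    return country_code.strip().upper() in PAID_ONLY_REGIONS
```

An application could use this to pick between two API keys, one from a free tier project and one from a billing-enabled project, at request time.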

Handling Rate Limits & 429 Errors

Rate limit errors are among the most common issues developers face when working with the Gemini API. Understanding how to handle them gracefully—both in development and production—is crucial for building reliable applications.

Understanding 429 Errors

When you exceed any rate limit (RPM, TPM, or RPD), the Gemini API returns an HTTP 429 status code with a message like "Resource exhausted" or "Quota exceeded." The response typically includes information about which limit was exceeded and when you can retry. Daily limits (RPD) reset at midnight Pacific Time, while per-minute limits (RPM, TPM) reset every 60 seconds.

A common mistake is assuming all 429 errors are identical. In reality, hitting your RPM limit is very different from exhausting your daily quota. Per-minute limits recover quickly—wait 60 seconds and try again. Daily limits, however, mean you're done until midnight PT. Your error handling should distinguish between these scenarios.
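The distinction can be expressed as a small helper that picks a wait time. This is a sketch under stated assumptions: the caller has already determined which kind of limit was hit (the `limit_kind` labels `"per_minute"` and `"per_day"` are hypothetical), and both function names are illustrative.

```python
from datetime import datetime, timedelta, timezone, tzinfo
from typing import Optional


def seconds_until_daily_reset(now: datetime, pacific: Optional[tzinfo] = None) -> float:
    """Seconds from a timezone-aware `now` until the next midnight Pacific Time."""
    if pacific is None:
        from zoneinfo import ZoneInfo  # Python 3.9+, needs system tz data
        pacific = ZoneInfo("America/Los_Angeles")
    local_now = now.astimezone(pacific)
    next_day = (local_now + timedelta(days=1)).date()
    next_midnight = datetime(next_day.year, next_day.month, next_day.day, tzinfo=pacific)
    # Subtract in UTC so daylight saving transitions are counted correctly
    delta = next_midnight.astimezone(timezone.utc) - now.astimezone(timezone.utc)
    return delta.total_seconds()


def retry_delay(limit_kind: str, now: datetime, pacific: Optional[tzinfo] = None) -> float:
    """Wait 60 s for per-minute limits, or until the daily reset for daily ones."""
    if limit_kind == "per_day":
        return seconds_until_daily_reset(now, pacific)
    return 60.0
```

A long daily wait is usually a signal to queue the work or switch models rather than to sleep in place.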

Implementing Exponential Backoff with Jitter

The gold standard for handling rate limit errors is exponential backoff with random jitter. This pattern progressively increases wait times between retries while adding randomness to prevent synchronized retry storms when multiple clients hit limits simultaneously.

```python
import random
import time

import google.generativeai as genai
from google.api_core import exceptions


def call_with_backoff(func, max_retries=5, base_delay=1):
    """Execute function with exponential backoff retry logic."""
    for attempt in range(max_retries):
        try:
            return func()
        except exceptions.ResourceExhausted:
            # Re-raise once the final attempt has failed
            if attempt == max_retries - 1:
                raise
            # Calculate delay with exponential backoff and jitter
            delay = base_delay * (2 ** attempt) + random.uniform(0, 1)
            print(f"Rate limited. Retrying in {delay:.2f} seconds...")
            time.sleep(delay)
    # Only reached when max_retries is 0
    raise Exception("Max retries exceeded")


# Usage (assumes genai.configure(...) was already called as in the setup step)
def make_api_call():
    model = genai.GenerativeModel("gemini-2.5-flash")
    return model.generate_content("Your prompt here")


response = call_with_backoff(make_api_call)
```

Monitoring Your Quota Usage

Before hitting limits, proactive monitoring helps you understand usage patterns and plan capacity. Google AI Studio provides a dashboard showing your current quota consumption. For programmatic monitoring, track your request counts internally and implement alerts when approaching limits.

A simple approach is maintaining counters that track your usage throughout the day:

```python
from dataclasses import dataclass
from datetime import date, datetime
from typing import Optional
from zoneinfo import ZoneInfo

# Daily quotas reset at midnight Pacific Time, so track the Pacific date
PACIFIC = ZoneInfo("America/Los_Angeles")


@dataclass
class QuotaTracker:
    requests_today: int = 0
    last_reset: Optional[date] = None
    daily_limit: int = 500  # Adjust based on your model

    def check_and_increment(self) -> int:
        today = datetime.now(PACIFIC).date()
        # Reset the counter when the Pacific Time calendar date changes
        if self.last_reset != today:
            self.requests_today = 0
            self.last_reset = today
        if self.requests_today >= self.daily_limit:
            raise Exception("Daily quota exceeded")
        self.requests_today += 1
        return self.daily_limit - self.requests_today


tracker = QuotaTracker()
remaining = tracker.check_and_increment()
print(f"Requests remaining today: {remaining}")
```

Emergency Troubleshooting Guide

If your application suddenly starts returning 429 errors, here's a quick diagnostic checklist:

First, determine which limit you've hit. Check Google AI Studio's quota dashboard to see if you've exhausted daily limits or if per-minute limits are the issue. If it's a daily limit, you'll need to wait for midnight PT reset or upgrade to paid tiers.

Second, verify you haven't accidentally introduced a loop or bug that's making excessive requests. Review recent code changes and check your logging for unusual request patterns.

Third, consider whether December 2025's quota reductions affected your previously-working application. If you were operating near the old limits, you may now be over the new limits.

For a deeper dive into handling Gemini API rate limits, including advanced patterns like request queuing and circuit breakers, see our complete guide to 429 error handling.

When to Upgrade: Free vs Paid Analysis

The decision to move from free to paid tiers isn't just about hitting rate limits—it's about matching your tier to your actual needs while optimizing costs. Understanding the full picture helps you make an informed decision.

Understanding the Tier Structure

Google's Gemini API uses a tiered pricing system beyond free:

| Tier | Qualification | Key Limits |
|---|---|---|
| Free | Any user | 5-15 RPM, 100-1,000 RPD |
| Tier 1 | Billing enabled | 150-2,000 RPM, 10,000+ RPD |
| Tier 2 | $250+ cumulative spend | 1,000+ RPM, 50,000+ RPD |
| Enterprise | Custom agreement | Negotiated limits |

Moving from free to Tier 1 requires only enabling billing on your Google Cloud project—there's no approval process, and you immediately gain access to substantially higher limits. The jump is dramatic: roughly 30x more requests per minute for Pro models and 10-100x more daily requests depending on the model.

Cost Reality Check

Gemini's paid pricing is competitive with alternatives. Here's what typical usage costs look like:

- Gemini 2.5 Flash: $0.30 per million input tokens, $2.50 per million output tokens

- Gemini 2.5 Flash-Lite: $0.10 per million input tokens, $0.40 per million output tokens

- Gemini 2.5 Pro: $1.25 per million input tokens, $5.00 per million output tokens

For context, processing 10,000 moderate requests (average 500 input tokens, 1,000 output tokens each) on Gemini 2.5 Flash would cost approximately $26.50. That same volume on the free tier would take 20+ days due to daily limits.
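The arithmetic behind that estimate can be sketched as a small calculator. The function names are hypothetical; the prices and the 500 requests per day limit are the figures quoted in this guide.

```python
import math


def estimate_cost_usd(
    requests: int,
    input_tokens_per_request: int,
    output_tokens_per_request: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Total cost in USD for a batch of similarly sized requests."""
    input_cost = requests * input_tokens_per_request / 1_000_000 * input_price_per_million
    output_cost = requests * output_tokens_per_request / 1_000_000 * output_price_per_million
    return input_cost + output_cost


def free_tier_days(requests: int, requests_per_day: int) -> int:
    """Minimum number of days needed to send `requests` under a daily cap."""
    return math.ceil(requests / requests_per_day)


# Gemini 2.5 Flash: 5M input tokens at $0.30/M plus 10M output tokens at $2.50/M
cost = estimate_cost_usd(10_000, 500, 1_000, 0.30, 2.50)
print(f"${cost:.2f}")  # $26.50
print(free_tier_days(10_000, 500))  # 20
```

Swapping in the Flash-Lite or Pro prices from the list above gives the equivalent figure for those models.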

The $300 Free Credit Opportunity

New Google Cloud users receive $300 in free credits valid for 90 days. These credits can offset initial paid tier usage, effectively giving you an extended trial period with full Tier 1 limits. If you're serious about building on Gemini, this credit substantially reduces the barrier to exploring paid capabilities.

Decision Framework

Consider upgrading to paid when any of these apply:

- You need EU/UK coverage: If your users are in these regions, paid tiers are mandatory regardless of volume.

- Daily limits constrain your development: If you're regularly hitting RPD limits during active development, the productivity loss exceeds the cost of paid access.

- You're approaching production: Free tier instability (potential quota changes) creates unacceptable risk for production systems.

- Data privacy matters: Paid tiers guarantee your data won't be used for model training.

Stay on free tier when:

- You're learning or prototyping: The free tier provides genuine access to explore Gemini's capabilities.

- Volume is truly low: Internal tools or personal projects with occasional usage fit well.

- You can tolerate occasional limits: If 429 errors are acceptable, free tier remains valuable.

Alternative Approaches

For teams managing multiple AI providers, gateway services can simplify operations. Services like laozhang.ai provide unified access to multiple AI models including Gemini through a single API endpoint, often with benefits like consolidated billing and simplified integration. For teams needing high-volume access or wanting to experiment across providers, this can reduce operational overhead compared to managing multiple direct provider relationships.

For comparison with other free AI API options, see our guides on free OpenAI API options and the Claude API setup guide.

Conclusion & Best Practices Summary

The Gemini API free tier remains a valuable resource for developers in 2026, despite the December 2025 quota reductions. With no credit card required, a 1 million token context window, and genuine multimodal capabilities, it provides meaningful access to cutting-edge AI technology for learning, prototyping, and low-volume production use.

Key Takeaways

The current free tier provides 5-15 requests per minute and 100-1,000 requests per day depending on which model you choose. Daily limits reset at midnight Pacific Time, while per-minute limits recover within 60 seconds. Regional restrictions prevent free tier usage for applications serving EEA, UK, and Swiss users; paid tiers are required for those markets.

December 2025 changes reduced quotas by 50-80% compared to earlier in the year. If your application worked before December and fails now, this is likely why. Gemini 3 Pro has no free API access—only paid tiers can use it programmatically.

Best Practices for Free Tier Success

Route requests strategically by using Flash-Lite for high-volume tasks, Flash for most standard work, and reserving Pro for genuinely complex problems. Implement proper retry logic with exponential backoff to handle rate limits gracefully. Monitor your usage proactively rather than discovering limits through errors.

Store API keys securely using environment variables and never commit them to version control. Consider your user geography before building—if EU users are possible, plan for paid tiers from the start.

When to Move Beyond Free

The free tier works well for development, personal projects, and applications with modest traffic from non-EU regions. When you need higher volumes, guaranteed uptime, data privacy assurances, or EU market access, the transition to paid tiers is straightforward—just enable billing and you immediately gain Tier 1 access.

For developers seeking unified access to multiple AI providers including Gemini, services like laozhang.ai offer an alternative path with simplified integration and consolidated management. More information is available at docs.laozhang.ai.

The Gemini API free tier represents genuine value in the AI development ecosystem. Used strategically with an understanding of its limitations, it provides an excellent foundation for building AI-powered applications. Just remember that free tiers are best viewed as development resources rather than production infrastructure—plan your upgrade path early to avoid surprises when your application grows.