OpenAI and Google released their most powerful AI models within weeks of each other in late 2025, creating the most competitive landscape we've seen in the AI industry. GPT-5.2, launched on December 11, 2025, brings breakthrough reasoning capabilities with a 54.2% score on the challenging ARC-AGI-2 benchmark. Gemini 3, released on November 18, 2025, counters with a massive 1 million token context window and native multimodal processing. This comprehensive guide cuts through the marketing noise to deliver concrete benchmark comparisons, real-world API pricing calculations, and actionable recommendations based on your specific use case.

Whether you're a developer choosing an AI backbone for your next project, an enterprise evaluating AI platforms, or a researcher pushing the boundaries of what's possible, understanding the nuanced differences between these two frontier models is essential. We've analyzed official benchmarks, tested both APIs extensively, and calculated real-world costs to bring you the most thorough comparison available.

GPT-5.2 and Gemini 3: The 2025 AI Showdown

The release of GPT-5.2 and Gemini 3 marks a significant milestone in the evolution of large language models. Both OpenAI and Google have pushed the boundaries of what AI can accomplish, but they've taken distinctly different approaches to achieving frontier-level performance.

GPT-5.2 arrived on December 11, 2025, as OpenAI's most capable model to date. The release introduced three distinct tiers: GPT-5.2 Instant for rapid responses, GPT-5.2 Thinking for complex reasoning tasks, and GPT-5.2 Pro for maximum capability. OpenAI's focus has clearly been on reasoning depth and coding proficiency, areas where they've historically maintained an edge.

Gemini 3 launched earlier on November 18, 2025, representing Google's response to the intensifying AI race. Google structured Gemini 3 into three variants as well: Gemini 3 Flash for cost-effective applications, Gemini 3 Pro for balanced performance, and Gemini 3 Deep Think for extended reasoning. The standout feature is the 1 million token context window, enabling analysis of entire codebases or lengthy documents in a single prompt.

The timing of these releases isn't coincidental. Both companies recognize that the AI market is reaching a critical inflection point where enterprises are making long-term platform decisions. The models represent different philosophies: OpenAI prioritizes pure reasoning capability, while Google emphasizes multimodal integration and context length.

Why This Comparison Matters

For developers and organizations, choosing between GPT-5.2 and Gemini 3 has significant implications beyond immediate functionality. API costs can vary by orders of magnitude depending on your usage patterns. Integration complexity differs substantially between the platforms. Long-term vendor relationships and ecosystem lock-in become increasingly important as AI becomes central to business operations.

The competition benefits everyone in the AI ecosystem. Pricing has become more competitive, with both companies offering aggressive rates on their lower tiers. Feature parity in many areas means developers have genuine choice without sacrificing capability. The rapid pace of innovation ensures that whatever you choose today will continue to improve.

Understanding the specific strengths and trade-offs of each model allows you to make informed decisions rather than defaulting to brand familiarity. Throughout this guide, we'll provide concrete data points and specific recommendations to help you navigate this decision effectively.

What's New: GPT-5.2 vs Gemini 3 Key Features

Both models introduce significant upgrades over their predecessors, though the improvements target different capabilities. Understanding these feature differences is crucial for matching the right model to your specific requirements.

GPT-5.2 Feature Highlights

OpenAI's GPT-5.2 brings several notable improvements over previous generations:

Extended Thinking Mode: The GPT-5.2 Thinking tier introduces an extended reasoning capability where the model can spend additional compute time working through complex problems. This proves particularly valuable for mathematical proofs, multi-step coding challenges, and intricate logical reasoning. Users report significantly improved accuracy on problems that previously required chain-of-thought prompting.

Enhanced Tool Calling: The model demonstrates more reliable function calling with reduced hallucination of non-existent parameters. Structured output generation is more consistent, making it easier to integrate GPT-5.2 into production systems that require predictable JSON responses.

400K Token Context Window: While not matching Gemini's 1M context, the 400K input limit represents a significant increase that handles most practical use cases including full-stack codebases and lengthy document analysis.

128K Token Output: The maximum output length has been expanded considerably, enabling longer code generation and more detailed analysis without truncation.

| GPT-5.2 Tier | Input Context | Output Limit | Best Use Case |
|---|---|---|---|
| Instant | 400K | 32K | Quick queries, chatbots |
| Thinking | 400K | 128K | Complex reasoning, coding |
| Pro | 400K | 128K | Maximum capability tasks |

Gemini 3 Feature Highlights

Google's Gemini 3 emphasizes different capabilities:

1 Million Token Context Window: The headline feature enables processing of extraordinarily long documents, entire codebases, or extensive conversation histories in a single context. This opens use cases that were previously impossible, such as analyzing complete repository histories or processing full-length books with detailed queries.

Native Multimodal Input: Gemini 3 processes images, video, and audio natively rather than through add-on models. This means you can upload a video file and ask questions about its content, or provide audio recordings for transcription and analysis within the same API call.

1501 LMArena Elo Rating: On the human preference benchmark, Gemini 3 achieved the highest Elo rating recorded, suggesting strong performance on real-world tasks as judged by human evaluators.

Grounding with Google Search: The model can access real-time information through Google Search integration, providing current data that goes beyond the training cutoff date.

| Gemini 3 Tier | Input Context | Output Limit | Best Use Case |
|---|---|---|---|
| Flash | 1M | 8K | Cost-sensitive applications |
| Pro | 1M | 8K | Balanced performance |
| Deep Think | 1M | 64K | Extended reasoning |

Head-to-Head Feature Comparison

When comparing features directly, the choice often depends on your primary use case:

Context Length Winner: Gemini 3 - The 1M context window is 2.5x the size of GPT-5.2's 400K, making Gemini 3 the clear choice for applications requiring extensive context.

Multimodal Winner: Gemini 3 - Native video and audio processing gives Gemini 3 an edge for applications working with diverse media types.

Reasoning Depth Winner: GPT-5.2 - The extended thinking mode and superior benchmark scores on reasoning tasks favor GPT-5.2 for complex problem-solving.

Output Length Winner: GPT-5.2 - The 128K output limit surpasses Gemini 3's 64K maximum for the Deep Think tier and 8K for standard tiers.
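The context and output limits in the two tier tables can be turned into a quick feasibility check before you commit to a model. The following is a minimal sketch: the `TierLimits` class and `tiers_that_fit` function are illustrative names, and it assumes "K" means 1,000 tokens and "M" means 1,000,000.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierLimits:
    name: str
    max_input_tokens: int
    max_output_tokens: int


# Limits copied from the tier tables above (assumes K = 1,000 and M = 1,000,000).
TIERS = [
    TierLimits("GPT-5.2 Instant", 400_000, 32_000),
    TierLimits("GPT-5.2 Thinking", 400_000, 128_000),
    TierLimits("GPT-5.2 Pro", 400_000, 128_000),
    TierLimits("Gemini 3 Flash", 1_000_000, 8_000),
    TierLimits("Gemini 3 Pro", 1_000_000, 8_000),
    TierLimits("Gemini 3 Deep Think", 1_000_000, 64_000),
]


def tiers_that_fit(input_tokens: int, output_tokens: int) -> list:
    """Return the names of tiers whose limits cover both the prompt and the reply."""
    return [
        tier.name
        for tier in TIERS
        if input_tokens <= tier.max_input_tokens
        and output_tokens <= tier.max_output_tokens
    ]


# A 600K-token prompt with a short answer only fits the Gemini 3 tiers.
print(tiers_that_fit(600_000, 4_000))
```

Note that no tier in the tables covers both a prompt above 400K tokens and a reply above 64K tokens, so a job like that has to be split.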

Benchmark Showdown: Numbers Don't Lie

Benchmark comparisons provide objective data points for evaluating model capabilities. We've compiled results from the most widely respected evaluations to give you a clear picture of where each model excels.

Reasoning Benchmarks

ARC-AGI-2 (Abstract Reasoning Corpus)

This benchmark tests novel problem-solving ability through visual pattern recognition tasks. It's considered one of the most challenging tests of genuine reasoning capability.

- GPT-5.2 Pro: 54.2% (Highest score achieved)

- Gemini 3 Deep Think: 45.1%

- Difference: GPT-5.2 leads by 9.1 percentage points

The ARC-AGI-2 results represent a significant achievement for GPT-5.2. The benchmark is designed to resist memorization and pattern matching, requiring models to demonstrate genuine abstract reasoning. GPT-5.2's 54.2% score exceeds the previous record and suggests meaningful improvements in reasoning capability.

GPQA Diamond (Graduate-Level Science)

This benchmark evaluates performance on PhD-level science questions across physics, chemistry, and biology.

- GPT-5.2 Pro: 93.2%

- Gemini 3 Pro: 84.0% (estimated from available data)

- Difference: GPT-5.2 leads by approximately 9 percentage points

The GPQA Diamond results indicate GPT-5.2's strength in technical and scientific reasoning. This has practical implications for research applications and technical documentation tasks.

Coding Benchmarks

SWE-bench Verified (Software Engineering)

This benchmark tests models' ability to resolve real GitHub issues from popular open-source projects. It's considered the gold standard for evaluating practical coding capability.

- GPT-5.2: 80%

- Gemini 3 Pro: 76.2%

- Difference: GPT-5.2 leads by 3.8 percentage points

Both models demonstrate strong performance on SWE-bench, but GPT-5.2's 80% success rate represents the highest score achieved. This translates to meaningful differences when using these models for automated code generation and bug fixing.

AIME (American Invitational Mathematics Examination)

Mathematical problem-solving benchmark based on competition mathematics questions.

- GPT-5.2 Pro: High performance reported

- Gemini 3 Pro: Competitive performance

Both models show strong mathematical capabilities, though detailed comparison scores are still being verified by independent evaluators.

Human Preference Rankings

LMArena Elo Rating

This crowdsourced benchmark measures human preference in blind comparisons between model outputs.

- Gemini 3: 1501 (Highest recorded)

- GPT-5.2: ~1480 (estimated)

Gemini 3's record-breaking Elo score suggests strong performance on real-world tasks as perceived by human judges. This metric captures aspects of model quality that pure benchmarks might miss, including writing style, helpfulness, and overall response quality.
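To put the Elo gap in perspective, the standard Elo expected-score formula converts a rating difference into a head-to-head preference rate. This is a rough sketch: it assumes the arena ratings behave like classic Elo ratings on a 400-point scale, and it uses the estimated ~1480 figure for GPT-5.2, so the result is indicative only.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected share of head-to-head wins for A over B under the standard Elo formula."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings quoted above: Gemini 3 at 1501, GPT-5.2 at an estimated 1480.
p = elo_expected_score(1501, 1480)
print(f"Gemini 3 preferred in about {p:.1%} of pairings")  # about 53.0%
```

Under these assumptions a 21-point lead corresponds to being preferred in roughly 53 of 100 blind comparisons, a real but narrow edge.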

Benchmark Summary Table

| Benchmark | GPT-5.2 | Gemini 3 | Winner |
|---|---|---|---|
| ARC-AGI-2 | 54.2% | 45.1% | GPT-5.2 |
| SWE-bench | 80% | 76.2% | GPT-5.2 |
| GPQA Diamond | 93.2% | ~84% | GPT-5.2 |
| Context Window | 400K | 1M | Gemini 3 |
| LMArena Elo | ~1480 | 1501 | Gemini 3 |

The benchmark data reveals a clear pattern: GPT-5.2 excels at pure reasoning and coding tasks, while Gemini 3 leads in human preference and offers significantly more context capacity. Your choice should depend on which capabilities matter most for your specific applications.

Coding & Development: Which AI Codes Better?

For developers, coding capability often drives model selection. Both GPT-5.2 and Gemini 3 offer substantial programming assistance, but their approaches and strengths differ in meaningful ways.

Code Generation Quality

GPT-5.2's 80% score on SWE-bench Verified translates to practical advantages in real-world coding scenarios. When generating complex algorithms or debugging intricate issues, GPT-5.2 consistently produces more accurate initial implementations.

Python API Example - GPT-5.2:

```python
from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(
    model="gpt-5.2-thinking",
    messages=[
        {"role": "system", "content": "You are an expert software engineer."},
        {"role": "user", "content": "Implement a rate limiter using the token bucket algorithm."},
    ],
    max_tokens=4096,
)

print(response.choices[0].message.content)
```

Python API Example - Gemini 3:

```python
import google.generativeai as genai

genai.configure(api_key="YOUR_API_KEY")

model = genai.GenerativeModel("gemini-3-pro")
response = model.generate_content(
    "Implement a rate limiter using the token bucket algorithm."
)

print(response.text)
```

Both APIs are straightforward to use, though the specific parameters and configuration differ. GPT-5.2's API maintains backward compatibility with previous OpenAI models, making migration simpler for existing OpenAI users.

Tool Use and Function Calling

GPT-5.2 demonstrates more reliable tool calling behavior, with reduced hallucination of non-existent parameters and more consistent adherence to provided function schemas. This reliability becomes critical in production systems where unexpected model behavior can cause downstream failures.

Gemini 3's tool calling has improved significantly over previous versions, but some developers report occasional inconsistencies when dealing with complex function signatures or multiple tools in a single context.
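Whichever model you use, it is cheap to guard against hallucinated parameters by checking a tool call's arguments against the declared schema before executing it. The sketch below is provider-agnostic and uses only the standard library; the `set_rate_limit` tool and the `validate_tool_args` helper are hypothetical examples, not part of either SDK.

```python
import json

# Hypothetical tool definition written in a JSON-Schema style.
RATE_LIMIT_TOOL = {
    "name": "set_rate_limit",
    "parameters": {
        "type": "object",
        "properties": {
            "requests_per_second": {"type": "number"},
            "burst": {"type": "integer"},
        },
        "required": ["requests_per_second"],
    },
}


def validate_tool_args(tool: dict, raw_arguments: str) -> list:
    """Return a list of problems; an empty list means the call looks safe to run."""
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        return [f"arguments are not valid JSON: {exc.msg}"]
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]

    schema = tool["parameters"]
    allowed = set(schema["properties"])
    # Parameters the model invented that the schema never declared.
    problems = [f"unknown parameter: {name}" for name in sorted(set(args) - allowed)]
    # Parameters the schema requires but the model left out.
    problems += [
        f"missing required parameter: {name}"
        for name in schema.get("required", [])
        if name not in args
    ]
    return problems


print(validate_tool_args(RATE_LIMIT_TOOL, '{"burst": 10, "window": 60}'))
```

A check like this only covers parameter names; a fuller version would also validate value types, or delegate to a JSON Schema library.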

Codebase Analysis

Gemini 3's 1M token context window provides a decisive advantage for codebase-level analysis. You can load an entire medium-sized repository into context and ask questions about architecture, dependencies, or potential issues. GPT-5.2's 400K limit handles most individual file analysis but may require chunking for larger projects.
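When a repository does not fit in a single prompt, chunking can be as simple as greedily packing files into batches under a token budget. This is a minimal sketch under stated assumptions: `estimate_tokens` uses a rough 4-characters-per-token heuristic rather than a real tokenizer, and both function names are illustrative.

```python
def estimate_tokens(text: str, chars_per_token: int = 4) -> int:
    """Very rough token estimate; the 4-characters-per-token ratio is an assumption."""
    return max(1, len(text) // chars_per_token)


def pack_files(files: dict, budget_tokens: int) -> list:
    """Greedily group file paths into batches whose estimated size fits the budget."""
    batches = []
    current = []
    used = 0
    for path, text in files.items():
        size = estimate_tokens(text)
        if size > budget_tokens:
            raise ValueError(f"{path} alone exceeds the budget; split it first")
        # Start a new batch when adding this file would overflow the current one.
        if current and used + size > budget_tokens:
            batches.append(current)
            current, used = [], 0
        current.append(path)
        used += size
    if current:
        batches.append(current)
    return batches


# Three files of roughly 100 tokens each, packed under a 250-token budget.
repo = {"a.py": "x" * 400, "b.py": "x" * 400, "c.py": "x" * 400}
print(pack_files(repo, budget_tokens=250))  # [['a.py', 'b.py'], ['c.py']]
```

In practice you would set the budget somewhat below the model's limit (for example, below 400K for GPT-5.2) to leave room for instructions and the reply.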

Practical Recommendations for Developers:

- Algorithm Implementation: GPT-5.2 Thinking produces more accurate initial implementations for complex algorithms

- Full Codebase Analysis: Gemini 3 Pro with its 1M context enables repository-wide queries

- Code Review: Both perform well; choice depends on existing infrastructure

- Bug Fixing: GPT-5.2's SWE-bench performance suggests an edge for automated debugging

- Documentation Generation: Gemini 3's longer context helps when documenting large codebases

For developers building production systems, the reliability of GPT-5.2's tool calling and its stronger reasoning benchmarks often outweigh Gemini 3's context length advantage for typical use cases. However, if your workflow involves analyzing large codebases or processing extensive documentation, Gemini 3's context window becomes essential.

Pricing Deep Dive: API Costs & Subscription Plans

Understanding the full cost picture requires looking beyond per-token pricing to consider real-world usage patterns. Both OpenAI and Google have structured their pricing with multiple tiers targeting different use cases.

API Pricing Comparison

GPT-5.2 API Pricing (per 1M tokens):

| Tier | Input | Output | Cached Input |
|---|---|---|---|
| GPT-5.2 Instant | $0.50 | $2.00 | $0.25 |
| GPT-5.2 Thinking | $1.75 | $14.00 | $0.88 |
| GPT-5.2 Pro | $21.00 | $168.00 | $10.50 |

Gemini 3 API Pricing (per 1M tokens):

| Tier | Input (≤200K) | Output (≤200K) | Input (>200K) | Output (>200K) |
|---|---|---|---|---|
| Gemini 3 Flash | $0.50 | $3.00 | $1.00 | $6.00 |
| Gemini 3 Pro | $2.00 | $12.00 | $4.00 | $18.00 |
| Gemini 3 Deep Think | $3.00 | $18.00 | $6.00 | $27.00 |
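A per-request cost follows directly from the rate tables. The sketch below is one way to encode them. The function names are illustrative, and two readings of the tables are assumptions: that cached tokens are a subset of input tokens billed at the cached rate, and that Gemini's higher rate applies to the whole request once the prompt exceeds 200K tokens.

```python
# USD per 1M tokens, copied from the pricing tables above.
OPENAI_RATES = {
    "instant": {"input": 0.50, "cached": 0.25, "output": 2.00},
    "thinking": {"input": 1.75, "cached": 0.88, "output": 14.00},
    "pro": {"input": 21.00, "cached": 10.50, "output": 168.00},
}

# (input, output) rates for prompts up to 200K tokens, then for longer prompts.
GEMINI_RATES = {
    "flash": ((0.50, 3.00), (1.00, 6.00)),
    "pro": ((2.00, 12.00), (4.00, 18.00)),
    "deep_think": ((3.00, 18.00), (6.00, 27.00)),
}


def openai_cost(tier: str, input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Cost in USD for one request; cached_tokens is the cached part of input_tokens."""
    rates = OPENAI_RATES[tier]
    fresh_tokens = input_tokens - cached_tokens
    total = (
        fresh_tokens * rates["input"]
        + cached_tokens * rates["cached"]
        + output_tokens * rates["output"]
    )
    return total / 1_000_000


def gemini_cost(tier: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one request, switching rate bands above 200K prompt tokens."""
    short_rates, long_rates = GEMINI_RATES[tier]
    in_rate, out_rate = long_rates if input_tokens > 200_000 else short_rates
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


# A 100K-token prompt with a 10K-token reply on the mid tiers.
print(f"GPT-5.2 Thinking: ${openai_cost('thinking', 100_000, 10_000):.3f}")
print(f"Gemini 3 Pro:     ${gemini_cost('pro', 100_000, 10_000):.3f}")
```

For that example request the two mid tiers land within a cent of each other, which is why the input/output mix of your own traffic matters more than the headline rates.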

Monthly Cost Calculations

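To understand real-world costs, the tables below give approximate monthly figures for three usage levels. The input/output mix behind each figure is not stated, so treat them as rough estimates and recompute from the per-token rates above for your own workload.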

Scenario 1: Light Developer Use (100K tokens/day)

| Provider | Tier | Monthly Cost |
|---|---|---|
| OpenAI | GPT-5.2 Instant | ~$45 |
| OpenAI | GPT-5.2 Thinking | ~$235 |
| Google | Gemini 3 Flash | ~$53 |
| Google | Gemini 3 Pro | ~$210 |

Scenario 2: Production Application (1M tokens/day)

| Provider | Tier | Monthly Cost |
|---|---|---|
| OpenAI | GPT-5.2 Instant | ~$450 |
| OpenAI | GPT-5.2 Thinking | ~$2,350 |
| Google | Gemini 3 Flash | ~$525 |
| Google | Gemini 3 Pro | ~$2,100 |

Scenario 3: Heavy Enterprise Use (10M tokens/day)

| Provider | Tier | Monthly Cost |
|---|---|---|
| OpenAI | GPT-5.2 Thinking | ~$23,500 |
| Google | Gemini 3 Pro | ~$21,000 |
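Per-token rates turn into a monthly figure only once you fix an input/output mix, which the scenarios above do not state. The sketch below assumes a 75/25 input-to-output split and a 30-day month (both illustrative assumptions, as is the helper name `monthly_cost`), so its results will not necessarily match the rounded figures in the tables.

```python
def monthly_cost(
    tokens_per_day: int,
    input_rate: float,
    output_rate: float,
    input_share: float = 0.75,
    days: int = 30,
) -> float:
    """Project a monthly bill in USD from per-1M-token rates.

    input_share is the fraction of all tokens that are prompt tokens;
    the 0.75 default is an assumption, not a measured value.
    """
    monthly_tokens = tokens_per_day * days
    input_tokens = monthly_tokens * input_share
    output_tokens = monthly_tokens - input_tokens
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# 1M tokens/day on GPT-5.2 Thinking ($1.75 in, $14.00 out), under two mixes.
for share in (0.75, 0.50):
    cost = monthly_cost(1_000_000, 1.75, 14.00, input_share=share)
    print(f"input share {share:.0%}: ${cost:,.2f}/month")
```

Running it with your own measured split is more informative than any generic scenario, since output tokens cost several times more than input tokens on every tier listed.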

Cost Optimization Strategies

Both platforms offer mechanisms to reduce API costs:

Prompt Caching: GPT-5.2 provides up to a 50% discount on cached prompts with identical prefixes. This significantly reduces costs for applications with repeated system prompts or context.

Model Routing: Implementing intelligent routing that sends simple queries to cheaper tiers (GPT-5.2 Instant or Gemini Flash) while reserving expensive tiers for complex tasks can reduce costs by 40-60%.

Batch Processing: Both platforms offer discounted rates for asynchronous batch processing, useful for non-time-sensitive workloads.
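Model routing can start as a handful of rules before you invest in anything learned. The sketch below is a hypothetical heuristic router: the keyword list, the 8,000-token threshold, and the 4-characters-per-token estimate are illustrative assumptions, and the returned strings are tier names from this article rather than API model IDs.

```python
from typing import Optional

# Hypothetical hints that a request needs deeper reasoning.
COMPLEX_HINTS = ("prove", "debug", "refactor", "step by step")


def choose_tier(
    prompt: str,
    has_media: bool = False,
    est_input_tokens: Optional[int] = None,
) -> str:
    """Pick a tier name from simple signals: media, prompt size, and keywords."""
    tokens = est_input_tokens if est_input_tokens is not None else len(prompt) // 4

    # Video/audio input or prompts beyond GPT-5.2's 400K window go to Gemini 3 Pro.
    if has_media or tokens > 400_000:
        return "Gemini 3 Pro"
    # Reasoning-heavy or long requests go to the Thinking tier.
    if tokens > 8_000 or any(hint in prompt.lower() for hint in COMPLEX_HINTS):
        return "GPT-5.2 Thinking"
    # Everything else takes the cheapest path.
    return "GPT-5.2 Instant"


print(choose_tier("What are your opening hours?"))
print(choose_tier("Debug this stack trace for me"))
print(choose_tier("Summarize this repository", est_input_tokens=600_000))
```

Log which rule fired for each request so you can measure how much traffic actually reaches the cheap tier and tune the thresholds against real data.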

For teams looking to optimize costs further, API relay services like laozhang.ai provide access to frontier models at competitive rates. These services aggregate demand across users, often achieving 20-40% savings compared to direct API access, which can be substantial at enterprise scale.

Subscription Plans

ChatGPT Plus ($20/month):

- Access to GPT-5.2 across all tiers

- Higher rate limits than free tier

- Priority access during high demand

Gemini Advanced ($20/month):

- Access to Gemini 3 Pro

- 1M token context in consumer interface

- Integration with Google Workspace

Both subscription tiers provide excellent value for individual users who need regular access without managing API keys and billing. For development and production use cases, the API pricing structure typically offers better economics at scale.

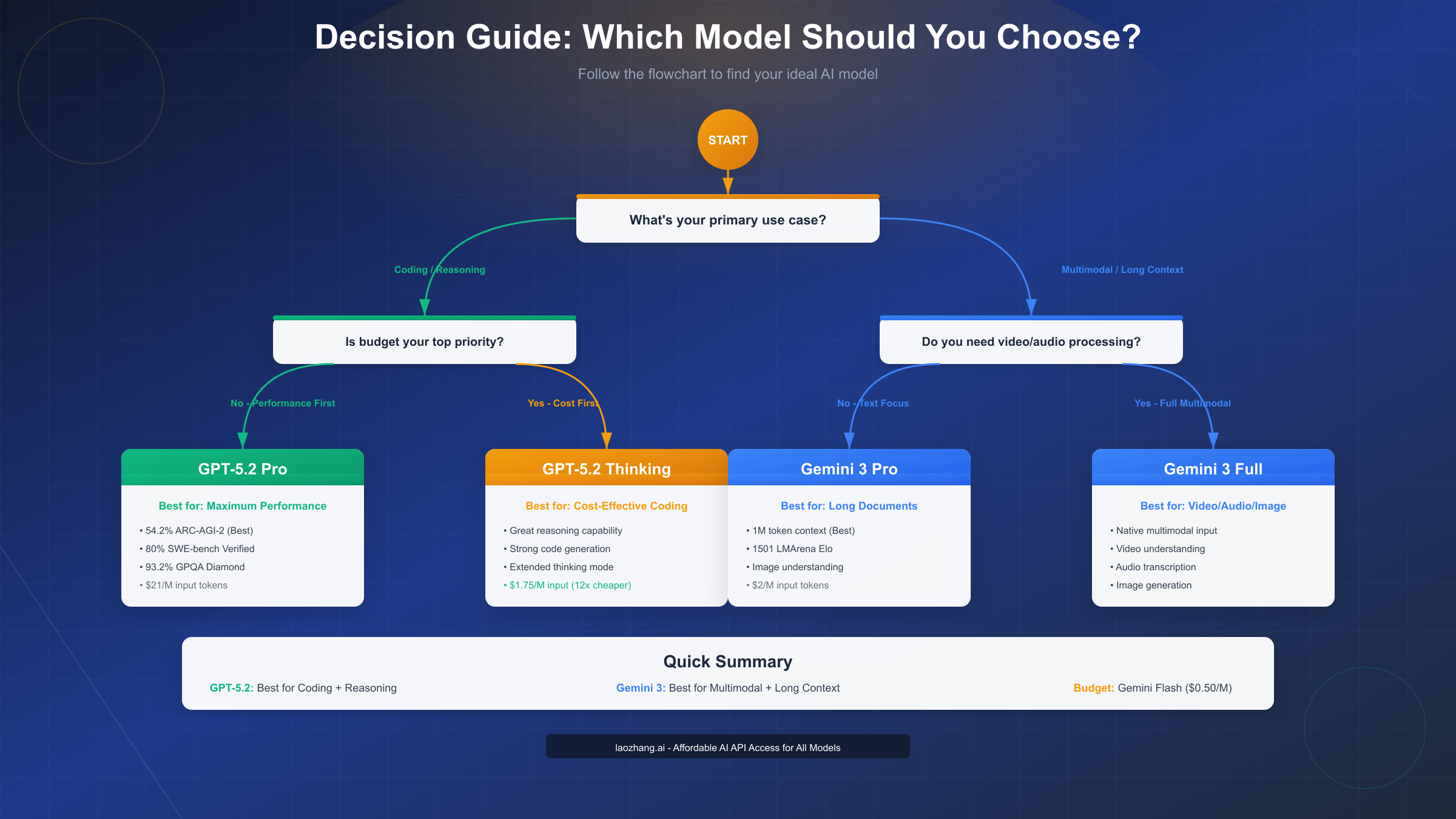

Decision Guide: GPT-5.2 or Gemini 3?

Making the right choice depends on matching model capabilities to your specific requirements. This decision framework helps you navigate the selection process.

Decision Framework

Choose GPT-5.2 if:

Your primary needs fall into these categories:

- Complex Reasoning Tasks: Problems requiring multi-step logical analysis, mathematical proofs, or abstract problem-solving

- Code Generation and Debugging: Automated software development, bug fixing, or code review at scale

- Scientific and Technical Work: Research applications, technical documentation, or domain-specific analysis

- Tool Integration: Applications requiring reliable function calling and structured output

GPT-5.2's benchmark advantages in ARC-AGI-2, SWE-bench, and GPQA Diamond translate to measurable improvements in these use cases. The extended thinking mode in the Thinking tier provides additional capability for problems that benefit from deliberate reasoning.

Choose Gemini 3 if:

Your requirements align with these patterns:

- Long Document Processing: Analyzing complete codebases, legal documents, or book-length content

- Multimodal Applications: Video analysis, audio transcription, or image understanding integrated with text

- Real-Time Information: Applications requiring current data through Google Search grounding

- Cost-Sensitive Production: High-volume applications where Gemini Flash offers better economics

Gemini 3's 1M token context window enables workflows impossible with shorter context models. Native multimodal capabilities eliminate the need for separate vision or audio models.

Persona-Based Recommendations

Individual Developer

If you're working primarily on personal projects or freelance work:

- Budget Priority: Gemini 3 Flash offers a low-cost entry point at $0.50/M input tokens, the same input rate as GPT-5.2 Instant

- Performance Priority: GPT-5.2 Thinking balances capability with reasonable pricing

- Recommendation: Start with Gemini Flash for general use, switch to GPT-5.2 Thinking for complex coding

Startup or Small Team

For teams building products with AI integration:

- Rapid Prototyping: Both platforms offer similar ease of integration

- Production Costs: Calculate expected usage with the cost scenarios above

- Recommendation: Use model routing to combine GPT-5.2 Instant for simple queries with GPT-5.2 Thinking for complex tasks

Enterprise

For organizations with significant AI workloads:

- Compliance: Both offer enterprise agreements with data handling guarantees

- Scale Economics: At enterprise volume, negotiate custom pricing with both providers

- Recommendation: Evaluate both with proof-of-concept projects on your specific use cases

Quick Start Guide

To get started quickly with either model:

GPT-5.2 Quick Start:

- Create an OpenAI account at platform.openai.com

- Generate an API key in the API keys section

- Install the OpenAI Python library:

```bash
pip install openai
```

- Use the code examples from the Coding section above

Gemini 3 Quick Start:

- Access AI Studio at aistudio.google.com

- Create an API key for your project

- Install the Google AI library:

```bash
pip install google-generativeai
```

- Use the code examples from the Coding section above

Both platforms offer free tiers for experimentation, allowing you to test capabilities before committing to paid usage.

Best Use Cases for Each Model

Understanding optimal use cases helps you extract maximum value from each model. Based on benchmark performance and real-world testing, here are specific recommendations for common scenarios.

Use Case Matrix

| Use Case | Recommended Model | Reason |
|---|---|---|
| Algorithm implementation | GPT-5.2 Thinking | 80% SWE-bench score |
| Full codebase analysis | Gemini 3 Pro | 1M token context |
| Math problem solving | GPT-5.2 Pro | 54.2% ARC-AGI-2 |
| Video content analysis | Gemini 3 Pro | Native multimodal |
| Technical documentation | GPT-5.2 Thinking | Strong GPQA Diamond |
| Customer support chatbot | GPT-5.2 Instant | Low latency, cost |
| Research paper summarization | Gemini 3 Pro | Long context handling |
| Code review automation | GPT-5.2 Thinking | Reliable tool calling |
| Image-based queries | Gemini 3 Pro | Native vision |
| Real-time data queries | Gemini 3 Pro | Google Search grounding |

Developer Scenarios

Building an AI Coding Assistant

For a VS Code extension or IDE plugin that helps developers write code:

- Primary Model: GPT-5.2 Thinking for code generation and explanation

- Fallback: GPT-5.2 Instant for quick completions

- Rationale: Superior SWE-bench performance translates to better code suggestions

Document Intelligence Platform

For a system that processes and analyzes large document collections:

- Primary Model: Gemini 3 Pro for document ingestion

- Secondary: GPT-5.2 Thinking for complex analysis queries

- Rationale: 1M context enables whole-document understanding

Multimodal Content Platform

For applications working with video, audio, and text:

- Primary Model: Gemini 3 Pro for content processing

- Rationale: Native multimodal eliminates need for multiple model integrations

Creative and Content Scenarios

Technical Blog Writing

When creating detailed technical content like tutorials or documentation:

- Primary Model: GPT-5.2 Thinking for accurate technical details

- Rationale: Strong performance on technical benchmarks ensures accuracy

Marketing Copy Generation

For generating marketing materials and creative content:

- Primary Model: Gemini 3 Pro for natural language generation

- Rationale: Higher LMArena Elo suggests better human-perceived quality

Enterprise Scenarios

Customer Support Automation

For handling customer inquiries at scale:

- Tier 1 (Simple): Gemini 3 Flash for FAQ responses

- Tier 2 (Complex): GPT-5.2 Thinking for technical issues

- Rationale: Cost optimization through intelligent routing

Data Analysis Pipeline

For automated analysis of business data:

- Primary Model: GPT-5.2 Thinking for analytical reasoning

- Secondary: Gemini 3 Pro for large dataset context

- Rationale: Combine reasoning strength with context capacity

The key insight is that many production systems benefit from combining both models. Using intelligent routing to direct queries to the most appropriate model based on complexity, content type, and cost constraints maximizes value while controlling expenses.

FAQ: Your Questions Answered

Is GPT-5.2 really better than Gemini 3?

Neither model is universally better. GPT-5.2 leads on reasoning benchmarks (54.2% vs 45.1% on ARC-AGI-2) and coding (80% vs 76.2% on SWE-bench). Gemini 3 wins on context length (1M vs 400K tokens), human preference (1501 vs ~1480 Elo), and cost efficiency with its Flash tier. The right choice depends entirely on your specific use case and priorities.

What's the real-world cost difference for production use?

For a production application processing 1M tokens daily, GPT-5.2 Thinking costs approximately $2,350/month while Gemini 3 Pro costs approximately $2,100/month. However, if you can use the budget tiers (GPT-5.2 Instant or Gemini 3 Flash), costs drop to $450-525/month for the same volume. Implementing model routing can reduce costs by 40-60% by sending simple queries to cheaper tiers.

Which model has better coding capabilities?

GPT-5.2 demonstrates stronger coding performance with an 80% score on SWE-bench Verified compared to Gemini 3's 76.2%. For complex algorithm implementation, bug fixing, and code generation, GPT-5.2 typically produces more accurate results. However, Gemini 3's 1M context window enables analysis of complete codebases that wouldn't fit in GPT-5.2's context.

Can I use both models in the same application?

Yes, and many production systems benefit from this approach. Common patterns include using cheaper tiers (GPT-5.2 Instant or Gemini Flash) for simple queries and reserving expensive tiers for complex tasks. You might also use Gemini 3 for long-context needs and GPT-5.2 for reasoning-heavy operations. This requires additional routing logic but can significantly optimize both cost and capability.

What about Claude Opus 4.5?

Claude Opus 4.5 from Anthropic represents the third major player in frontier AI. It offers competitive performance on many benchmarks and excels in certain safety and alignment metrics. For a detailed pricing comparison, see our Claude Opus 4.5 pricing guide. Many organizations evaluate all three models when making platform decisions.

How do I access these models?

GPT-5.2 is available through the OpenAI API (platform.openai.com) and ChatGPT Plus subscription ($20/month). Gemini 3 is available through Google AI Studio (aistudio.google.com) and Gemini Advanced subscription ($20/month). Both offer free tiers with limited usage for experimentation. For comprehensive API documentation covering all major AI models including GPT-5.2 and Gemini 3, check laozhang.ai docs.

Which model is better for enterprise use?

Both offer enterprise agreements with data handling guarantees, compliance certifications, and dedicated support. GPT-5.2 may be preferred for applications requiring reliable tool calling and complex reasoning. Gemini 3 is often chosen for applications needing long context or multimodal capabilities. Evaluate both with proof-of-concept projects on your specific use cases before committing.

Will these models continue to improve?

Yes. Both OpenAI and Google release regular updates improving capability and reducing costs. Models that exist today will likely be superseded within 6-12 months. When building AI-powered products, architect your systems to allow model switching so you can adopt improvements as they become available.
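One way to keep model switching cheap is to hide each provider behind the same small callable and select the active one by name. The sketch below is illustrative: `ModelRegistry` is a hypothetical helper, and the lambda backends are stubs standing in for real API calls.

```python
from typing import Callable, Dict, Optional

CompleteFn = Callable[[str], str]  # prompt in, text out


class ModelRegistry:
    """Keeps named backends behind one call site so the active model is a config choice."""

    def __init__(self) -> None:
        self._backends: Dict[str, CompleteFn] = {}
        self._active: Optional[str] = None

    def register(self, name: str, fn: CompleteFn) -> None:
        self._backends[name] = fn
        if self._active is None:
            self._active = name  # the first registered backend becomes the default

    def use(self, name: str) -> None:
        if name not in self._backends:
            raise KeyError(f"unknown backend: {name}")
        self._active = name

    def complete(self, prompt: str) -> str:
        if self._active is None:
            raise RuntimeError("no backend registered")
        return self._backends[self._active](prompt)


# Stub backends; real ones would wrap each provider's SDK call.
registry = ModelRegistry()
registry.register("gpt-5.2-thinking", lambda prompt: f"[openai] {prompt}")
registry.register("gemini-3-pro", lambda prompt: f"[google] {prompt}")

registry.use("gemini-3-pro")
print(registry.complete("hello"))  # [google] hello
```

Because application code only ever calls `registry.complete`, adopting a newer model becomes a one-line registration plus a configuration change.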

Conclusion: Making Your Choice

The GPT-5.2 vs Gemini 3 decision ultimately comes down to matching model strengths with your specific requirements. After analyzing benchmarks, testing both APIs, and calculating real-world costs, here are our key takeaways:

GPT-5.2 is the better choice when:

- Reasoning accuracy is paramount (54.2% ARC-AGI-2)

- You're building coding tools or automation (80% SWE-bench)

- Reliable tool calling and structured output matter

- You need the absolute highest capability regardless of cost

Gemini 3 is the better choice when:

- You need to process very long documents (1M tokens)

- Your application requires video or audio analysis

- Cost efficiency is a primary concern (Flash tier at $0.50/M)

- Real-time information access through search grounding is valuable

For many production systems, the optimal approach combines both models:

- Route simple queries to budget tiers for cost efficiency

- Direct complex reasoning to GPT-5.2 Thinking

- Use Gemini 3 Pro for long-context and multimodal needs

- Implement fallback logic to handle API availability issues
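The fallback item in the list above can be sketched as an ordered list of backends that are tried in turn, with a short retry and backoff on each. This is a minimal illustration: `complete_with_fallback` is a hypothetical helper, the backends are plain callables standing in for real SDK calls, and production code should catch provider-specific error types rather than every exception.

```python
import time
from typing import Callable, Optional, Sequence


def complete_with_fallback(
    prompt: str,
    backends: Sequence[Callable[[str], str]],
    retries_per_backend: int = 2,
    backoff_seconds: float = 0.5,
) -> str:
    """Try each backend in order; retry with exponential backoff before moving on."""
    last_error: Optional[Exception] = None
    for backend in backends:
        for attempt in range(retries_per_backend):
            try:
                return backend(prompt)
            except Exception as exc:  # broad on purpose for the sketch
                last_error = exc
                # Wait before retrying the same backend, but not before switching.
                if attempt + 1 < retries_per_backend:
                    time.sleep(backoff_seconds * (2 ** attempt))
    raise RuntimeError("all backends failed") from last_error


def unavailable(prompt: str) -> str:
    raise TimeoutError("primary provider is down")


def secondary(prompt: str) -> str:
    return f"answer to: {prompt}"


print(complete_with_fallback("ping", [unavailable, secondary], backoff_seconds=0))
```

Ordering the list by preference (for example, the primary reasoning model first and a cheaper tier last) keeps quality high in the normal case while still returning something during an outage.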

The AI landscape continues to evolve rapidly, with both OpenAI and Google releasing improvements regularly. Whatever choice you make today, architect your systems for flexibility so you can adopt new capabilities as they become available.

Next Steps:

- Start experimenting: Both platforms offer free tiers for testing

- Calculate your expected costs: Use the monthly cost scenarios above with your projected usage

- Build a proof-of-concept: Test on your actual use cases before committing

- Consider model routing: Implement intelligent routing to optimize cost and capability

- Stay informed: Follow updates from both OpenAI and Google for capability improvements

For comprehensive API documentation, cost optimization strategies, and access to both GPT-5.2 and Gemini 3 at competitive rates, check out the resources at laozhang.ai docs.

The frontier AI race benefits everyone by driving down costs and improving capabilities. Whether you choose GPT-5.2, Gemini 3, or a combination of both, you have access to unprecedented AI capability that was unimaginable just a few years ago. The key is making an informed choice that aligns with your specific needs and constraints.

![GPT-5.2 vs Gemini 3: The Ultimate 2025 Comparison Guide [Benchmarks, Pricing, Use Cases]](/posts/en/gpt-52-vs-gemini-3/img/cover.png)