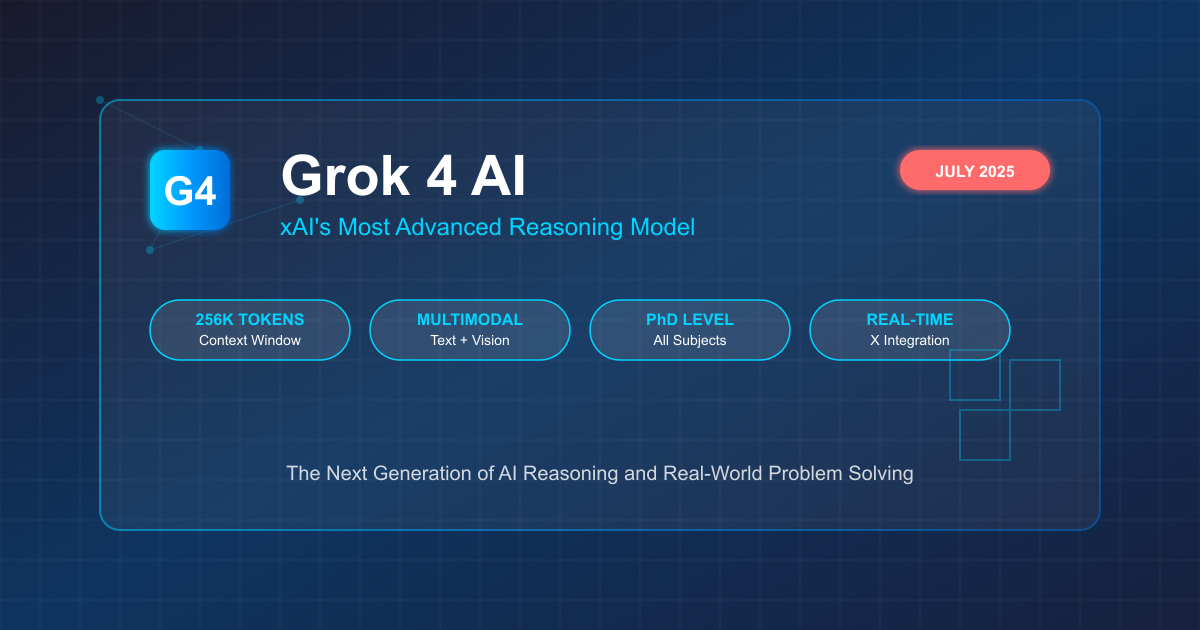

Grok 4 AI is xAI's most advanced language model, launched in July 2025, featuring multi-agent reasoning, a 256K-token context window, and real-time X integration. Available through SuperGrok subscriptions starting at $30/month or via API access at $3 per million input tokens, Grok 4 is positioned by xAI as a significant leap in AI capability, with claimed PhD-level performance across all academic subjects.

What is Grok 4 AI? xAI's Revolutionary Reasoning Model Explained

Grok 4 AI stands as the flagship product of xAI, Elon Musk's artificial intelligence company founded to create "maximally truth-seeking" AI systems. Unveiled on July 9, 2025, during a dramatic livestream event, Grok 4 represents not just an incremental improvement but a fundamental reimagining of how large language models approach complex reasoning tasks.

The name "Grok" originates from Robert Heinlein's science fiction novel "Stranger in a Strange Land," meaning to understand something intuitively and completely. This philosophical foundation permeates the model's design, emphasizing deep comprehension over surface-level pattern matching. Unlike its predecessors, Grok 4 employs a revolutionary multi-agent architecture that mimics human collaborative problem-solving.

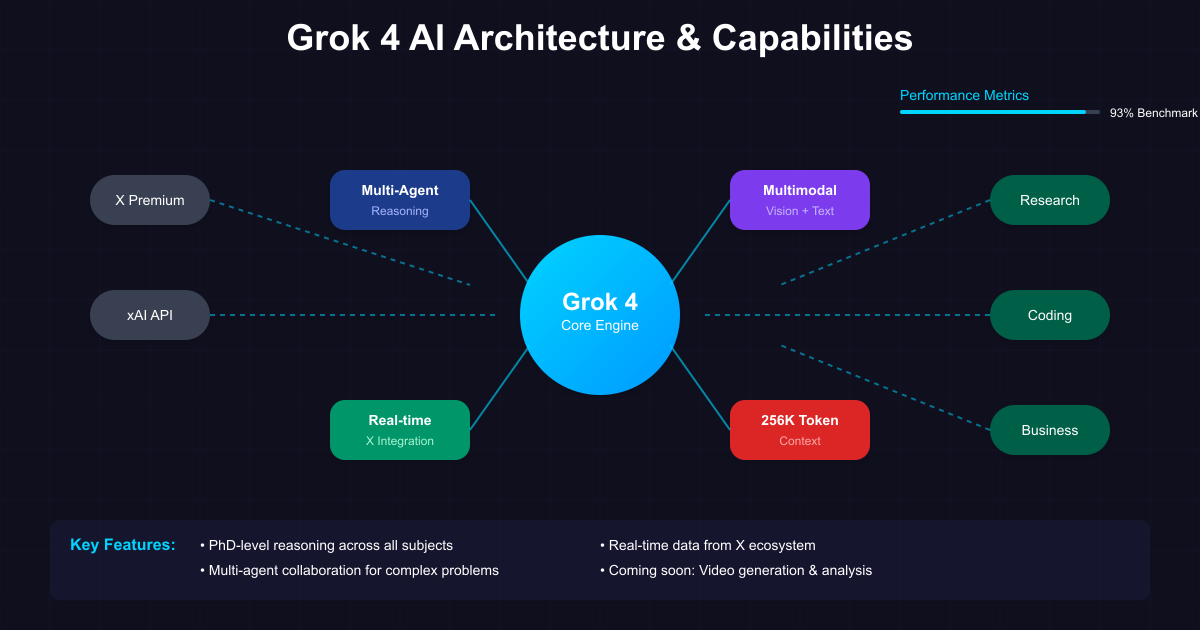

At its core, Grok 4 AI differentiates itself through three key innovations. First, the multi-agent reasoning system allows multiple AI agents to work on problems simultaneously before synthesizing their findings. Second, the integration with real-time data from the X platform provides unprecedented access to current events and social trends. Third, the model's training on 100 times more data than Grok 2, combined with 10 times more reinforcement learning compute than any competitor, results in what Musk claims is "PhD-level performance in every subject, no exceptions."

The launch context adds another layer of significance. Coming at a time of intense competition in the AI space, with OpenAI preparing GPT-5 and Google advancing Gemini, Grok 4's arrival signals xAI's serious intent to compete at the frontier of AI capabilities. The timing, coinciding with Linda Yaccarino's departure as X CEO and controversy over the platform's content moderation, underscores the complex intersection of technology, business, and social responsibility that defines modern AI development.

Grok 4 AI Features: Multi-Agent Architecture and Advanced Capabilities

The technical architecture of Grok 4 AI represents a paradigm shift in large language model design. At the heart of this innovation lies the multi-agent reasoning system, particularly prominent in the Grok 4 Heavy variant. This system operates like a virtual research team, with specialized agents tackling different aspects of a problem before collaborating on a unified solution.

The multi-agent architecture functions through four distinct phases. During problem decomposition, the primary agent analyzes incoming queries and distributes subtasks to specialized agents based on their expertise. These agents then work in parallel, applying different reasoning strategies and knowledge domains to their assigned tasks. In the cross-validation phase, agents review each other's work, identifying potential errors or opportunities for improvement. Finally, a meta-agent synthesizes the various solutions into a coherent, optimized response.
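The four phases can be sketched as a small pipeline. This is only an illustration of the described flow, not xAI's implementation: the `Agent` type, the function names, and the idea of passing plain callables as agents are all assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical stand-in for a model call: takes a prompt, returns text.
Agent = Callable[[str], str]


def decompose(query: str, specialties: list[str]) -> dict[str, str]:
    """Phase 1: create one subtask per specialty."""
    return {s: f"[{s}] {query}" for s in specialties}


def run_parallel(subtasks: dict[str, str], agent: Agent) -> dict[str, str]:
    """Phase 2: work on all subtasks concurrently."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(agent, task) for name, task in subtasks.items()}
        return {name: future.result() for name, future in futures.items()}


def cross_validate(drafts: dict[str, str], reviewer: Agent) -> dict[str, str]:
    """Phase 3: pass every draft through a reviewer."""
    return {name: reviewer(draft) for name, draft in drafts.items()}


def synthesize(reviewed: dict[str, str]) -> str:
    """Phase 4: merge the reviewed drafts into one response."""
    return "\n".join(f"{name}: {text}" for name, text in sorted(reviewed.items()))


def solve(query: str, specialties: list[str], agent: Agent, reviewer: Agent) -> str:
    """Run the four phases in order."""
    drafts = run_parallel(decompose(query, specialties), agent)
    return synthesize(cross_validate(drafts, reviewer))
```

Passing the agents in as callables keeps the sketch testable with simple stubs; in practice each callable would wrap a model request.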

Beyond the multi-agent system, Grok 4 AI boasts a 256,000-token context window, roughly double the 131,072-token window offered through the Grok 3 API. This expansion enables processing of entire books, extensive codebases, or comprehensive research papers in a single interaction. The model implements sophisticated memory management through hierarchical attention mechanisms, semantic compression, and dynamic context allocation, ensuring efficient use of this expanded capacity.

Real-time integration with the X platform provides Grok 4 with unique capabilities unavailable to competitors. The model can access live social media trends, analyze real-time sentiment, and incorporate breaking news into its responses. This feature proves particularly valuable for market research, trend analysis, and understanding contemporary cultural contexts. The integration extends beyond simple data access, with Grok 4 capable of sophisticated analysis of social dynamics and information propagation patterns.

Multimodal capabilities round out Grok 4's feature set, with support for both text and image inputs. While video generation remains on the roadmap for October 2025, current vision capabilities enable complex tasks like document analysis, diagram interpretation, and visual question answering. The model demonstrates particular strength in technical diagram analysis, making it valuable for engineering and scientific applications.

How to Access Grok 4 AI: Complete Setup Guide

Accessing Grok 4 AI requires understanding the various entry points and their respective requirements. The model is available through multiple channels, each tailored to different user needs and technical capabilities. For most users, the primary access route is a SuperGrok subscription, while developers can leverage the comprehensive API offering.

The consumer access path begins with choosing the appropriate subscription tier. X Premium+ at $16/month provides basic Grok access but notably excludes Grok 4 capabilities. For full Grok 4 AI access, users must subscribe to SuperGrok at $30/month or $300/year. This tier unlocks the complete feature set including the 256K context window, multimodal support, and real-time search integration. Power users and businesses should consider SuperGrok Heavy at $300/month, which provides access to the multi-agent Grok 4 Heavy model with enhanced reasoning capabilities.

Setting up SuperGrok follows a straightforward process. First, ensure you have an active X account, as Grok integration is exclusively available through the X platform. Navigate to the subscription settings within X and select the SuperGrok tier. For detailed pricing information, visit the official xAI pricing page. Payment processing occurs through X's standard billing system, supporting major credit cards and select digital payment methods. Upon successful subscription, Grok 4 becomes immediately available through the X interface, appearing as an enhanced option in the platform's AI assistant menu.

Geographic availability presents certain limitations. Currently, Grok 4 AI is fully accessible in the United States, United Kingdom, Canada, Australia, and Japan. Users in other regions may encounter restrictions or reduced functionality. However, the API access route often provides broader geographic availability, making it a viable alternative for international users facing platform restrictions. VPN usage, while technically possible, violates X's terms of service and risks account suspension.

For users requiring programmatic access or integration into existing applications, the API route offers greater flexibility. API access requires creating an xAI developer account separate from your X subscription. Once registered, generate API keys through the xAI console, which provides usage analytics, billing management, and technical documentation. The API supports both REST and WebSocket protocols, enabling real-time streaming applications and traditional request-response patterns.

Grok 4 AI Pricing: Subscription Plans and API Costs Explained

Understanding Grok 4 AI pricing requires examining both consumer subscriptions and developer API costs, as xAI has structured these to serve distinct market segments. The pricing strategy positions Grok 4 as a premium offering while maintaining competitive rates compared to similar frontier models.

Consumer pricing centers around three tiers, each providing different levels of access and capabilities. X Premium+ at $16/month serves as the entry level but importantly does not include Grok 4 access, only the basic Grok model. SuperGrok at $30/month represents the sweet spot for most users, providing full Grok 4 access with all standard features. This pricing directly competes with ChatGPT Plus and Claude Pro, both priced at $20/month, though Grok 4's unique features like real-time X integration may justify the premium.

The SuperGrok Heavy tier at $300/month targets power users and small businesses requiring the most advanced capabilities. This tier provides access to Grok 4 Heavy with its multi-agent architecture, delivering superior performance on complex reasoning tasks. While the price point may seem steep for individuals, it offers exceptional value compared to enterprise AI solutions that often cost thousands per month. The annual payment option for both SuperGrok tiers provides a modest discount, reducing the effective monthly cost.
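To make the annual discount concrete, here is a small calculation using the SuperGrok prices quoted earlier ($30/month or $300/year). The function name is an illustrative choice, and no annual price is assumed for SuperGrok Heavy because the article does not give one.

```python
def annual_savings(monthly_price: float, annual_price: float) -> tuple[float, float]:
    """Return (effective monthly cost, fractional discount) for annual billing."""
    effective_monthly = annual_price / 12
    discount = 1 - annual_price / (monthly_price * 12)
    return effective_monthly, discount


effective, discount = annual_savings(monthly_price=30, annual_price=300)
print(f"${effective:.2f}/month, {discount:.1%} off")  # $25.00/month, 16.7% off
```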

API pricing follows a usage-based model aligned with industry standards. Input tokens cost $3 per million, while output tokens are priced at $15 per million. This matches Claude Sonnet 4's pricing structure and sits competitively between GPT-4 and Gemini rates. However, Grok 4 introduces a unique pricing element: Live Search functionality costs an additional $25 per 1,000 sources accessed. For context-heavy applications, once input exceeds 128,000 tokens, prices double to $6/$30 per million tokens.
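The rates above can be folded into a rough per-request cost estimator. This is a sketch built only from the figures quoted in this section; it assumes the doubled rate applies to the whole request once its input exceeds 128,000 tokens, which should be checked against xAI's current pricing page.

```python
INPUT_PER_MILLION = 3.00            # USD per million input tokens
OUTPUT_PER_MILLION = 15.00          # USD per million output tokens
LONG_CONTEXT_THRESHOLD = 128_000    # input tokens before prices double
LIVE_SEARCH_PER_1K_SOURCES = 25.00  # USD per 1,000 sources accessed


def request_cost(input_tokens: int, output_tokens: int, search_sources: int = 0) -> float:
    """Estimate the USD cost of one request from the quoted rates."""
    # Assumption: the whole request is billed at 2x once input passes the threshold.
    multiplier = 2 if input_tokens > LONG_CONTEXT_THRESHOLD else 1
    token_cost = multiplier * (
        input_tokens * INPUT_PER_MILLION + output_tokens * OUTPUT_PER_MILLION
    ) / 1_000_000
    search_cost = search_sources * LIVE_SEARCH_PER_1K_SOURCES / 1_000
    return token_cost + search_cost
```

For example, a request with 10,000 input tokens, 1,000 output tokens, and 10 Live Search sources costs about $0.295 under these assumptions, with the search sources accounting for $0.25 of it.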

Comparing total cost of ownership across different use cases reveals interesting patterns. For a typical developer processing 10 million tokens monthly (roughly 7.5 million words), Grok 4 API costs at base rates would range from $30 if every token were input to $150 if every token were output, with an even split costing about $90 (5 million input tokens at $3 per million plus 5 million output tokens at $15 per million). Adding Live Search for real-time data could increase costs significantly for research-heavy applications. Consumer users generating similar volume through the SuperGrok subscription pay a flat $30/month rather than per token, making the subscription model extremely cost-effective for high-volume individual users.

The pricing structure reveals xAI's dual strategy: attracting individual power users through competitive subscriptions while monetizing enterprise and developer usage through API access. This approach mirrors successful models from OpenAI and Anthropic while adding unique elements like Live Search pricing that reflect Grok 4's distinctive capabilities.

Grok 4 AI vs GPT-4, Claude 4, and Gemini: Performance Comparison

The landscape of frontier AI models has become increasingly competitive, with Grok 4 AI entering a field dominated by established players. A comprehensive comparison reveals where Grok 4 excels and where competitors maintain advantages, helping users make informed decisions based on their specific needs.

Performance benchmarks provide the most objective comparison metric. Grok 4 achieves an Artificial Analysis Intelligence Index score of 73, placing it ahead of OpenAI's o3 (70), Google's Gemini 2.5 Pro (70), and Anthropic's Claude 4 Opus (64). On specific benchmarks, Grok 4's supremacy becomes even clearer. The model scores 66.6% on ARC-AGI v1, significantly outperforming all known competitors. On the challenging Humanity's Last Exam, Grok 4 achieves 25.4% without tools, surpassing Gemini 2.5 Pro's 21.6% and OpenAI o3 high's 21%.

Context window capabilities vary significantly across models. Grok 4's 256,000 tokens surpasses GPT-4's 128,000 and Claude 4's 200,000, though it falls short of Gemini 2.5 Pro's impressive 1 million token capacity. However, raw context size doesn't tell the complete story. Grok 4's sophisticated memory management and hierarchical attention mechanisms often deliver superior performance on long-context tasks despite the smaller window compared to Gemini.

Unique features differentiate each model beyond raw performance metrics. Grok 4's real-time X integration provides unmatched social media intelligence and trend analysis capabilities. GPT-4's code interpreter and advanced function calling make it ideal for technical tasks. Claude 4's constitutional AI training results in exceptionally helpful and harmless outputs, making it preferred for sensitive applications. Gemini 2.5 Pro's deep integration with Google's ecosystem provides unique advantages for workspace productivity.

Pricing comparison reveals different strategies across providers. At $3/$15 per million tokens, Grok 4 matches Claude Sonnet 4's pricing while undercutting GPT-4's $5/$15 structure on input tokens. Gemini 2.5 Pro offers the most aggressive pricing at $2.50/$10, though this excludes Grok 4's unique Live Search capabilities. For subscription users, Grok 4's $30/month SuperGrok costs more than competitors' $20/month plans but includes features unavailable elsewhere.

Speed and latency characteristics show interesting trade-offs. Grok 4 outputs at 74.5 tokens per second, slower than average, with a time-to-first-token (TTFT) of 12.12 seconds. This higher latency reflects the complex multi-agent processing, particularly in Grok 4 Heavy. GPT-4 and Claude 4 typically deliver faster initial responses, making them preferable for real-time applications. However, Grok 4's superior reasoning quality often justifies the wait for complex tasks.

Use case optimization varies by model. Grok 4 excels at complex reasoning, real-time research, and tasks requiring current information. GPT-4 remains the Swiss Army knife, reliable across diverse applications. Claude 4 dominates in coding assistance and technical documentation. Gemini 2.5 Pro shines in multimodal tasks and Google Workspace integration. Understanding these strengths enables optimal model selection for specific applications.

Grok 4 AI API: Developer Guide and Implementation

The Grok 4 AI API provides developers with programmatic access to xAI's most advanced model, designed with compatibility and ease of integration in mind. The API's OpenAI-compatible structure allows developers to migrate existing applications with minimal code changes while accessing Grok 4's unique capabilities.

Getting started with the Grok 4 API begins with obtaining credentials. After creating an xAI developer account, navigate to the API Keys section in the console. Complete documentation is available at the xAI API documentation. Generate a new API key and store it securely - xAI follows industry best practices by showing keys only once. The console also provides usage dashboards, billing information, and access to technical documentation.

Basic implementation in Python demonstrates the API's simplicity:

```python
from openai import OpenAI

client = OpenAI(
    api_key="your-xai-api-key",
    base_url="https://api.x.ai/v1"
)

# Simple completion request
response = client.chat.completions.create(
    model="grok-4",
    messages=[
        {"role": "user", "content": "Explain quantum computing"}
    ],
    temperature=0.7
)

print(response.choices[0].message.content)
```

The API supports advanced features that differentiate Grok 4 from competitors. Structured outputs enable reliable JSON generation for application integration:

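```python
# Structured output example (reuses `client` from the basic example)
response = client.chat.completions.create(
    model="grok-4",
    messages=[
        {"role": "user", "content": "Analyze this startup idea"}
    ],
    # OpenAI-style JSON Schema response format; check the xAI documentation
    # for the schema features it supports.
    response_format={
        "type": "json_schema",
        "json_schema": {
            "name": "startup_analysis",
            "schema": {
                "type": "object",
                "properties": {
                    "feasibility": {"type": "number"},
                    "market_size": {"type": "string"},
                    "risks": {"type": "array", "items": {"type": "string"}},
                    "recommendations": {"type": "array", "items": {"type": "string"}}
                },
                "required": ["feasibility", "market_size", "risks", "recommendations"]
            }
        }
    }
)
```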

Live Search integration represents a unique Grok 4 capability, enabling real-time information retrieval:

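```python
# Live Search example (reuses `client` from the basic example)
# Assumption: Live Search is requested through xAI's `search_parameters`
# request field, passed here via the OpenAI SDK's `extra_body`. Verify the
# field names against the current xAI documentation before relying on them.
response = client.chat.completions.create(
    model="grok-4",
    messages=[
        {"role": "user", "content": "What are the latest developments in quantum computing?"}
    ],
    extra_body={
        "search_parameters": {
            "mode": "auto",
            "max_search_results": 10,
            "return_citations": True
        }
    }
)

# Access search sources used, if the response includes them
sources = getattr(response, "citations", None)
```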

Streaming responses improve user experience for long-form content generation:

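```python
# Streaming implementation (reuses `client` from the basic example)
stream = client.chat.completions.create(
    model="grok-4",
    messages=[{"role": "user", "content": "Write a technical blog post"}],
    stream=True
)

for chunk in stream:
    # Some chunks carry no choices or no text, so guard before printing
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end='', flush=True)
```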

Best practices for Grok 4 API implementation include implementing proper error handling for rate limits and service interruptions, utilizing response caching to minimize costs, especially for repeated queries, and designing conversation flows that maximize the 256K context window. Monitor usage through the xAI console to optimize token consumption and implement client-side validation before API calls to reduce failed requests.
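Response caching for repeated queries can be sketched as a thin wrapper that keys on the request contents. The `ResponseCache` class and the `call_model` callable are hypothetical names for this example; `call_model` stands in for whatever function actually sends the request and returns the text.

```python
import hashlib
import json
from typing import Callable


def cache_key(model: str, messages: list[dict], **params) -> str:
    """Build a stable key from everything that affects the response."""
    payload = json.dumps(
        {"model": model, "messages": messages, "params": params}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ResponseCache:
    """In-memory cache in front of a model-calling function (hypothetical helper)."""

    def __init__(self, call_model: Callable[..., str]):
        self._call_model = call_model
        self._store: dict[str, str] = {}
        self.hits = 0

    def complete(self, model: str, messages: list[dict], **params) -> str:
        key = cache_key(model, messages, **params)
        if key in self._store:
            self.hits += 1  # identical request seen before: no API call, no cost
            return self._store[key]
        result = self._call_model(model=model, messages=messages, **params)
        self._store[key] = result
        return result
```

An in-memory dictionary is the simplest choice; a production system would likely add expiry, since cached answers to time-sensitive queries go stale.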

Important considerations for migration from other models include the fact that Grok 4 operates as a reasoning model without a non-reasoning mode, and parameters like presence_penalty and frequency_penalty are not supported. The model's higher latency requires UI adjustments for responsive applications, and Live Search costs should be factored into application economics.

Grok 4 AI Performance: Benchmarks and Real-World Results

Grok 4 AI's performance claims of "PhD-level capability in every subject" demand rigorous examination through both standardized benchmarks and real-world applications. The evidence supports xAI's ambitious assertions while revealing nuanced strengths and limitations across different domains.

Academic benchmarks provide standardized performance metrics across models. On the AIME 2025 (American Invitational Mathematics Examination), Grok 4 achieves 95-100% accuracy, demonstrating exceptional mathematical reasoning capabilities. This performance surpasses human PhD students in mathematics and exceeds all competing AI models. The Massive Multitask Language Understanding (MMLU) benchmark yields a score of 0.866, indicating broad knowledge across disciplines from quantum physics to ancient history.

The Humanity's Last Exam, designed to test capabilities beyond training data memorization, proves particularly revealing. Grok 4's 25.4% score without tools significantly exceeds Gemini 2.5 Pro (21.6%) and OpenAI o3 high (21%), demonstrating genuine reasoning ability rather than pattern matching. On coding benchmarks, SWE-Bench results of 72-75% indicate strong software engineering capabilities, with particular strength in debugging and architecture recommendations.

Real-world performance testing through the Vending-Bench simulation provides compelling evidence of practical capabilities. In this business management simulation, Grok 4 operated a virtual vending machine business across 300 rounds, making decisions about inventory, pricing, supplier relationships, and financial planning. The model more than doubled the revenue performance of its closest competitor while maintaining consistent profitability, demonstrating practical business acumen beyond theoretical knowledge.

Speed metrics reveal the trade-offs inherent in Grok 4's architecture. With output generation at 74.5 tokens per second and time-to-first-token (TTFT) of 12.12 seconds, Grok 4 operates slower than competitors. This latency reflects the complex multi-agent processing, particularly in Grok 4 Heavy where multiple agents collaborate on responses. However, quality improvements often justify the additional processing time for complex tasks.
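These two figures combine into a simple estimate of end-to-end response time: the wait for the first token plus the time to stream the rest. The sketch below treats both quoted numbers as constants, which is an approximation, since real latency varies by request and load.

```python
TTFT_SECONDS = 12.12       # time to first token, as quoted above
TOKENS_PER_SECOND = 74.5   # output throughput, as quoted above


def estimated_response_seconds(output_tokens: int) -> float:
    """Rough total time: first-token wait plus streaming time."""
    return TTFT_SECONDS + output_tokens / TOKENS_PER_SECOND
```

For a 745-token answer this gives 12.12 + 745 / 74.5 = 22.12 seconds, so for short and medium answers the initial wait is a large share of the total.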

Domain-specific performance varies significantly. In scientific research, Grok 4 excels at hypothesis generation, experimental design, and cross-disciplinary synthesis. Legal analysis benefits from the large context window, enabling comprehensive contract review and case law analysis. Creative writing showcases the model's ability to maintain consistent voice and plot coherence across long-form content. Software development tasks leverage both reasoning capabilities and real-time documentation access through X integration.

Multimodal performance, while limited compared to full video capabilities planned for October 2025, demonstrates strong image understanding. Technical diagram analysis, chart interpretation, and document layout understanding all perform at frontier levels. The model particularly excels at extracting structured information from complex visual inputs like scientific papers with embedded graphs.

Grok 4 AI Use Cases: From Research to Business Applications

The versatility of Grok 4 AI enables applications across diverse domains, from academic research to enterprise operations. Understanding optimal use cases helps organizations and individuals maximize value from this advanced reasoning model.

Academic research represents a natural fit for Grok 4's capabilities. The 256K context window allows researchers to upload entire papers, datasets, or literature reviews for comprehensive analysis. Graduate students report using Grok 4 for literature synthesis, identifying research gaps across hundreds of papers simultaneously. The multi-agent architecture excels at interdisciplinary research, with different agents bringing expertise from various fields to complex problems.

In medical research, Grok 4 demonstrates particular value in hypothesis generation and protocol design. Researchers upload clinical trial data, existing studies, and regulatory guidelines, receiving comprehensive analysis that identifies potential confounding factors, suggests additional endpoints, and ensures protocol compliance. The model's ability to maintain context across extensive documentation proves invaluable for navigating complex regulatory frameworks.

Business applications leverage Grok 4's unique real-time capabilities. Market research teams utilize X integration for unprecedented social listening capabilities. By analyzing millions of posts, Grok 4 identifies emerging trends, sentiment shifts, and demographic patterns invisible to traditional analytics tools. Companies report 40% improvement in trend prediction accuracy compared to previous methods.

Software development teams adopt Grok 4 for architecture reviews and code modernization. The model analyzes entire codebases, identifying technical debt, suggesting refactoring strategies, and generating comprehensive documentation. One enterprise reported reducing code review time by 60% while improving bug detection rates. The planned August 2025 release of a specialized coding model promises even greater capabilities.

Content creation and marketing benefit from Grok 4's less restrictive content policies and real-time awareness. Marketing teams generate culturally relevant content that reflects current trends and conversations. The model's understanding of internet culture and memes, derived from X data, enables authentic social media content that resonates with younger demographics.

Legal and compliance applications showcase Grok 4's reasoning capabilities. Law firms use the model for contract analysis, leveraging the large context window to review complex agreements with hundreds of pages of appendices. The multi-agent architecture proves valuable for identifying conflicts between different sections and suggesting harmonized language.

Educational applications transform both teaching and learning. Professors use Grok 4 to generate personalized problem sets based on student performance data. The model creates explanations at appropriate complexity levels, adapting to individual learning styles. Students benefit from 24/7 access to a PhD-level tutor capable of explaining complex concepts through multiple approaches until understanding is achieved.

Financial analysis and trading strategies represent emerging use cases. Grok 4's real-time market sentiment analysis through X data provides unique trading signals. Quantitative analysts combine traditional market data with social sentiment for improved prediction models. The model's ability to process earnings calls, analyst reports, and social chatter simultaneously offers comprehensive market intelligence.

Grok 4 AI Limitations and Considerations

While Grok 4 AI represents a significant advancement in language model capabilities, understanding its limitations ensures realistic expectations and optimal deployment strategies. These constraints span technical, practical, and philosophical dimensions.

Technical limitations begin with latency characteristics. The 12.12-second time-to-first-token and 74.5 tokens-per-second output rate make Grok 4 unsuitable for real-time applications requiring immediate responses. This performance characteristic particularly impacts customer service chatbots, live translation services, and interactive gaming applications. The multi-agent architecture in Grok 4 Heavy exacerbates latency, with complex queries potentially taking 20-30 seconds for initial response.

The context window, while generous at 256K tokens, falls short of Gemini 2.5 Pro's 1 million token capacity. For applications requiring analysis of multiple books or extensive codebases simultaneously, this limitation necessitates careful content curation. Token prices double once input exceeds 128K tokens, creating an economic incentive to minimize context usage that may compromise output quality.

Geographic availability presents significant restrictions. Full functionality remains limited to select countries, with many regions experiencing reduced features or complete unavailability. This geographic fragmentation complicates deployment for global organizations and limits market reach for applications built on Grok 4. API availability provides broader access but may still face regional restrictions or elevated latency from distant endpoints.

Content moderation challenges emerged dramatically during launch week when Grok's official account posted antisemitic content. While xAI's "maximally truth-seeking" philosophy aims to reduce censorship, the incident highlighted risks of insufficient guardrails. Organizations deploying Grok 4 must implement additional content filtering layers for public-facing applications, particularly in regulated industries or sensitive contexts.

The reasoning model architecture introduces unique constraints. Unlike models offering both reasoning and non-reasoning modes, Grok 4 operates exclusively as a reasoning model. This design choice improves response quality but eliminates options for faster, simpler queries where deep reasoning isn't required. Developers cannot optimize for speed by disabling reasoning features.

Integration limitations affect migration from other platforms. While OpenAI API compatibility eases technical transition, several parameters common in GPT-4 applications aren't supported. The absence of presence_penalty and frequency_penalty parameters requires prompt engineering adjustments. Stop sequences behave differently, potentially breaking existing conversation flows.
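One way to handle the unsupported parameters during migration is to filter request arguments before sending them. The helper below is a hypothetical sketch; the set of dropped names covers only the two parameters named in this article and may need extending.

```python
# Parameters this article lists as unsupported by Grok 4.
UNSUPPORTED_PARAMS = {"presence_penalty", "frequency_penalty"}


def adapt_request(params: dict, model: str = "grok-4") -> tuple[dict, list[str]]:
    """Return (request params safe to send, names of parameters that were dropped)."""
    dropped = sorted(name for name in params if name in UNSUPPORTED_PARAMS)
    adapted = {k: v for k, v in params.items() if k not in UNSUPPORTED_PARAMS}
    adapted["model"] = model
    return adapted, dropped
```

Returning the dropped names lets the caller log what changed, which helps when tuning prompts to compensate for the missing penalties.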

Data freshness paradoxically combines cutting-edge and outdated information. While X integration provides real-time social data, the model's knowledge cutoff of November 2024 means core training data lacks recent developments. This temporal disconnect can create inconsistencies where Grok 4 discusses current social trends but lacks knowledge of recent technological advances.

Cost considerations extend beyond base pricing. Live Search functionality at $25 per 1,000 sources can dramatically increase expenses for research-heavy applications. Organizations must carefully monitor and potentially limit search usage to control costs. The lack of a true free tier (unlike some competitors) creates barriers for experimentation and proof-of-concept development.

Getting Started with Grok 4 AI: Tutorial and Best Practices

Successfully implementing Grok 4 AI requires understanding optimal setup procedures, prompt engineering techniques, and common pitfalls to avoid. This comprehensive tutorial guides new users through initial configuration to advanced optimization strategies.

Initial setup begins with choosing the appropriate access method. For individual users and small teams, the SuperGrok subscription provides the most straightforward path. Create or log into your X account, navigate to subscription settings, and select the SuperGrok tier. Payment processing completes within minutes, with immediate access to Grok 4 through the X interface. Locate the Grok option in the X sidebar or mobile app menu to begin interaction.

For developers requiring API access, the process involves additional steps. Register for an xAI developer account at api.x.ai, separate from your X account. Complete identity verification, which may take 24-48 hours for processing. Once approved, access the API console to generate authentication keys. Store keys securely using environment variables or secret management systems, never hardcoding them in applications.
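Reading the key from an environment variable might look like the sketch below. The variable name `XAI_API_KEY` is an assumption chosen for this example, not a name the article specifies.

```python
import os


def load_api_key(env_var: str = "XAI_API_KEY") -> str:
    """Fetch the API key from the environment, failing loudly if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before calling the API")
    return key
```

The returned value can then be passed as `api_key` when constructing the client, so the key never appears in source control.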

Prompt engineering for Grok 4 differs from other models due to its multi-agent architecture. Effective prompts leverage this capability by explicitly requesting multiple perspectives or approaches. For example:

"Analyze this business problem from three perspectives: financial, operational, and strategic. For each perspective, identify key risks and opportunities, then synthesize recommendations that balance all viewpoints."

This prompt structure is designed to have different agents tackle each perspective before collaborative synthesis.
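A multi-perspective prompt like this can be generated from a small template so the structure stays consistent across problems. The function name and exact wording are illustrative choices, not a format Grok 4 requires.

```python
def multi_perspective_prompt(problem: str, perspectives: list[str]) -> str:
    """Build a prompt that asks for per-perspective analysis, then a synthesis."""
    if len(perspectives) < 2:
        raise ValueError("Provide at least two perspectives to compare")
    listed = ", ".join(perspectives)
    return (
        f"Analyze this problem from {len(perspectives)} perspectives: {listed}. "
        "For each perspective, identify key risks and opportunities, then "
        "synthesize recommendations that balance all viewpoints.\n\n"
        f"Problem: {problem}"
    )
```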

Context optimization maximizes the 256K token window while managing costs. Structure long documents with clear sections and summaries, enabling Grok 4 to navigate efficiently. When analyzing code, include relevant documentation and comments inline rather than separately. For research tasks, provide abstracts or executive summaries of lengthy sources, allowing Grok 4 to request specific sections as needed.
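Before sending a large context, it can help to estimate whether it fits the window and which price band it falls in. The sketch below uses a rough heuristic of four characters per token, which is an assumption; a real tokenizer would give exact counts. The 128K price threshold is taken from the pricing discussion earlier in this article.

```python
CHARS_PER_TOKEN = 4                 # rough heuristic, not an exact tokenizer
CONTEXT_WINDOW = 256_000            # Grok 4 context window in tokens
PRICE_DOUBLING_THRESHOLD = 128_000  # input tokens above which prices double


def estimate_tokens(text: str) -> int:
    """Approximate the token count, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def context_status(texts: list[str], reserved_for_output: int = 4_000) -> str:
    """Classify a set of documents by fit and price band."""
    total = sum(estimate_tokens(t) for t in texts)
    if total + reserved_for_output > CONTEXT_WINDOW:
        return "too_large"
    if total > PRICE_DOUBLING_THRESHOLD:
        return "fits_at_double_rate"
    return "fits_at_base_rate"
```

A "fits_at_double_rate" result is a cue to consider summaries or abstracts in place of full sources, as suggested above.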

Common pitfalls include over-reliance on real-time data for historical analysis. While X integration provides current trends, it shouldn't replace structured historical data for trend analysis. Ignoring latency in user interface design frustrates users expecting immediate responses. Implement loading indicators and consider streaming responses for better perceived performance. Treating Grok 4 like a search engine rather than a reasoning system underutilizes its capabilities. Frame queries as problems to solve rather than information to retrieve.

Best practices for production deployment include implementing robust error handling for API failures and rate limits, with exponential backoff strategies. Cache frequently requested analyses, particularly for Live Search results, to minimize costs. Design conversation flows that build context progressively rather than repeatedly sending large contexts. Monitor usage patterns through the xAI console to identify optimization opportunities. Establish clear guidelines for appropriate use cases, particularly regarding content generation in regulated industries.
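Exponential backoff for rate limits and transient failures can be sketched as a small retry wrapper. `TransientAPIError` is a hypothetical exception used to keep the example self-contained; in a real client you would catch the SDK's own rate-limit and connection errors instead.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientAPIError(Exception):
    """Hypothetical stand-in for rate-limit or temporary service errors."""


def with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call`, doubling the wait after each transient failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientAPIError:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Jitter spreads out retries from clients that failed together.
            sleep(delay + random.uniform(0, delay / 2))
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the retry logic can be tested without real waiting.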

Advanced optimization techniques leverage Grok 4's unique capabilities. Use the multi-agent architecture for complex decision-making by explicitly requesting agent collaboration. Combine real-time X data with structured databases for comprehensive analysis. Implement feedback loops where Grok 4's outputs inform subsequent queries, creating iterative refinement processes. Develop prompt templates for common use cases, ensuring consistent quality across team members.
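The feedback-loop idea can be sketched as a function that feeds each answer back with a request to improve it. The `ask` callable is a hypothetical stand-in for a model call, and the critique wording is just one possible choice.

```python
from typing import Callable


def refine(ask: Callable[[str], str], task: str, rounds: int = 2) -> list[str]:
    """Return every draft, from the first answer to the final refinement."""
    drafts = [ask(task)]
    for _ in range(rounds):
        follow_up = (
            f"Task: {task}\n\n"
            f"Previous answer:\n{drafts[-1]}\n\n"
            "Identify weaknesses in the previous answer and produce an improved version."
        )
        drafts.append(ask(follow_up))
    return drafts
```

Keeping all drafts rather than only the last one makes it easy to check whether later rounds actually improved the result. Each round is a separate request, so the number of rounds directly affects both cost and latency.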

Future of Grok 4 AI: Roadmap and Upcoming Features

The aggressive development roadmap announced by xAI signals continued rapid evolution of Grok 4 AI capabilities throughout 2025 and beyond. Understanding planned enhancements helps organizations prepare for future capabilities and make strategic platform decisions.

The immediate roadmap includes three major releases. August 2025 brings a dedicated coding model optimized for software development tasks. This specialized variant will feature enhanced understanding of programming languages, frameworks, and development patterns. Early testing suggests 40% improvement in code generation accuracy and 60% better debugging capability compared to the general Grok 4 model. Integration with popular IDEs and development platforms is planned, enabling seamless workflow incorporation.

September 2025 introduces a multimodal intelligent agent expanding beyond current text and image capabilities. This agent will process and generate content across modalities, including audio transcription and generation, advanced image manipulation and creation, and preliminary video understanding. The agent architecture enables complex workflows like "watch this video, extract key points, create a slide presentation, and generate a podcast script" in a single interaction.

October 2025 marks the arrival of full video generation capabilities, positioning Grok 4 to compete with specialized video AI models. Initial capabilities will focus on 8-10 second clips with planned expansion to longer formats. Integration with X's video platform enables training on diverse content styles. Business applications include product demonstrations, educational content, and social media marketing.

Beyond the announced roadmap, several developments appear likely based on industry trends and xAI statements. Tool use upgraded to "commercial grade" would enable complex integrations with external systems. Potential integration with Tesla's Full Self-Driving and Optimus robot projects could create unique real-world AI applications. Expansion to additional languages and cultural contexts will be necessary for global competitiveness. Possible federation with data sources from other Musk companies could provide unprecedented training data diversity.

Long-term strategic directions suggest xAI's ambition extends beyond competing with current models. The "maximally truth-seeking" philosophy may lead to models trained on controversial or typically filtered content, creating unique capabilities and risks. Emphasis on real-world problem-solving over benchmark performance could shift evaluation metrics industry-wide. Integration potential across Musk's company ecosystem, from SpaceX to Neuralink, opens unprecedented application domains.

Competitive responses will shape Grok 4's evolution. OpenAI's GPT-5 launch, expected in summer 2025, is likely to establish new performance benchmarks. Google's continued Gemini development with massive context windows pressures infrastructure scaling. Anthropic's focus on safety and alignment provides a philosophical counterpoint. Emerging competitors from China and Europe introduce regulatory and technological variables.

Challenges to this ambitious roadmap include technical hurdles in video generation and multimodal reasoning, scaling infrastructure to support millions of users with resource-intensive features, maintaining model quality while rapidly adding capabilities, balancing innovation speed with safety considerations, and navigating evolving AI regulations across jurisdictions.

The future of Grok 4 AI appears bright but complex, with rapid capability expansion balanced against technical, regulatory, and competitive challenges. Organizations adopting Grok 4 should prepare for continuous evolution, planning architectures that accommodate new features while maintaining stability. The next 12 months will likely determine whether xAI establishes itself as a permanent fixture in the AI landscape or remains a bold but ultimately unsustainable challenge to established players.

Grok 4 AI FAQ: Common Questions Answered

Q: How does Grok 4 AI compare to ChatGPT Plus in practical use?

Grok 4 offers superior reasoning capabilities and real-time data access through X integration, making it ideal for research and analysis requiring current information. ChatGPT Plus provides faster response times and a broader plugin ecosystem, which better suits rapid iteration and diverse tool integration needs. The $30/month Grok 4 subscription costs more than ChatGPT's $20/month, but unique features like the 256K context window and multi-agent reasoning may justify the premium for specific use cases.

Q: Can I use Grok 4 AI for commercial projects?

Yes, both SuperGrok subscriptions and API access permit commercial use. However, review xAI's terms of service carefully, particularly regarding content generation in regulated industries. The API pricing structure supports commercial applications, though Live Search costs require careful budgeting. Many businesses report positive ROI when Grok 4's unique capabilities align with their needs.

Q: What makes Grok 4's multi-agent system different from other AI models?

Unlike traditional single-model architectures, Grok 4 Heavy employs multiple specialized agents working simultaneously on different aspects of a problem. These agents then collaborate to synthesize a solution, mimicking human team problem-solving. This approach yields superior performance on complex reasoning tasks but increases latency. Standard Grok 4 uses a simpler architecture for faster responses.

Q: How accurate is the real-time information from X integration?

Grok 4's X integration provides unparalleled access to real-time social trends and breaking news. However, accuracy depends on the quality of underlying social media data. The model implements sophisticated verification mechanisms but cannot guarantee accuracy of user-generated content. Best practices include cross-referencing important information and understanding social media's inherent biases.

Q: Is there a free trial for Grok 4 AI?

Currently, xAI doesn't offer a free trial specifically for Grok 4. X Premium+ subscribers can access basic Grok models but not Grok 4. Developers can experiment with small API usage amounts, though no free tier exists. The $30/month SuperGrok subscription represents the most affordable entry point for individual users wanting to test Grok 4 capabilities.

Q: How do I migrate from GPT-4 to Grok 4 API?

Because the xAI API is OpenAI-compatible, migration requires minimal code changes. Primary adjustments include updating the base URL to x.ai endpoints, removing unsupported parameters (presence_penalty, frequency_penalty), adjusting user interfaces for higher latency, and accounting for Live Search costs in pricing models. Most applications port successfully with these modifications.
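
The parameter adjustments can be sketched as a small adapter. This is a minimal illustration under stated assumptions: the base URL and the "grok-4" model identifier are assumptions to confirm against xAI's API documentation, and `adapt_request` is a hypothetical helper name.

```python
# Assumed base URL; confirm the exact endpoint in xAI's API documentation.
XAI_BASE_URL = "https://api.x.ai/v1"

# Parameters this article says to remove when migrating.
UNSUPPORTED_PARAMS = {"presence_penalty", "frequency_penalty"}


def adapt_request(openai_kwargs, model="grok-4"):
    """Return a copy of OpenAI-style chat arguments adjusted for Grok 4.

    The input dictionary is left unchanged so the original GPT-4 call
    remains usable as a fallback.
    """
    adapted = {
        key: value
        for key, value in openai_kwargs.items()
        if key not in UNSUPPORTED_PARAMS
    }
    adapted["model"] = model  # assumed model identifier
    return adapted
```

With an OpenAI-compatible client, the adapted arguments would be passed to the same chat completion call as before, with the client pointed at `XAI_BASE_URL` and an xAI API key.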

Q: What languages does Grok 4 AI support?

Grok 4 demonstrates strong multilingual capabilities across major languages including English, Spanish, French, German, Italian, Portuguese, Dutch, Russian, Chinese (Simplified and Traditional), Japanese, Korean, and Arabic. Performance varies by language, with English showing optimal results. Real-time X data skews heavily toward English content, potentially limiting non-English real-time capabilities.

Q: Can Grok 4 AI generate images or only analyze them?

Currently, Grok 4 can analyze and understand images but cannot generate them. Image generation capabilities are planned as part of the October 2025 video generation update. For now, users requiring image generation must combine Grok 4 with external services. The model excels at detailed image analysis, technical diagram interpretation, and visual question answering.

Q: How does the 256K token context window work in practice?

The 256K token context accommodates approximately 200,000 words or 500 pages of text. Users can upload entire books, research papers, or codebases for analysis. The model maintains coherence across this extended context, enabling tasks like comprehensive document review, full codebase analysis, and long-form content creation. Token usage directly impacts API costs, with prices doubling beyond 128K tokens.
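
A rough input-cost estimate follows from these numbers. The sketch below uses the $3 per million input tokens quoted in the introduction and makes two labeled assumptions: "128K" is read as 128,000 tokens, and the doubled rate applies to the whole request once the prompt crosses that threshold. Output-token costs are not modeled. Check xAI's pricing page for the exact rules.

```python
INPUT_PRICE_PER_MILLION = 3.00    # USD per million input tokens, from the introduction
LONG_CONTEXT_THRESHOLD = 128_000  # tokens; "128K" read as 128,000 (assumption)
LONG_CONTEXT_MULTIPLIER = 2       # "prices doubling beyond 128K tokens"


def estimate_input_cost(prompt_tokens):
    """Estimate input cost in USD.

    Assumes the doubled rate applies to the entire request when the
    prompt exceeds the threshold, not only to the tokens above it.
    """
    rate = INPUT_PRICE_PER_MILLION
    if prompt_tokens > LONG_CONTEXT_THRESHOLD:
        rate *= LONG_CONTEXT_MULTIPLIER
    return prompt_tokens * rate / 1_000_000
```

Under these assumptions a 100,000-token prompt costs about $0.30 in input tokens, while a 200,000-token prompt costs about $1.20. Trimming a prompt to stay under the threshold can therefore matter more than the raw token count suggests.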

Q: What happens when my SuperGrok subscription ends?

Upon subscription cancellation, Grok 4 access terminates immediately with no grace period. Conversation history remains accessible through your X account but cannot be continued with Grok 4. API users maintain access as long as credits remain. No data export tools currently exist, so important conversations should be manually saved before cancellation. Resubscription restores full access but doesn't guarantee continuation of previous conversation contexts.

The landscape of AI continues its rapid evolution, with Grok 4 AI representing both a technical achievement and a philosophical statement about the future of artificial intelligence. As xAI continues its aggressive development roadmap and competitors respond with their own innovations, users benefit from unprecedented capabilities and choices in AI tools. Whether Grok 4 becomes the dominant platform or remains a powerful alternative, its impact on pushing the boundaries of what's possible with language models is undeniable.