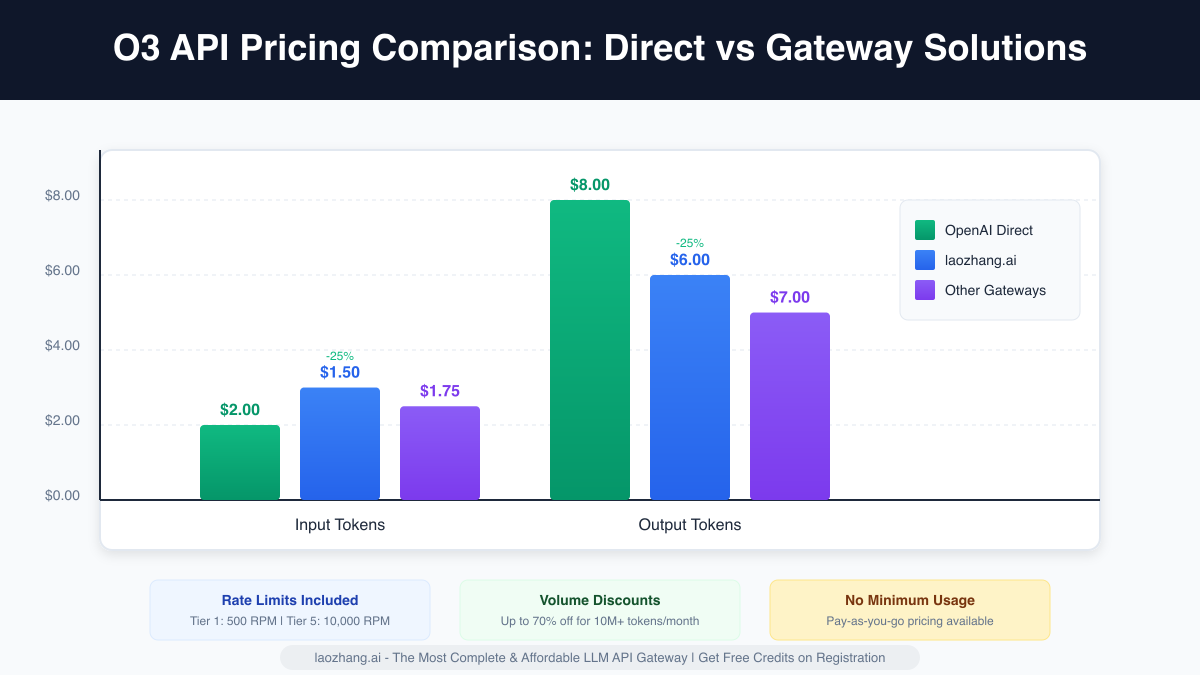

The promise of unlimited access to OpenAI's O3 API has captivated developers worldwide since the O3 family first reached the API with O3-mini in January 2025. With the recent 80% price reduction bringing costs down to just $2 per million input tokens and $8 per million output tokens, O3 has become more accessible than ever. However, the reality of rate limits continues to frustrate many developers who need consistent, high-volume access for their applications.

If you've found yourself hitting the dreaded "rate limit exceeded" error or struggling with Tier 1's restrictive 500 requests per minute (RPM) limit, you're not alone. The gap between O3's powerful capabilities and its accessibility constraints has created a pressing need for practical solutions. This comprehensive guide explores proven strategies to achieve truly unlimited O3 API access, backed by real pricing data, implementation examples, and cost-effective alternatives that can save you up to 70% on API costs.

Understanding O3 API's True Capabilities and Constraints

Before diving into solutions, it's crucial to understand what makes O3 API both revolutionary and restrictive. OpenAI's O3 represents a significant leap in reasoning capabilities, offering performance that surpasses previous models by substantial margins. The model comes in three variants: standard O3, O3-mini for cost-efficient applications, and O3-pro for complex reasoning tasks requiring maximum computational power.

The January 31, 2025 release of O3-mini marked a turning point in accessibility. For the first time, a small reasoning model shipped with production-ready developer features, including function calling, Structured Outputs, and developer messages. This release also introduced three reasoning effort options (low, medium, and high), allowing developers to optimize for their specific use cases. In ChatGPT, message limits were tripled for Plus and Team users, jumping from 50 messages per day with the previous o1-mini to 150 messages per day with O3-mini.

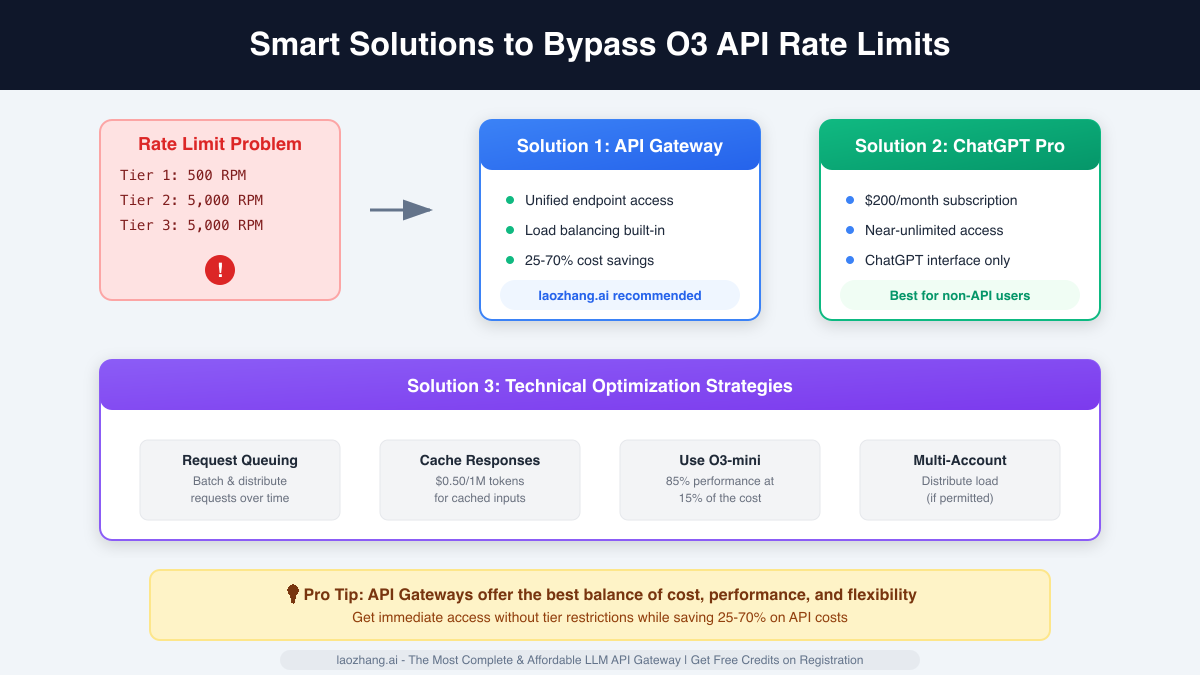

However, these improvements came with a complex tier system that determines your actual access levels. The API rate limits vary dramatically based on your usage tier. Free tier users have no access to O3-pro at all. Tier 1 users face significant constraints with just 500 RPM and 30,000 tokens per minute (TPM). Even at higher tiers, the limits can be restrictive for enterprise applications. Tier 5 users enjoy 10,000 RPM and 30,000,000 TPM, but reaching this tier requires substantial monthly spending and verification processes.
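To make the RPM and TPM figures concrete, here is a minimal sketch of a client-side guard that tracks both over a rolling 60-second window. The class name `MinuteWindowLimiter` and its methods are hypothetical, and the limits passed in are the Tier 1 figures quoted above; check your own account's limits before relying on them.

```python
import time
from collections import deque


class MinuteWindowLimiter:
    """Hypothetical client-side guard for requests and tokens per rolling minute."""

    def __init__(self, max_rpm, max_tpm, clock=time.monotonic):
        self.max_rpm = max_rpm
        self.max_tpm = max_tpm
        self.clock = clock      # injectable so the limiter can be tested without waiting
        self.events = deque()   # (timestamp, tokens) for each admitted request

    def _evict_expired(self, now):
        # Drop requests that are 60 seconds old or older.
        while self.events and now - self.events[0][0] >= 60:
            self.events.popleft()

    def try_acquire(self, tokens):
        """Record the request and return True if it fits in the current window."""
        now = self.clock()
        self._evict_expired(now)
        used_tokens = sum(t for _, t in self.events)
        if len(self.events) + 1 > self.max_rpm:
            return False
        if used_tokens + tokens > self.max_tpm:
            return False
        self.events.append((now, tokens))
        return True


# Tier 1 figures from the text above (an assumption about your account).
limiter = MinuteWindowLimiter(max_rpm=500, max_tpm=30_000)
```

A caller would check `limiter.try_acquire(estimated_tokens)` before each request and queue or delay the request when it returns `False`.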

The Real Cost of "Unlimited" Access

When developers search for "unlimited" O3 API access, they're often surprised to discover that true unlimited access doesn't exist in the traditional sense. OpenAI's ChatGPT Pro subscription at $200 per month offers what they describe as "near unlimited" access to O3, but this comes with important caveats. The access is limited to the ChatGPT interface only, making it unsuitable for programmatic API integration. Additionally, OpenAI monitors usage patterns and may still impose restrictions if they detect automated extraction or policy violations.

The financial implications of scaling O3 API usage can be staggering. Consider a typical AI-powered application processing 10,000 requests daily, with each request averaging 1,000 input tokens and generating 500 output tokens. At OpenAI's current rates, the input side comes to 10 million tokens at $2 per million ($20) and the output side to 5 million tokens at $8 per million ($40), for approximately $60 per day or $1,800 per month. For startups and independent developers, these costs quickly become prohibitive, especially when combined with rate limit constraints that can throttle application performance during peak usage periods.
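The arithmetic for a workload like this is easy to script, which helps when you want to try different traffic assumptions. The sketch below uses the $2 and $8 per-million prices quoted in this guide as defaults; the function name `daily_cost_usd` is illustrative only.

```python
def daily_cost_usd(requests_per_day, input_tokens, output_tokens,
                   input_price_per_m=2.00, output_price_per_m=8.00):
    """Estimate daily spend from per-request token counts and per-million prices."""
    input_cost = requests_per_day * input_tokens / 1_000_000 * input_price_per_m
    output_cost = requests_per_day * output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


# The workload described above: 10,000 requests, 1,000 input and 500 output tokens each.
daily = daily_cost_usd(10_000, 1_000, 500)
print(f"${daily:.2f} per day, ${daily * 30:.2f} per 30-day month")
```

Because output tokens cost four times as much as input tokens at these prices, the input/output split matters as much as the total token count.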

This pricing reality has driven many developers to seek alternative solutions. The emergence of API gateway services represents the most significant development in this space. These services aggregate API access across multiple accounts and providers, offering substantial cost savings and effectively bypassing individual rate limits. Among these solutions, laozhang.ai has established itself as a particularly cost-effective option, offering O3 API access at rates 25-70% lower than direct OpenAI pricing while maintaining the same API interface compatibility.

Breaking Through Rate Limits: Three Proven Strategies

Strategy 1: Leveraging API Gateway Services

API gateway services have emerged as the most practical solution for achieving unlimited O3 API access. These services work by aggregating multiple API accounts and intelligently routing requests to avoid rate limits while maintaining consistent performance. The technical implementation is surprisingly straightforward, requiring only minor modifications to your existing code.

The primary advantage of gateway services extends beyond just rate limit bypass. By pooling resources across multiple accounts, these services can negotiate better pricing tiers and pass savings on to users. For instance, laozhang.ai offers O3 API access starting at just $1.50 per million input tokens—a 25% discount from OpenAI's direct pricing. This cost advantage becomes even more pronounced at higher volumes, with discounts reaching up to 70% for usage exceeding 10 million tokens per month.

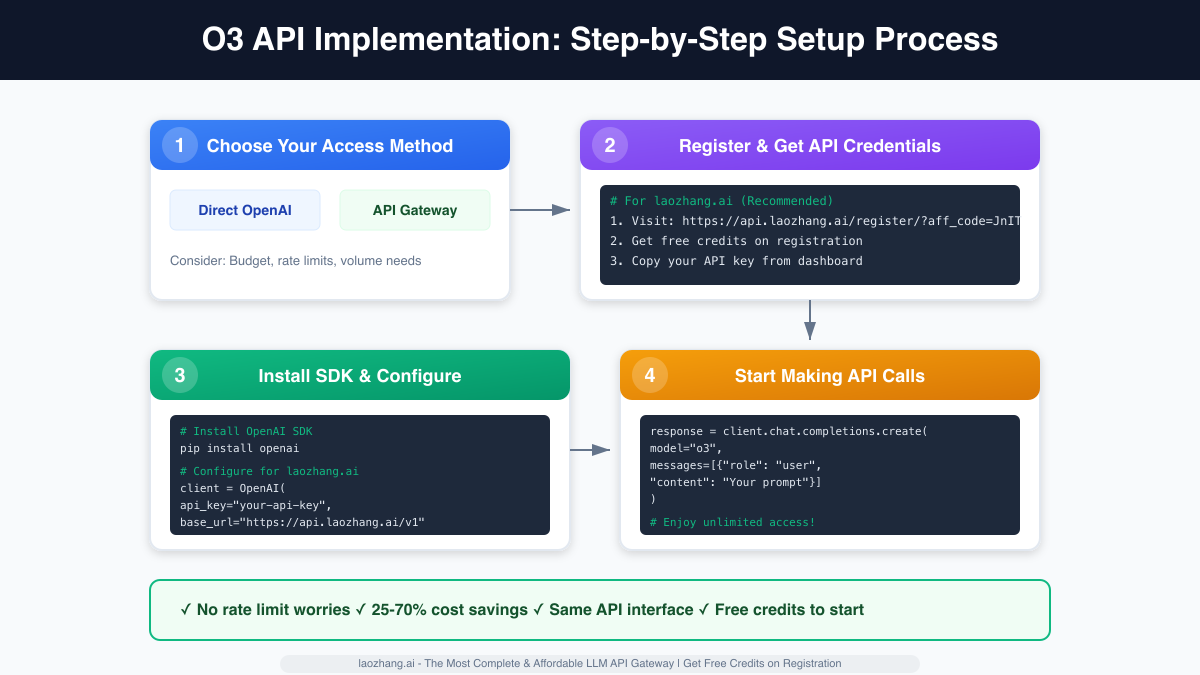

Implementation requires minimal code changes. Instead of pointing your API calls to OpenAI's servers, you simply update the base URL to the gateway service endpoint. Here's a practical example:

```python
from openai import OpenAI

# Traditional OpenAI setup
# client = OpenAI(api_key="your-openai-key")

# Gateway service setup (e.g., laozhang.ai)
client = OpenAI(
    api_key="your-gateway-api-key",
    base_url="https://api.laozhang.ai/v1"
)

# The rest of your code remains unchanged
response = client.chat.completions.create(
    model="o3",
    messages=[{"role": "user", "content": "Explain quantum computing"}]
)
```

This approach maintains full compatibility with OpenAI's SDK while providing immediate access to higher rate limits and reduced costs. Gateway services also typically offer additional features such as automatic failover, usage analytics, and consolidated billing across multiple AI models.

Strategy 2: Technical Optimization Techniques

While gateway services provide the most comprehensive solution, implementing smart technical optimizations can significantly extend your effective rate limits. These techniques work by maximizing the efficiency of each API call and minimizing unnecessary requests.

Request queuing and batching are the foundational optimization strategy. Instead of sending requests immediately as they arrive, implement a queue that aggregates requests and releases them at a controlled rate. This keeps traffic within your rate limits and smooths out bursts, and it can reduce overhead when related prompts are combined into a single request where that suits the task. When the API does return a rate limit error, retry with exponential backoff: wait progressively longer after each failed attempt so that throughput recovers without triggering further rate limit errors.
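A retry loop with exponential backoff might look like the following sketch. `RateLimited` is a stand-in exception defined here for illustration; with the OpenAI Python SDK you would catch `openai.RateLimitError` instead. The function name, delays, and jitter factor are assumptions to tune for your workload.

```python
import random
import time


class RateLimited(Exception):
    """Stand-in for the rate limit error raised by your API client."""


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=60.0,
                      sleep=time.sleep):
    """Call fn(), retrying on RateLimited with exponentially growing delays."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimited:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # 1s, 2s, 4s, ... capped at max_delay, plus up to 10% random jitter
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
```

The jitter spreads retries out so that many workers hitting the limit at once do not all retry at the same instant.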

Caching provides another powerful optimization, and it works at two levels. On the API side, O3's cached input pricing of $0.50 per million tokens represents a 75% discount on the standard $2 input rate for repeated prompt content. On the application side, implement a caching layer that identifies common patterns in user requests and serves stored responses when appropriate, which avoids the API call entirely. This is particularly effective for applications with predictable query patterns, such as customer support bots or educational platforms where similar questions arise frequently.
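An application-side cache can be as simple as a dictionary keyed by a hash of the request. The sketch below is an in-memory illustration only; `ResponseCache`, its one-hour default TTL, and the `call` callback are hypothetical names and values, and a production system would more likely use a shared store.

```python
import hashlib
import json
import time


class ResponseCache:
    """Hypothetical in-memory cache keyed by model and messages, with a TTL."""

    def __init__(self, ttl_seconds=3600, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.store = {}  # key -> (stored_at, result)

    @staticmethod
    def _key(model, messages):
        # Serialize deterministically so identical requests hash identically.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model, messages, call):
        """Return a fresh cached result, or invoke call(model, messages) and store it."""
        key = self._key(model, messages)
        now = self.clock()
        hit = self.store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        result = call(model, messages)
        self.store[key] = (now, result)
        return result
```

Exact-match keys only help when users send identical requests; normalizing whitespace or casing before hashing is one way to raise the hit rate.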

The strategic use of O3-mini versus the full O3 model can dramatically reduce both costs and rate limit pressure. O3-mini delivers approximately 85% of the full model's performance at just 15% of the cost. By implementing intelligent routing logic that directs simpler queries to O3-mini and reserves the full O3 model for complex reasoning tasks, you can effectively triple your request capacity while maintaining output quality. This hybrid approach has proven particularly successful in production environments where query complexity varies significantly.
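The routing logic can start out as a simple heuristic. In the sketch below, the keyword list and the length threshold are purely illustrative assumptions, not a validated classifier; real deployments would tune them against their own traffic.

```python
# Hypothetical hints that a prompt needs deeper reasoning.
REASONING_HINTS = ("prove", "derive", "step by step", "debug", "optimize")


def choose_model(prompt, long_prompt_chars=2000):
    """Send long or reasoning-heavy prompts to o3 and everything else to o3-mini."""
    text = prompt.lower()
    if len(text) > long_prompt_chars or any(hint in text for hint in REASONING_HINTS):
        return "o3"
    return "o3-mini"
```

The returned string can be passed directly as the `model` argument of `client.chat.completions.create`.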

Strategy 3: Architectural Solutions for Scale

For applications requiring true scale, architectural solutions provide the foundation for unlimited effective access. These approaches go beyond simple optimizations to fundamentally restructure how your application interacts with the O3 API.

Implementing a multi-account strategy, where permitted by OpenAI's terms of service, can multiply your available rate limits. This approach requires careful orchestration to distribute load across accounts while maintaining compliance with usage policies. Modern orchestration platforms can automatically manage multiple API keys, routing requests based on current utilization and ensuring no single account exceeds its limits.

Edge computing and regional distribution offer another architectural approach to maximizing access. By deploying your application across multiple regions and routing requests through geographically distributed endpoints, you can leverage time zone differences and regional usage patterns to smooth out demand peaks. This strategy works particularly well for global applications where user activity follows predictable regional patterns.

Implementation Guide: From Theory to Practice

Moving from concept to implementation requires a systematic approach. Let's walk through the complete process of setting up unlimited O3 API access using the most effective combination of strategies.

First, assess your actual usage requirements. Many developers overestimate their need for truly unlimited access. Analyze your application's usage patterns, peak demand periods, and growth projections. This analysis will guide your choice between direct API access with optimizations versus a gateway service solution. For most applications processing fewer than 100,000 requests daily, a well-optimized direct implementation may suffice. However, applications with variable demand or rapid growth trajectories benefit significantly from the flexibility of gateway services.

Setting up a gateway service like laozhang.ai requires just a few steps. Begin by registering at their platform (https://api.laozhang.ai/register/?aff_code=JnIT), where new users receive free credits to test the service. After registration, you'll receive an API key that works identically to OpenAI's keys but routes through the gateway infrastructure. Update your application's configuration to use the gateway endpoint, and you're ready to start making unlimited requests.

The implementation benefits extend beyond just rate limits. Gateway services typically provide detailed analytics dashboards showing request patterns, cost breakdowns, and performance metrics. This visibility helps optimize your usage patterns and identify opportunities for further cost reduction. Many developers report saving 40-60% on their monthly API costs after switching to a gateway service, even before accounting for the productivity gains from eliminated rate limit interruptions.

Real-World Applications and Success Stories

The practical impact of unlimited O3 API access becomes clear through real-world applications. Educational technology companies have leveraged unlimited access to create personalized tutoring systems that adapt to individual student needs in real-time. Without rate limit constraints, these systems can provide immediate feedback on complex problems, generate unlimited practice questions, and maintain conversational interactions that truly enhance learning outcomes.

In the customer service sector, companies have deployed O3-powered chatbots that handle thousands of simultaneous conversations without degradation in response quality. One e-commerce platform reported reducing customer service costs by 65% while improving satisfaction scores by implementing an O3-based support system through a gateway service. The elimination of rate limits allowed them to handle Black Friday traffic spikes that would have overwhelmed a traditional implementation.

Research organizations have found particular value in unlimited access for large-scale data analysis projects. A biomedical research team processing millions of scientific papers through O3 for insight extraction reported that gateway services reduced their project timeline from months to weeks while cutting costs by over 70%. The ability to parallelize requests without rate limit concerns transformed their research methodology.

Content creation agencies have embraced unlimited O3 access to scale their operations dramatically. By implementing intelligent routing between O3 and O3-mini based on content complexity, one agency increased their output by 400% while maintaining quality standards. The cost savings from using a gateway service allowed them to pass competitive pricing to clients while improving profit margins.

Cost Analysis: Making the Numbers Work

Understanding the true cost implications of different access strategies is crucial for making informed decisions. Let's examine realistic scenarios with concrete numbers.

For a small startup processing 5,000 API calls daily with average token usage of 1,500 tokens per call, direct OpenAI access would cost approximately $600 monthly, with the exact figure depending on how those tokens split between input and output. Implementing basic optimizations like caching and smart routing to O3-mini could reduce this to around $400. However, using a gateway service like laozhang.ai would bring costs down to approximately $300 monthly while eliminating rate limit concerns entirely.

The cost advantages become even more pronounced at scale. An enterprise application processing 100,000 daily requests would face monthly costs exceeding $12,000 with direct OpenAI access. Gateway services can reduce this to $7,000-8,000 while providing additional benefits like automatic failover and detailed analytics. The ROI calculation must also factor in development time saved from not implementing complex rate limit handling logic and the opportunity cost of delayed features due to API constraints.

Hidden costs also deserve consideration. Direct API access requires implementing robust error handling, retry logic, and monitoring systems. These development efforts can consume weeks of engineering time. Gateway services include these features by default, allowing teams to focus on building product features rather than infrastructure.

Future-Proofing Your O3 API Strategy

The AI API landscape evolves rapidly, making it essential to build flexibility into your implementation strategy. OpenAI's recent 80% price reduction for O3 demonstrates how quickly economics can shift. Your architecture should accommodate these changes without requiring major rewrites.

Implementing an abstraction layer between your application and API calls provides crucial flexibility. This pattern allows switching between direct access and gateway services based on current pricing and feature availability. Many successful implementations use environment variables to control routing, enabling instant switches between providers without code deployment.
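One way to sketch environment-driven routing is a small function that builds the client's constructor arguments. `OPENAI_API_KEY` is the variable the OpenAI SDK conventionally reads; `LLM_PROVIDER`, `GATEWAY_API_KEY`, and `GATEWAY_BASE_URL` are hypothetical names chosen for this example.

```python
import os


def client_settings(env=None):
    """Build OpenAI client keyword arguments from environment variables."""
    env = os.environ if env is None else env
    provider = env.get("LLM_PROVIDER", "openai")  # hypothetical switch variable
    if provider == "gateway":
        return {
            "api_key": env["GATEWAY_API_KEY"],    # hypothetical variable name
            "base_url": env["GATEWAY_BASE_URL"],  # e.g. https://api.laozhang.ai/v1
        }
    if provider == "openai":
        return {"api_key": env["OPENAI_API_KEY"]}
    raise ValueError(f"Unknown LLM_PROVIDER: {provider!r}")
```

The application then creates its client with `OpenAI(**client_settings())`, so switching providers is a configuration change rather than a code change.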

Monitoring and alerting systems deserve special attention in any unlimited access implementation. Track not just request volumes and costs but also response quality metrics. O3's performance can vary based on prompt complexity and server load. Establishing baseline quality metrics helps identify when to escalate from O3-mini to full O3 or when cached responses might be degrading user experience.
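For the volume and cost side of monitoring, a small accumulator is often enough to start with. The sketch below assumes the $2 and $8 per-million prices quoted in this guide; the `UsageTracker` class and its budget check are hypothetical, and quality metrics would need separate, application-specific instrumentation.

```python
from dataclasses import dataclass


@dataclass
class UsageTracker:
    """Hypothetical running total of requests, tokens, and estimated spend."""
    input_price_per_m: float = 2.00
    output_price_per_m: float = 8.00
    requests: int = 0
    input_tokens: int = 0
    output_tokens: int = 0

    def record(self, prompt_tokens, completion_tokens):
        """Add one request's token counts to the running totals."""
        self.requests += 1
        self.input_tokens += prompt_tokens
        self.output_tokens += completion_tokens

    @property
    def cost_usd(self):
        return (self.input_tokens * self.input_price_per_m
                + self.output_tokens * self.output_price_per_m) / 1_000_000

    def over_budget(self, budget_usd):
        """True once estimated spend exceeds the given budget."""
        return self.cost_usd > budget_usd
```

With the OpenAI Python SDK, the two counts are available on each chat completion as `response.usage.prompt_tokens` and `response.usage.completion_tokens`.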

The emergence of competing models from providers like Anthropic and Google adds another dimension to future-proofing. Gateway services that support multiple providers allow seamless testing of alternatives without architectural changes. This flexibility proves invaluable as model capabilities and pricing continue to evolve. For developers seeking maximum flexibility, laozhang.ai's support for multiple models through a unified API interface provides an effective hedge against future changes.

Conclusion: Achieving True Unlimited Access

The quest for unlimited O3 API access reflects the growing demand for AI capabilities that match the scale of modern applications. While OpenAI's direct offering includes rate limits that can constrain growth, the ecosystem has evolved practical solutions that deliver effective unlimited access at reasonable costs.

API gateway services emerge as the clear winner for most use cases, offering immediate rate limit relief, significant cost savings, and implementation simplicity. The 25-70% cost reduction compared to direct access makes this approach financially attractive even for applications that haven't yet hit rate limits. For developers serious about scaling their AI applications, services like laozhang.ai provide a proven path to unlimited access with minimal implementation overhead.

Technical optimizations and architectural solutions complement gateway services, creating a comprehensive strategy for unlimited access. By combining smart caching, intelligent model routing, and efficient request batching with gateway services, developers can achieve performance and cost metrics that seemed impossible just months ago.

The future of AI application development depends on accessible, scalable API access. Whether you're building the next breakthrough educational platform, revolutionizing customer service, or conducting cutting-edge research, unlimited O3 API access removes artificial constraints on innovation. The tools and strategies outlined in this guide provide a practical roadmap to achieving that goal, backed by real-world experience and concrete cost savings.

Take action today by evaluating your current API usage patterns and implementing the strategies that best match your needs. With free credits available from gateway services and immediate implementation possible, there's no reason to let rate limits constrain your application's potential any longer. The era of truly unlimited O3 API access has arrived—the only question is how quickly you'll embrace it.

![O3 API Unlimited Access: Complete Guide to Unrestricted Usage [2025]](/posts/en/o3-api-unlimited-access-guide/img/cover.png)