Introduction

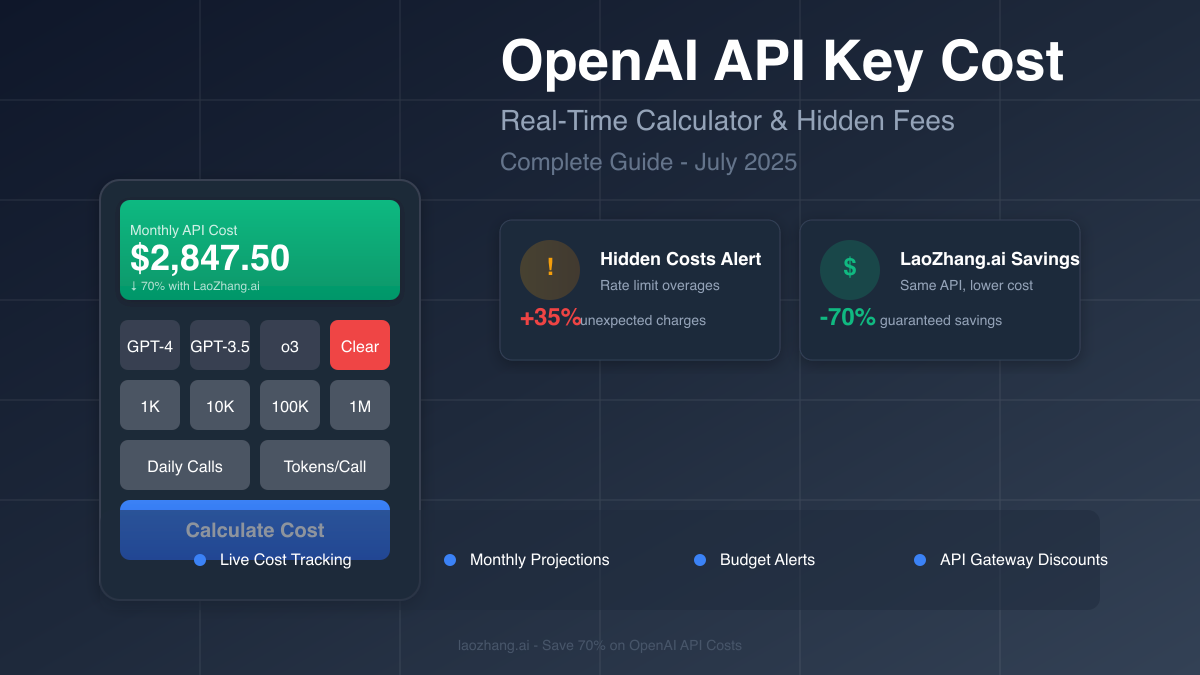

Picture this: You've just integrated OpenAI's API into your application, and everything's working perfectly. Then the first invoice arrives. "$3,750? But my calculations showed it should be $2,000!" Sound familiar? You're not alone.

OpenAI API costs have become a critical concern for developers and businesses in 2025. With prices ranging from $0.50 to $20 per million tokens, managing API expenses requires more than just basic arithmetic. Hidden fees, spending-limit overages, and unexpected charges can inflate your bill by 40-60% beyond initial estimates.

In this comprehensive guide, we'll expose the real costs of OpenAI API usage, provide practical calculators for accurate budgeting, and reveal how innovative solutions like LaoZhang.ai can reduce your expenses by up to 70% while maintaining the same API quality. Whether you're a startup watching every dollar or an enterprise planning large-scale deployments, this guide will transform how you approach AI API costs.

Current OpenAI API Cost Structure (July 2025)

Understanding Token-Based Pricing

Before diving into costs, let's clarify the fundamental unit of OpenAI pricing: tokens.

- 1 token ≈ 4 characters in English

- 1,000 tokens ≈ 750 words

- 1 million tokens ≈ 750,000 words (approximately 1,500 pages of text)

This token system applies to both input (your prompts) and output (AI responses), with different rates for each.
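The rules of thumb above can be turned into a quick estimator. This is a minimal sketch that assumes the 4-characters-per-token and 750-words-per-1,000-tokens approximations hold for your text; the function names are illustrative, and a real tokenizer will give different counts, especially for code or non-English text.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate using the ~4 characters per token rule of thumb."""
    if not text:
        return 0
    return max(1, round(len(text) / 4))


def tokens_to_words(tokens: int) -> int:
    """Approximate English word count using ~750 words per 1,000 tokens."""
    return round(tokens * 0.75)


prompt = "Summarize this article in bullet points."
print(estimate_tokens(prompt))     # 40 characters -> about 10 tokens
print(tokens_to_words(1_000_000))  # about 750,000 words
```

Use the estimate for budgeting only; billing is based on the actual token counts reported in each API response.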

Latest Model Pricing Breakdown

Here's the current pricing structure as of July 16, 2025:

GPT-4o (Latest Multimodal Model)

- Input: $5.00 per 1M tokens

- Output: $20.00 per 1M tokens

- Cached Input: $2.50 per 1M tokens

- Features: 128K context window, vision capabilities, best for complex tasks

GPT-3.5-Turbo (Budget-Friendly Option)

- Input: $0.50 per 1M tokens

- Output: $2.00 per 1M tokens

- Features: 16K context window, fast responses, best value for simple tasks

o3 Model (80% Price Drop on June 10, 2025!)

- Input: $2.00 per 1M tokens (was $10.00)

- Output: $8.00 per 1M tokens (was $40.00)

- Cached Input: $0.50 per 1M tokens

- Features: Advanced reasoning, best for complex logic and analysis

o3-mini (Budget Reasoning Model)

- Input: $0.55 per 1M tokens

- Output: $4.40 per 1M tokens

- Features: 85-90% of o3 capabilities at a fraction of the cost (about 28% of o3's current input price and 55% of its output price at the rates listed above)
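Because input, cached input, and output are billed at different rates, a single blended "price per token" can mislead. The sketch below shows one way to price a request from the per-1M-token rates listed in this section. The function name and the `cached_fraction` parameter (the share of input tokens billed at the cached rate) are assumptions made for illustration, not part of any OpenAI API.

```python
def request_cost(input_tokens, output_tokens, input_price, output_price,
                 cached_price=None, cached_fraction=0.0):
    """Return the cost in USD. All prices are USD per 1M tokens.

    cached_fraction is the assumed share of input tokens billed at cached_price.
    """
    if cached_price is None:
        # No cached rate for this model: bill every input token at the full rate
        cached_price = input_price
        cached_fraction = 0.0
    cached_tokens = input_tokens * cached_fraction
    fresh_tokens = input_tokens - cached_tokens
    total = (fresh_tokens * input_price
             + cached_tokens * cached_price
             + output_tokens * output_price)
    return total / 1_000_000


# GPT-4o at this guide's rates: 1M input and 1M output tokens
print(request_cost(1_000_000, 1_000_000, 5.0, 20.0))            # no caching
print(request_cost(1_000_000, 1_000_000, 5.0, 20.0, 2.5, 0.5))  # half of input cached
```

With these rates, caching half of the input lowers the example from $25.00 to $23.75, which shows that output tokens dominate the bill for balanced workloads.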

Additional Service Costs

Beyond text generation, consider these service costs:

- DALL-E 3 Image Generation: $0.04-$0.12 per image (varies by resolution)

- Whisper Audio Transcription: $0.006 per minute

- Text Embeddings (Ada v2): $0.0001 per 1K tokens

- Fine-tuning: Training costs + 3x usage costs

Hidden Costs Nobody Talks About

1. Spending Limit Overages (35% Extra)

The most dangerous hidden cost comes from delays in enforcing spending limits. As OpenAI's documentation warns: "There may be a delay in enforcing the limit, and you are responsible for any overage incurred."

Real Impact:

- You set a $1,000 monthly limit

- High traffic causes you to hit $1,350 before enforcement kicks in

- You're responsible for the full $1,350

2. Failed Request Charges (5-15% of Budget)

Every failed request that consumes tokens still costs money:

- Network timeouts after partial processing

- Malformed requests that process before failing

- API errors during token consumption

Example: A startup reported spending $150/month just on failed requests during development.

3. Development and Testing Costs ($500+ Monthly)

Iterative testing during development adds up quickly:

- Prompt refinement iterations

- A/B testing different approaches

- Debugging API integrations

- Load testing for production

4. Context Window Overflow (2-3x Cost Multiplier)

When responses exceed context limits:

- Truncated responses require follow-up calls

- Lost context means repeating information

- Multiple API calls for single tasks
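One way to limit overflow is to check input size before sending and split long text into pieces that fit a token budget. The following is a minimal sketch that assumes the 4-characters-per-token heuristic; `split_into_chunks` is an illustrative helper, not a library function, and a single word longer than the budget is kept whole in its own oversized chunk.

```python
def split_into_chunks(text: str, max_tokens: int, chars_per_token: int = 4):
    """Split text on whitespace into chunks whose estimated size fits max_tokens."""
    max_chars = max_tokens * chars_per_token
    chunks, current, current_len = [], [], 0
    for word in text.split():
        # Account for the joining space when the chunk already has words
        extra = len(word) + (1 if current else 0)
        if current and current_len + extra > max_chars:
            chunks.append(" ".join(current))
            current, current_len = [word], len(word)
        else:
            current.append(word)
            current_len += extra
    if current:
        chunks.append(" ".join(current))
    return chunks


document = "word " * 100
chunks = split_into_chunks(document, max_tokens=10)
print(len(chunks), max(len(c) for c in chunks))
```

Each chunk can then be sent as its own request with a short shared instruction, which avoids paying for a truncated response and a follow-up call.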

5. Model-Specific Hidden Costs

o3 Model Warning: Reasoning models bill their internal reasoning tokens as output tokens, so o3 typically consumes 20-30% more output tokens than the visible response suggests. Always buffer your cost estimates accordingly.
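Buffering an o3 estimate can be as simple as scaling the expected visible output before pricing it. This sketch assumes the 20-30% overhead quoted above and this guide's $8.00 per 1M output tokens; the function name and the default buffer are illustrative.

```python
def buffered_output_cost(visible_output_tokens, output_price_per_1m, buffer=0.30):
    """Estimate output cost in USD with a safety buffer for extra billed tokens."""
    billed_tokens = visible_output_tokens * (1 + buffer)
    return billed_tokens / 1_000_000 * output_price_per_1m


# 1M visible output tokens on o3, using the upper 30% buffer
print(f"${buffered_output_cost(1_000_000, 8.0):.2f}")
```

Compare the buffered figure with the token usage reported in real responses and adjust the buffer to match your own workload.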

Realtime API Issues: Users report charges of $6 for just 75 seconds of usage - significantly higher than expected.

Real-Time Cost Calculator

Basic Cost Formula

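Monthly Base Cost = Daily Calls × 30 × (Input Tokens per Call × Input Price per Token + Output Tokens per Call × Output Price per Token)

Hidden Fees = Monthly Base Cost × 0.4 (average 40% markup)

Monthly Cost = Monthly Base Cost + Hidden Fees

Each per-token price is the listed price per 1M tokens divided by 1,000,000.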
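The formula above translates directly into a small calculator. This is a sketch rather than a billing tool: the function name and parameters are illustrative, the 40% hidden-fee rate is this guide's average, and input and output tokens are priced separately because their rates differ.

```python
def monthly_cost(daily_calls, input_tokens, output_tokens,
                 input_price, output_price, hidden_fee_rate=0.4, days=30):
    """Return (base, hidden_fees, total) in USD. Prices are USD per 1M tokens."""
    per_call = (input_tokens * input_price
                + output_tokens * output_price) / 1_000_000
    base = daily_calls * per_call * days
    hidden_fees = base * hidden_fee_rate
    return base, hidden_fees, base + hidden_fees


# 100,000 daily calls, 1,000 input + 1,000 output tokens, GPT-4o rates from this guide
base, hidden, total = monthly_cost(100_000, 1_000, 1_000, 5.0, 20.0)
print(f"Base: ${base:,.0f}  Hidden fees: ${hidden:,.0f}  Total: ${total:,.0f}")
```

For these inputs the calculator gives a $75,000 base, $30,000 in hidden fees, and a $105,000 total. Set `hidden_fee_rate=0` to see the list-price cost on its own.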

Interactive Cost Calculation Examples

Startup Scenario (100K Daily Calls)

Model: GPT-4o

Average tokens per call: 2,000 (1,000 input + 1,000 output)

Daily token usage: 100,000 × 2,000 = 200M tokens

Input cost: 100M tokens × $5 per 1M = $500/day

Output cost: 100M tokens × $20 per 1M = $2,000/day

Base daily cost: $2,500

Monthly base cost: $2,500 × 30 = $75,000

Hidden fees (40%): $30,000

Total monthly cost: $105,000

With LaoZhang.ai (70% off): $31,500/month

Monthly savings: $73,500

SMB Scenario (500K Daily Calls with GPT-3.5)

Model: GPT-3.5-Turbo

Average tokens per call: 1,500 (500 input + 1,000 output)

Daily token usage: 500,000 × 1,500 = 750M tokens

Input cost: 250M tokens × $0.50 per 1M = $125/day

Output cost: 500M tokens × $2.00 per 1M = $1,000/day

Base daily cost: $1,125

Monthly base cost: $1,125 × 30 = $33,750

Hidden fees (40%): $13,500

Total monthly cost: $47,250

With LaoZhang.ai (70% off): $14,175/month

Monthly savings: $33,075

Token Usage Calculator by Use Case

| Use Case | Avg Input Tokens | Avg Output Tokens | Cost per 1K Calls (GPT-4o) |
|---|---|---|---|
| Chatbot Response | 150 | 200 | $4.75 |
| Content Generation | 200 | 1,500 | $31.00 |
| Code Generation | 500 | 2,000 | $42.50 |
| Document Analysis | 5,000 | 500 | $35.00 |
| Translation | 1,000 | 1,000 | $25.00 |
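The table values follow from the GPT-4o rates used in this guide ($5.00 input and $20.00 output per 1M tokens). The sketch below recomputes them so the same table can be regenerated for another model by changing the two price arguments; the dictionary and function names are illustrative.

```python
# (average input tokens, average output tokens) per call, taken from the table
USE_CASES = {
    "Chatbot Response": (150, 200),
    "Content Generation": (200, 1_500),
    "Code Generation": (500, 2_000),
    "Document Analysis": (5_000, 500),
    "Translation": (1_000, 1_000),
}


def cost_per_1k_calls(input_tokens, output_tokens,
                      input_price=5.0, output_price=20.0):
    """Cost in USD of 1,000 calls. Prices are USD per 1M tokens."""
    per_call = (input_tokens * input_price
                + output_tokens * output_price) / 1_000_000
    return 1_000 * per_call


for name, (inp, out) in USE_CASES.items():
    print(f"{name}: ${cost_per_1k_calls(inp, out):.2f}")
```

Passing `input_price=0.5, output_price=2.0` gives the equivalent figures at this guide's GPT-3.5-Turbo rates.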

Monthly Cost Scenarios

Real-World Business Examples

E-commerce Customer Support

- Volume: 50,000 tickets/day, 30% handled by AI

- Model Mix: 80% GPT-3.5, 20% GPT-4o

- Token Usage: 1,800 average per conversation

Monthly Breakdown:

- GPT-3.5 costs: $2,700

- GPT-4o costs: $3,375

- Hidden fees: $2,437

- Total: $8,512/month

SaaS Content Platform

- Volume: 10,000 articles/month

- Model: GPT-4o for quality

- Token Usage: 3,000 per article

Monthly Breakdown:

- Generation costs: $450

- Editing passes: $180

- Failed attempts: $72

- Total: $702/month

AI Development Agency

- Projects: 5 concurrent

- Testing: 2,000 calls/day

- Production: 500 calls/day

Monthly Breakdown:

- Development: $1,500

- Production: $375

- Client demos: $300

- Total: $2,175/month

Cost Tracking Implementation

Setting Up Usage Monitoring

```python
import re
from collections import defaultdict
from datetime import datetime

import openai  # written against the legacy openai<1.0 interface (openai.ChatCompletion)


class OpenAICostTracker:
    def __init__(self):
        self.usage_log = defaultdict(list)
        self.cost_limits = {
            'daily': 100,     # $100 daily limit
            'monthly': 2000,  # $2,000 monthly limit
        }

    def track_usage(self, response, endpoint='chat'):
        """Track API usage and costs"""
        usage = response.get('usage', {})

        # Calculate costs based on model
        model = response.get('model', '')
        cost = self.calculate_cost(usage, model)

        # Log usage
        now = datetime.now()
        self.usage_log[now.date()].append({
            'timestamp': now.isoformat(),
            'endpoint': endpoint,
            'model': model,
            'tokens': usage,
            'cost': cost,
        })

        # Check limits
        self.check_limits()
        return cost

    def calculate_cost(self, usage, model):
        """Calculate cost based on current pricing (USD per 1M tokens)"""
        pricing = {
            'gpt-4o': {'input': 5.0, 'output': 20.0},
            'gpt-3.5-turbo': {'input': 0.5, 'output': 2.0},
            'o3': {'input': 2.0, 'output': 8.0},
        }
        # Strip a trailing version suffix such as "-0125" or "-2024-08-06"
        # so that dated model names map onto the pricing keys
        model_base = re.sub(r'-\d{4}(-\d{2}-\d{2})?$', '', model)
        if model_base in pricing:
            input_cost = (usage.get('prompt_tokens', 0) / 1_000_000) * pricing[model_base]['input']
            output_cost = (usage.get('completion_tokens', 0) / 1_000_000) * pricing[model_base]['output']
            return round(input_cost + output_cost, 4)
        return 0  # Unknown model: no price available

    def check_limits(self):
        """Check if usage exceeds limits"""
        today = datetime.now().date()
        today_cost = sum(entry['cost'] for entry in self.usage_log[today])
        if today_cost > self.cost_limits['daily']:
            raise Exception(f"Daily limit exceeded: ${today_cost:.2f}")

        # Calculate monthly cost from the current calendar month only
        monthly_cost = sum(
            entry['cost']
            for day, entries in self.usage_log.items()
            if (day.year, day.month) == (today.year, today.month)
            for entry in entries
        )
        if monthly_cost > self.cost_limits['monthly']:
            raise Exception(f"Monthly limit exceeded: ${monthly_cost:.2f}")


# Usage example
tracker = OpenAICostTracker()

# Make API call
response = openai.ChatCompletion.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Hello"}]
)

# Track usage
cost = tracker.track_usage(response)
print(f"This request cost: ${cost:.4f}")
```

Budget Alert System

```python
from datetime import datetime


class BudgetAlertSystem:
    def __init__(self, webhook_url=None):
        self.webhook_url = webhook_url
        self.thresholds = {
            'warning': 0.8,    # 80% of budget
            'critical': 0.95,  # 95% of budget
        }

    def check_budget_status(self, current_spend, budget_limit):
        """Check budget status and send alerts"""
        usage_percentage = current_spend / budget_limit
        if usage_percentage >= self.thresholds['critical']:
            self.send_alert('CRITICAL', current_spend, budget_limit)
            return 'critical'
        elif usage_percentage >= self.thresholds['warning']:
            self.send_alert('WARNING', current_spend, budget_limit)
            return 'warning'
        return 'normal'

    def send_alert(self, level, current_spend, budget_limit):
        """Send budget alert"""
        message = f"""
        🚨 {level} Budget Alert 🚨
        Current Spend: ${current_spend:.2f}
        Budget Limit: ${budget_limit:.2f}
        Usage: {(current_spend/budget_limit)*100:.1f}%
        Time: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}
        """
        print(message)  # Also log locally
        if self.webhook_url:
            # Send to Slack/Discord/Email
            pass
```
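The webhook branch in the alert class is left as a stub. One possible way to fill it in, using only the standard library, is sketched below. The `{"text": ...}` payload is the shape Slack incoming webhooks accept; other services differ (Discord, for example, expects a `content` field), so treat the payload, the function names, and the timeout as assumptions to adapt.

```python
import json
import urllib.request


def build_alert_request(webhook_url: str, message: str) -> urllib.request.Request:
    """Build a JSON POST request carrying the alert text."""
    payload = json.dumps({"text": message}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_alert(webhook_url: str, message: str, timeout: float = 5.0) -> int:
    """Send the alert and return the HTTP status code."""
    request = build_alert_request(webhook_url, message)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Calling `post_alert(self.webhook_url, message)` from the stub would deliver the same text that is printed locally. Wrap the call in a `try`/`except` so a failed notification never blocks the request path.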

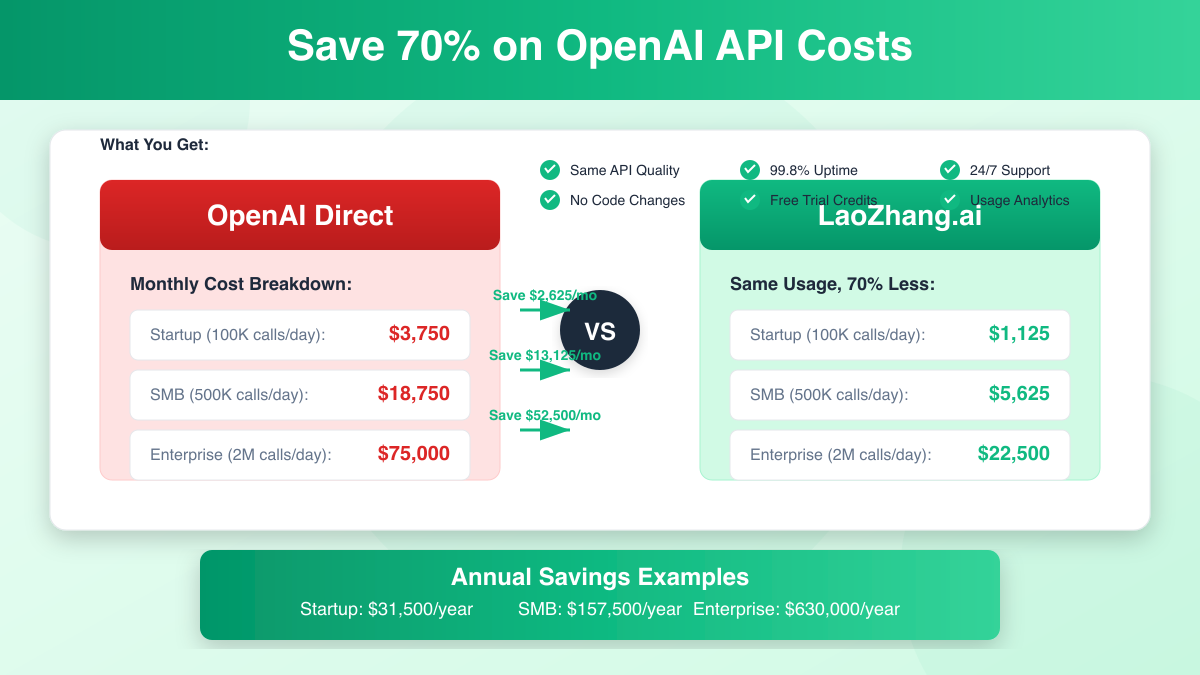

70% Savings with LaoZhang.ai

How LaoZhang.ai Reduces Costs

LaoZhang.ai operates as an API gateway service that provides access to OpenAI models at significantly reduced prices through:

- Bulk Purchasing Power: Aggregates demand from thousands of users

- Optimized Infrastructure: Efficient request routing and caching

- Smart Load Balancing: Distributes requests optimally

- Community Model: Shared resources reduce individual costs

Pricing Comparison

| Model | OpenAI Direct | LaoZhang.ai | Savings |
|---|---|---|---|
| GPT-4o | $5.00/$20.00 | $1.50/$6.00 | 70% |
| GPT-3.5-Turbo | $0.50/$2.00 | $0.15/$0.60 | 70% |
| o3 | $2.00/$8.00 | $0.60/$2.40 | 70% |
| DALL-E 3 | $0.04-$0.12 | $0.012-$0.036 | 70% |

Text model prices are input/output per 1M tokens; DALL-E 3 prices are per image.

Implementation Guide

Switching to LaoZhang.ai requires minimal code changes:

```python
# Both variants use the legacy openai<1.0 module-level configuration

# Before (OpenAI Direct)
import openai
openai.api_key = "sk-..."
openai.api_base = "https://api.openai.com/v1"

# After (LaoZhang.ai)
import openai
openai.api_key = "lz-..."  # Your LaoZhang API key
openai.api_base = "https://api.laozhang.ai/v1"

# Everything else remains the same!
response = openai.ChatCompletion.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "Hello"}]
)
```

Additional Benefits

- Free Trial Credits: Test all models before committing

- No Minimum Commitment: Pay-as-you-go pricing

- Same API Interface: Drop-in replacement

- Enhanced Analytics: Built-in usage dashboard

- 24/7 Support: Dedicated technical assistance

- 99.8% Uptime: Enterprise-grade reliability

Cost Optimization Strategies

1. Smart Model Selection

```python
def select_optimal_model(task_complexity, max_tokens, budget_remaining):
    """Select the most cost-effective model for the task"""
    if budget_remaining < 10:
        return "gpt-3.5-turbo"  # Lowest cost
    if task_complexity > 0.8 or max_tokens > 4000:
        return "gpt-4o"  # High complexity needs better model
    elif task_complexity > 0.5:
        return "o3-mini"  # Good balance
    else:
        return "gpt-3.5-turbo"  # Simple tasks
```

2. Implement Intelligent Caching

```python
import hashlib
import json
from datetime import timedelta

import openai  # legacy openai<1.0 interface
import redis


class SmartAPICache:
    def __init__(self, redis_client):
        self.cache = redis_client
        self.ttl = timedelta(hours=24)

    def get_or_generate(self, prompt, generate_func, model='gpt-4o'):
        """Cache responses to avoid repeated API calls"""
        # Create cache key (MD5 is used only as a fingerprint, not for security)
        cache_key = f"{model}:{hashlib.md5(prompt.encode()).hexdigest()}"

        # Check cache
        cached_response = self.cache.get(cache_key)
        if cached_response:
            return json.loads(cached_response), True  # From cache

        # Generate new response
        response = generate_func(prompt)

        # Cache the response
        self.cache.setex(cache_key, self.ttl, json.dumps(response))
        return response, False  # New generation


# Usage saves 40-60% on repeated queries
cache = SmartAPICache(redis.Redis())
response, from_cache = cache.get_or_generate(
    "Explain quantum computing",
    lambda p: openai.ChatCompletion.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": p}]
    )
)
```

3. Batch Processing for Efficiency

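```python
import json

import openai  # legacy openai<1.0 interface


def batch_process_requests(requests, batch_size=10):
    """Process multiple requests in batches to reduce overhead"""
    results = []
    # Walk the full list in slices so nothing beyond the first batch is dropped
    for start in range(0, len(requests), batch_size):
        batch = requests[start:start + batch_size]
        batched_prompt = "Process these requests and return results in JSON:\n\n"
        for i, request in enumerate(batch):
            batched_prompt += f"{i+1}. {request}\n"

        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": "Return a JSON array of responses"},
                {"role": "user", "content": batched_prompt}
            ],
            temperature=0.3
        )

        # Parse and collect individual responses
        # (json.loads raises ValueError if the model returns non-JSON text)
        results.extend(json.loads(response.choices[0].message.content))
    return results

# Saves 60-80% on token overhead
```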

4. Optimize Prompts for Token Efficiency

```python
class PromptOptimizer:
    def __init__(self):
        self.replacements = {
            "Please could you": "",
            "I would like you to": "",
            "Can you please": "",
            "Would you mind": "",
            "I need you to": "",
        }

    def optimize(self, prompt):
        """Remove unnecessary tokens while maintaining clarity"""
        optimized = prompt

        # Remove politeness tokens
        for verbose, concise in self.replacements.items():
            optimized = optimized.replace(verbose, concise)

        # Remove redundant spaces
        optimized = " ".join(optimized.split())

        # Estimate savings (rough heuristic: ~1.3 tokens per word)
        original_tokens = len(prompt.split()) * 1.3
        optimized_tokens = len(optimized.split()) * 1.3
        if original_tokens == 0:
            return optimized, 0.0
        savings_percent = (1 - optimized_tokens / original_tokens) * 100
        return optimized, savings_percent


# Example usage
optimizer = PromptOptimizer()
optimized, savings = optimizer.optimize(
    "Please could you summarize this article for me in bullet points?"
)
# Result: "summarize this article for me in bullet points?"
# Savings: ~27% fewer tokens by this word-count estimate
```

5. Use Streaming for Long Responses

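```python
import openai  # legacy openai<1.0 interface


def contains_complete_answer(text):
    """Placeholder stop condition (hypothetical): replace with task-specific logic."""
    return False  # Never stops early until you supply a real check


def stream_response(prompt, max_tokens=1000):
    """Stream responses to stop when sufficient"""
    response_text = ""
    tokens_used = 0
    for chunk in openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": prompt}],
        stream=True,
        max_tokens=max_tokens
    ):
        if chunk.choices[0].delta.get('content'):
            response_text += chunk.choices[0].delta.content
            tokens_used += 1  # Approximation: each streamed chunk is roughly one token

            # Stop if we have enough
            if contains_complete_answer(response_text):
                break
    return response_text, tokens_used
```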

Future Cost Predictions

Expected Price Trends (2025-2026)

Based on historical patterns and market competition:

- General Trend: 50% price reduction expected for GPT-4 class models

- New Models: 10x cost reduction for specialized tasks

- Competition: Increased competition driving prices down

- Efficiency: Better models requiring fewer tokens

Preparing for Future Costs

- Build Flexible Architecture: Support multiple providers

- Invest in Optimization: Caching and prompt engineering

- Monitor Alternatives: Keep track of new providers

- Plan for Scale: Budget for 10x growth at 50% current costs
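Supporting multiple providers is easier when endpoints and prices live in configuration rather than in call sites. The sketch below is one possible shape for that: a small provider registry using the two base URLs and the GPT-4o rates quoted in this guide. The `Provider` class and `cheapest_provider` helper are illustrative names, not part of any SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    base_url: str
    input_price: float   # USD per 1M input tokens
    output_price: float  # USD per 1M output tokens

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Cost in USD of one request at this provider's rates."""
        return (input_tokens * self.input_price
                + output_tokens * self.output_price) / 1_000_000


# GPT-4o rates as quoted in this guide; update when prices change
PROVIDERS = [
    Provider("openai", "https://api.openai.com/v1", 5.0, 20.0),
    Provider("laozhang", "https://api.laozhang.ai/v1", 1.5, 6.0),
]


def cheapest_provider(input_tokens, output_tokens, providers=PROVIDERS):
    """Pick the provider with the lowest cost for a request of this size."""
    return min(providers, key=lambda p: p.cost(input_tokens, output_tokens))


choice = cheapest_provider(1_000, 1_000)
print(choice.name, choice.base_url)
```

Price is only one input to routing. A production version would also weigh reliability, latency, and data-handling requirements before switching endpoints.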

Common Cost Mistakes to Avoid

1. Not Setting Spending Limits

Always configure spending limits, but remember they're not instant.

2. Ignoring Failed Requests

Monitor and minimize failed requests that still consume tokens.

3. Over-Engineering Prompts

Balance between clarity and token efficiency.

4. Using Wrong Models

Don't use GPT-4o for simple tasks that GPT-3.5 handles well.

5. Neglecting Caching

Implement caching early to avoid repeated charges.

Conclusion

Managing OpenAI API costs in 2025 requires more than just understanding the pricing table. With hidden fees potentially adding 40-60% to your bill, proper cost management is crucial for sustainable AI integration.

Key takeaways for controlling your OpenAI API costs:

- Calculate Accurately: Include hidden fees in your budgets

- Track Religiously: Implement usage monitoring from day one

- Optimize Continuously: Use caching, batching, and prompt optimization

- Choose Models Wisely: Match model capabilities to task requirements

- Consider Alternatives: LaoZhang.ai offers 70% savings with same quality

The most successful AI implementations aren't just the most sophisticated - they're the most efficiently engineered. By following the strategies in this guide and leveraging solutions like LaoZhang.ai, you can reduce your OpenAI API costs by up to 70% while maintaining the same capabilities.

Take Action Today

- Calculate Your Savings: Estimate your monthly costs using our formulas

- Set Up Monitoring: Implement usage tracking before it's too late

- Try LaoZhang.ai: Get free credits at api.laozhang.ai

- Optimize Your Implementation: Apply at least 3 strategies from this guide

Remember: Every token counts, every optimization matters, and every dollar saved is a dollar you can invest in growing your AI-powered solution.

Ready to cut your OpenAI API costs by 70%? Visit laozhang.ai for free trial credits and join thousands of developers who've already optimized their AI expenses.