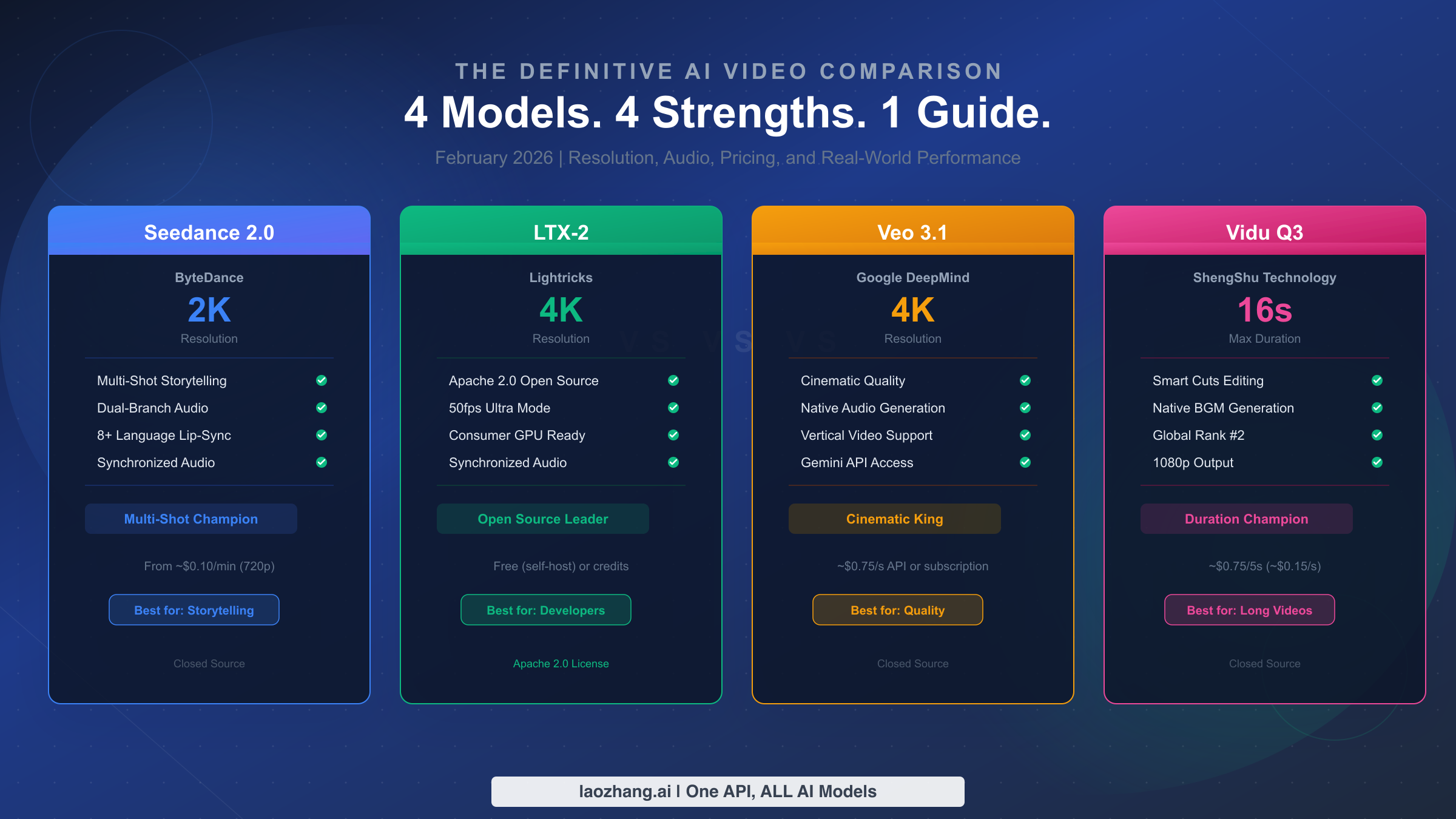

Among the four leading AI video generators released in early 2026, each model carves out a distinct niche: Seedance 2.0 leads in multi-shot storytelling with native 2K output and 8-language lip-sync, LTX-2 is the only fully open-source option offering 4K at 50fps on consumer GPUs, Veo 3.1 delivers Google's state-of-the-art 4K cinematic quality with native audio, and Vidu Q3 stands alone with 16-second generation and intelligent Smart Cuts. This guide compares all four across resolution, audio, pricing, and real-world performance to help you choose the right model for your workflow.

TL;DR — Quick Comparison

Before diving deep, here is a master comparison table that captures the essential differences across all four models. Each one launched between January 6 and February 8, 2026, and each targets a fundamentally different use case. Understanding these differences upfront will save you hours of research, because the "best" model depends entirely on what you are building, how much you can spend, and whether you need API access or a polished consumer experience.

| Feature | Seedance 2.0 | LTX-2 | Veo 3.1 | Vidu Q3 |
|---|---|---|---|---|
| Developer | ByteDance | Lightricks | Google DeepMind | ShengShu Technology |
| Release Date | Feb 8, 2026 | Jan 6, 2026 | Jan 13, 2026 | Jan 30, 2026 |
| Max Resolution | 2K (2048×1080) | Up to 4K | Up to 4K | 1080p |
| Max Duration | Multi-shot | 6–10s (15s soon) | 8s | 16s |
| Frame Rate | 24fps | Up to 50fps | 24fps | 24fps |
| Audio | Dual-branch sync, 8+ languages | Synchronized audio | Native audio | Native audio + BGM |
| Open Source | No | Yes (Apache 2.0) | No | No |
| Starting Price | ~$0.10/min (720p) | Free (self-host) | Google AI Pro subscription | ~$0.75/5s |

Key takeaways: If your priority is telling a multi-scene story with synchronized dialogue, Seedance 2.0 is unmatched. If you want full control over your pipeline with open-source freedom and 4K output, LTX-2 is the clear choice. If cinematic quality at 4K with Google's ecosystem backing matters most, Veo 3.1 delivers. And if you need the longest single-clip duration with built-in editing intelligence, Vidu Q3 is the only model generating up to 16 seconds natively.

Seedance 2.0 — ByteDance's Multi-Shot Revolution

ByteDance released Seedance 2.0 on February 8, 2026, and it immediately distinguished itself from every other model on this list with a capability none of them offer: native multi-shot video generation. While competing models produce single continuous clips, Seedance 2.0 can generate coherent multi-scene narratives where characters, settings, and storylines maintain consistency across shots. This is not simply stitching clips together in post-production—the model architecturally understands narrative structure and generates transitions, camera angles, and scene compositions that feel like they were directed by a human filmmaker.

The technical foundation of Seedance 2.0 centers on its dual-branch audio architecture, which processes dialogue and environmental sound through separate neural pathways before merging them into a unified output. In practice, this means the model can generate a character speaking in one of eight supported languages with accurate lip synchronization while simultaneously producing contextually appropriate ambient sounds—footsteps on gravel, rain against a window, crowd noise in a café. Most competing models treat audio as a single undifferentiated stream, which leads to muddy output where dialogue competes with sound effects. Seedance 2.0's separation of concerns produces notably cleaner audio with distinct spatial positioning for each layer. If you have been following how this model compares against other top contenders, our comparison of Seedance 2 with Sora 2 and Kling 3 provides additional context on where ByteDance's offering stands in the broader landscape.

The resolution ceiling of 2K (2048×1080) places Seedance 2.0 below the 4K-capable LTX-2 and Veo 3.1, but this tradeoff is deliberate. ByteDance has optimized for generation speed and multi-shot coherence rather than raw pixel count. A typical 2K multi-shot sequence generates in approximately 60 seconds, which is competitive with models producing single shots at similar resolution. For social media creators who primarily distribute on platforms that compress to 1080p anyway, the 2K ceiling rarely becomes a practical limitation. The real question is whether your workflow requires the multi-shot storytelling capability that makes Seedance 2.0 unique, or whether you would be better served by a model that maximizes resolution or duration instead.

Pricing follows a tiered structure that scales with resolution: approximately $0.10 per minute at 720p, $0.30 per minute at 1080p, and $0.80 per minute at full 2K output (NxCode guide, February 2026). This makes Seedance 2.0 the most affordable option at lower resolutions, especially for creators producing high volumes of social media content where 720p or 1080p is perfectly acceptable. The 8-language lip-sync capability adds particular value for international content creators who need to localize videos across markets without re-recording voiceovers or manually adjusting mouth movements—a process that traditionally requires expensive post-production work.

LTX-2 — The Open-Source 4K Powerhouse

Lightricks launched LTX-2 on January 6, 2026, making it the first model in this comparison to reach the market, and it arrived with a proposition that fundamentally changes the economics of AI video generation: it is fully open-source under the Apache 2.0 license. This means you can download the model weights, run them on your own hardware, modify the architecture, and integrate it into commercial products without paying a single dollar in API fees or licensing costs. For developers building video generation into their own products, this is not a marginal advantage—it eliminates the single largest ongoing cost in the entire workflow.

The technical specifications of LTX-2 are genuinely impressive for an open-source model. It supports output resolution up to 4K, a frame rate of up to 50fps in its Ultra tier, and produces synchronized audio alongside the video output. The model operates in three distinct tiers—Fast, Pro, and Ultra—each trading generation speed against quality and resolution. The Fast tier generates lower-resolution clips suitable for previewing and iteration within seconds, the Pro tier handles 1080p output at 24fps for production-quality work, and the Ultra tier unlocks the full 4K at 50fps capabilities for projects where maximum fidelity matters. Current maximum duration spans 6 to 10 seconds depending on the tier, with Lightricks publicly announcing that 15-second generation is coming in a near-term update.

The real question every potential LTX-2 user needs to answer is whether self-hosting is practical for their situation, and the honest answer requires some math. Running LTX-2 at its full capability requires a GPU with at least 24GB of VRAM—an NVIDIA RTX 4090 or equivalent. If you already own this hardware, your marginal cost per video is essentially the electricity consumed during generation, which works out to fractions of a cent per clip. If you need to rent GPU time, services like RunPod and Vast.ai offer RTX 4090 instances at approximately $0.40–0.60 per hour, and generating a 10-second 1080p clip typically takes 2–4 minutes of compute time. That translates to roughly $0.02–0.04 per clip for the actual generation—dramatically cheaper than any cloud API, but requiring technical expertise to set up and maintain the infrastructure. Lightricks also offers LTX Studio, a cloud-based platform with a credits system for users who want the quality of LTX-2 without managing their own GPU deployment, though at higher per-video costs comparable to competing cloud APIs.
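The rental arithmetic above can be checked with a few lines of Python. This is a minimal sketch that assumes billing is pro-rated by the minute and ignores instance start-up time, storage, and idle time; the function name `rental_cost_per_clip` is illustrative and not part of any provider's API.

```python
def rental_cost_per_clip(hourly_rate_usd: float, compute_minutes: float) -> float:
    """Cost of one clip on a rented GPU, assuming pro-rated per-minute billing."""
    return hourly_rate_usd * compute_minutes / 60.0


# Figures quoted above: $0.40-0.60 per hour, 2-4 minutes of compute per clip.
best_case = rental_cost_per_clip(0.40, 2)   # cheapest rate, fastest generation
worst_case = rental_cost_per_clip(0.60, 4)  # priciest rate, slowest generation

print(f"per clip: ${best_case:.3f} to ${worst_case:.3f}")
```

Under these assumptions the worst case matches the $0.04 figure, and the best case lands slightly below the quoted $0.02. Real bills will usually run somewhat higher, because rented instances also accrue charges while models load.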

The Apache 2.0 license carries strategic implications beyond immediate cost savings. Because you control the model and the deployment, you are never subject to API rate limits, usage policies, content restrictions, or pricing changes imposed by a third-party provider. For studios building AI video generation into their production pipeline, this independence from external providers eliminates a category of business risk that closed models inherently carry. The active open-source community around LTX-2 also means community-contributed fine-tunes, optimizations, and integrations appear regularly, extending the model's capabilities in ways that a single corporate provider cannot match.

Veo 3.1 — Google's Cinematic 4K Engine

Google DeepMind released Veo 3.1 on January 13, 2026, and it represents the highest raw quality ceiling in this comparison. When you need footage that looks like it was shot by a professional cinematographer, with naturalistic lighting, physically accurate motion, and cinematic depth of field, Veo 3.1 produces results that consistently place at or near the top in blind quality evaluations. The model sits at approximately ELO 1220 on the Video Arena benchmark (as of February 2026), placing it among the top 3-4 video generation models globally. For a detailed Sora 2 vs Veo 3.1 comparison, including head-to-head quality analysis, we have covered that matchup extensively in a separate guide.

Veo 3.1 is available in two tiers through the Google AI subscription system. The Fast variant, included with the Google AI Pro plan, generates 8-second videos with native audio at good quality with faster turnaround. The standard Veo 3.1, available with the Google AI Ultra plan, delivers state-of-the-art cinematic quality at up to 4K resolution with the same 8-second maximum duration and native audio generation (Gemini official page, verified February 10, 2026). Both tiers support vertical video output—a detail that matters enormously for creators targeting TikTok, Instagram Reels, and YouTube Shorts, where vertical format is the expected default rather than a cropped afterthought.

One of Veo 3.1's most distinctive features is "Ingredients to Video," which allows users to provide multiple reference images—a character portrait, a location photo, a style reference—and have the model synthesize them into a coherent video that incorporates elements from all inputs. This is genuinely useful for brand content where you need generated video to match existing visual assets, or for narrative projects where character consistency across scenes is essential. While Seedance 2.0 addresses multi-scene consistency through its multi-shot architecture, Veo 3.1 approaches the problem from the input side by letting you control the visual ingredients that go into each generation.

For programmatic access, Veo 3.1 is available through the Gemini API, making it straightforward to integrate into existing applications that already use Google's AI infrastructure. The API pricing of approximately $0.75 per second of generated video (official API documentation, February 2026) places Veo 3.1 at the premium end of the market: a single maximum-length 8-second clip costs roughly $6.00, and 10 seconds of footage roughly $7.50. The subscription model through Google AI Pro and Ultra plans offers significantly better economics for regular users who generate multiple videos per month. The 8-second maximum duration is the most notable limitation: it is half of Vidu Q3's 16 seconds and lacks the multi-shot capability of Seedance 2.0, meaning you will need to stitch clips together in post-production for any content longer than 8 seconds.

Vidu Q3 — The 16-Second Duration Champion

ShengShu Technology launched Vidu Q3 on January 30, 2026, and its headline specification immediately separates it from the competition: a 16-second maximum generation duration, which is double Veo 3.1's 8-second cap and 1.6 times LTX-2's current 10-second maximum for a single clip. For content types where temporal continuity matters (product demonstrations, scene-setting establishing shots, character monologues, or any narrative that needs more than 8 seconds to develop), Vidu Q3 reduces the multi-clip stitching that single-clip models require. The model ranks #2 globally and #1 among Chinese-origin models on the Artificial Analysis benchmark (January 2026), which suggests that the extended duration does not come at the expense of visual quality.

Smart Cuts is the feature that transforms Vidu Q3 from a long-duration generator into a genuine editing assistant. Rather than producing a single continuous 16-second shot, Smart Cuts intelligently segments the generated content into logical scenes with appropriate transitions, effectively producing a rough edit from a single prompt. This mirrors how a human editor would approach raw footage: identifying natural break points, varying shot composition between cuts, and maintaining narrative flow across the segments. For solo creators who lack editing expertise or time, Smart Cuts reduces the gap between generated output and publishable content more effectively than any other model's post-processing features.

The native background music generation capability further distinguishes Vidu Q3 from competitors. While all four models in this comparison produce some form of audio alongside video, Vidu Q3 is the only one that generates contextually appropriate background music as a native output. The model analyzes the visual content and prompt to select musical characteristics—tempo, mood, instrumentation—that complement the scene. This is particularly valuable for social media content where music is not optional but essential for engagement, and where sourcing licensed music separately adds both cost and workflow complexity. The dialogue audio quality in Vidu Q3 is competitive with the other three models, and the addition of native BGM gives it a complete audio package that others currently cannot match.

At 1080p maximum resolution, Vidu Q3 sits below both LTX-2 and Veo 3.1 in pixel count. This is a meaningful limitation for professional production work where 4K delivery is standard, but for the vast majority of social media and web content, 1080p remains the practical ceiling that platforms actually display. The pricing model at approximately $0.75 per 5 seconds (roughly $0.15 per second, WaveSpeedAI comparison, January 2026) positions Vidu Q3 competitively for its unique combination of duration and features, especially when you factor in the Smart Cuts editing and BGM generation that would otherwise require separate tools and additional expense.

The practical workflow advantage of Vidu Q3 becomes most apparent when you compare the effort required to produce a 30-second social media clip across all four models. With Veo 3.1, you would need to generate four separate 8-second clips, prompt each one carefully for visual and tonal consistency, then stitch them together in an editor while sourcing background music separately. With Seedance 2.0, the multi-shot capability handles continuity but you would still need to source music externally. With LTX-2, you face the same clip-by-clip assembly workflow plus the infrastructure management overhead. With Vidu Q3, you generate two 16-second clips that already include Smart Cuts scene segmentation and native BGM, then trim to your final length—a workflow that might take five minutes compared to 30 minutes or more with the alternatives. This time-saving compounds dramatically for creators who produce content daily, and it explains why Vidu Q3 has gained rapid adoption specifically among high-volume social media producers despite not leading in raw resolution or cinematic quality metrics.

Head-to-Head: Resolution, Audio, and Multi-Shot Compared

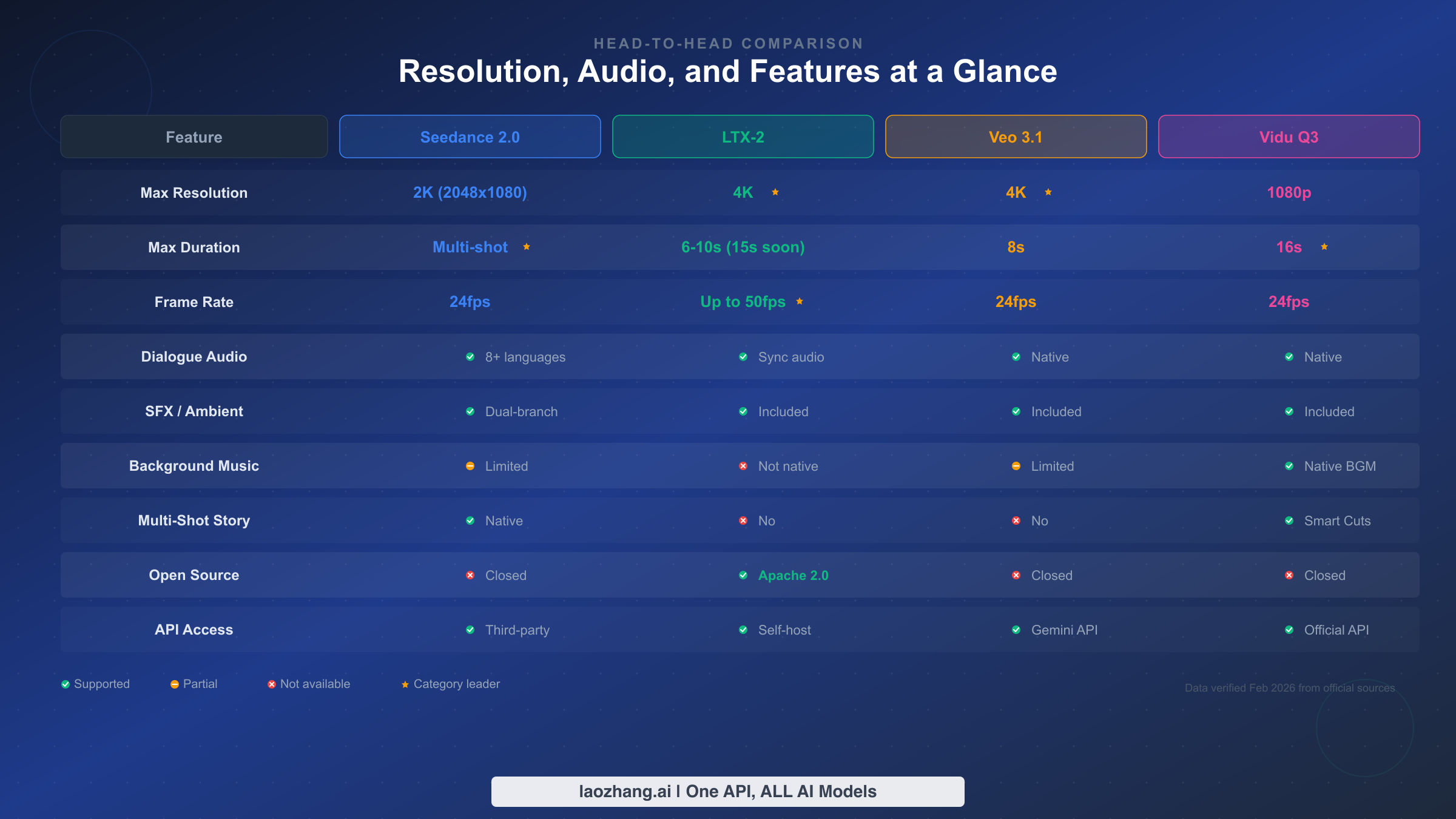

Now that each model has been examined individually, the critical question is how they compare directly on the dimensions that matter most for production decisions. Resolution, audio capabilities, and multi-shot features represent the three axes along which these models most dramatically diverge, and understanding these differences in combination—not isolation—is what separates an informed choice from a guesswork decision.

Resolution tells only part of the story. LTX-2 and Veo 3.1 both advertise 4K output, but the practical quality at that resolution differs significantly. Veo 3.1's 4K output exhibits the cinematic depth and naturalistic lighting that has earned it top-tier benchmark rankings, while LTX-2's 4K output—impressive for an open-source model—shows slightly more artifacts in fine detail areas like hair, water, and fabric textures. Seedance 2.0's 2K ceiling is sharp and clean within its resolution class, and Vidu Q3's 1080p output is well-optimized for its target platform of social media distribution. The resolution hierarchy of Veo 3.1 > LTX-2 > Seedance 2.0 > Vidu Q3 is clear on paper, but in practice, the gap narrows dramatically when content is consumed on mobile devices at compressed bitrates.

| Audio Capability | Seedance 2.0 | LTX-2 | Veo 3.1 | Vidu Q3 |
|---|---|---|---|---|
| Dialogue | 8+ languages with lip-sync | Basic sync | Native generation | Native generation |
| Sound Effects | Dual-branch (separate from dialogue) | Included in audio stream | Included in audio stream | Included in audio stream |
| Ambient Sound | Contextual generation | Contextual generation | Contextual generation | Contextual generation |
| Background Music | Limited | Not native | Limited | Native BGM generation |
| Audio Architecture | Dual-branch (dialogue + environment) | Single stream | Single stream | Single stream + BGM track |

Audio is the true differentiator in 2026. In 2025, resolution was the primary battleground for AI video models. In 2026, native audio quality has become the feature that most dramatically separates these four models. Seedance 2.0's dual-branch architecture produces the cleanest dialogue because it processes speech and environmental sound independently, preventing the muddy mixing that plagues single-stream approaches. Veo 3.1 generates audio that sounds the most natural and cinematic, with ambient sound that genuinely matches the visual scene. Vidu Q3 wins on completeness by including background music generation that none of the others offer natively. LTX-2's audio is functional but represents the area with the most room for community improvement, as the open-source model continues to receive contributions that enhance its audio capabilities.

Multi-shot and storytelling capabilities create the widest gap. Seedance 2.0 is the only model with true native multi-shot generation, where the model itself produces coherent multi-scene narratives. Vidu Q3's Smart Cuts feature provides intelligent segmentation of generated content, which achieves a similar editing-like result but from a different architectural approach—rather than generating multiple shots as a unified sequence, it creates a single long clip and then identifies where to place cuts and transitions. LTX-2 and Veo 3.1 both produce single continuous clips and require external editing workflows to create multi-scene content, making them less suitable for narrative-driven projects unless you are prepared to invest in post-production assembly. This distinction matters most for content types that tell stories—brand narratives, educational walkthroughs, product demonstrations with multiple angles—where the coherence between shots directly impacts viewer comprehension and engagement.

Generation speed varies significantly across models and directly impacts creative iteration. When you are exploring prompts and refining your creative direction, the time between pressing "generate" and seeing the result determines how many iterations you can realistically test. LTX-2's Fast tier produces preview-quality clips in seconds, allowing rapid experimentation before committing to a higher-quality generation in Pro or Ultra mode. Seedance 2.0's multi-shot sequences take approximately 60 seconds at 2K resolution, which is reasonable given the complexity of producing coherent multi-scene output. Veo 3.1 and Vidu Q3 generation times depend on server load and selected quality tier, but both typically deliver results within 1-3 minutes for standard requests. For workflows that involve heavy experimentation—trying different prompts, camera angles, and style directives—LTX-2's tiered speed approach provides the best creative iteration loop.

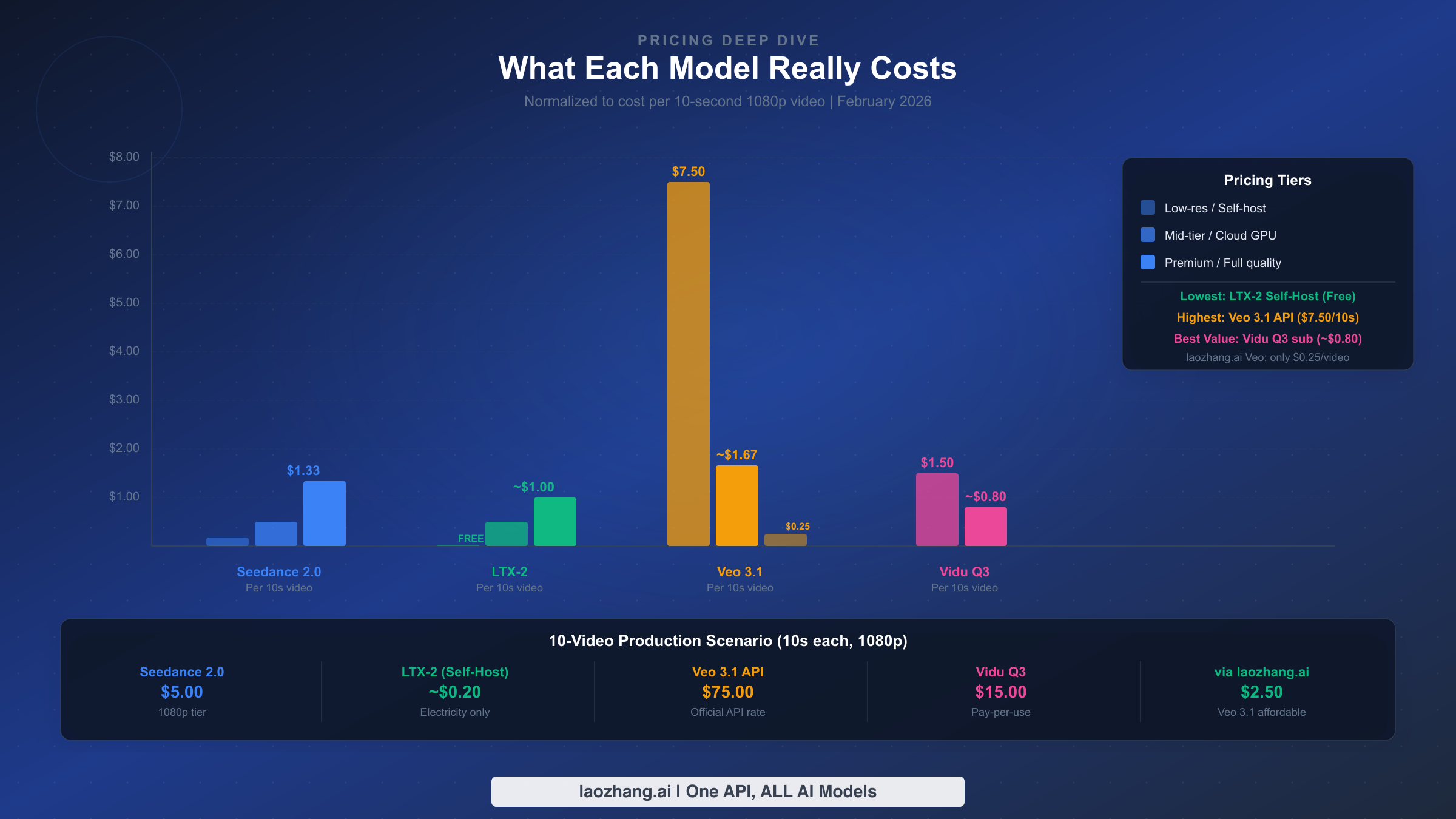

What Each Model Really Costs: A Pricing Deep Dive

Pricing is where the abstract differences between these models become concrete business decisions, and it is also where the comparison becomes most complex because each model uses a fundamentally different pricing structure. Normalizing all four models to a common unit—cost per 10-second 1080p video—reveals differences that range from essentially free to premium pricing, with several surprising nuances that the headline numbers do not capture.

Seedance 2.0 uses a straightforward per-minute pricing model that scales with resolution. At 720p, the cost is approximately $0.10 per minute, making a 10-second clip roughly $0.017—almost negligible for individual creators. At 1080p, the cost rises to approximately $0.30 per minute ($0.05 per 10 seconds), and at full 2K resolution, it reaches $0.80 per minute ($0.13 per 10 seconds). This tiered approach rewards creators who match resolution to their distribution platform rather than defaulting to maximum quality, and it makes Seedance 2.0 the cheapest cloud option for high-volume production at 720p or 1080p (NxCode guide, February 2026).
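The per-minute to per-clip conversion is simple enough to script when you are budgeting a batch. The sketch below uses the approximate rates quoted above; the dictionary and function names are illustrative only and do not correspond to any ByteDance API.

```python
# Approximate Seedance 2.0 rates quoted in this guide, in USD per minute of output.
SEEDANCE_USD_PER_MINUTE = {"720p": 0.10, "1080p": 0.30, "2K": 0.80}


def cost_per_clip(tier: str, clip_seconds: float) -> float:
    """Convert a per-minute rate into the cost of one clip of the given length."""
    return SEEDANCE_USD_PER_MINUTE[tier] * clip_seconds / 60.0


for tier in SEEDANCE_USD_PER_MINUTE:
    print(f"{tier}: ${cost_per_clip(tier, 10):.3f} per 10-second clip")
```

Changing `clip_seconds` lets you price other clip lengths at the same rates, assuming billing scales linearly with duration.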

LTX-2 presents the most complex pricing landscape because "free" depends heavily on your infrastructure situation. Self-hosting on hardware you already own costs only electricity—fractions of a cent per clip. Renting GPU time on RunPod or Vast.ai costs approximately $0.40–0.60 per hour for an RTX 4090 instance, translating to $0.02–0.04 per 10-second 1080p clip. LTX Studio's cloud platform charges credits that work out to approximately $1.00 per 10-second clip when including audio generation. The self-hosting option is dramatically cheaper than any competitor, but the upfront investment in hardware (an RTX 4090 costs $1,600–2,000) or the technical expertise required for cloud GPU deployment creates a barrier that is effectively invisible in per-clip cost comparisons.

Veo 3.1 sits at the premium end with API pricing of approximately $0.75 per second of generated video, making a 10-second clip cost roughly $7.50 through the Gemini API (official API documentation, verified February 10, 2026). The subscription model through Google AI Pro and Ultra plans reduces this cost significantly for regular users, with the subscription effectively averaging to approximately $1.67 per 10-second clip depending on monthly generation volume. For a detailed breakdown, see Veo 3.1's per-second pricing structure. For developers seeking more affordable API access to Veo 3.1, third-party providers like laozhang.ai offer Veo 3.1 at $0.15 per request for the fast variant and $0.25 per request for the standard variant—a fraction of the official API cost that makes production-scale usage economically viable. The same platform also provides access to affordable Sora 2 API alternatives at $0.15 per request, giving developers a unified access point for multiple video generation models.

Vidu Q3 charges approximately $0.75 per 5-second generation at 1080p, which normalizes to $1.50 per 10-second clip (WaveSpeedAI comparison, January 2026). When factoring in the Smart Cuts editing and native BGM generation that would otherwise require separate tools, the effective value proposition improves. Subscription plans bring the per-clip cost down to approximately $0.80 for regular users. That remains well above Seedance 2.0's roughly $0.05 per 10-second clip at 1080p, so the case for Vidu Q3 rests on its 16-second duration, Smart Cuts, and native BGM rather than on headline price.

The 10-video production scenario crystallizes these differences. Producing ten 10-second clips at 1080p costs approximately $0.50 with Seedance 2.0, $0.20–0.40 with self-hosted LTX-2 on rented GPUs, $75.00 with Veo 3.1's API (or $2.50 through laozhang.ai), and $15.00 with Vidu Q3's pay-per-use pricing. These numbers make it clear that pricing alone does not determine the right choice: the model that costs more may save far more in post-production time, especially when native audio, BGM, or multi-shot editing reduces the work required after generation.
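To rerun this scenario with your own clip count, a minimal sketch follows. It derives each per-clip price from the rates quoted in this guide (Seedance 2.0 at $0.30 per minute for 1080p, Veo 3.1 at $0.75 per second, Vidu Q3 at $0.75 per 5 seconds, LTX-2 at $0.02–0.04 per clip on a rented GPU). The names `PER_CLIP_USD` and `batch_cost` are illustrative, and the figures are approximations rather than quotes from any provider.

```python
CLIP_SECONDS = 10

# Per-clip prices in USD, derived from the approximate rates quoted in this guide.
PER_CLIP_USD = {
    "Seedance 2.0 (1080p)": 0.30 * CLIP_SECONDS / 60,   # $0.30 per minute
    "LTX-2 (rented GPU, low)": 0.02,
    "LTX-2 (rented GPU, high)": 0.04,
    "Veo 3.1 (Gemini API)": 0.75 * CLIP_SECONDS,         # $0.75 per second
    "Vidu Q3 (pay-per-use)": 0.75 * CLIP_SECONDS / 5,    # $0.75 per 5 seconds
}


def batch_cost(per_clip_usd: float, clips: int) -> float:
    """Total generation cost for a batch, ignoring subscriptions and discounts."""
    return per_clip_usd * clips


for name, price in PER_CLIP_USD.items():
    print(f"{name}: ${batch_cost(price, 10):.2f} for ten clips")
```

At these rates, ten 1080p clips from Seedance 2.0 come to about $0.50. The sketch covers generation cost only and leaves out post-production time.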

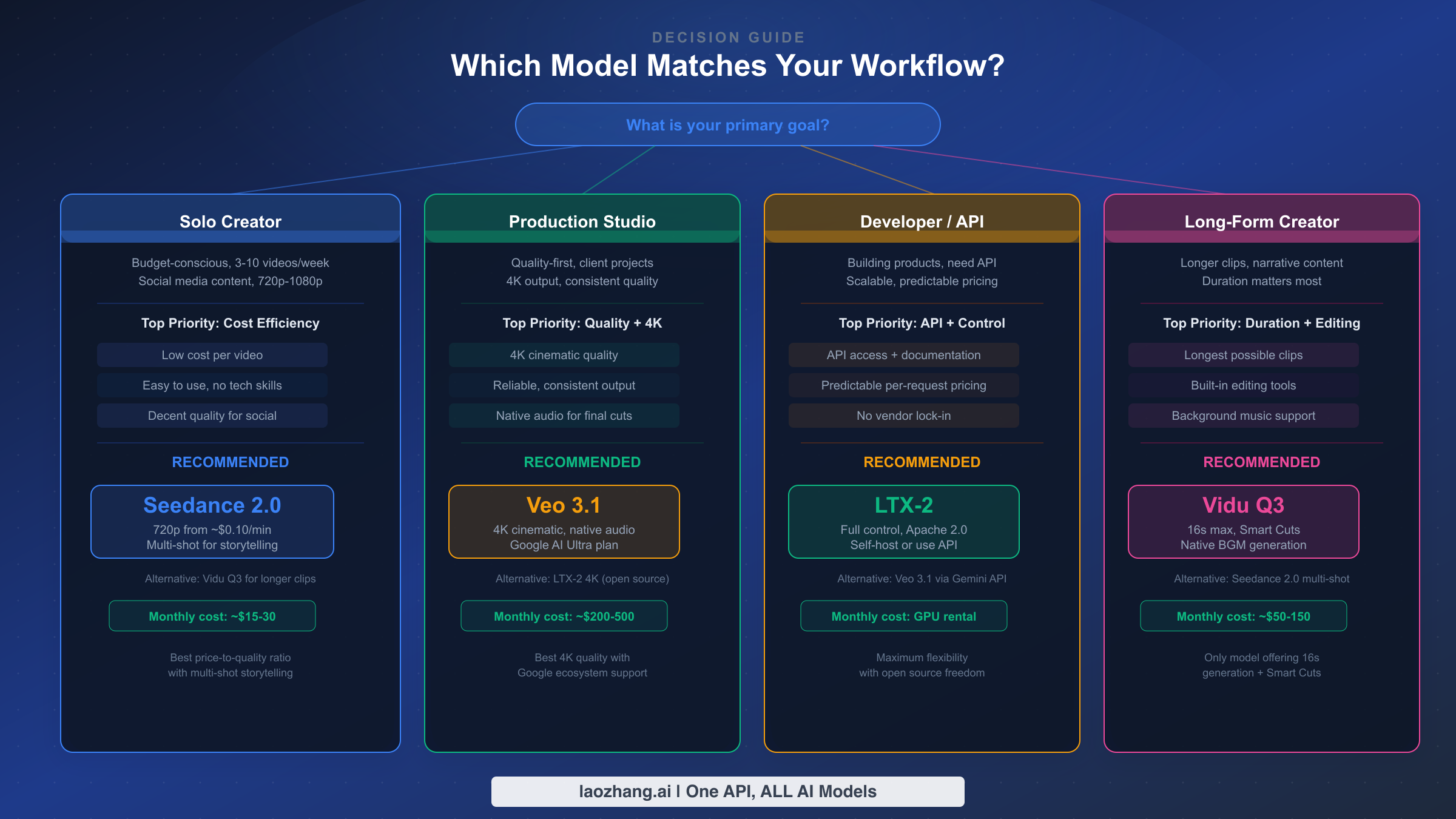

Which Model Should You Choose? Matching Models to Workflows

The comparison data above is only useful if it translates into an actionable decision for your specific situation. Rather than offering a generic "it depends" conclusion, this section maps four concrete workflow scenarios to specific model recommendations, based on the priority each user type actually has when choosing an AI video generation tool. Your real-world constraints—budget, technical capability, output volume, and quality requirements—determine which model's strengths align with your needs.

Solo creators producing social media content should start with Seedance 2.0. When you are making 3–10 videos per week for platforms like TikTok, YouTube Shorts, or Instagram Reels, your primary constraint is cost per video, not maximum resolution. Seedance 2.0's 720p tier at $0.10 per minute makes high-volume production genuinely affordable—a typical month of 30 short-form videos costs under $15. The multi-shot storytelling capability adds a creative dimension that helps your content stand out from the single-clip output that other models produce, and the 8-language lip-sync opens international audiences without additional production cost. If your content relies heavily on longer clips with music, consider Vidu Q3 as an alternative, where the 16-second duration and native BGM generation align well with social media formats that prioritize sustained viewer engagement over visual fidelity.

Production studios working on client projects should invest in Veo 3.1. When clients expect 4K delivery, cinematic quality, and professional-grade audio, Veo 3.1's output quality justifies its premium pricing. The Google AI Ultra subscription provides predictable monthly costs for regular production, and the Ingredients to Video feature enables the kind of controlled, brand-consistent output that client work demands. The 8-second clip limitation requires post-production assembly for longer sequences, but studios already have editing workflows in place. LTX-2 serves as a compelling secondary option for studios willing to invest in GPU infrastructure, offering 4K output at dramatically lower per-clip costs with the flexibility of open-source customization, though the quality ceiling does not quite match Veo 3.1 for the most demanding cinematic work.

Developers building products with video generation should strongly consider LTX-2 as their primary model. The Apache 2.0 license means zero per-query costs when self-hosting, no API rate limits to architect around, no content policy restrictions beyond your own, and no dependency on a third-party provider who might change pricing or terms of service. The technical overhead of managing GPU infrastructure is a one-time setup cost that becomes negligible once your deployment pipeline is established. For developers who need access to multiple models without managing separate infrastructure, unified API platforms like laozhang.ai provide a single endpoint for Veo 3.1, Sora 2, and other video models with consistent pricing and documentation, reducing integration complexity significantly.

Long-form narrative creators should prioritize Vidu Q3 for any project where clip duration matters. Producing a 60-second video requires 8 separate clips with Veo 3.1 (8 seconds each), 6 clips with LTX-2 (10 seconds each), or just 4 clips with Vidu Q3 (16 seconds each). Fewer clip boundaries mean fewer continuity breaks, fewer editing transitions to manage, and a more cohesive final product. The Smart Cuts feature further reduces post-production work by providing intelligent scene segmentation within each 16-second generation, and the native BGM generation eliminates the separate step of sourcing and synchronizing background music. For narrative content where story arc development benefits from longer uninterrupted shots, no other model in this comparison can match what Vidu Q3 offers.
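The clip counts above are a ceiling division of the target length by each model's maximum single-clip duration. A short sketch, using the durations from this guide and an illustrative helper name (`clips_needed`), makes it easy to repeat the calculation for other target lengths. Seedance 2.0 is left out because this guide gives no single-clip duration for it.

```python
import math

# Maximum single-clip durations in seconds, as quoted in this guide.
MAX_CLIP_SECONDS = {"Veo 3.1": 8, "LTX-2": 10, "Vidu Q3": 16}


def clips_needed(target_seconds: int, max_clip_seconds: int) -> int:
    """Smallest number of clips whose combined length covers the target."""
    return math.ceil(target_seconds / max_clip_seconds)


for model, limit in MAX_CLIP_SECONDS.items():
    count = clips_needed(60, limit)
    print(f"{model}: {count} clips, {count - 1} cut points to manage")
```

Each clip boundary is a potential continuity break, which is why the count of cut points is printed alongside the clip count.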

The Bottom Line

Each of these four models represents a genuine best-in-class capability that no competitor in this comparison matches. Seedance 2.0 owns multi-shot storytelling and multilingual lip-sync. LTX-2 owns open-source freedom and self-hosting economics. Veo 3.1 owns cinematic quality and 4K fidelity. Vidu Q3 owns maximum duration and built-in editing intelligence. The model landscape in early 2026 has matured to the point where choosing the right tool means understanding what you specifically need, rather than chasing a single "best" model that does not exist.

The pricing reality reveals that the most expensive option (Veo 3.1 API at $7.50 per 10 seconds) costs about 375 times as much as the cheapest (self-hosted LTX-2 at ~$0.02 per 10 seconds). This extraordinary range means that the first question in your decision process should not be "which model has the best quality" but rather "what is the minimum quality threshold my project requires, and which model meets that threshold at the lowest total cost including post-production?" A model that costs 10x more per clip but saves you 30 minutes of editing per clip can easily be the cheaper choice once your time is priced in.
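The trade-off between generation price and editing time can be made concrete with a small total-cost calculation. The sketch below is illustrative only: the $30 hourly rate, the editing times, and the two per-clip prices are hypothetical inputs chosen to mirror the "10x more per clip, 30 minutes saved" example, not measurements of any model.

```python
def total_cost_per_clip(generation_usd: float, editing_minutes: float,
                        hourly_rate_usd: float) -> float:
    """Generation price plus the value of the time spent editing the clip."""
    return generation_usd + editing_minutes / 60.0 * hourly_rate_usd


def break_even_hourly_rate(extra_generation_usd: float, minutes_saved: float) -> float:
    """Hourly rate above which paying extra per clip to save editing time pays off."""
    return extra_generation_usd * 60.0 / minutes_saved


# Hypothetical inputs: a $0.05 clip needing 35 minutes of editing versus a
# $0.50 clip (10x the price) needing 5 minutes, with time valued at $30/hour.
cheap = total_cost_per_clip(0.05, 35, 30.0)
pricey = total_cost_per_clip(0.50, 5, 30.0)
threshold = break_even_hourly_rate(0.50 - 0.05, 30)

print(f"cheap model: ${cheap:.2f}, pricey model: ${pricey:.2f}")
print(f"break-even hourly rate: ${threshold:.2f}")
```

With these made-up numbers the pricier model wins whenever your time is worth more than about a dollar an hour. The picture changes when the price gap is larger, as with official Veo 3.1 API pricing, so it is worth plugging in your own figures.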

For anyone still undecided, a practical approach is to start with the model that matches your most common use case and expand your toolkit as your needs evolve. There is no penalty for using multiple models—a solo creator might use Seedance 2.0 for daily social content and switch to Veo 3.1 for occasional premium projects, while a developer might self-host LTX-2 for high-volume automated generation and integrate Vidu Q3 through its API for content that requires longer durations. The era of choosing a single AI video model for all needs is over; the 2026 landscape rewards users who understand each tool's strengths and apply them strategically.

The AI video generation market is evolving with remarkable speed: all four models in this comparison launched within a five-week window, and each is likely to receive significant updates throughout 2026. LTX-2's open-source community will continue pushing quality improvements and optimizations. Google will continue advancing Veo's capabilities within the Gemini ecosystem. ByteDance's rapid iteration on Seedance suggests that multi-shot features will only become more sophisticated. And ShengShu Technology's strong benchmark position with Vidu Q3 suggests that even longer durations and smarter editing features are on the horizon. The model you choose today should serve your current needs, with the understanding that the competitive landscape will continue to improve your options going forward.

Frequently Asked Questions

Which AI video generator has the best quality in 2026?

For raw cinematic quality at 4K resolution, Veo 3.1 is the strongest of the four, sitting among the top 3-4 models on the Video Arena benchmark at approximately ELO 1220. However, "best quality" depends on your output format: Seedance 2.0 produces the cleanest dialogue audio through its dual-branch architecture, LTX-2 offers the highest frame rate at 50fps in its Ultra tier, and Vidu Q3 achieves the best results for longer narrative clips where consistency across 16 seconds matters more than per-frame perfection.

Is LTX-2 really free to use?

Yes, LTX-2 is fully open-source under the Apache 2.0 license, and the model weights are freely downloadable from Hugging Face. The "free" caveat is that you need GPU hardware to run it—either your own (RTX 4090 or equivalent with 24GB+ VRAM) or rented cloud GPU time at approximately $0.40–0.60 per hour. Self-hosting makes each generated clip cost fractions of a cent in electricity, but the initial hardware investment or cloud GPU learning curve is the real cost.

Can I use these models through an API for my application?

All four models offer some form of programmatic access. LTX-2 can be self-hosted with full API control. Veo 3.1 is accessible through the Gemini API at approximately $0.75 per second, or through third-party providers at significantly lower costs. Seedance 2.0 and Vidu Q3 both offer API access through their respective platforms and through third-party aggregators. For developers needing a unified API across multiple video models, services like laozhang.ai provide a single integration point with consistent documentation and competitive pricing.

Which model is best for social media content creation?

For high-volume social media production on a budget, Seedance 2.0 at $0.10 per minute (720p) offers the best price-to-quality ratio with the added bonus of multi-shot storytelling. For social content that benefits from longer clips and built-in music, Vidu Q3's 16-second duration and native BGM generation make it the strongest choice. For premium social content where visual quality is the primary differentiator, Veo 3.1's cinematic output stands above the rest, though at a higher cost per clip.