Google's Veo 3.1 is the latest version of its AI video generator, and the videos it produces carry markers of their origin. As of January 2026, understanding how Veo 3.1 watermarks work matters for content creators, researchers, journalists, and anyone concerned about the authenticity of AI-generated media. This guide covers Veo's dual watermarking system, from the visible corner watermark to the invisible SynthID technology that Google reports it has embedded in over 10 billion pieces of content worldwide.

The January 2026 update to Veo 3.1 introduced "Ingredients to Video" capabilities, 4K upscaling, and native vertical video support, but the watermarking system remains a constant across all generated content. Whether you want to create professional videos without visible watermarks, verify whether content you've encountered is AI-generated, or simply understand how Google's transparency efforts work, this guide draws its answers from official documentation and expert analysis.

Understanding Veo 3.1's Dual Watermarking System

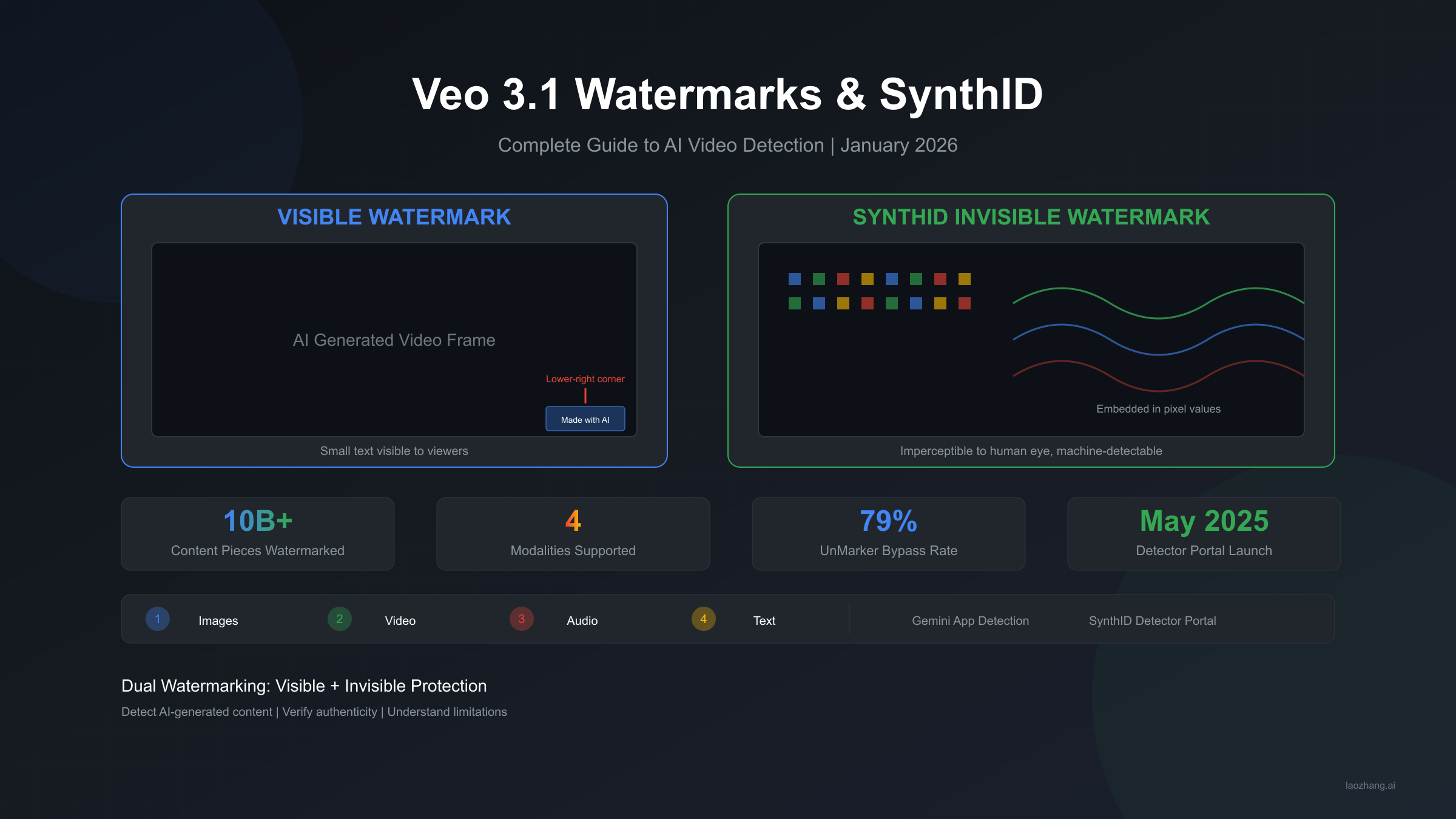

With the Veo 3 rollout in mid-2025, Google adopted a dual-layer approach to content authenticity that balances consumer awareness with forensic verification. By default, every video generated through Veo receives two distinct watermarks: a visible text overlay and an invisible digital signature embedded throughout the content. This combination addresses two different needs that Google identified in its transparency research.

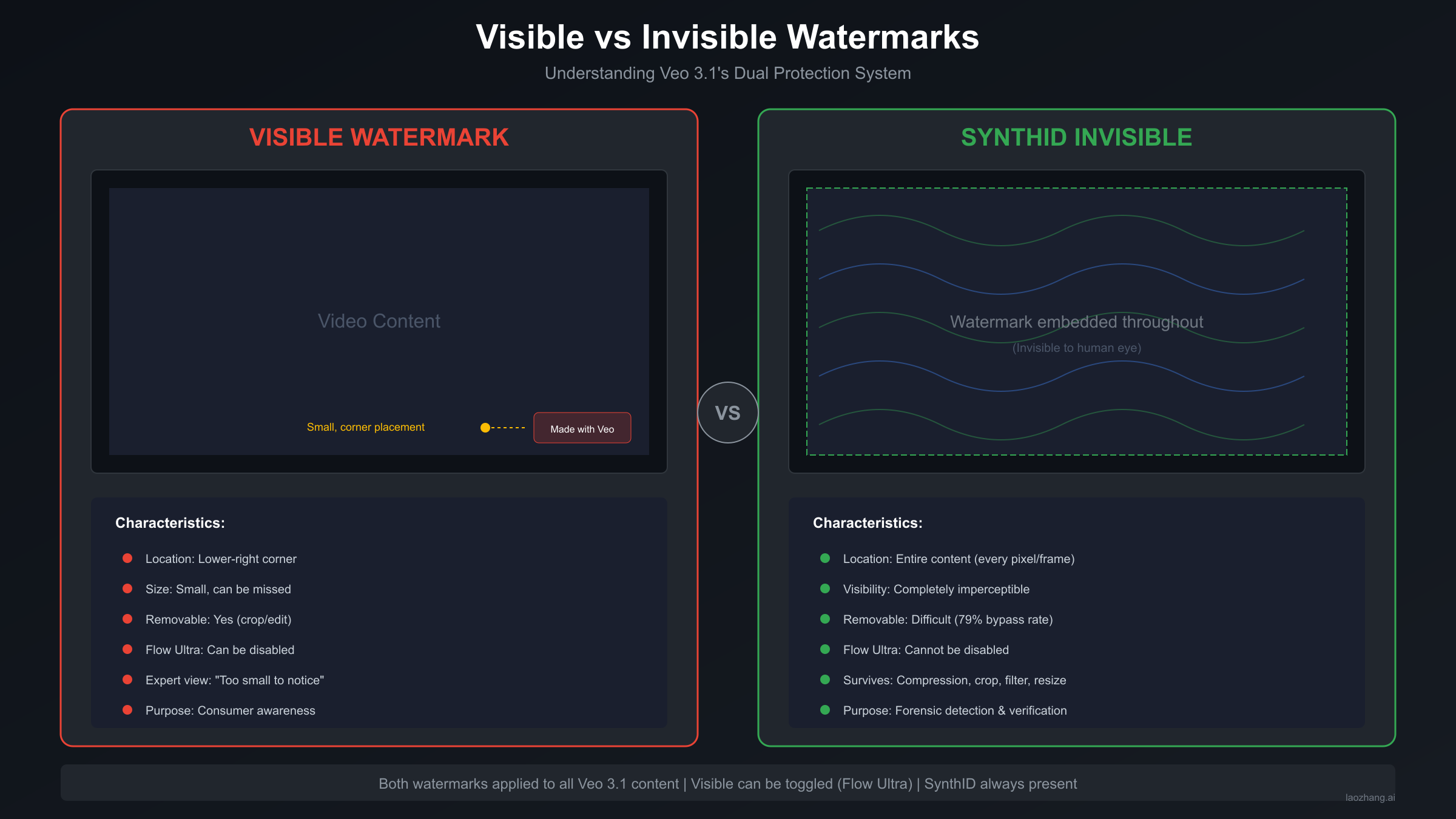

The visible watermark serves immediate consumer awareness. When someone scrolls through social media and encounters a Veo-generated video, the small text in the corner provides an instant signal that the content was created using AI. This addresses the concern that viewers might be deceived by realistic AI-generated content, particularly in contexts where authenticity matters. However, as digital forensics expert Hany Farid from UC Berkeley has noted, "this small watermark is unlikely to be apparent to most consumers who are moving through their social media feed at a break-neck clip."

The invisible SynthID watermark provides forensic-level verification. Unlike the visible watermark that can be cropped or edited away, SynthID embeds machine-readable signals directly into the pixel values of each frame. These signals are designed to survive common modifications including compression, cropping, filtering, frame rate changes, and color adjustments. When someone needs to definitively verify whether content was generated by Google's AI tools, SynthID provides that capability.

The decision to implement both systems reflects Google's understanding that different stakeholders have different needs. A casual social media user benefits from visible indicators, while journalists investigating potential deepfakes need robust verification tools that cannot be easily defeated.

| Watermark Type | Purpose | Location | Can Be Removed | Detection Method |
|---|---|---|---|---|
| Visible | Consumer awareness | Lower-right corner | Yes (crop/edit) | Visual inspection |
| SynthID | Forensic verification | Throughout content | Difficult (79% bypass reported for the UnMarker attack) | Gemini App / Detector Portal |

Understanding this dual system is crucial because it explains why some Veo videos appear with watermarks while others don't, and why removal of the visible watermark doesn't eliminate the content's AI fingerprint. The watermarking policy has evolved since Veo's initial release, with Google adding more visibility while maintaining the invisible protection layer.

The Visible Watermark - What You Actually See

The visible watermark on Veo-generated videos appears as small text in the lower-right corner of the frame. Introduced during Veo 3's expansion to international markets in June 2025, this watermark typically reads "Made with AI" or similar text indicating the content's artificial origin. The implementation reflects Google's response to mounting pressure for AI transparency, though the execution has drawn criticism from experts who argue it doesn't go far enough.

Location and appearance matter for effectiveness. Google chose to place the watermark in the lower-right corner, following conventions established by television networks and streaming services for their own branding. However, the font size and opacity have been deliberately kept subtle to avoid significantly impacting the viewing experience. This balance between visibility and aesthetics has been controversial. Mashable journalists reported requiring several seconds to locate the watermark even while actively searching for it, and on mobile devices the text becomes even more difficult to notice.

Platform-specific implementations vary significantly. The visible watermark appears by default in the Gemini App and through standard API access, but Google has created exceptions for certain use cases. Most notably, Flow Ultra subscribers can toggle the visible watermark off entirely, though they remain bound by Google's policy requiring disclosure of AI-generated content. This exception recognizes that professional content creators may have legitimate reasons to present clean video output while still disclosing AI involvement through other means.

| Platform | Visible Watermark | Can Disable | SynthID Present |
|---|---|---|---|
| Gemini App | Yes (default) | No | Always |
| Flow (Free) | Yes | No | Always |
| Flow (Ultra) | Optional | Yes (toggle) | Always |
| YouTube Shorts | Yes | No | Always |
| Gemini API | Yes (default) | No | Always |
| Vertex AI | Yes (default) | No | Always |

Expert criticism focuses on practical effectiveness. Hany Farid, a professor at UC Berkeley's School of Information and a leading expert in digital forensics, has been particularly vocal about the watermark's limitations. His assessment that the watermark is "unlikely to be apparent to most consumers" reflects a fundamental tension in Google's approach. A watermark that doesn't disrupt viewing may also fail to achieve its awareness goals. The small size means the watermark can be cropped away in seconds using basic video editing tools, and social media platforms often automatically crop content to different aspect ratios during upload.

The visible watermark represents Google's most accessible attempt at AI content identification, but its effectiveness depends heavily on viewer attention and content context. For scenarios requiring definitive verification, the invisible SynthID system provides more robust protection.

SynthID Invisible Watermark - How It Works

SynthID represents Google DeepMind's most sophisticated approach to content authenticity, embedding imperceptible digital signatures directly into AI-generated content at the moment of creation. Since its announcement, Google reports that over 10 billion pieces of content have been watermarked with SynthID across four modalities: images via Imagen, video via Veo, audio via Lyria, and text via Gemini. This scale makes it the most widely deployed invisible AI watermarking system in existence.

The technical architecture uses dual neural networks for visual content. SynthID employs paired neural networks that have been trained together through an adversarial process. The embedder network learns to inject watermark patterns into pixel values in ways that are imperceptible to human vision but detectable by the paired detector network. The training process subjects both networks to repeated transformations including JPEG compression, filtering, rotation, noise addition, and resizing. The embedder receives penalties when watermark strength degrades through these transformations, creating a feedback loop that strengthens resilience over time.
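
SynthID's embedder and detector networks are not public, so the following is not its algorithm. As a rough intuition for how a signal can be too faint to see yet still detectable after noise, here is a sketch of a much older technique, classical spread-spectrum watermarking: a keyed pattern of +1/-1 values is added at low amplitude and later recovered by correlation. The function names, the key, and the noise model are all illustrative assumptions.

```python
import random


def keyed_pattern(key: int, n: int) -> list:
    """Pseudorandom +1/-1 pattern that only the key holder can regenerate."""
    rng = random.Random(key)
    return [1 if rng.getrandbits(1) else -1 for _ in range(n)]


def embed(pixels: list, key: int, strength: float = 2.0) -> list:
    """Add the keyed pattern at low amplitude (2 gray levels out of 255)."""
    pattern = keyed_pattern(key, len(pixels))
    return [p + strength * w for p, w in zip(pixels, pattern)]


def detect(pixels: list, key: int) -> float:
    """Correlate with the keyed pattern: near `strength` if marked, near 0 if not."""
    pattern = keyed_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * w for p, w in zip(pixels, pattern)) / len(pixels)


rng = random.Random(0)
image = [rng.uniform(0, 255) for _ in range(200_000)]  # stand-in for pixel values
marked = embed(image, key=1234)
noisy = [p + rng.gauss(0, 5) for p in marked]          # crude stand-in for compression noise

print("unmarked score:", round(detect(image, key=1234), 2))
print("marked + noise:", round(detect(noisy, key=1234), 2))
print("wrong key:     ", round(detect(noisy, key=9999), 2))
```

The learned approach described above replaces the fixed pattern with one an embedder network adapts to each image, but the detection idea is similar: the signal is spread thinly over many pixels and only becomes visible to something that knows what to look for.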

For video content, SynthID operates on a frame-by-frame basis with temporal consistency. Each frame receives its own watermark embedding, but the process maintains coherence across the video timeline. This design allows the watermark to survive even if portions of the video are trimmed or if individual frames are extracted as images. The watermark signal is described as "holographically distributed," meaning that even cropped fragments can retain detectable information.

Text watermarking uses a fundamentally different approach called tournament sampling. When a language model generates text, SynthID modifies token selection through seeded competitions. The process generates pseudorandom g-values for each potential token using context hashing, then runs multi-layer elimination tournaments where tokens compete based on model likelihood plus watermark bias. The resulting watermark is statistical rather than syntactic, invisible to readers, and doesn't affect the meaning or quality of the generated text. However, as Google acknowledges, text watermarks are "less effective on factual responses" and lose confidence when text is "thoroughly rewritten, or translated to another language."
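
To make tournament sampling more concrete, here is a toy sketch under stated assumptions. In this simplified version, model likelihood enters by drawing the candidate tokens from the model's distribution, and the watermark enters through the keyed g-values that decide each match. The hash construction, the three layers, the two-token context window, and the uniform toy vocabulary are illustrative choices, not Google's parameters.

```python
from __future__ import annotations

import hashlib
import random


def g_value(token: str, context: tuple[str, ...], layer: int, key: str) -> int:
    """Pseudorandom bit derived from the key, recent context, layer, and token."""
    payload = "|".join((key, *context, str(layer), token)).encode()
    return hashlib.sha256(payload).digest()[0] & 1


def tournament_sample(probs: dict[str, float], context: tuple[str, ...],
                      key: str, rng: random.Random, layers: int = 3) -> str:
    """Draw 2**layers candidates from the model, then run knockout rounds on g-values."""
    candidates = rng.choices(list(probs), weights=list(probs.values()), k=2 ** layers)
    for layer in range(layers):
        winners = []
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga, gb = g_value(a, context, layer, key), g_value(b, context, layer, key)
            # Higher g-value wins; ties are broken at random.
            winners.append(rng.choice((a, b)) if ga == gb else (a if ga > gb else b))
        candidates = winners
    return candidates[0]


def generate(n: int, probs: dict[str, float], key: str, rng: random.Random,
             watermark: bool, window: int = 2) -> list[str]:
    """Generate n tokens, with or without tournament sampling."""
    out: list[str] = []
    for _ in range(n):
        context = tuple(out[-window:])
        if watermark:
            out.append(tournament_sample(probs, context, key, rng))
        else:
            out.append(rng.choices(list(probs), weights=list(probs.values()))[0])
    return out


def mean_g(tokens: list[str], key: str, layers: int = 3, window: int = 2) -> float:
    """Detection score: average g-value over all tokens and layers."""
    bits = []
    for i, token in enumerate(tokens):
        context = tuple(tokens[max(0, i - window):i])
        bits.extend(g_value(token, context, layer, key) for layer in range(layers))
    return sum(bits) / len(bits)


vocab = {f"tok{i}": 1 / 20 for i in range(20)}  # toy uniform "language model"
rng = random.Random(7)
marked = generate(300, vocab, "secret", rng, watermark=True)
plain = generate(300, vocab, "secret", rng, watermark=False)
print("watermarked:", round(mean_g(marked, "secret"), 2))
print("plain:      ", round(mean_g(plain, "secret"), 2))
```

Unwatermarked text should score close to 0.5, while watermarked text scores noticeably higher, which is what makes the watermark statistical rather than syntactic. It also suggests why rewriting or translating weakens detection: new tokens and new contexts produce fresh, unbiased g-values.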

| Modification | SynthID Survival (Video) | Detection Confidence |
|---|---|---|
| JPEG/H.264 compression | High | 85-95% |
| Cropping (up to 50%) | High | 75-90% |
| Color filtering | High | 80-95% |
| Frame rate change | High | 85-95% |
| Resolution scaling | High | 80-90% |
| Heavy editing/compositing | Variable | 40-70% |

Understanding what SynthID can and cannot do is crucial. SynthID is specifically a "signed vs unsigned" detector - it confirms the presence of its own signature but cannot identify whether content without a watermark is AI-generated or authentic. This means SynthID won't detect AI-generated images from ChatGPT, Midjourney, DALL-E, or any non-Google AI tool. The technology exists to verify Google AI content specifically, not to serve as a universal deepfake detector. For more technical background on SynthID's capabilities, see our detailed SynthID explainer.

How to Detect AI-Generated Videos

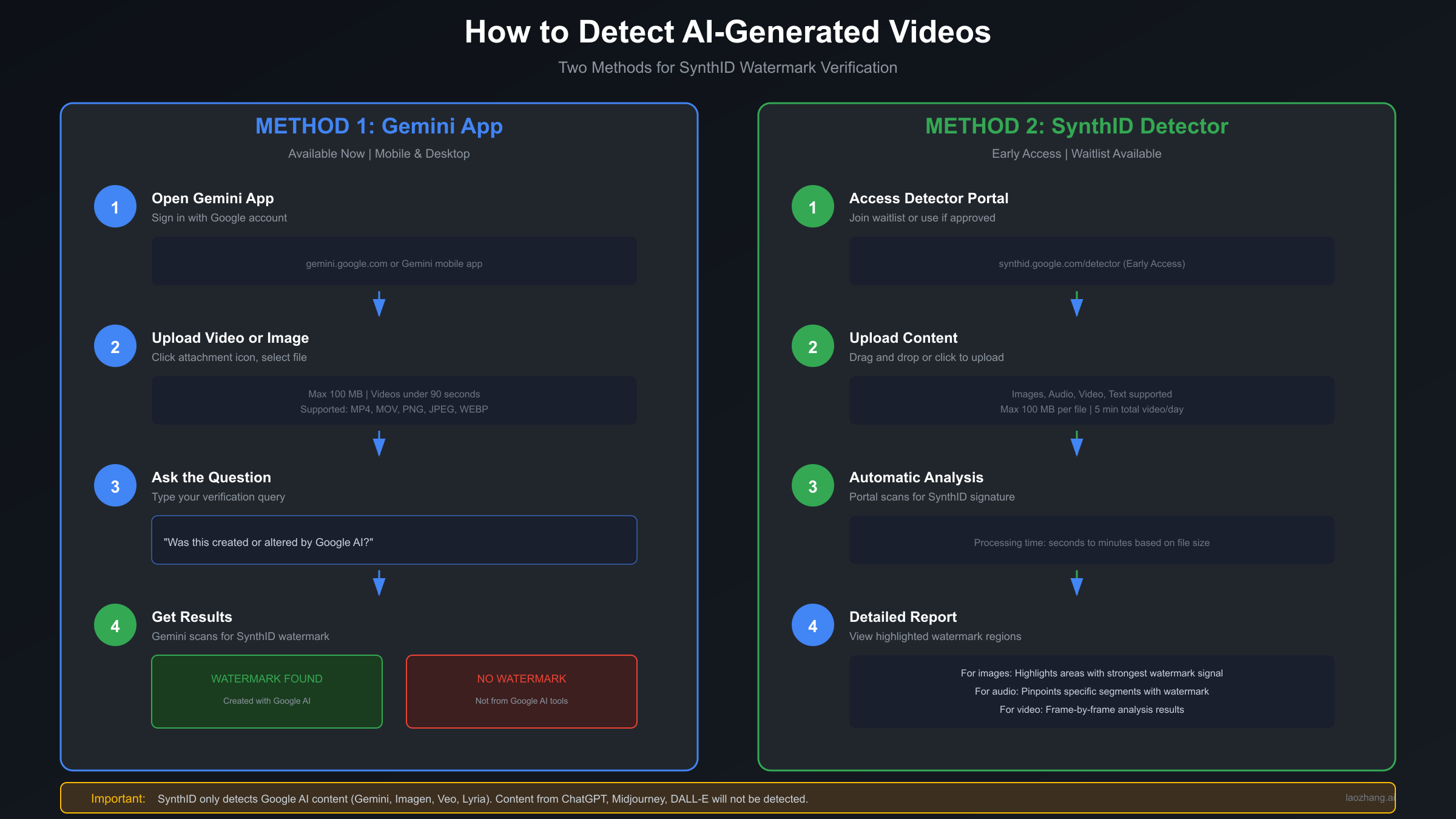

Verifying whether video content was generated by Google's AI tools requires access to SynthID detection capabilities. As of January 2026, Google provides two primary methods for verification: the Gemini App (available now to all users) and the SynthID Detector Portal (currently in early access). Both methods can identify content created with Veo, Imagen, and other Google AI tools.

The Gemini App method is accessible to anyone with a Google account. Simply open the Gemini App on mobile or desktop, sign in with your Google account, and upload the video you want to verify. Then ask Gemini directly: "Was this created or altered by Google AI?" Gemini will scan the content for SynthID watermarks and report whether it detects any signature. This method works with videos under 90 seconds and 100 MB in file size. For images, crop tightly around the subject before uploading to maximize detection accuracy.

The SynthID Detector Portal provides more detailed analysis but has limited access. Announced at Google I/O 2025 on May 20th, the Detector Portal offers professional-grade verification with visual highlighting of watermarked regions. For images, it indicates areas where watermark signal is strongest. For audio, it pinpoints specific segments containing watermarks. For video, it provides frame-by-frame analysis results. However, access is currently limited to early testers including journalists, media professionals, and researchers who have joined the waitlist.

File requirements and limitations apply to both methods. Video files must be under 100 MB and less than 90 seconds for the Gemini App, with a daily limit of 5 minutes total video verification. The Detector Portal has similar constraints. Supported formats include MP4, MOV, PNG, JPEG, and WEBP. Screenshots should be cropped tightly around the content rather than including surrounding interface elements, and collages of multiple images should be verified separately.
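
Before uploading, the limits above can be checked locally. The following is a minimal sketch: the function name is hypothetical, it assumes "100 MB" means 100,000,000 bytes, it accepts `.jpg` as an alias for JPEG, and it expects the caller to supply the video duration (for example from a media tool) rather than measuring it.

```python
from __future__ import annotations

from pathlib import Path

MAX_VIDEO_BYTES = 100 * 1000 * 1000   # assumption: decimal megabytes
MAX_VIDEO_SECONDS = 90
VIDEO_EXTS = {".mp4", ".mov"}
IMAGE_EXTS = {".png", ".jpeg", ".jpg", ".webp"}  # ".jpg" is an assumed alias


def upload_problems(filename: str, size_bytes: int,
                    duration_s: float | None = None) -> list[str]:
    """Return reasons the file would likely be rejected (empty list if none)."""
    problems = []
    ext = Path(filename).suffix.lower()
    if ext not in VIDEO_EXTS | IMAGE_EXTS:
        problems.append(f"unsupported format: {ext or 'no extension'}")
    if ext in VIDEO_EXTS:
        if size_bytes >= MAX_VIDEO_BYTES:
            problems.append("video is 100 MB or larger")
        if duration_s is not None and duration_s >= MAX_VIDEO_SECONDS:
            problems.append("video is 90 seconds or longer")
    return problems


print(upload_problems("clip.mp4", 50_000_000, duration_s=30))
print(upload_problems("clip.mov", 150_000_000, duration_s=120))
```

For a real file, `Path(path).stat().st_size` supplies the size. The 5-minute daily total is not modeled here because it depends on account state rather than on any single file.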

```python
import time

import google.generativeai as genai

genai.configure(api_key="YOUR_GEMINI_API_KEY")
model = genai.GenerativeModel("gemini-2.0-flash")

# Upload the video file and wait until the Files API has finished processing it
video_file = genai.upload_file("suspected_ai_video.mp4")
while video_file.state.name == "PROCESSING":
    time.sleep(5)
    video_file = genai.get_file(video_file.name)

# Query for the SynthID watermark
response = model.generate_content([
    video_file,
    "Was this video created or altered by Google AI? Check for SynthID watermark.",
])
print(response.text)
```

Interpreting results requires understanding the system's scope. A positive detection means the content was definitively created or modified using Google AI tools with SynthID watermarking enabled. A negative result has two possible interpretations: either the content was not created with Google AI, or the watermark was successfully removed or degraded below detection threshold. SynthID cannot verify content created by other AI systems like OpenAI's Sora, Runway's Gen-3, or Pika Labs. For guidance on generating your own Veo 3.1 content, see our comprehensive video generation guide.
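
The asymmetry between positive and negative results is easy to get wrong, so here is a small sketch that encodes it. It assumes a hypothetical numeric detector score between 0 and 1 (the Gemini App itself answers in prose), and the two thresholds are placeholders rather than published values.

```python
from enum import Enum


class Verdict(Enum):
    GOOGLE_AI = "SynthID detected: created or modified with Google AI"
    UNCERTAIN = "Weak signal: possibly a degraded watermark, treat as unresolved"
    UNKNOWN_ORIGIN = "No SynthID found: origin unknown, not proof of authenticity"


def interpret(score: float, detect_at: float = 0.9, weak_at: float = 0.5) -> Verdict:
    """Map a hypothetical detector score in [0, 1] to a verdict.

    Note that no branch ever returns "authentic": a missing watermark
    only means SynthID has nothing to say about the content.
    """
    if score >= detect_at:
        return Verdict.GOOGLE_AI
    if score >= weak_at:
        return Verdict.UNCERTAIN
    return Verdict.UNKNOWN_ORIGIN


for score in (0.97, 0.62, 0.08):
    print(score, "->", interpret(score).value)
```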

Can SynthID Watermarks Be Removed?

The question of watermark removal has significant implications for content authenticity, misinformation, and the practical utility of AI watermarking as a verification mechanism. Research published at IEEE S&P 2025 demonstrates that SynthID watermarks can be attacked, though the reality is more nuanced than simple "removed" or "not removed" outcomes.

The UnMarker attack achieves a 79% bypass rate against SynthID. Developed by researchers at the University of Waterloo and published as "UnMarker: A Universal Attack on Defensive Image Watermarking," the tool is presented by its authors as the first practical universal attack on defensive watermarking systems. Unlike previous attacks that require detector feedback or knowledge of the watermarking scheme, UnMarker uses adversarial optimization to disrupt the frequency spectra of watermarked images. The research team tested the approach against seven watermarking schemes, including Google's SynthID and Meta's StableSignature, and reported high success rates across them. Because the study targets image watermarks, the 79% figure applies most directly to still images rather than to video.

Google disputes the 79% figure and emphasizes real-world conditions. Google's position is that SynthID remains robust under typical usage scenarios and that laboratory attacks may not reflect practical threat models. The company acknowledges that SynthID is "not infallible" but argues that the cost-benefit analysis still favors watermarking. Most casual attempts to remove watermarks through common editing operations like compression, cropping, or filtering do not successfully eliminate the SynthID signature.

Most "removal" degrades detection confidence rather than completely eliminating signatures. The practical implication is that watermark removal exists on a spectrum. Sophisticated attackers using specialized tools may successfully bypass detection, while typical editing operations preserve enough signal for verification. This creates a gray area where heavily processed content may yield uncertain results from detection tools.

| Removal Method | Success Rate | Quality Impact | Technical Skill Required |
|---|---|---|---|
| Simple cropping | Low (10-30%) | Minimal | None |
| Compression | Low (5-20%) | Variable | None |
| Color manipulation | Low (15-35%) | Minimal | Low |
| UnMarker attack | High (79%) | Minimal-Moderate | High |
| Complete regeneration | N/A (new watermark) | N/A | Variable |

Legal and ethical considerations surround watermark removal. While removing watermarks from content you've legitimately created may be acceptable under Google's terms (particularly for Flow Ultra subscribers who can toggle visible watermarks), removing watermarks to misrepresent content origin raises ethical and potentially legal concerns. Google's usage policies require disclosure of AI-generated content in certain contexts, and removing identification measures to deceive audiences violates these terms. For an in-depth analysis of removal techniques and their implications, see our SynthID removability guide.

Platform-Specific Watermark Behavior

Understanding how watermarks behave across different Google platforms helps creators choose the right tool for their needs and set appropriate expectations for their content. The January 2026 Veo 3.1 update expanded availability while maintaining the core watermarking policies established with Veo 3.

Gemini App provides the most straightforward access with full watermarking. The consumer-facing Gemini App applies both visible and SynthID watermarks to all generated video content without exception. The visible watermark appears in the lower-right corner and cannot be disabled. This approach maximizes transparency for the primary consumer audience while ensuring forensic verification remains possible. The Gemini App also serves as the primary detection tool for verifying other content's AI origin.

Flow offers tiered watermark control based on subscription level. Google's dedicated video creation platform implements different policies for free and paid users. Free tier users receive both visible and SynthID watermarks with no removal options. Ultra subscribers (the premium tier) gain the ability to toggle off the visible watermark while SynthID remains always present. This distinction acknowledges that professional creators may have legitimate needs for clean output while still maintaining accountability through invisible watermarking.

API access through Gemini API and Vertex AI serves developer needs. Both programmatic interfaces apply SynthID watermarks to generated content by default. The visible watermark is also present in standard API output. Enterprise customers using Vertex AI may have additional configuration options, but SynthID embedding is not optional at any tier. This ensures that applications built on Google's video generation capabilities maintain content authenticity regardless of how the API is integrated.

| Platform | Primary Users | Visible WM Default | Visible WM Removable | SynthID |
|---|---|---|---|---|
| Gemini App | Consumers | Yes | No | Always |
| Flow Free | Creators | Yes | No | Always |
| Flow Ultra | Pro Creators | Yes | Yes (toggle) | Always |
| YouTube Shorts | Creators | Yes | No | Always |
| YouTube Create | Mobile Creators | Yes | No | Always |
| Google Vids | Workspace Users | Yes | No | Always |
| Gemini API | Developers | Yes | No | Always |
| Vertex AI | Enterprise | Yes | Contact Sales | Always |

YouTube integration involves special handling. Videos generated with Veo and uploaded to YouTube receive platform-specific labeling in addition to the embedded watermarks. YouTube's transparency labels may appear in the video description or through other disclosure mechanisms that YouTube is developing for AI-generated content. The interaction between Veo watermarks and YouTube's own content policies continues to evolve as the platform develops its approach to AI-generated media.
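
For tooling that has to pick a platform automatically, the platform table in this section can be captured as a small lookup. This is only a sketch that transcribes the table as written here: the class and function names are hypothetical, and the policies themselves may change.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class WatermarkPolicy:
    users: str
    visible_default: bool
    visible_removable: str   # "no", "toggle", or "contact sales"
    synthid_always: bool


# Transcribed from the platform table in this section.
POLICIES = {
    "Gemini App": WatermarkPolicy("Consumers", True, "no", True),
    "Flow Free": WatermarkPolicy("Creators", True, "no", True),
    "Flow Ultra": WatermarkPolicy("Pro Creators", True, "toggle", True),
    "YouTube Shorts": WatermarkPolicy("Creators", True, "no", True),
    "YouTube Create": WatermarkPolicy("Mobile Creators", True, "no", True),
    "Google Vids": WatermarkPolicy("Workspace Users", True, "no", True),
    "Gemini API": WatermarkPolicy("Developers", True, "no", True),
    "Vertex AI": WatermarkPolicy("Enterprise", True, "contact sales", True),
}


def platforms_with_toggle() -> list[str]:
    """Platforms where the user can switch the visible watermark off themselves."""
    return [name for name, p in POLICIES.items() if p.visible_removable == "toggle"]


print(platforms_with_toggle())
print(all(p.synthid_always for p in POLICIES.values()))
```

Keeping `synthid_always` as an explicit field, even though it is true everywhere, makes the key point of the table visible in code: no tier removes the invisible watermark.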

Limitations and What SynthID Can't Do

While SynthID represents a significant advancement in AI content verification, understanding its limitations is essential for anyone relying on it for verification purposes. The technology works within specific boundaries that users must understand to avoid false confidence or incorrect conclusions.

SynthID only detects Google AI content. This fundamental limitation means that videos created with OpenAI's Sora, Runway's Gen-3, Pika Labs, Minimax's Hailuo, Kling AI, or any non-Google video generation tool will return negative results even if they are definitively AI-generated. SynthID checks for its own signature specifically - it doesn't perform general AI detection analysis. If you try to verify a Midjourney image or ChatGPT-assisted content, SynthID will report no watermark found, which doesn't mean the content is authentic.

The fragmentation problem affects the entire AI detection ecosystem. Each major AI provider is developing their own watermarking system: Google has SynthID, Meta has StableSignature, and others are implementing various approaches. These systems don't interoperate. A video verified clean by SynthID might carry Meta's watermark if created with their tools. Until industry standardization occurs (if it ever does), verification requires knowing which tool created the content in the first place.

Detection confidence degrades with heavy modification. While SynthID is designed to survive common editing operations, extensive manipulation can reduce or eliminate the watermark signal. Combining multiple AI-generated clips, adding significant overlays, or running content through additional AI processing may produce results below detection threshold. The system works best with content that has been minimally modified from its original generation.

| Scenario | SynthID Capability | Alternative Approach |
|---|---|---|
| Verify Veo-generated video | Full detection | N/A - SynthID is optimal |
| Verify OpenAI/Sora content | Cannot detect | Visual analysis, metadata |
| Verify Runway/Midjourney | Cannot detect | Platform-specific checks |
| Heavily edited composite | Reduced confidence | Manual forensic analysis |
| Content without any watermark | Cannot determine origin | Expert forensic examination |

General AI detection remains an unsolved problem. For content that lacks embedded watermarks, Gemini can provide "general observations about common AI attributes such as unusual lighting, repetitive patterns, and errors in small details." However, these heuristic approaches become less reliable as AI generation quality improves. The most realistic AI-generated content may exhibit none of these tells, while some authentic content may coincidentally display similar artifacts. Relying on visual analysis alone for authenticity determination carries significant uncertainty.

For a broader comparison of AI video generation tools and their respective strengths, see our comprehensive AI video models comparison.

Future of AI Video Watermarking

The watermarking landscape continues evolving as regulatory pressure increases, industry partnerships expand, and technical capabilities advance. Understanding where the technology is heading helps creators and platforms prepare for upcoming changes.

NVIDIA partnership expands SynthID beyond Google. Google has partnered with NVIDIA to embed SynthID watermarks in videos generated by NVIDIA's Cosmos preview NIM microservice. This marks the first major expansion of SynthID to non-Google platforms and signals a potential path toward broader industry adoption. As more AI video tools incorporate SynthID, the verification ecosystem becomes more comprehensive.

Regulatory pressure accelerates disclosure requirements. The EU AI Act and similar regulations worldwide are establishing legal requirements for AI content disclosure. These regulations may mandate specific watermarking implementations or disclosure mechanisms beyond voluntary industry efforts. Content creators operating internationally should anticipate more stringent requirements emerging in 2026 and beyond.

Industry standardization efforts continue. Initiatives like C2PA (Coalition for Content Provenance and Authenticity) are working toward interoperable content authenticity standards. While current watermarking systems remain fragmented by platform, future developments may enable cross-platform verification. The technical challenges are significant, but the direction suggests eventual convergence.

For developers and creators needing robust multi-provider solutions, services like laozhang.ai provide unified API access across different AI models including Veo 3.1, offering consistent interfaces while each provider maintains their own watermarking policies. This approach lets you focus on creation while platforms handle authenticity measures according to their respective implementations.

Recommendations for creators navigating watermarks today:

First, understand your platform's specific watermark policies before committing to content creation. Flow Ultra's toggle option may be worth the subscription for professional use cases requiring clean output. Second, maintain records of your AI-generated content creation including prompts, settings, and original outputs. This documentation can help establish provenance if questions arise later. Third, plan for disclosure requirements by incorporating AI generation acknowledgment into your workflows rather than treating it as an afterthought. Fourth, stay informed about policy changes as both platform rules and regulations evolve rapidly. Fifth, consider watermarking as part of the content lifecycle rather than an obstacle - the transparency it provides may become a competitive advantage as audiences increasingly value authenticity signals.
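
The record-keeping recommendation can be as simple as a JSON file stored next to each output. The sketch below is one possible layout, not a standard: the field names and the `.provenance.json` suffix are assumptions, and the SHA-256 hash is there so the record can later be matched to the exact file it describes.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def provenance_record(video_path: str | Path, prompt: str, settings: dict) -> dict:
    """Describe one generated file: what it is, how it was made, and when."""
    path = Path(video_path)
    return {
        "file": path.name,
        "sha256": hashlib.sha256(path.read_bytes()).hexdigest(),
        "prompt": prompt,
        "settings": settings,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def write_sidecar(video_path: str | Path, prompt: str, settings: dict) -> Path:
    """Write the record next to the video, e.g. clip.mp4 -> clip.provenance.json."""
    sidecar = Path(video_path).with_suffix(".provenance.json")
    record = provenance_record(video_path, prompt, settings)
    sidecar.write_text(json.dumps(record, indent=2))
    return sidecar
```

Calling `write_sidecar("clip.mp4", "a red fox in snow", {"model": "veo-3.1"})` right after generation, before any editing, preserves a hash of the original output. The settings dictionary here is only an example.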

The dual watermarking system in Veo 3.1 represents Google's current balance between creator flexibility and content authenticity. As the technology and regulatory landscape mature, expect continued refinement of how AI-generated content is marked, detected, and disclosed. For implementation guides and API references, visit docs.laozhang.ai.