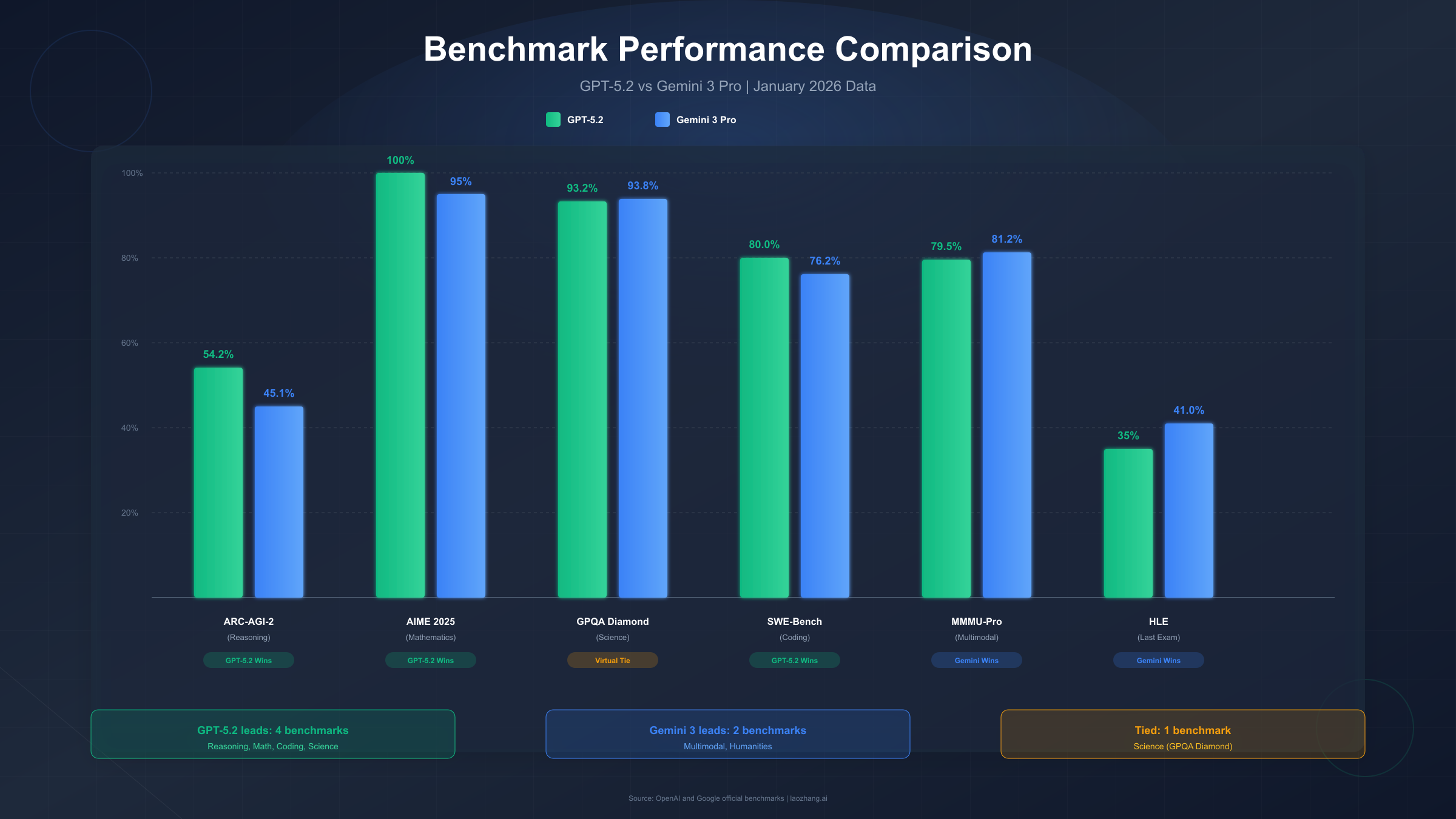

OpenAI's GPT-5.2 and Google's Gemini 3 Pro represent the cutting edge of artificial intelligence in January 2026. In direct benchmark comparisons, GPT-5.2 leads in abstract reasoning with a 54.2% score on ARC-AGI-2 (Pro mode) compared to 45.1% for Gemini 3 Deep Think, achieves a perfect 100% on the AIME 2025 mathematics test, and scores about 80% on the SWE-bench Verified coding benchmark. Gemini 3 Pro counters with superior multimodal capabilities, scoring 81.2% on MMMU-Pro versus GPT-5.2's 79.5%, and offers a massive 1-million-token context window for long document processing. API pricing slightly favors GPT-5.2 on input at $1.75 per million tokens versus Gemini's $2.00, though Gemini is cheaper on output ($12 versus $14 per million tokens) and often provides faster output at 148 tokens per second compared to GPT-5.2's 102 tokens per second.

Understanding GPT-5 and Gemini: The Current Model Landscape

The artificial intelligence landscape has evolved dramatically since August 2025, when OpenAI unveiled GPT-5 during a livestream event that marked a significant departure from incremental model updates. This release introduced a unified system architecture featuring multiple sub-models—including main, mini, thinking, and nano variants—along with a real-time router that dynamically selects the optimal model based on conversation complexity, tool requirements, and user intent. The GPT-5 family superseded all previous OpenAI models including GPT-4, GPT-4o, GPT-4.1, GPT-4.5, and reasoning models like o3 and o4-mini.

OpenAI's release strategy accelerated following GPT-5's debut. In October 2025, the company launched GPT-5.1, which balanced intelligence and speed for agentic and coding tasks. When Google released Gemini 3 Pro on November 18, 2025, reports emerged of a "code red" response within OpenAI, leading to the rapid deployment of GPT-5.2 on December 11, 2025. This latest model represents the most capable GPT variant for professional knowledge work, featuring three tiers: Instant for quick responses, Thinking for deeper reasoning, and Pro for maximum capability.

Google's Gemini evolution followed a parallel trajectory. Gemini 2.5 Pro established Google's competitive position in mid-2025, offering impressive multimodal capabilities and integration with Google's ecosystem. The November 2025 release of Gemini 3 Pro Preview pushed boundaries further, emphasizing multimodal understanding across text, images, video, and audio while maintaining a massive 1-million-token context window. Google positioned this release as their most advanced generative AI model, built specifically for agentic workflows and deep reasoning tasks.

Understanding this timeline matters because benchmark comparisons must account for which specific model versions are being tested. When articles reference "GPT-5 vs Gemini," they might mean different version combinations. For the most current comparison relevant to January 2026, we focus primarily on GPT-5.2 (including its Thinking and Pro variants) versus Gemini 3 Pro and its Deep Think mode. Both companies continue rapid iteration, so readers should verify version numbers when consulting benchmark data.

Benchmark Performance: Head-to-Head Comparison

Benchmark testing reveals distinct strengths for each model, though interpreting these results requires understanding what each test actually measures and why it matters for practical applications. The most discussed benchmark in recent months has been ARC-AGI-2, designed specifically to test genuine reasoning ability while resisting memorization—a weakness that plagued earlier evaluation methods.

Abstract Reasoning and Problem Solving

The ARC-AGI-2 benchmark produced perhaps the most striking results in the GPT-5.2 announcement. OpenAI's model scored 52.9% in Thinking mode and 54.2% in Pro mode, significantly outperforming both Claude Opus 4.5 at 37.6% and Gemini 3 Deep Think at 45.1%. This gap matters because ARC-AGI-2 tests the ability to solve novel problems that cannot be memorized from training data—the type of reasoning required when encountering genuinely new situations.

What does this mean practically? When you need an AI to figure out something it hasn't seen before—whether that's understanding a unique business problem, developing a novel algorithm, or reasoning through an unprecedented situation—GPT-5.2's reasoning advantage becomes meaningful. However, the 54.2% score also means it fails nearly half the time on these challenging tasks, reminding us that even frontier models have significant limitations.

Mathematics Performance

Mathematics benchmarks tell a cleaner story. GPT-5.2 achieved a perfect 100% on AIME 2025, the American Invitational Mathematics Examination, without using any external tools. Gemini 3 Pro reached approximately 95%, and only achieved 100% when code execution was enabled. On more challenging mathematical frontiers, GPT-5.2 scored 40.3% on FrontierMath, a benchmark designed to test mathematical reasoning at the limits of current AI capabilities.

For users needing reliable mathematical computation, whether for financial modeling, scientific calculations, or educational applications, these scores suggest GPT-5.2 as the more dependable choice. The difference between 100% and roughly 95% might seem small, but in production systems where mathematical errors cascade, that 5-percentage-point gap can be significant.
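To make the cascading-error point concrete, here is a minimal sketch of how per-step accuracy compounds across a chain of dependent calculations. It rests on two loud assumptions that the benchmark data does not establish: that a benchmark score can stand in for per-step accuracy, and that steps fail independently. The function name `chain_success_probability` is hypothetical.

```python
def chain_success_probability(step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct.

    ASSUMPTION: steps succeed or fail independently, and each step has the
    same accuracy. Real pipelines rarely satisfy either condition exactly.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return step_accuracy ** steps


if __name__ == "__main__":
    # Compare a 100%-accurate step with a 95%-accurate step over longer chains.
    for steps in (1, 5, 10, 20):
        perfect = chain_success_probability(1.00, steps)
        imperfect = chain_success_probability(0.95, steps)
        print(f"{steps:>2} steps: {perfect:.3f} vs {imperfect:.3f}")
```

Under these assumptions, a ten-step chain at 95% per step finishes without error only about 60% of the time, which is why a small per-step gap can matter in multi-stage workflows.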

Science and Graduate-Level Questions

Both models perform exceptionally well on GPQA Diamond, a graduate-level science benchmark. GPT-5.2 Pro scored 93.2% while Gemini 3 Deep Think achieved 93.8%—effectively a tie within reasonable variance. This near-parity suggests that for general scientific question-answering, either model will perform comparably well. The choice between them for science applications should consider other factors like context length, multimodal needs, or ecosystem integration rather than raw benchmark scores.

Interestingly, Gemini 3 Deep Think maintains the highest published score on "Humanity's Last Exam" at 41.0% without tools, a benchmark specifically designed to test the limits of AI knowledge and reasoning. Google's model also achieved gold-medal performance on both the International Mathematical Olympiad and International Collegiate Programming Contest World Finals, demonstrating strength in competition-level problem solving.

Coding and Software Development

Coding benchmarks have become critical differentiators as more developers integrate AI into their workflows. On SWE-bench Verified, which tests real bug fixes from GitHub repositories, GPT-5.2 scores approximately 80.0%, ahead of Gemini 3 Pro's 76.2%. Claude Opus 4.5 currently leads this benchmark at 80.9%, though early results remain somewhat unstable. For the cost side of AI-assisted development workflows, see our detailed OpenAI API pricing guide.

GPT-5.2 demonstrated particular strength on SWE-Bench Pro, achieving roughly 55.6% and highlighting improved multi-language and multi-file coding performance. This matters for real-world development where projects span multiple files and programming languages. Gemini 3 Pro remains capable in coding tasks but often produces simpler code with better readability—a trade-off some developers prefer.

Coding and Development Capabilities

Beyond benchmark scores, practical coding performance involves factors like code quality, explanation clarity, debugging capability, and integration with development workflows. Both models have evolved significantly in their ability to assist developers, but they approach the task differently.

GPT-5.2's architecture emphasizes tool use and multi-step reasoning, making it particularly effective for complex debugging scenarios. When presented with error messages, GPT-5.2 tends to trace through potential causes systematically, often identifying root causes that require understanding multiple interacting components. The model's improved performance on multi-file coding benchmarks reflects this architectural strength—it can maintain coherence across files and reason about how changes in one location affect behavior elsewhere.

Gemini 3 Pro brings different strengths to development work. Its massive 1-million-token context window means developers can feed entire codebases for analysis without hitting context limits. For large-scale code review, architectural analysis, or understanding legacy systems, this extended context proves invaluable. The model's integration with Google Cloud services also provides advantages for teams already working within Google's ecosystem.

Language Support and Versatility

Both models support major programming languages including Python, JavaScript, TypeScript, Java, C++, Go, and Rust. However, testing reveals nuanced differences in less common languages and frameworks. GPT-5.2 generally produces more idiomatic code in mainstream languages, while Gemini 3 Pro sometimes offers better handling of Google-specific technologies like Flutter, Dart, and Angular.

For developers building with multiple AI models, services like laozhang.ai provide unified API access to both GPT-5.2 and Gemini 3 Pro, allowing you to switch between models based on task requirements without managing multiple integrations. This flexibility becomes valuable when different parts of a project benefit from different model strengths.

Code Generation Quality

In practical code generation, GPT-5.2 tends toward more sophisticated solutions that leverage advanced language features and design patterns. This approach excels when working on complex systems but can overcomplicate simple tasks. Gemini 3 Pro often produces cleaner, more readable code that's easier to maintain but may miss optimization opportunities in performance-critical sections.

The choice between models often depends on team composition and project phase. Early-stage projects benefiting from rapid prototyping might favor Gemini's readability. Mature systems requiring careful debugging and optimization might lean toward GPT-5.2's analytical depth.

Multimodal and Vision Capabilities

Multimodal AI—the ability to understand and generate content across text, images, video, and audio—represents a key battleground between these models. Google's Gemini architecture was designed from the ground up as a multimodal system, while OpenAI's GPT-5.2 added multimodal capabilities to an architecture originally optimized for text.

Gemini 3 Pro excels in vision tasks, scoring 81.2% on MMMU-Pro compared to GPT-5.2's 79.5%. This benchmark tests multimodal understanding and reasoning across diverse content types. The gap widens for video understanding, where Gemini 3 Pro's native video processing outperforms GPT-5.2's frame-by-frame analysis approach. For applications involving document analysis with embedded images, chart interpretation, or video content understanding, Gemini 3 Pro currently offers the stronger solution.

GPT-5.2's multimodal capabilities, while trailing Gemini's in raw benchmarks, provide better integration with the model's reasoning strengths. When analyzing images requires complex logical inference—understanding cause-and-effect in diagrams, for instance—GPT-5.2's reasoning advantage partially compensates for its vision limitations. The model also offers strong image generation capabilities through integration with DALL-E 3, though this represents a different use case than image understanding.

Audio and Real-Time Processing

Both models now support audio input and output, enabling voice-based interactions. Gemini 3 Pro's tighter integration with Google's speech recognition and synthesis provides lower latency for real-time conversational applications. GPT-5.2's audio capabilities work well but feel like a separate system integrated with the core model rather than a native capability.

For developers building voice-first applications or real-time translation systems, Gemini's audio integration currently offers smoother implementation. Applications prioritizing reasoning quality over interaction speed might still prefer GPT-5.2 despite the integration overhead.

Pricing Comparison: API and Subscription Costs

Cost considerations often determine practical model selection, particularly for applications processing significant volumes. Both companies offer tiered pricing with substantial differences in structure and optimization potential.

API Pricing Structure

GPT-5.2's API pricing starts at $1.75 per million input tokens and $14 per million output tokens for the standard tier. OpenAI offers a significant 90% discount for cached inputs, reducing repeat-query costs dramatically for applications that reuse context. The pricing tier structure means costs vary based on usage patterns and pre-commitment levels.

Gemini 3 Pro prices at approximately $2.00 per million input tokens and $12 per million output tokens. Google's batch processing option offers roughly 50% cost reduction for non-time-sensitive workloads. For high-volume applications with flexible timing requirements, this batch pricing can substantially reduce total costs.
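The two discounts work differently, and a short sketch shows the effect of each. The prices and discount rates below are the ones quoted above; the function names are hypothetical, and applying the batch discount uniformly to both input and output prices is an assumption, since the figure given is only "roughly 50%".

```python
GPT52_INPUT_PRICE = 1.75     # USD per million input tokens (quoted above)
CACHE_DISCOUNT = 0.90        # discount on cached input tokens (quoted above)
GEMINI_INPUT_PRICE = 2.00    # USD per million input tokens (quoted above)
GEMINI_OUTPUT_PRICE = 12.00  # USD per million output tokens (quoted above)
BATCH_DISCOUNT = 0.50        # ASSUMPTION: applied equally to input and output


def blended_input_price(base_price: float, cached_fraction: float,
                        discount: float = CACHE_DISCOUNT) -> float:
    """Average input price when a fraction of input tokens hits the cache."""
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    cached_price = base_price * (1.0 - discount)
    return cached_fraction * cached_price + (1.0 - cached_fraction) * base_price


def batch_price(base_price: float, discount: float = BATCH_DISCOUNT) -> float:
    """Price per million tokens after the batch discount."""
    return base_price * (1.0 - discount)


if __name__ == "__main__":
    # Example: 80% of each prompt is a reused system prompt or document.
    print(f"GPT-5.2 blended input: ${blended_input_price(GPT52_INPUT_PRICE, 0.8):.2f}/M")
    print(f"Gemini batch input:    ${batch_price(GEMINI_INPUT_PRICE):.2f}/M")
    print(f"Gemini batch output:   ${batch_price(GEMINI_OUTPUT_PRICE):.2f}/M")
```

Caching only lowers the input side and only for repeated context, while batch processing trades latency for a lower rate, so which discount helps more depends on the workload.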

Cost-Per-Task Analysis

Raw per-token pricing tells only part of the story. GPT-5.2's efficiency often results in fewer tokens needed to accomplish the same task, potentially offsetting its higher per-token output cost. For a typical customer support query requiring 500 input tokens and 200 output tokens, GPT-5.2 costs approximately $0.0037 while Gemini 3 Pro costs approximately $0.0034. The gap of roughly $0.0003 is negligible for a single query but adds up at scale.
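The per-query figures follow directly from the list prices. The sketch below reproduces the arithmetic; the dictionary keys are illustrative labels rather than confirmed API model identifiers, and `query_cost` is a hypothetical helper.

```python
# (input price, output price) in USD per million tokens, as quoted in this article.
# The keys are illustrative labels, not confirmed API model IDs.
PRICES = {
    "gpt-5.2": (1.75, 14.00),
    "gemini-3-pro": (2.00, 12.00),
}


def query_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request at list price, ignoring any discounts."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    for model in PRICES:
        cost = query_cost(model, input_tokens=500, output_tokens=200)
        print(f"{model}: ${cost:.6f} per query")
```

For the 500-in, 200-out query this gives $0.003675 and $0.003400. At a hypothetical ten million such queries per month, the $0.000275 difference comes to about $2,750.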

Cost differences become more meaningful in complex tasks requiring extended reasoning. GPT-5.2's Thinking and Pro modes consume more tokens but often solve problems in fewer attempts. Gemini 3 Pro's faster output speed (148 tokens/second versus 102 tokens/second) reduces wall-clock time but doesn't affect token-based costs.

Consumer Subscription Options

For individual users, ChatGPT Plus at $20/month provides access to GPT-5.2 with usage caps. ChatGPT Pro at $200/month removes most caps and enables GPT-5.2 Pro mode for maximum capability. Google's Gemini Advanced costs $19.99/month and includes 2TB of cloud storage alongside AI access—additional value for users needing storage.

Both platforms offer free tiers, but with significant limitations. Gemini's free tier uses less capable models than the Advanced subscription, while ChatGPT's free tier provides limited GPT-5 access before defaulting to older models. For serious usage, paid subscriptions remain necessary.

For teams seeking to test multiple models without committing to multiple subscriptions, API aggregation services offer an alternative. The Gemini API pricing guide provides detailed cost breakdowns for Google's offerings specifically.

Real-World Use Cases: When to Use Each

Abstract benchmarks and pricing only matter insofar as they translate to practical outcomes. Different applications naturally favor different model strengths, and understanding these patterns helps make appropriate selections.

Enterprise Knowledge Work

For professional knowledge workers handling complex analysis, report generation, and strategic planning, GPT-5.2's reasoning capabilities provide tangible advantages. The model excels at synthesizing information from multiple sources, identifying logical inconsistencies, and producing nuanced analysis. OpenAI claims GPT-5.2 "beats or ties with top industry professionals on 70.9% of comparisons" on real knowledge work tasks—a metric suggesting genuine utility for white-collar automation.

Gemini 3 Pro offers advantages for knowledge work involving extensive documentation. Its 1-million-token context window means entire policy manuals, contract libraries, or research paper collections can be analyzed in a single query. For legal review, compliance checking, or academic research requiring deep document understanding, this extended context proves valuable.

Software Development

Development workflows benefit from both models depending on the specific task. GPT-5.2 shines in debugging complex issues, particularly those involving subtle logical errors or race conditions. Its ability to reason through code execution and identify non-obvious failure modes saves significant developer time. The model also produces more sophisticated test cases, often catching edge cases that developers might miss.

Gemini 3 Pro excels at code review for large pull requests, architectural documentation, and understanding legacy codebases. When developers need to understand a new codebase quickly, feeding substantial portions to Gemini's massive context window accelerates onboarding. The model's integration with Google Cloud also simplifies deployment for teams using GCP.

Content Creation and Marketing

Both models perform well for content creation, but with different strengths. GPT-5.2 produces more varied and creative writing, with better ability to maintain consistent voice across long-form content. The model handles nuanced tone adjustments effectively—shifting from professional to casual or adapting to different brand voices.

Gemini 3 Pro offers advantages for content involving research synthesis or fact-checking against web sources. Its real-time web access provides current information without the knowledge cutoff limitations affecting GPT-5.2. For content requiring the latest statistics, news references, or trend analysis, Gemini's grounding capabilities reduce hallucination risk.

Data Analysis and Research

Scientific and analytical work benefits from GPT-5.2's mathematical precision and logical reasoning. The perfect AIME score suggests reliable mathematical reasoning, which is important for statistical analysis, financial modeling, or scientific calculations. Researchers who rely on AI for numerical work will likely find GPT-5.2 more dependable.

Gemini 3 Pro's multimodal capabilities suit research involving visual data—analyzing charts, graphs, experimental images, or video recordings. The model's ability to process these diverse inputs natively, combined with extended context for large datasets, creates advantages for data-rich research environments.

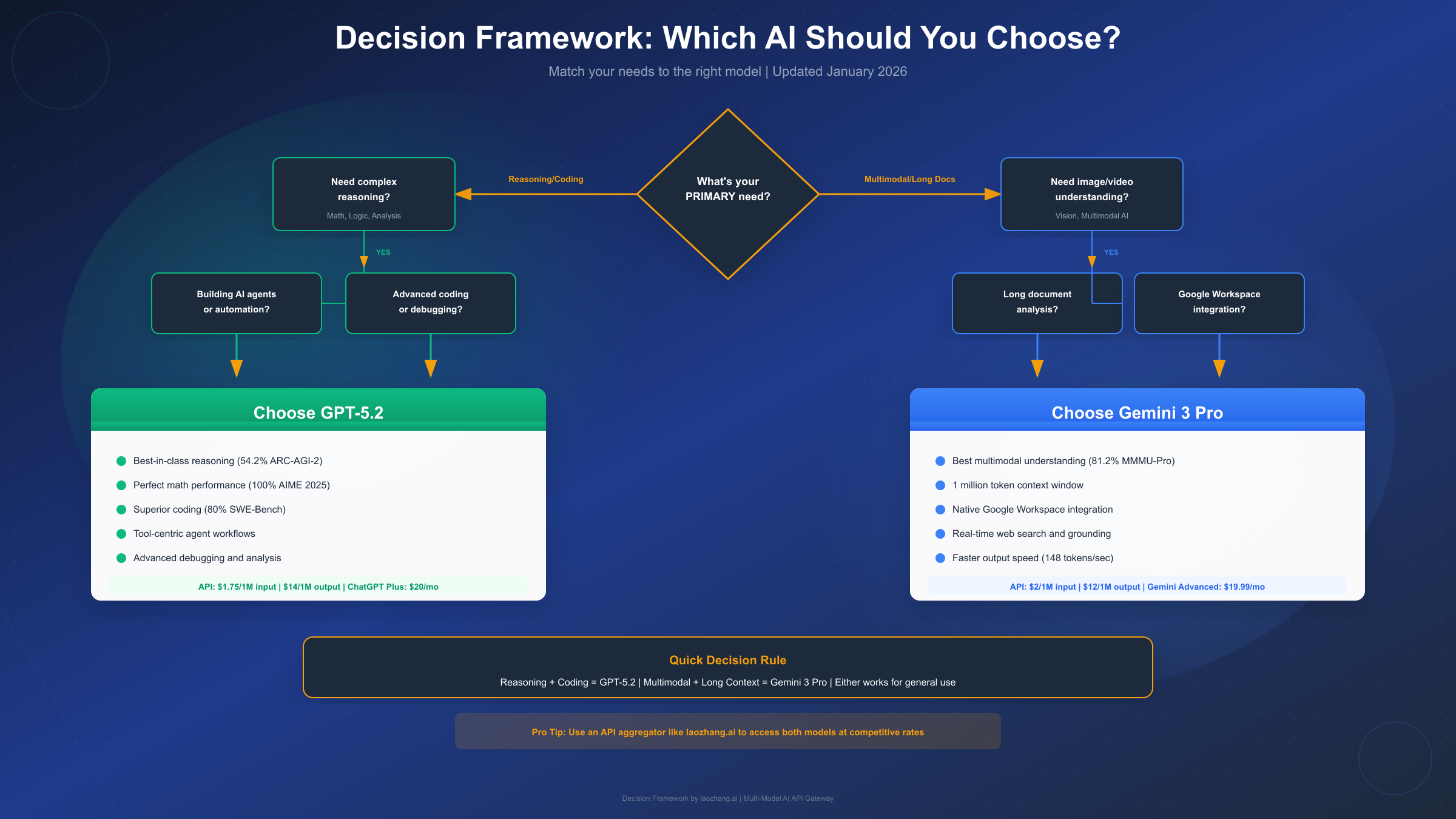

Which Should You Choose? Decision Framework

Selecting between GPT-5.2 and Gemini 3 Pro requires matching model capabilities to specific requirements. Rather than declaring a universal winner, consider these decision factors:

Choose GPT-5.2 when:

Your primary needs involve complex reasoning, mathematical computation, or sophisticated coding tasks. The model's lead over Gemini 3 Pro on ARC-AGI-2, AIME, and SWE-bench Verified reflects genuine advantages for these applications. Organizations building AI agents, automation systems, or tool-using applications will find GPT-5.2's architecture well-suited to these patterns.

Teams already invested in OpenAI's ecosystem through Azure integration, existing API implementations, or ChatGPT Enterprise will face lower switching costs by staying with GPT-5.2. The model's continuing updates and OpenAI's development velocity suggest ongoing improvements.

Choose Gemini 3 Pro when:

Multimodal understanding, extended context processing, or Google ecosystem integration drive your requirements. Applications analyzing images, processing video, or handling documents with mixed media will benefit from Gemini's native multimodal architecture.

The 1-million-token context window proves decisive for long document analysis, codebase understanding, or applications requiring extensive context retention. If your use cases regularly exceed 256K tokens—GPT-5.2's effective context limit—Gemini becomes the practical choice.

Organizations using Google Workspace will find seamless integration valuable. Gemini's access to Gmail, Docs, and Drive context enables workflows impossible with standalone models. For Google Cloud Platform users, Gemini's native GCP integration simplifies deployment and reduces operational overhead.

Consider using both:

Many organizations achieve optimal results by using both models for different tasks. GPT-5.2 handles reasoning-heavy work while Gemini 3 Pro processes multimodal content. API aggregation through services like laozhang.ai enables this hybrid approach without managing multiple integrations, with pricing that matches or beats direct access while providing access to the full range of model capabilities.

The choice between models is not binary. As both companies continue rapid development, today's gaps may narrow or shift. Building systems flexible enough to switch models based on task requirements provides the most resilient long-term architecture.
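The decision factors above can be sketched as a small routing function. This is one possible reading of the framework, not a recommended production design: the model labels are illustrative strings rather than confirmed API model IDs, the `Task` fields are hypothetical, the 256,000-token threshold is the approximate limit cited in this article, and the fallback default is an assumption.

```python
from dataclasses import dataclass

GPT = "gpt-5.2"            # illustrative label, not a confirmed API model ID
GEMINI = "gemini-3-pro"    # illustrative label, not a confirmed API model ID
GPT_CONTEXT_LIMIT = 256_000  # approximate effective limit cited in this article


@dataclass
class Task:
    """Hypothetical description of a request, used only for routing."""
    context_tokens: int = 0
    has_image_or_video: bool = False
    reasoning_heavy: bool = False


def choose_model(task: Task, default: str = GPT) -> str:
    """Pick a model label using the decision factors from this section."""
    # Hard constraint first: the context must fit in the model's window.
    if task.context_tokens > GPT_CONTEXT_LIMIT:
        return GEMINI
    # Multimodal input favors Gemini's native image and video handling.
    if task.has_image_or_video:
        return GEMINI
    # Reasoning, math, and complex coding favor GPT-5.2.
    if task.reasoning_heavy:
        return GPT
    # ASSUMPTION: the fallback is a team preference, not a benchmark result.
    return default


if __name__ == "__main__":
    print(choose_model(Task(context_tokens=500_000)))
    print(choose_model(Task(reasoning_heavy=True)))
```

Keeping the routing rules in one function means that when benchmark gaps shift, only this function needs to change rather than every call site.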

FAQ: Common Questions Answered

Is GPT-5 better than Gemini for coding?

GPT-5.2 currently leads Gemini 3 Pro on the coding benchmarks cited here, scoring about 80% on SWE-bench Verified compared to Gemini 3 Pro's 76.2% (Claude Opus 4.5 tops that benchmark at 80.9%). More importantly, GPT-5.2 excels at complex debugging and multi-file reasoning. However, Gemini 3 Pro's extended context window proves advantageous for understanding large codebases. For most development tasks, GPT-5.2 provides an edge; for code review of extensive PRs or architectural analysis, Gemini's context advantage matters more.

Which is cheaper: GPT-5 or Gemini API?

Input pricing slightly favors GPT-5.2 at $1.75/1M tokens versus Gemini's $2.00/1M. Output pricing favors Gemini at $12/1M versus GPT-5.2's $14/1M. Total cost depends on your input/output ratio and whether you can leverage GPT-5.2's 90% cached input discount or Gemini's batch processing discount. For typical conversational use, costs are comparable. For applications with high output volume, Gemini often costs less. For applications with repeated contexts, GPT-5.2's caching provides significant savings.
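Because one model is cheaper on input and the other on output, there is a break-even ratio of input to output tokens. The sketch below derives it from the list prices quoted in this answer, ignoring caching and batch discounts; the function name is hypothetical.

```python
def break_even_input_output_ratio(input_a: float, output_a: float,
                                  input_b: float, output_b: float) -> float:
    """Input/output token ratio at which models A and B cost the same.

    Solves input_a*I + output_a*O == input_b*I + output_b*O for I/O.
    Expects A to be cheaper on input and B to be cheaper on output.
    """
    if input_b <= input_a or output_a <= output_b:
        raise ValueError("expected A cheaper on input and B cheaper on output")
    return (output_a - output_b) / (input_b - input_a)


if __name__ == "__main__":
    # A = GPT-5.2 ($1.75 in, $14 out), B = Gemini 3 Pro ($2.00 in, $12 out).
    ratio = break_even_input_output_ratio(1.75, 14.00, 2.00, 12.00)
    print(f"GPT-5.2 is cheaper when input tokens exceed {ratio:.0f}x output tokens")
```

With these prices the break-even ratio is 8: at list price, GPT-5.2 costs less only when a request has more than eight input tokens per output token, such as summarization or classification over long prompts.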

Can Gemini 3 Pro handle longer documents than GPT-5?

Yes, significantly. Gemini 3 Pro supports up to 1 million tokens of context—roughly 750,000 words or several books. GPT-5.2 effectively handles around 256K tokens with diminishing performance at the upper limits. For analyzing lengthy documents, entire codebases, or extensive conversation histories, Gemini's context advantage is decisive.
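A rough pre-flight check can tell you whether a document is likely to fit. The sketch below uses the 0.75 words-per-token ratio implied by the figures above, which is only a rule of thumb for English text; real token counts depend on the tokenizer and the content. The limits are the approximate values cited in this article (treating "256K" as 256,000 is an assumption), and the keys are illustrative labels rather than API model IDs.

```python
import math

WORDS_PER_TOKEN = 0.75  # rule of thumb implied above: 1M tokens ~ 750,000 words

# Approximate context limits cited in this article; keys are illustrative labels.
CONTEXT_LIMITS = {
    "gpt-5.2": 256_000,
    "gemini-3-pro": 1_000_000,
}


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate from a word count (not a real tokenizer)."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def models_that_fit(word_count: int, reserved_output_tokens: int = 0) -> list[str]:
    """Return the model labels whose context window can hold the document."""
    needed = estimate_tokens(word_count) + reserved_output_tokens
    return [name for name, limit in CONTEXT_LIMITS.items() if needed <= limit]


if __name__ == "__main__":
    for words in (150_000, 300_000, 900_000):
        print(words, "words ->", models_that_fit(words))
```

For anything close to a limit, count tokens with the provider's own tokenizer instead of this estimate, and leave headroom for the response.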

Which model is better for business use?

Both models serve business applications well, but for different scenarios. GPT-5.2 excels at knowledge work, complex analysis, and automated decision-making where reasoning quality determines outcomes. Gemini 3 Pro provides advantages for businesses heavily invested in Google Workspace, needing multimodal capabilities, or processing extensive documentation. Enterprise plans for both offer security, compliance, and support features required for business deployment.

How fast are GPT-5 and Gemini?

Gemini 3 Pro outputs approximately 148 tokens per second versus GPT-5.2's 102 tokens per second—roughly 45% faster raw output. However, wall-clock time for task completion often favors GPT-5.2's higher efficiency, as it typically requires fewer tokens to accomplish the same goal. For real-time conversational applications where perceived speed matters, Gemini feels faster. For batch processing where completion matters more than incremental output, the difference is less significant.
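The trade-off between raw speed and token efficiency can be worked out from the two throughput figures. This is a simplified sketch: it models only steady-state output speed and ignores time to first token, network latency, and any hidden reasoning time, all of which affect real wall-clock results. The function name is hypothetical.

```python
GEMINI_TOKENS_PER_SECOND = 148.0  # figure quoted above
GPT_TOKENS_PER_SECOND = 102.0     # figure quoted above


def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time to stream a response at a constant output rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


# How much faster Gemini streams tokens (about 1.45x).
speedup = GEMINI_TOKENS_PER_SECOND / GPT_TOKENS_PER_SECOND

# GPT-5.2 finishes first only if its answer uses fewer than this fraction
# of the tokens Gemini needs for the same task (about 0.69).
break_even_token_ratio = GPT_TOKENS_PER_SECOND / GEMINI_TOKENS_PER_SECOND

if __name__ == "__main__":
    print(f"1,000 tokens on Gemini:  {generation_seconds(1000, GEMINI_TOKENS_PER_SECOND):.1f} s")
    print(f"1,000 tokens on GPT-5.2: {generation_seconds(1000, GPT_TOKENS_PER_SECOND):.1f} s")
    print(f"Speedup: {speedup:.2f}x, break-even token ratio: {break_even_token_ratio:.2f}")
```

Put differently, under this simple model the efficiency argument holds only when GPT-5.2 solves the task in roughly 69% or fewer of the output tokens Gemini would use.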

Will GPT-5.2 or Gemini 3 Pro receive updates?

Both models will continue receiving updates. OpenAI's development cadence suggests GPT-5.3 or beyond may appear in 2026. Google continues iterating on Gemini with frequent capability additions. Building applications that can switch between model versions and providers provides the most future-proof architecture. Our Gemini 3.0 API guide covers the latest integration patterns for Google's offerings.

Which is better for image understanding?

Gemini 3 Pro leads on multimodal benchmarks with 81.2% on MMMU-Pro versus GPT-5.2's 79.5%. For video understanding specifically, Gemini's native video processing significantly outperforms GPT-5.2's frame-by-frame approach. If your application centers on image or video analysis, Gemini currently provides the stronger solution.

The AI landscape continues evolving rapidly. While this comparison reflects the state of play in January 2026, readers should verify specific capabilities and pricing against current documentation before making significant commitments. Both GPT-5.2 and Gemini 3 Pro represent remarkable achievements in artificial intelligence, and either can serve most applications effectively—the choice ultimately depends on matching specific strengths to specific requirements.