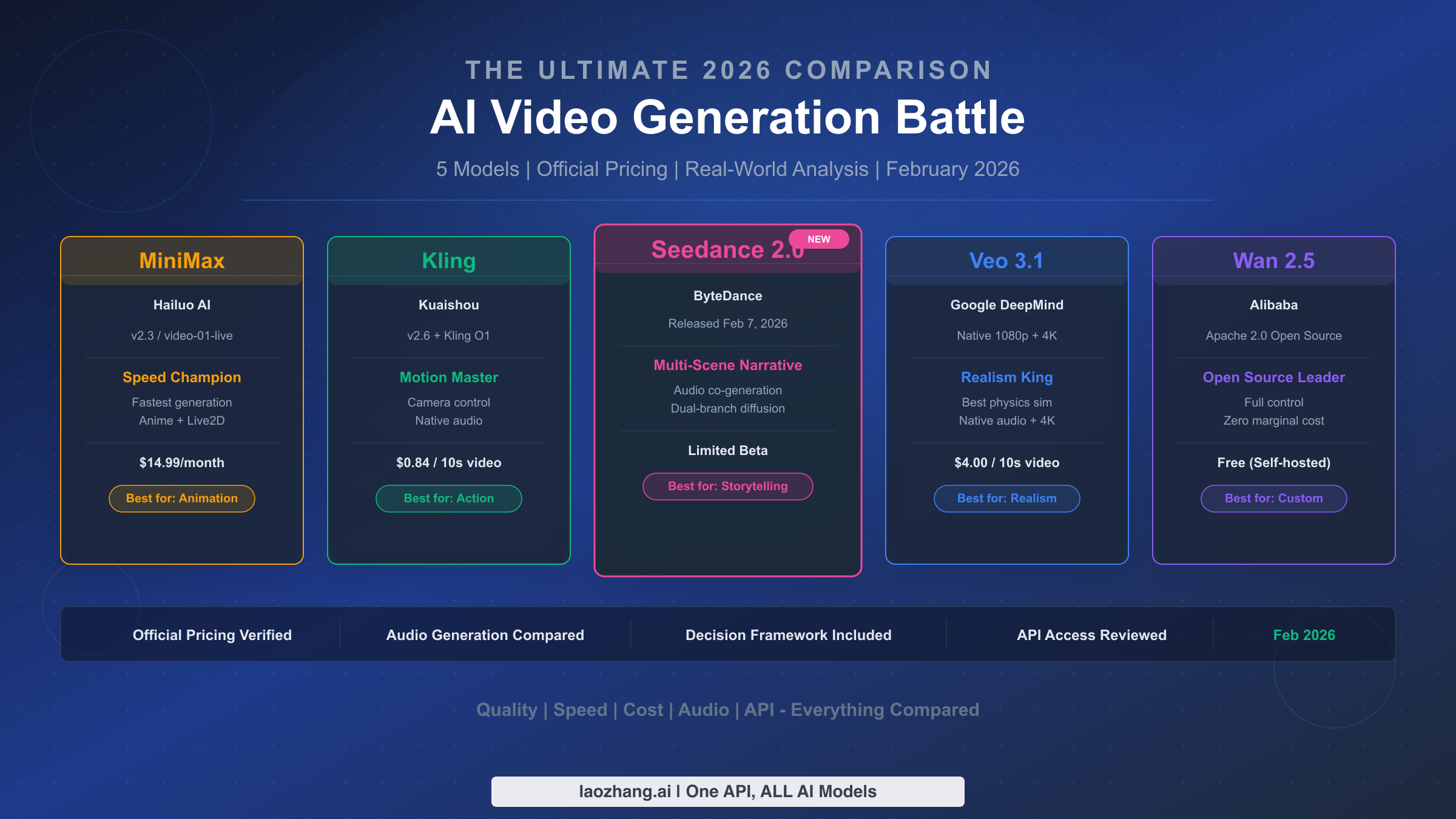

Comparing MiniMax, Kling, Wan, Veo, and Seedance 2.0 in February 2026 reveals a landscape where no single model dominates every category. Veo 3.1 leads in photorealistic quality with native 4K output, Kling 2.6 excels at motion control and action sequences, Seedance 2.0 introduces groundbreaking multi-scene narrative generation just three days after its February 7 launch, MiniMax delivers the fastest generation speeds with strong anime aesthetics, and Wan 2.5 remains the only fully open-source option under Apache 2.0. For a standard 10-second 1080p video via API, costs range from free (Wan self-hosted) to $4.00 (Veo 3.1 Standard), with Kling 2.6 at $0.84 offering the best commercial cost-to-quality ratio (klingai.com, verified February 2026).

TL;DR — Quick Verdict for Every Use Case

Choosing between these five models comes down to your specific needs. After testing and analyzing all five platforms, here is a straightforward recommendation based on what matters most to you. The table below summarizes the verdict, and the sections that follow provide the evidence behind each recommendation.

| Priority | Best Choice | Why | Cost (10s 1080p) |
|---|---|---|---|
| Visual realism | Veo 3.1 | Best physics simulation, native 4K | $4.00 (Standard) |
| Motion & action | Kling 2.6 | Superior camera control, smooth motion | $0.84 (Standard) |
| Multi-scene story | Seedance 2.0 | Only model with native multi-scene narrative | ~$0.60 (est.) |
| Speed & anime | MiniMax | Fastest generation, strong Live2D | $14.99/mo (sub) |
| Full control | Wan 2.5 | Apache 2.0 open source, zero marginal cost | Free (self-hosted) |
| Best overall value | Kling 2.6 | Strongest quality-to-cost ratio with full API | $0.84 |

For most users entering AI video generation in 2026, Kling 2.6 provides the best balance of quality, features, and cost. Content creators who need rapid iteration should start with MiniMax, while enterprises requiring maximum quality should evaluate Veo 3.1. Developers with GPU resources should seriously consider Wan 2.5 for its zero marginal cost advantage.

Meet the Contenders — 2026 AI Video Model Overview

The AI video generation landscape has evolved dramatically since the comprehensive comparison we published in 2025. February 2026 marks a particularly significant moment because ByteDance's Seedance 2.0 launched on February 7, introducing multi-scene narrative capabilities that no other model currently matches. Understanding each model's identity and positioning is essential before diving into detailed comparisons.

MiniMax (Hailuo AI) is developed by a Beijing-based startup that has carved out a unique niche in speed and animation quality. Their latest models include video-01-live for real-time generation and Hailuo 2.3 for higher-quality output. MiniMax's primary advantage is generation speed — it consistently produces results faster than any competitor, with standard clips rendering in 30 to 60 seconds versus the two to five minutes typical of other platforms. The platform operates primarily through a $14.99/month subscription model via hailuoai.video, making it attractive for creators who need high volume without per-video costs. Its Live2D animation capabilities have made it particularly popular among anime content creators and social media managers who need quick turnaround times. The subscription approach means you can experiment freely without worrying about per-generation costs, which fundamentally changes the creative workflow by encouraging rapid iteration and testing different prompts.

Kling 2.6 (Kuaishou) represents the latest evolution from China's short video giant. The 2.6 version introduced native audio generation alongside its already industry-leading motion control system. Kling has consistently been the go-to choice for action-heavy content due to its superior camera path control and fluid motion rendering. The Kling O1 reasoning model adds a layer of intelligent scene understanding that improves prompt adherence significantly. Priced at $0.084 per second for standard API access without video input (klingai.com, verified February 2026), Kling offers perhaps the most competitive commercial API pricing in the market when considering its quality output.

Seedance 2.0 (ByteDance) is the newest entry, released on February 7, 2026 — just three days before this article. Its headline feature is multi-scene narrative generation: the ability to create coherent video sequences with multiple scenes from a single prompt, complete with synchronized audio. The underlying technology uses a dual-branch diffusion transformer architecture that generates video and audio simultaneously rather than sequentially. As of February 10, 2026, Seedance 2.0 is available through Dreamina in China, with global expansion to CapCut, Higgsfield, and Imagine.Art planned by late February. There is no public API pricing yet, though third-party estimates suggest approximately $0.60 per 10-second clip (WaveSpeedAI data).

Veo 3.1 (Google DeepMind) remains the benchmark for photorealistic quality and represents Google's significant investment in generative video technology. It is the only model offering native 4K output, and its physics simulation capabilities produce the most convincing real-world motion among all five contenders — water flows naturally, fabrics drape and move with proper weight, and lighting transitions follow physically accurate paths. Veo 3.1 also includes native audio generation with environmental sound effects and dialogue, leveraging Google's extensive audio ML research. The API pricing at $0.40 per second for 1080p Standard mode makes it the most expensive option per video (ai.google.dev, verified February 2026), but for projects where visual fidelity is non-negotiable — advertising campaigns, film pre-visualization, or architectural walkthroughs — the premium is justified. The Fast mode at $0.15 per second provides a budget-friendly alternative for draft and iteration work, reducing per-video costs by 62 percent while maintaining good overall quality.

Wan 2.5 (Alibaba) occupies a fundamentally different position as the only fully open-source model under the Apache 2.0 license. This means zero licensing costs, complete control over the model, and the ability to fine-tune for specific use cases — advantages that no commercial API can match regardless of pricing. Wan 2.5 supports native multimodal audio-video generation and has found particular adoption in e-commerce and product visualization workflows, where companies train custom versions on their own product imagery to achieve brand-consistent output. While it requires GPU infrastructure to self-host, organizations processing large video volumes can achieve dramatically lower per-unit costs compared to any commercial API. The model's open-source nature also means a vibrant community of developers contributing optimizations, custom training scripts, and integration tools through platforms like Hugging Face and ComfyUI. For teams with ML engineering capabilities, Wan 2.5 represents not just a cost advantage but a strategic flexibility advantage — you own your pipeline end to end.

Feature-by-Feature Showdown

Understanding the technical capabilities of each model requires looking beyond marketing claims to verified specifications. The following comparison breaks down the key dimensions that matter most for production workflows, and explains why certain architectural differences lead to meaningful quality gaps in the output.

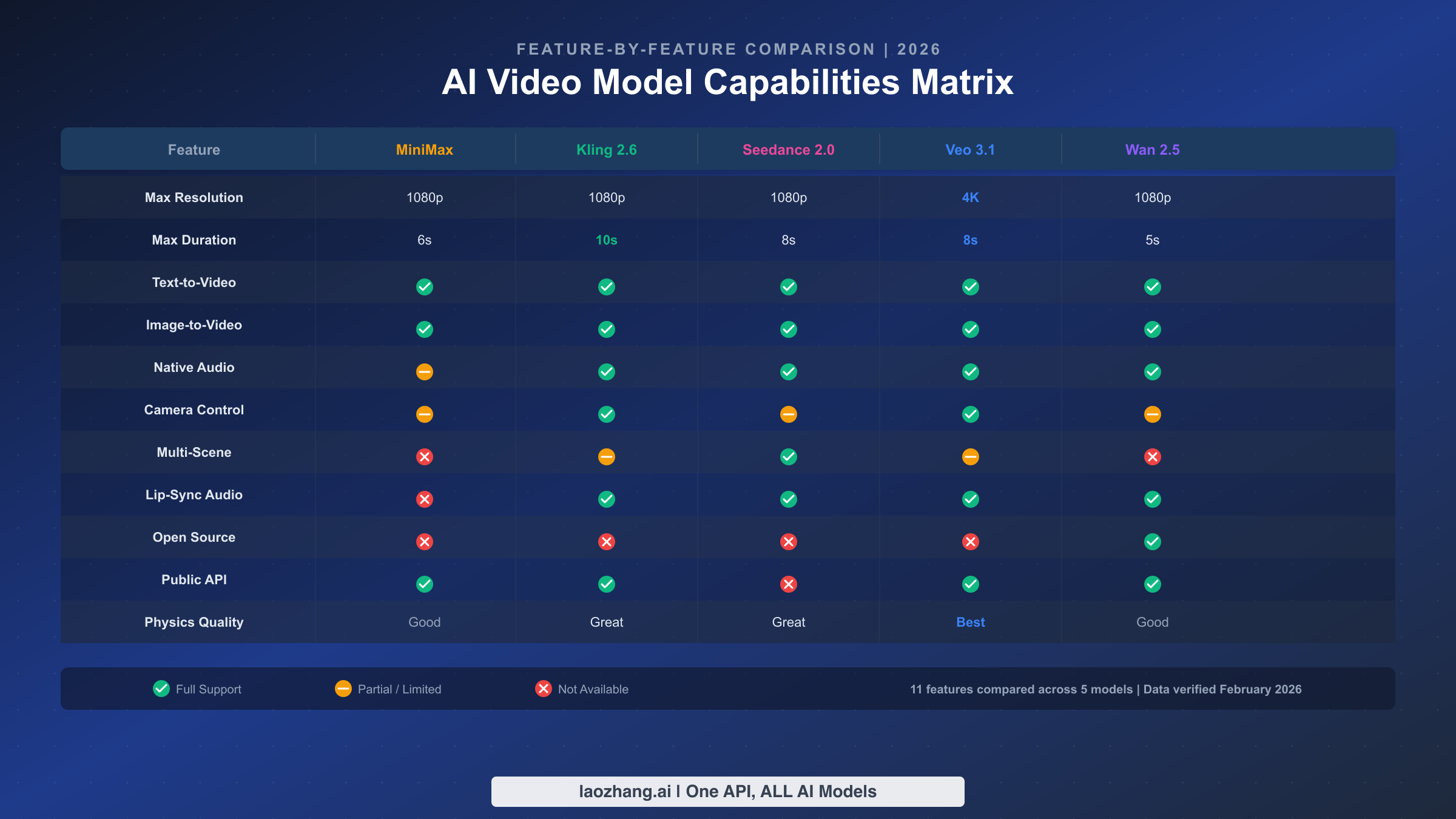

Resolution and Duration

All five models support 1080p output, but Veo 3.1 stands alone with native 4K generation capability. This distinction matters significantly for content destined for large screens or professional post-production pipelines. In terms of maximum duration per generation, Kling 2.6 leads at 10 seconds per clip, followed by Veo 3.1 and Seedance 2.0 at 8 seconds, MiniMax at 6 seconds, and Wan 2.5 at 5 seconds. While these durations may seem short, the industry standard workflow involves generating multiple clips and editing them together, making per-clip duration less critical than generation quality and consistency across clips.
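To make the multi-clip workflow concrete, the following minimal sketch estimates how many generations a target runtime would need per model. It uses only the per-clip maximums quoted above; the function names are illustrative, and it assumes clips are simply concatenated with no overlap or trimming.

```python
import math

# Maximum seconds per generation, as quoted in the paragraph above.
MAX_CLIP_SECONDS = {
    "Kling 2.6": 10,
    "Veo 3.1": 8,
    "Seedance 2.0": 8,
    "MiniMax": 6,
    "Wan 2.5": 5,
}


def clips_needed(target_seconds: float, max_clip_seconds: float) -> int:
    """Smallest number of clips whose combined length covers the target."""
    if target_seconds <= 0 or max_clip_seconds <= 0:
        raise ValueError("durations must be positive")
    return math.ceil(target_seconds / max_clip_seconds)


def clip_plan(target_seconds: float) -> dict[str, int]:
    """Clips required per model for one finished video of the given length."""
    return {
        model: clips_needed(target_seconds, limit)
        for model, limit in MAX_CLIP_SECONDS.items()
    }


if __name__ == "__main__":
    for model, count in clip_plan(30).items():
        print(f"{model}: {count} clips for a 30-second video")
```

Under these assumptions a 30-second piece needs three Kling clips but six Wan clips, which is why consistency across clips tends to matter more than the per-clip limit itself.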

Input Flexibility and Control

The range of input methods each model accepts directly impacts creative workflow flexibility. All five models support text-to-video and image-to-video generation, which forms the baseline for 2026. Where they diverge is in advanced control mechanisms. Kling 2.6 and Veo 3.1 offer the most sophisticated camera control systems, allowing creators to specify dolly, pan, tilt, and zoom movements with precision. Seedance 2.0 introduces a novel approach through its multi-scene prompting system, where users describe a sequence of scenes and the model generates coherent transitions between them automatically. MiniMax focuses on character consistency across frames, making it effective for animation projects. Kling and Wan also differ architecturally in how they handle motion estimation, which creates noticeably different results in action sequences; our detailed head-to-head comparison of Kling and Wan covers those differences.

Architecture and Generation Speed

Generation speed varies dramatically and has a direct impact on creative workflow productivity. MiniMax processes standard 6-second clips in roughly 30 to 60 seconds, making it approximately two to three times faster than competitors. This speed advantage is not just a convenience — it fundamentally changes how creators work, enabling rapid iteration cycles where you can test a prompt, evaluate the result, adjust, and regenerate within the time most other platforms take for a single generation. Kling 2.6 typically takes two to four minutes for a 10-second standard clip, which represents a reasonable trade-off given its superior output quality. Veo 3.1 Standard mode requires three to five minutes per 8-second generation, reflecting the computational cost of its advanced physics simulation and 4K rendering pipeline. Seedance 2.0's dual-branch architecture adds computational overhead for its simultaneous audio-video generation, resulting in generation times of approximately three to six minutes — the price of its multi-scene coherence capability. Wan 2.5's speed depends entirely on the hardware it runs on — with an A100 GPU, expect roughly two to four minutes for a 5-second clip, but consumer-grade GPUs like the RTX 4090 will be significantly slower at eight to fifteen minutes per clip. Optimized inference frameworks like TensorRT can reduce these times by 30 to 50 percent for Wan deployments.
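As a rough illustration of what these timings mean for iteration, the sketch below converts minutes per clip into sequential generations per hour and applies a fractional speedup such as the 30 to 50 percent range quoted for optimized inference. The helper names are hypothetical, and the calculation assumes one generation at a time with no queueing or upload overhead.

```python
def generations_per_hour(minutes_per_clip: float) -> float:
    """Sequential generations that fit into one hour."""
    if minutes_per_clip <= 0:
        raise ValueError("minutes_per_clip must be positive")
    return 60.0 / minutes_per_clip


def reduced_time(minutes_per_clip: float, reduction: float) -> float:
    """Clip time after a fractional reduction (0.3 means 30 percent faster)."""
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in [0, 1)")
    return minutes_per_clip * (1 - reduction)


if __name__ == "__main__":
    # MiniMax at 30 to 60 seconds per clip versus Kling at 2 to 4 minutes.
    print("MiniMax:", generations_per_hour(1.0), "to", generations_per_hour(0.5))
    print("Kling 2.6:", generations_per_hour(4.0), "to", generations_per_hour(2.0))
    # RTX 4090 range of 8 to 15 minutes with a 30 to 50 percent reduction.
    print("Wan on RTX 4090, optimized:",
          reduced_time(8, 0.5), "to", reduced_time(15, 0.3), "minutes")
```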

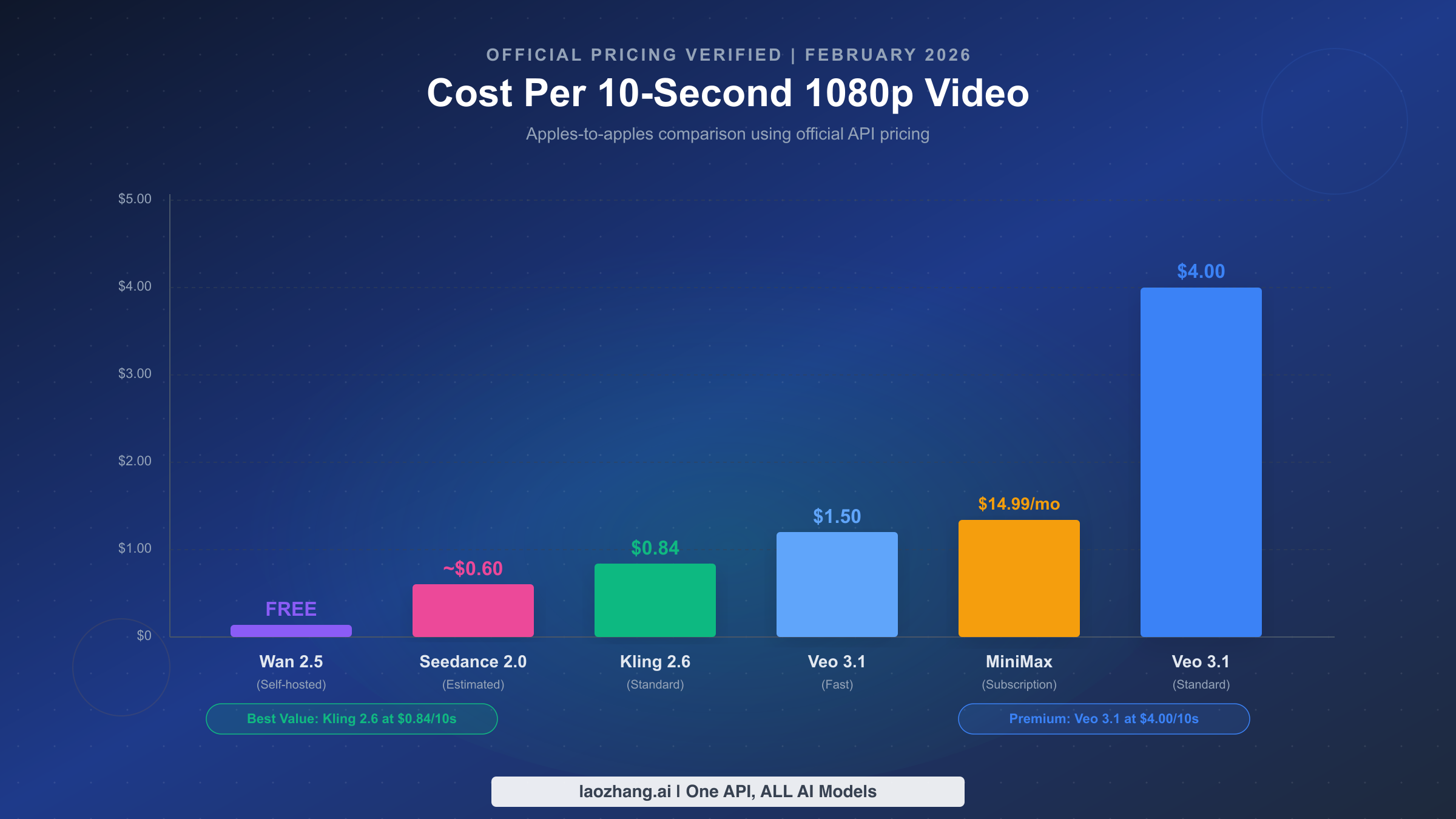

Pricing Deep Dive — What Does a 10-Second Video Really Cost?

Pricing is where most comparison articles fall short, often relying on outdated data, vague estimates, or mixing different billing units that make genuine comparison impossible. One article might quote a monthly subscription price, another might mention per-second API rates, and a third might reference credit-based pricing — none of which help you answer the simple question of "how much does it cost to create a video?" We verified the following pricing directly from official sources using browser-based verification of each platform's pricing pages in February 2026, ensuring you get an accurate apples-to-apples comparison using a standardized benchmark. The cost differences between these models are dramatic — ranging from essentially free to over four dollars for the same output — so understanding the pricing structure and making legitimate cost comparisons is essential for any serious evaluation. Whether you are an independent creator managing a personal budget or an enterprise procurement team evaluating annual API costs, the data below gives you the real numbers you need.

Official API Pricing Breakdown

The challenge with comparing pricing across these five models is that each uses a fundamentally different billing model. Kling bills in prepaid units consumed per second of generated video, Veo charges a flat per-second rate, MiniMax uses a monthly subscription, Wan is free to license but requires infrastructure, and Seedance 2.0 doesn't yet have public API pricing. To create a meaningful comparison, we normalized everything to a common benchmark: the cost of generating a single 10-second 1080p video through each model's API.

| Model | Billing Model | Rate | 10s 1080p Cost | Source |
|---|---|---|---|---|
| Wan 2.5 | Self-hosted | GPU cost only | $0 marginal | Apache 2.0 |
| Seedance 2.0 | Not yet public | Estimated | ~$0.60 | WaveSpeedAI est. |
| Kling 2.6 Standard | Per unit (0.6 units/sec) | $0.14/unit | $0.84 | klingai.com, Feb 2026 |
| Kling 2.6 Pro | Per unit (0.8 units/sec) | $0.14/unit | $1.12 | klingai.com, Feb 2026 |
| Veo 3.1 Fast | Per-second | $0.15/sec | $1.50 | ai.google.dev, Feb 2026 |
| Veo 3.1 Standard | Per-second | $0.40/sec | $4.00 | ai.google.dev, Feb 2026 |
| Veo 3.1 Standard 4K | Per-second | $0.60/sec | $6.00 (4K) | ai.google.dev, Feb 2026 |
| MiniMax | Subscription | $14.99/mo | Varies by volume | hailuoai.video |

For Kling's pricing, the calculation works as follows: Standard mode without video input consumes 0.6 units per second of generated video, so a 10-second clip requires 6 units. At the base rate of $0.14 per unit (Package 1 pricing at $4,200 for 30,000 units), the cost per 10-second video is $0.84 (klingai.com, verified February 2026). Bulk purchasing reduces this further: Package 3 offers 60,000 units for $6,720 ($0.112/unit), bringing the 10-second cost down to $0.67. Veo 3.1 bills at a flat per-second rate instead, and Google's tiered approach offers some flexibility through Fast mode at $0.15 per second, which sacrifices some quality for a cost reduction of about 62% compared to Standard mode at $0.40 per second.
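The normalization above can be reproduced with a few lines of arithmetic. This is a minimal sketch using only the rates quoted in this article; the function names are ours rather than part of any vendor SDK, and real invoices may include taxes, minimum purchases, or rounding that we do not model.

```python
def unit_based_cost(seconds: float, units_per_second: float,
                    package_price_usd: float, package_units: int) -> float:
    """Cost of one clip when billing is in prepaid units (Kling-style)."""
    price_per_unit = package_price_usd / package_units
    return seconds * units_per_second * price_per_unit


def per_second_cost(seconds: float, usd_per_second: float) -> float:
    """Cost of one clip under flat per-second billing (Veo-style)."""
    return seconds * usd_per_second


def saving_fraction(cheaper_rate: float, reference_rate: float) -> float:
    """Fraction saved by paying cheaper_rate instead of reference_rate."""
    return 1 - cheaper_rate / reference_rate


if __name__ == "__main__":
    # Kling Standard, Package 1: $4,200 for 30,000 units.
    print(f"Kling Standard: ${unit_based_cost(10, 0.6, 4200, 30000):.2f}")
    # Kling Standard, Package 3: $6,720 for 60,000 units.
    print(f"Kling Standard (bulk): ${unit_based_cost(10, 0.6, 6720, 60000):.2f}")
    # Kling Pro consumes 0.8 units per second.
    print(f"Kling Pro: ${unit_based_cost(10, 0.8, 4200, 30000):.2f}")
    print(f"Veo 3.1 Fast: ${per_second_cost(10, 0.15):.2f}")
    print(f"Veo 3.1 Standard: ${per_second_cost(10, 0.40):.2f}")
    print(f"Fast vs Standard saving: {saving_fraction(0.15, 0.40):.1%}")
```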

Cost Optimization Strategies

MiniMax's subscription model becomes increasingly cost-effective at higher volumes. At $14.99 per month with no per-video charges, a creator generating 50 or more videos per month pays effectively less than $0.30 per video. However, the subscription model means you pay the same amount whether you generate one video or one hundred.

Wan 2.5's open-source approach eliminates licensing and per-video API fees entirely, but requires investment in GPU infrastructure. An A100 GPU rental costs roughly $1 to $2 per hour, meaning self-hosted generation at approximately three minutes per clip translates to $0.05 to $0.10 of compute per video at scale, by far the cheapest option for high-volume producers.

For developers who need access to multiple video APIs through a single endpoint, laozhang.ai offers aggregated video API access including Sora 2 ($0.15/request) and Veo 3.1 ($0.15/request for fast mode), with the significant advantage of no charges on failed generations. This async API approach can reduce effective costs by 10 to 20 percent compared to direct API access when accounting for retry failures (docs: https://docs.laozhang.ai/).
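The subscription and self-hosting figures above follow from simple division, sketched below. The helper names are hypothetical, and the calculation assumes the subscription imposes no per-video cap and that a rented GPU is billed only for the minutes spent generating; idle time, storage, and engineering effort are not modeled.

```python
import math


def subscription_cost_per_video(monthly_fee: float, videos_per_month: int) -> float:
    """Effective per-video cost of a flat monthly subscription."""
    if videos_per_month <= 0:
        raise ValueError("videos_per_month must be positive")
    return monthly_fee / videos_per_month


def self_hosted_cost_per_video(gpu_usd_per_hour: float, minutes_per_clip: float) -> float:
    """GPU rental cost attributable to a single generation."""
    return gpu_usd_per_hour * minutes_per_clip / 60.0


def break_even_videos(monthly_fee: float, api_cost_per_video: float) -> int:
    """Smallest monthly volume at which the subscription is strictly cheaper."""
    return math.floor(monthly_fee / api_cost_per_video) + 1


if __name__ == "__main__":
    print(f"MiniMax at 50 videos: ${subscription_cost_per_video(14.99, 50):.4f}")
    print(f"Wan on a $1/hr A100: ${self_hosted_cost_per_video(1.0, 3):.2f}")
    print(f"Wan on a $2/hr A100: ${self_hosted_cost_per_video(2.0, 3):.2f}")
    print("MiniMax beats Kling Standard from",
          break_even_videos(14.99, 0.84), "videos per month")
```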

Native Audio — The 2026 Game-Changer

Native audio generation has emerged as the defining differentiator of 2026, transforming AI video from a visual-only tool into a complete audiovisual production system. Instead of generating silent video and adding audio in post-production — a workflow that typically adds 30 to 60 minutes per clip for sound design, dialogue recording, and audio synchronization — the latest models can generate video and audio simultaneously. The result is lip-synced dialogue, environmental sound effects, and background music that are inherently synchronized with the visual content from the first frame to the last. This capability fundamentally changes video production workflows by eliminating one of the most time-consuming and skill-intensive steps in the traditional pipeline. A year ago, "AI video" meant silent clips that required significant post-production work. Today, the output is increasingly ready for direct publication.

Audio Type Support Comparison

Not all native audio implementations are created equal. The table below breaks down what each model can generate and the quality level you should expect. Veo 3.1 and Seedance 2.0 offer the most comprehensive audio generation, but Kling 2.6's lip-sync is widely regarded as the most natural among current models.

| Audio Feature | MiniMax | Kling 2.6 | Seedance 2.0 | Veo 3.1 | Wan 2.5 |
|---|---|---|---|---|---|
| Dialogue/Speech | Limited | Native | Native | Native | Native |
| Lip-Sync | No | Excellent | Good | Very Good | Good |
| Sound Effects | Basic | Good | Good | Excellent | Moderate |
| Background Music | No | Yes | Yes | Yes | Basic |
| Language Support | EN | Multi (EN/CN/JP) | Multi (EN/CN) | Multi (20+) | Multi (EN/CN) |
| Audio Quality | - | High | High | Highest | Medium |

Veo 3.1's audio generation benefits from Google's extensive audio ML research, producing the most diverse range of environmental sounds and the most accurate spatial audio. Kling 2.6 compensates with superior lip-sync accuracy, particularly for dialogue-heavy content like talking head videos or conversational scenes. Seedance 2.0's unique contribution is its ability to generate coherent audio across multiple scene transitions within a single generation — maintaining consistent background music while adapting sound effects to each scene change. This multi-scene audio coherence is something no other model currently achieves, and it represents a genuine architectural innovation rather than an incremental improvement.

When Audio Quality Matters Most

The practical importance of native audio depends heavily on your use case and production pipeline. For social media content and short-form videos destined for platforms like TikTok, Instagram Reels, and YouTube Shorts, native audio eliminates a significant post-production step and delivers results that are immediately publishable. Creators who previously spent 30 to 60 minutes adding sound effects and adjusting audio timing per clip can now generate publish-ready content in a single step. For professional commercial production, native audio serves better as a rough draft or animatic — providing a solid starting point that audio engineers can refine in post-production tools like DaVinci Resolve or Adobe Premiere. Music video creators should note that while all models can generate background music, none yet match the quality of dedicated music generation tools like Suno or Udio for standalone tracks. The sweet spot for native audio in 2026 is content where synchronized sound effects and dialogue enhance immersion without requiring studio-quality precision — think product demos, educational content, explainer videos, and social media content where the visual storytelling carries most of the narrative weight while audio provides natural-feeling ambiance and contextual sound.

Model Deep Dives — Strengths, Weaknesses, and Best Use Cases

Understanding each model's specific strengths and limitations requires looking beyond feature matrices to examine real-world performance characteristics. Each model has been designed with a different primary use case in mind, and those design decisions create meaningful trade-offs that affect output quality in specific scenarios. The following analysis draws on hands-on evaluation of each model, community feedback from platforms like Reddit and Discord, and technical documentation from each developer team. Rather than ranking models on a single scale, we focus on identifying the specific contexts where each model delivers its best results.

MiniMax: The Speed-First Platform. MiniMax has built its reputation on generation speed, consistently delivering results two to three times faster than competitors. Its video-01-live model pushes this further with near-real-time generation for certain styles. The platform excels at anime-style content and character animation through its Live2D pipeline, which produces smoother and more expressive character animations than any competitor. However, MiniMax's photorealistic quality lags behind Veo and Kling, with occasional artifacts in complex physics interactions like fluid dynamics or cloth simulation. Its audio support remains limited compared to the native implementations in newer models. For content creators producing high volumes of social media content, MiniMax's combination of speed and predictable subscription pricing makes it a compelling daily driver. The $14.99 monthly subscription removes the anxiety of per-generation costs, encouraging experimentation and iteration.

Kling 2.6: The Motion Control Specialist. Kling 2.6 represents the culmination of Kuaishou's expertise in short-form video content. Its camera control system is the most sophisticated available, allowing precise specification of camera movements including dolly shots, orbital movements, and dynamic tracking shots. Version 2.6 added native audio generation with what many users consider the most natural lip-sync in the industry. The Kling O1 reasoning model enhances prompt understanding, significantly reducing the gap between intended and generated output. Kling's weakness lies in extremely static scenes — slow, contemplative shots where Veo's superior physics simulation produces more convincing results. For developers evaluating API integration, our complete Kling API integration guide covers the technical setup in detail.

Seedance 2.0: The Narrative Pioneer. Released just days ago on February 7, Seedance 2.0 represents ByteDance's ambitious vision for AI video generation. Its dual-branch diffusion transformer architecture enables simultaneous video and audio generation with scene-level coherence. The standout capability is multi-scene narrative generation, where a single prompt can describe multiple scenes and the model generates them with coherent transitions, consistent character appearances, and continuous audio. As of February 10, 2026, Seedance 2.0 is available through the Dreamina platform in China, with global availability expected by late February through CapCut, Higgsfield, and Imagine.Art. The current limitation is access — there is no public API, and the platform is in limited beta. Users outside China will need to wait for the global rollout. For a deeper analysis comparing Seedance with its closest competitors, see our Seedance 2.0 vs Kling 3 vs Sora 2 vs Veo 3 comparison.

Veo 3.1: The Quality Benchmark. Veo 3.1 from Google DeepMind sets the standard for visual fidelity in AI video generation. It is the only model offering native 4K output, and its physics simulation produces the most convincing interactions with gravity, fluids, fabrics, and light. The audio generation benefits from Google's vast audio research capabilities, delivering the most diverse range of environmental sounds. The trade-off is cost — at $4.00 per 10-second Standard video, Veo is roughly five times more expensive than Kling for similar duration output. The Fast mode at $1.50 per 10-second clip offers a reasonable middle ground with modest quality reduction. For tutorials and detailed guidance, the Veo 3.1 video generation guide covers everything from prompt engineering to output optimization.

Wan 2.5: The Open-Source Disruptor. Wan 2.5 fundamentally changes the economics of AI video generation by offering a fully capable model under the Apache 2.0 license. For teams with GPU infrastructure, this means zero marginal cost per video after the initial setup investment. The model supports text-to-video, image-to-video, and native audio generation, with particular strength in e-commerce product visualization. Wan's limitations include shorter maximum duration (5 seconds), lower physics simulation quality compared to Veo, and the operational overhead of self-hosting. However, the ability to fine-tune the model on proprietary data creates possibilities that no closed-source API can match — a custom Wan model trained on your brand's visual language can produce results that feel distinctly yours.

API Access and Developer Experience

For developers and engineering teams evaluating these models for integration into products or workflows, API availability and developer experience are often more important than raw generation quality. A model with slightly lower visual fidelity but excellent API documentation, predictable latency, and clear error handling will ship faster and cause fewer production incidents than a technically superior model with sparse documentation and inconsistent behavior. The current API landscape across these five models reveals significant differences in maturity, documentation quality, and integration complexity that directly affect development timelines and operational reliability.

API Availability and Integration

Kling, Veo, and MiniMax offer mature, well-documented APIs with SDK support for major programming languages. Wan can be deployed through various inference frameworks (Hugging Face Diffusers, ComfyUI), giving developers the most flexibility but also the most setup complexity. Seedance 2.0 currently has no public API, which is its biggest limitation for developer adoption.

| Model | API Status | SDK Support | Auth Method | Documentation |
|---|---|---|---|---|
| MiniMax | Public | Python, JS | API Key | Good |
| Kling 2.6 | Public | Python, REST | API Key + Units | Excellent |
| Seedance 2.0 | Not available | None | N/A | N/A |
| Veo 3.1 | Public (Gemini) | Python, Node, Go | Google Cloud | Excellent |
| Wan 2.5 | Self-deploy | HF Diffusers | N/A | Good (community) |

For production deployments, Kling's API offers the most predictable performance with consistent generation times and clear rate limiting. Veo 3.1 integrates through Google's Gemini API infrastructure, which provides enterprise-grade reliability but requires Google Cloud authentication setup. Our MiniMax Hailuo AI API guide provides step-by-step integration instructions for developers starting with that platform.

Multi-Model API Strategy

The 2026 reality is that no single model excels at everything, and the most successful production teams have recognized this by adopting explicit multi-model strategies. Rather than committing to a single platform, they use Veo 3.1 for hero shots requiring maximum photorealism and physics accuracy, Kling 2.6 for action sequences and dynamic camera work, and MiniMax for rapid iteration and concept validation during the creative development phase. Some teams also integrate Wan 2.5 for high-volume background content where cost control is critical. Managing multiple API integrations obviously adds engineering complexity — different authentication methods, response formats, webhook patterns, and error handling — but aggregation platforms simplify this significantly by providing a unified interface across multiple models. For developers who want to access multiple video generation APIs through a single endpoint, laozhang.ai provides a unified async API covering Sora 2 and Veo 3.1 with OpenAI-compatible SDK integration. The async design means failed generations are automatically retried without charges — a meaningful cost benefit when working with probabilistic generation systems. Documentation for integration is available at https://docs.laozhang.ai/.
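One way to picture the multi-model strategy is a small routing table that sends each job to a model based on the kind of shot. The sketch below is purely illustrative: the `ShotType` categories, the `Job` type, and the routing rules are our assumptions drawn from the paragraph above, and it deliberately makes no real API calls, since each provider's client, authentication, and response format differ.

```python
from dataclasses import dataclass
from enum import Enum


class ShotType(Enum):
    HERO = "hero"              # maximum photorealism and physics accuracy
    ACTION = "action"          # dynamic camera work and motion
    DRAFT = "draft"            # rapid iteration and concept validation
    BACKGROUND = "background"  # high-volume, cost-sensitive filler


# Hypothetical routing rules mirroring the strategy described above.
ROUTING = {
    ShotType.HERO: "Veo 3.1",
    ShotType.ACTION: "Kling 2.6",
    ShotType.DRAFT: "MiniMax",
    ShotType.BACKGROUND: "Wan 2.5",
}


@dataclass(frozen=True)
class Job:
    prompt: str
    shot_type: ShotType


def route(job: Job) -> str:
    """Name of the model this job should be sent to."""
    return ROUTING[job.shot_type]


def group_by_model(jobs: list[Job]) -> dict[str, list[Job]]:
    """Batch jobs per model so each provider client is invoked once per batch."""
    batches: dict[str, list[Job]] = {}
    for job in jobs:
        batches.setdefault(route(job), []).append(job)
    return batches


if __name__ == "__main__":
    queue = [
        Job("sunrise over a glass skyscraper", ShotType.HERO),
        Job("motorcycle chase, tracking shot", ShotType.ACTION),
        Job("rough concept: robot barista", ShotType.DRAFT),
    ]
    for model, batch in group_by_model(queue).items():
        print(model, "->", [job.prompt for job in batch])
```

Keeping the routing rules in one table means a team can swap a model for a given shot type without touching the code that submits jobs.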

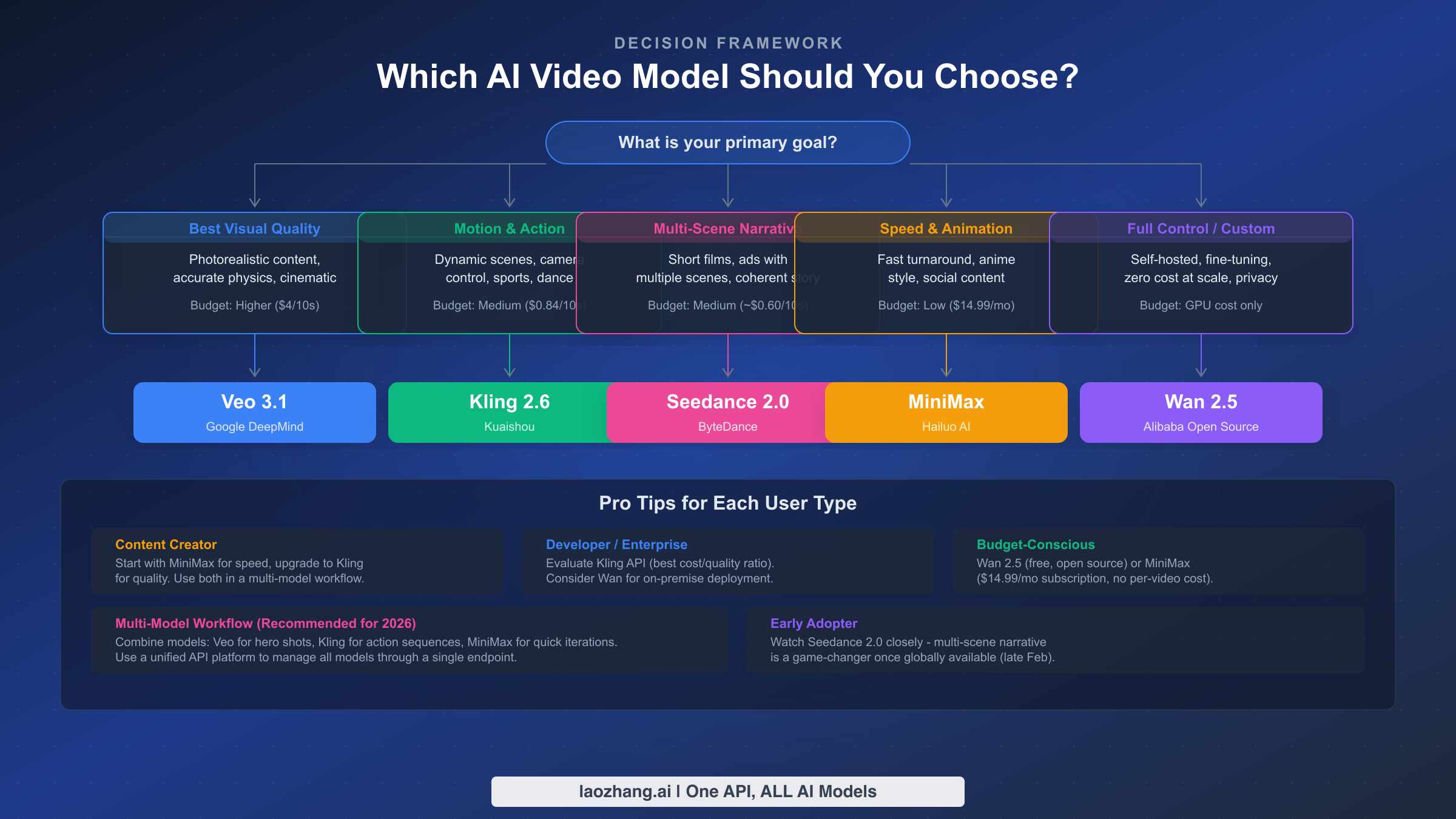

Which Model Should You Choose? — Decision Framework

After analyzing pricing, features, audio capabilities, and API maturity across all five contenders, the choice between these models ultimately depends on three factors: your primary use case, your budget, and your technical capabilities. There is no single "best" AI video generator in 2026 — each model was designed with different priorities, and declaring an overall winner would oversimplify a nuanced decision. The most practical approach is matching your specific situation to the model that best serves your needs, which is exactly what the decision framework below provides. The following recommendations are based on the official verified pricing data, feature analysis, and audio comparisons presented throughout this article, combined with analysis of real-world deployment patterns observed across different user segments including content creators, developers, and enterprise teams.

For Content Creators and Social Media Managers

If your primary workflow involves creating short-form video content for platforms like YouTube Shorts, TikTok, or Instagram Reels, the decision comes down to volume versus quality and how you balance both within your production pipeline. MiniMax at $14.99 per month offers unlimited generation with the fastest turnaround, making it ideal for teams producing multiple videos daily where speed of iteration matters more than pixel-perfect output. You can test ten different prompts, compare the results, refine your concept, and produce a final version all within the time it would take to generate two clips on a competing platform. For higher-quality hero content that needs to stand out in algorithmic feeds, Kling 2.6 at $0.84 per 10-second clip provides significantly better visual quality with superior motion control and native audio, making it worth the per-video cost for content that needs to perform well and drive engagement. The multi-model workflow of using MiniMax for drafting and ideation followed by Kling for final production has emerged as a popular and effective pattern among professional content creators — think of MiniMax as your sketchpad and Kling as your final canvas.

For Developers and Enterprises

Developers evaluating these models for product integration should prioritize API maturity and reliability alongside generation quality, since downtime and inconsistent behavior can be more costly than the per-generation price itself. Kling 2.6 currently offers the strongest combination of API documentation quality, pricing predictability, and output quality — its unit-based billing model is straightforward, rate limits are clearly documented, and the SDK support covers Python and REST with well-maintained examples. For enterprises requiring maximum visual fidelity — advertising agencies, film pre-visualization, architectural visualization — Veo 3.1's premium pricing is justified by its superior realism and 4K capability, with the added advantage of Google Cloud's enterprise-grade infrastructure backing the API's reliability. Teams with existing GPU infrastructure should seriously evaluate Wan 2.5, as the total cost of ownership drops dramatically at scale. Consider the economics: a team generating one thousand videos per month would spend $840 using Kling's API versus roughly $50 to $100 in GPU compute costs with self-hosted Wan on rented A100 instances, making the initial setup investment pay for itself within the first month. The trade-off is engineering overhead — maintaining a self-hosted inference pipeline requires ongoing attention to GPU utilization, model updates, and scaling that managed API services handle automatically.
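The payback claim above depends on how large the setup investment is, which this article does not quantify. As a hedged sketch, the function below estimates payback time from a setup cost you supply yourself; the setup figure in the example is a placeholder for illustration only, while the per-video costs are the ones quoted in this article.

```python
import math


def monthly_api_cost(videos_per_month: int, api_cost_per_video: float) -> float:
    """Total monthly spend when every video goes through a paid API."""
    return videos_per_month * api_cost_per_video


def payback_months(setup_cost: float, videos_per_month: int,
                   api_cost_per_video: float,
                   self_hosted_cost_per_video: float) -> float:
    """Months until self-hosting savings cover the one-time setup cost."""
    monthly_savings = videos_per_month * (api_cost_per_video - self_hosted_cost_per_video)
    if monthly_savings <= 0:
        return math.inf  # self-hosting never pays back at this volume
    return setup_cost / monthly_savings


if __name__ == "__main__":
    videos = 1000
    print(f"Kling API per month: ${monthly_api_cost(videos, 0.84):.0f}")
    print(f"Self-hosted Wan per month: ${monthly_api_cost(videos, 0.10):.0f}")
    # The $700 setup cost is a placeholder assumption, not a figure from any vendor.
    months = payback_months(700, videos, 0.84, 0.10)
    print(f"Payback: {months:.2f} months")
```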

For Early Adopters and Experimenters

Seedance 2.0's multi-scene narrative capability represents a genuine paradigm shift in AI video generation that addresses one of the most persistent pain points in AI-assisted video production. If your work involves creating short films, multi-scene advertisements, or narrative content with scene transitions, monitoring Seedance 2.0's global rollout (expected late February 2026) should be a top priority. The ability to generate coherent multi-scene sequences from a single prompt eliminates the most tedious part of AI video production — manually ensuring visual and audio consistency across separately generated clips. Currently, creating a 30-second narrative video requires generating five to six individual clips, carefully matching color grades, ensuring character consistency across clips, and manually editing transitions. Seedance 2.0 aims to reduce this to a single generation step. While its limited availability restricts immediate adoption, early experimentation through the Dreamina platform (available in China) can provide a significant head start when global access opens. In the meantime, creators needing multi-scene narratives today should look at Kling 2.6 with careful prompt engineering for visual consistency across individual clips, or consider Veo 3.1 for its superior frame-to-frame coherence that makes manual sequencing somewhat easier.

Frequently Asked Questions

Which AI video generator produces the most realistic output in 2026? Veo 3.1 from Google DeepMind consistently produces the most photorealistic results, particularly in scenes involving complex physics like fluid dynamics, fabric movement, and natural lighting. Its native 4K output capability adds a level of detail that other models operating at 1080p maximum simply cannot match, which becomes especially apparent when content is viewed on large screens or high-resolution monitors. However, "most realistic" depends heavily on content type — Kling 2.6 produces more convincing results for dynamic action scenes, sports content, and camera movements, while Veo leads in static-to-moderate motion scenarios, landscapes, and product visualization. The cost premium for Veo 3.1 (approximately $4.00 per 10-second clip at Standard quality, ai.google.dev verified February 2026) reflects this quality advantage, though the Fast mode at $1.50 per 10 seconds offers a reasonable compromise for content that does not require maximum fidelity.

Is Seedance 2.0 available globally? As of February 10, 2026, Seedance 2.0 is available only through ByteDance's Dreamina platform in China. Global availability through CapCut, Higgsfield, and Imagine.Art is planned for late February 2026. There is no public API for developers yet. If you need multi-scene narrative generation now, the closest alternative is manually sequencing clips from Kling 2.6 with careful prompt engineering for visual consistency.

Can Wan 2.5 match commercial models in quality? Wan 2.5 delivers competitive quality for many use cases, particularly product visualization and e-commerce content. Its physics simulation and maximum duration (5 seconds) lag behind Veo 3.1 and Kling 2.6 respectively, but the zero marginal cost and ability to fine-tune on custom data make it compelling for organizations processing large volumes. The Apache 2.0 license means no restrictions on commercial use, which some closed-source providers limit in their terms of service.

How does native audio generation compare across models? All five models now support some form of audio generation, but quality varies significantly. Veo 3.1 produces the highest-quality environmental audio and sound effects. Kling 2.6 delivers the most accurate lip-sync for dialogue. Seedance 2.0 uniquely maintains audio coherence across multi-scene transitions. MiniMax has the most limited audio capability, offering basic support without lip-sync. For projects where audio quality is critical, Veo 3.1 or Kling 2.6 are the recommended choices.

What is the cheapest way to access AI video generation APIs? For zero licensing cost, self-host Wan 2.5 on your own GPU infrastructure. The model is fully open source under Apache 2.0 and can be deployed on cloud GPU instances costing roughly $1 to $2 per hour, which translates to approximately $0.05 to $0.10 of compute per generated video at scale. For the cheapest commercial API without any infrastructure management, Kling 2.6 Standard at $0.84 per 10-second clip offers the best quality-to-cost ratio, with official pricing verified at klingai.com in February 2026. MiniMax's $14.99/month subscription undercuts Kling's per-clip price at volumes exceeding approximately 20 videos per month (the arithmetic break-even is 18 clips at $0.84 each), since the flat fee eliminates per-generation costs entirely. Volume discounts on Kling (up to 20% off at the 60,000-unit package tier, bringing per-video cost down to $0.67) and Veo's Fast mode ($1.50 versus $4.00 per clip, with some quality trade-off) provide additional cost optimization paths.