Google's SynthID adds invisible watermarks to AI-generated content to combat misinformation and deepfakes. Launched by Google DeepMind in August 2023, SynthID embeds imperceptible digital signatures directly into images, text, audio, and video created by Google AI tools including Gemini, Imagen, Lyria, and Veo. Over 20 billion pieces of content have been watermarked to date. The watermark survives common edits like cropping and compression, and can be detected using the Gemini app, SynthID Detector portal, or developer APIs.

Quick Answer: Why Your AI Image Has a SynthID Watermark

If you've generated an image using Google's AI tools and wondered why it carries an invisible watermark, the answer comes down to three critical challenges facing the internet today: misinformation, deepfakes, and the erosion of trust in digital content. Google developed SynthID as a technical solution to help people distinguish between human-created and AI-generated media at scale.

The core problem SynthID solves is deceptively simple to state but enormously difficult to address. When realistic AI-generated images became trivially easy to create, the internet faced a credibility crisis. Consider the viral image of Pope Francis wearing a stylish white puffer coat that fooled millions in early 2023—it was entirely AI-generated, yet many people shared it believing it was real. Such incidents aren't just amusing mistakes; they represent a fundamental threat to how we process and trust visual information online.

SynthID works by embedding an invisible digital signature directly into the pixels of an image at the moment of creation. Unlike traditional watermarks that sit on top of an image and can be cropped out, SynthID's watermark is woven into the image's fabric. This means the watermark remains detectable even after someone crops the image, applies filters, compresses it for web use, or takes a screenshot. The technology uses two neural networks trained together—one to embed the watermark invisibly, and another to detect it reliably.

Your image has a SynthID watermark because transparency matters. When you use Google's Imagen model on Vertex AI, generate images through Gemini, or create any visual content with Google's AI tools, SynthID automatically adds its invisible signature. This isn't about restricting what you can do with the image—it's about enabling anyone who encounters that image later to verify its AI origins. The watermark doesn't affect image quality, doesn't add visible marks, and doesn't limit your commercial usage rights.

The scale of SynthID's deployment demonstrates how seriously Google takes this challenge. Since launching in August 2023, the technology has watermarked over 20 billion pieces of AI-generated content. This number continues growing as SynthID expands beyond images to cover text, audio, and video content generated by Google's AI models.

How SynthID Technology Actually Works (Technical Breakdown)

Understanding how SynthID works requires looking at both its embedding process and detection mechanism. The technology represents a significant departure from traditional watermarking approaches that have proven inadequate for AI-generated content.

Traditional watermarks fail because they're applied as an afterthought. A conventional watermark—whether visible text in a corner or hidden metadata—exists as a separate layer that can be removed through basic editing. Crop the corner, strip the metadata, or re-save the image, and the watermark disappears. This approach was designed for a world where creating realistic fake images required significant skill and effort. AI image generation changed that equation entirely.

SynthID embeds its watermark at the generation level, not as post-processing. When an AI model like Imagen generates an image, SynthID's watermarking neural network influences the final pixel values in ways imperceptible to humans but detectable by machines. The modifications are spread across the entire image rather than concentrated in one region, so even a small cropped portion retains enough of the watermark signal for detection. Because this integration happens during generation, the watermark is an inherent property of the image rather than an added layer.

The embedding process uses adversarial training to ensure robustness. During development, the watermarking and detection networks were trained together while being repeatedly subjected to common image modifications: JPEG compression at various quality levels, cropping to different aspect ratios, rotation, color adjustments, resizing, and even generative attacks designed to remove watermarks. The embedding network was penalized whenever the watermark became undetectable, creating a system that produces watermarks surviving real-world image handling.
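To make the idea of a faint signal spread over every pixel concrete, here is a deliberately simple sketch. It is not SynthID: it swaps the neural embedder and detector for a classic keyed spread-spectrum pattern, and the function names (`make_pattern`, `embed`, `detect_z`, `add_noise`), the key, and the strength value are all hypothetical. It only illustrates why a pattern distributed over many pixels can still be detected after added noise or an aligned crop.

```python
import math
import random


def make_pattern(key, n):
    """Keyed pseudo-random +1/-1 pattern, one value per pixel."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels, key, strength=4.0):
    """Nudge every pixel slightly up or down according to the keyed pattern."""
    pattern = make_pattern(key, len(pixels))
    return [min(255.0, max(0.0, p + strength * s)) for p, s in zip(pixels, pattern)]


def detect_z(pixels, key):
    """Correlate pixels with the keyed pattern and return an approximate z-score."""
    n = len(pixels)
    pattern = make_pattern(key, n)
    mean = sum(pixels) / n
    var = sum((p - mean) ** 2 for p in pixels) / n
    corr = sum((p - mean) * s for p, s in zip(pixels, pattern))
    return corr / math.sqrt(var * n)


def add_noise(pixels, sigma, seed):
    """Crude stand-in for lossy processing: add Gaussian noise, then clip."""
    rng = random.Random(seed)
    return [min(255.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


KEY = 1234  # hypothetical secret key
image_rng = random.Random(0)
# Fake 256x256 grayscale image stored as a flat list of pixel values.
original = [image_rng.uniform(0.0, 255.0) for _ in range(256 * 256)]
marked = embed(original, KEY)

print("unmarked:      ", round(detect_z(original, KEY), 1))
print("marked:        ", round(detect_z(marked, KEY), 1))
print("marked + noise:", round(detect_z(add_noise(marked, 10.0, seed=1), KEY), 1))
print("top half only: ", round(detect_z(marked[: len(marked) // 2], KEY), 1))
```

In this toy, a z-score above roughly 4 would count as a detection. The marked image clears that bar even after noise or after losing half its rows, while the unmarked image stays near zero. A real system also has to cope with misaligned crops, rotation, and resizing, which this sketch ignores.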

For text content, SynthID takes a different approach suited to how language models work. Large language models generate text one token (a word or word fragment) at a time, assigning a probability to each candidate next token. SynthID Text adjusts these probabilities in ways that create a statistical pattern detectable by analysis but invisible in the resulting text. The watermark is designed not to change the meaning, grammar, or quality of the generated text; it creates a subtle mathematical signature that can be identified later.
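A rough sketch of how a statistical text watermark can work, loosely modeled on the tournament-style sampling described in the published SynthID Text work. Everything here is a toy assumption: the 50-token vocabulary, the uniform "language model", the SHA-256 based `g_value` function, and the three tournament layers are illustrative choices, not Google's implementation.

```python
import hashlib
import random

VOCAB = [f"tok{i}" for i in range(50)]  # toy vocabulary (assumption)
LAYERS = 3    # tournament depth; 2**LAYERS candidates are drawn per token
CONTEXT = 4   # number of previous tokens that seed the keyed function


def g_value(key, layer, context, token):
    """Keyed pseudo-random bit for (context, token) at a given tournament layer."""
    msg = f"{key}|{layer}|{'/'.join(context)}|{token}".encode()
    return hashlib.sha256(msg).digest()[0] & 1


def sample_watermarked(rng, key, context):
    """Draw candidates from the model, then keep the ones favoured by g_value."""
    candidates = [rng.choice(VOCAB) for _ in range(2 ** LAYERS)]
    for layer in range(LAYERS):
        winners = []
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga = g_value(key, layer, context, a)
            gb = g_value(key, layer, context, b)
            if ga == gb:
                winners.append(rng.choice((a, b)))  # tie: pick at random
            else:
                winners.append(a if ga > gb else b)
        candidates = winners
    return candidates[0]


def generate(n, seed, key=None):
    """Generate n tokens; with key=None this is plain, unwatermarked sampling."""
    rng = random.Random(seed)
    tokens = []
    for _ in range(n):
        context = tuple(tokens[-CONTEXT:])
        if key is None:
            tokens.append(rng.choice(VOCAB))
        else:
            tokens.append(sample_watermarked(rng, key, context))
    return tokens


def mean_g(tokens, key):
    """Detection score: average g_value over all tokens and layers."""
    total, count = 0, 0
    for i, token in enumerate(tokens):
        context = tuple(tokens[max(0, i - CONTEXT):i])
        for layer in range(LAYERS):
            total += g_value(key, layer, context, token)
            count += 1
    return total / count


watermarked = generate(200, seed=1, key=42)
plain = generate(200, seed=1)
print("watermarked text, right key:", round(mean_g(watermarked, 42), 3))
print("plain text, right key:      ", round(mean_g(plain, 42), 3))
print("watermarked text, wrong key:", round(mean_g(watermarked, 7), 3))
```

Every token is still one the model proposed, so the text reads normally. Only someone holding the key can see that the chosen tokens score well above the 0.5 average expected by chance.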

Detection provides confidence levels rather than binary answers. The SynthID detector outputs one of three states: watermarked, not watermarked, or uncertain. This probabilistic approach reflects the reality that heavy modifications can degrade the watermark signal. A high-confidence "watermarked" result means strong evidence the content was generated by a Google AI tool. An "uncertain" result might indicate partial watermark survival after significant editing. A "not watermarked" result means either the content wasn't created with Google AI, or the watermark was too damaged to detect.
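One way to picture the three-state output is as two cutoffs applied to a single detection score. The sketch below assumes a score between 0 and 1 and uses made-up cutoffs of 0.35 and 0.65; the names `Verdict` and `classify` are hypothetical and do not come from any Google API.

```python
from enum import Enum


class Verdict(Enum):
    WATERMARKED = "watermarked"
    UNCERTAIN = "uncertain"
    NOT_WATERMARKED = "not watermarked"


def classify(score, lower=0.35, upper=0.65):
    """Map a detection score in [0, 1] to one of three states."""
    if not 0.0 <= lower <= upper <= 1.0:
        raise ValueError("expected 0 <= lower <= upper <= 1")
    if score >= upper:
        return Verdict.WATERMARKED
    if score <= lower:
        return Verdict.NOT_WATERMARKED
    return Verdict.UNCERTAIN  # signal too weak to call either way


for score in (0.92, 0.50, 0.10):
    print(score, "->", classify(score).value)
```

Widening the gap between the two cutoffs sends more borderline content to "uncertain", which is the safer choice when a wrong call in either direction is costly.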

What Content Gets SynthID Watermarks (Complete Modality Guide)

SynthID has expanded significantly since its initial focus on images. Understanding which content types receive watermarks helps you know when to expect them and how verification works for each modality.

Image watermarking applies to content generated through Google's primary AI image services. The Imagen model on Google Cloud's Vertex AI includes SynthID watermarking by default for enterprise customers. Images created through the Gemini consumer app also receive SynthID watermarks. This covers both professional API usage and casual consumer image generation. The watermark embeds at the pixel level and survives typical web usage patterns including social media uploads, which often aggressively compress images.

Text watermarking covers content from Gemini and other Google LLMs. SynthID Text applies to AI-generated text, adjusting the statistical properties of token generation without affecting readability or meaning. However, text watermarks are more fragile than image watermarks. Substantially rewriting the text, translating it to another language, or paraphrasing extensively can reduce or eliminate the detectability of the watermark. Short or highly factual responses are also harder to watermark: when only one or two phrasings are plausible, there are few token choices to adjust and therefore little room to embed a signal.

Audio watermarking protects content from Google's music and voice AI. Lyria, Google's AI music generation model, embeds SynthID watermarks in generated audio. The podcast generation feature of NotebookLM also produces watermarked audio. These watermarks are inaudible to humans and survive common audio modifications including MP3 compression, noise addition, and speed changes. The technology ensures AI-generated music and spoken content can be identified even after editing.

Video watermarking covers Google's Veo model output. Videos generated by Veo receive frame-level SynthID watermarks. The technology handles the unique challenges of video, including frame rate changes, temporal compression, and the sheer volume of visual data in video content. Google has also partnered with NVIDIA to watermark videos generated through NVIDIA Cosmos, extending SynthID's reach beyond Google's own models.

| Content Type | Google Products | Watermark Location | Robustness |
|---|---|---|---|
| Images | Imagen, Gemini | Pixel-level | High |
| Text | Gemini, LLMs | Token statistics | Moderate |
| Audio | Lyria, NotebookLM | Waveform | High |
| Video | Veo, NVIDIA Cosmos | Frame-level | High |

Not all Google AI content is watermarked. SynthID specifically covers content generation, not all AI interactions. Using Gemini for conversation, analysis, or information retrieval doesn't produce watermarked output unless you're generating new images, text documents, audio, or video. Additionally, content from non-Google AI tools like ChatGPT, Midjourney, or Stable Diffusion won't have SynthID watermarks, though these platforms may have their own watermarking or metadata approaches.

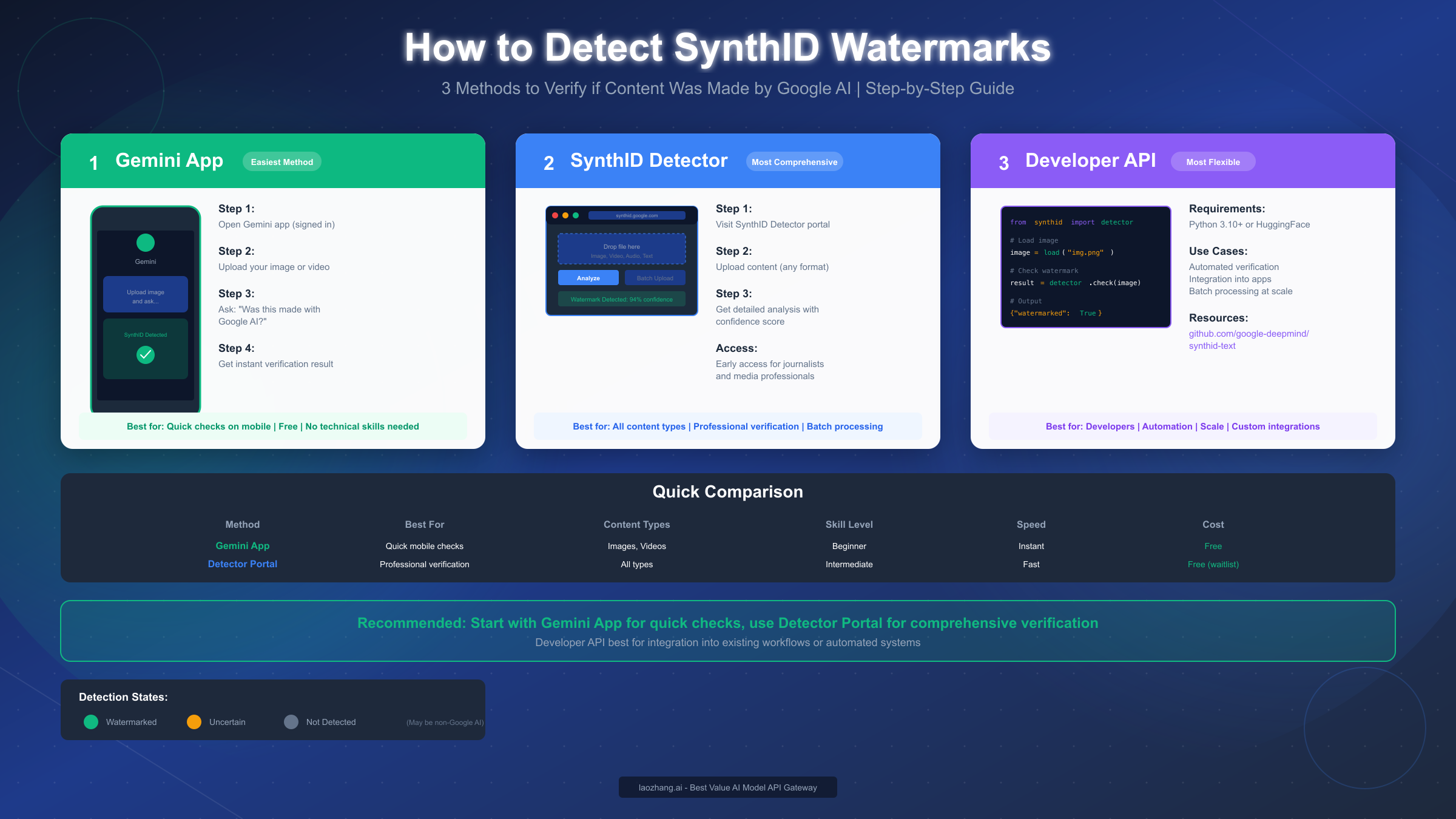

How to Check If Your Content Has SynthID (3 Detection Methods)

Knowing that SynthID watermarks exist is only useful if you can actually verify content. Google provides multiple detection methods suited to different users and use cases, from quick mobile checks to automated developer workflows.

Method 1: Using the Gemini App (Easiest)

The most accessible way to check for SynthID watermarks is through the Gemini mobile app. This approach requires no technical knowledge and works in seconds.

The verification process is conversational and intuitive. Open the Gemini app while signed into your Google account. Upload the image or video you want to verify. Then simply ask Gemini something like "Was this created with Google AI?" or "Does this image have a SynthID watermark?" Gemini will analyze the content and report whether it detects a SynthID signature.

This method works well for spot-checking content you encounter online or verifying your own AI-generated images. The main limitation is throughput—it's designed for individual checks rather than batch processing. If you need to verify many files, other methods become more practical.

Method 2: SynthID Detector Portal (Most Comprehensive)

Google launched the SynthID Detector portal in May 2025 as a dedicated verification tool. This web-based portal provides more detailed analysis than the conversational Gemini approach.

The portal accepts all SynthID-supported content types. You can upload images, videos, audio files, or text snippets for analysis. The detector scans for SynthID watermarks and provides a confidence score indicating how certain the detection is. For content that is partially watermarked (perhaps due to heavy editing), the portal can highlight which portions show the strongest watermark presence.

Access is currently limited to early testers. Google is initially rolling out the Detector to journalists, media professionals, and researchers—groups with professional needs for content verification. You can join a waitlist for access if you don't fall into these categories. Once access expands, the portal will likely become the go-to verification tool for anyone needing thorough watermark analysis.

Method 3: Developer API (Most Flexible)

For developers building applications that need watermark verification, Google provides programmatic access to SynthID detection. This enables automated verification workflows at scale.

The text watermarking implementation is open source. Google released a reference implementation of SynthID Text on GitHub (github.com/google-deepmind/synthid-text), allowing developers to understand and implement text watermark detection. A production-ready implementation is available through Hugging Face Transformers, making integration straightforward for Python-based projects.

Developers can configure the detector's sensitivity by adjusting its threshold values, trading off false positives against false negatives. For applications where missing a watermark is worse than an occasional false positive, the threshold can be lowered so that weaker signals still count as detections.
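The tradeoff is easy to see with a handful of scores. The numbers below are invented for illustration, and `error_rates` is a hypothetical helper rather than part of the SynthID Text library; the point is only how moving one threshold shifts errors from one kind to the other.

```python
def error_rates(watermarked_scores, clean_scores, threshold):
    """Return (false_positive_rate, false_negative_rate) at a given threshold."""
    false_negatives = sum(s < threshold for s in watermarked_scores)
    false_positives = sum(s >= threshold for s in clean_scores)
    return (
        false_positives / len(clean_scores),
        false_negatives / len(watermarked_scores),
    )


# Hypothetical detector scores for content whose true origin is known.
watermarked_scores = [0.91, 0.84, 0.77, 0.69, 0.58, 0.52]
clean_scores = [0.12, 0.25, 0.33, 0.41, 0.49, 0.55]

for threshold in (0.45, 0.50, 0.60):
    fpr, fnr = error_rates(watermarked_scores, clean_scores, threshold)
    print(f"threshold={threshold:.2f}  false positives={fpr:.2f}  false negatives={fnr:.2f}")
```

A lower threshold catches every watermarked sample here but flags some clean ones, while a higher threshold does the reverse. Which end to favor depends on whether a missed watermark or a false accusation is the more expensive mistake.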


SynthID vs C2PA vs Other AI Watermarking Systems

SynthID isn't the only approach to authenticating AI-generated content. Understanding how it compares to alternatives helps you choose the right verification approach for your needs.

C2PA Content Credentials takes a fundamentally different approach. While SynthID embeds invisible watermarks in the content itself, C2PA (Coalition for Content Provenance and Authenticity) attaches cryptographically signed metadata describing the content's origin and edit history. This metadata can include detailed provenance information: which tool created the content, when, and what modifications were made afterward. C2PA was founded by Adobe, Arm, the BBC, Intel, Microsoft, and Truepic as an open industry standard, and it has since gained support from news organizations and other technology companies.

The key tradeoff is robustness versus richness. SynthID watermarks survive image editing because they're embedded in the pixels. C2PA metadata can be lost when an image is screenshotted, re-uploaded to a platform that doesn't preserve metadata, or re-saved by software that strips it. However, when preserved, C2PA provides far richer provenance details than SynthID, which only signals whether a watermark is present.
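The metadata side of that tradeoff can be sketched in a few lines. This is a simplified stand-in, not the C2PA format: real Content Credentials use certificate-based signatures and a manifest embedded in the file, whereas this toy uses an HMAC with a shared demo key. The names `make_manifest`, `verify`, and `SIGNING_KEY` are hypothetical.

```python
import hashlib
import hmac
import json

SIGNING_KEY = b"demo-key"  # hypothetical; real C2PA signing uses certificates


def make_manifest(content, generator):
    """Build a signed claim that binds provenance details to the exact bytes."""
    claim = {
        "generator": generator,
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(content, manifest):
    """Check the manifest against the content and report what was found."""
    if manifest is None:
        return "no credentials"  # metadata was stripped along the way
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return "signature invalid"
    if manifest["claim"]["content_sha256"] != hashlib.sha256(content).hexdigest():
        return "content changed"
    return "valid"


image = b"\x89PNG...fake image bytes"
manifest = make_manifest(image, generator="example-image-model")
forged = {
    "claim": dict(manifest["claim"], generator="a camera"),
    "signature": manifest["signature"],
}

print(verify(image, manifest))            # untouched file with its metadata
print(verify(image + b"edit", manifest))  # pixels changed after signing
print(verify(image, forged))              # someone rewrote the claim
print(verify(image, None))                # screenshot or metadata stripped
```

The last case is the weak spot: once the manifest is gone, nothing in the pixels says where the image came from. That gap is what an embedded watermark is meant to cover.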

Adobe Content Credentials builds on C2PA with consumer visibility. Adobe's implementation adds a visible "cr" icon to images with credentials, making the authenticity information discoverable to ordinary users. This consumer-facing approach differs from SynthID's invisible watermarking but serves a complementary purpose—educating people that authentication tools exist.

Meta's Video Seal focuses specifically on video content. Meta developed its own invisible watermarking technology for videos generated by its AI tools. Like SynthID, Video Seal embeds imperceptible markers designed to survive editing and compression. Meta has published the Video Seal model and code, but it remains a single vendor's system rather than an industry standard.

| Aspect | SynthID | C2PA | Adobe Content Credentials | Meta Video Seal |
|---|---|---|---|---|
| Approach | Invisible watermark | Metadata | Metadata + icon | Invisible watermark |
| Edit survival | High | Low | Low | High |
| Detail level | Basic | Rich | Rich | Basic |
| Open standard | Partial | Yes | Yes | No |
| Detection tools | Public | Public | Public | Limited |

The future likely combines these approaches. Google now adds both SynthID watermarks and C2PA metadata to AI-generated images, recognizing that neither approach alone solves the problem. Watermarking helps when metadata is stripped; metadata conveys richer information when platforms preserve it. Other AI image generation platforms may have their own authentication approaches, which are worth understanding if you verify content from multiple sources.

Can SynthID Watermarks Be Removed? (Honest Discussion)

This is a question many people search for, and it deserves an honest answer. Yes, tools exist that claim to remove SynthID watermarks, but the reality is more nuanced than simple removal.

Removal tools work by re-processing images through AI. The basic technique involves using a diffusion model to "re-render" an image, using the original as a compositional guide while regenerating pixels. This process can disrupt the watermark signal while preserving the image's visual appearance. Researchers have demonstrated varying success rates with this approach, though Google has contested some claimed effectiveness numbers.

The practical barriers to removal are significant. Effective removal typically requires computational resources including capable GPUs with substantial VRAM. The process can introduce subtle visual artifacts, particularly in high-resolution images. Very high-resolution images (above 4K) may need downscaling for removal to work, potentially degrading quality. And importantly, these techniques target current SynthID implementations—future updates may improve watermark resilience.

The ethical implications matter more than technical feasibility. Watermark removal tools exist for the same reason lockpicks exist—the technology isn't inherently good or bad, but the use case determines the ethics. Legitimate reasons might include academic research into watermark robustness or testing your own content's watermark survival. Illegitimate uses include misrepresenting AI-generated content as human-created or creating deceptive media intended to mislead.

Detection isn't the only accountability mechanism. Even if someone removes a SynthID watermark, other forensic techniques can potentially identify AI-generated content. Training data artifacts, statistical patterns, and model-specific generation signatures may remain detectable. Watermark removal also doesn't erase the fact that content was AI-generated—it just makes that fact harder to prove automatically.

For content creators concerned about authentication, focusing on building trust through transparency is more sustainable than worrying about watermark circumvention. Clearly disclosing when you use AI tools, combining SynthID with C2PA metadata, and maintaining consistent practices builds credibility regardless of whether any single technical measure can be defeated.

Frequently Asked Questions

Does SynthID affect image quality or how images look?

SynthID watermarks are designed to be imperceptible to humans. The modifications made to pixel values are too subtle for the human visual system to detect. Google specifically optimized the technology to maintain image quality while ensuring watermark detectability, and it reports that watermarked images are visually indistinguishable from their unwatermarked equivalents. You cannot see, hear, or read the watermark; only machines can detect it.

Can I use SynthID-watermarked images commercially?

Yes. The SynthID watermark is about identification, not rights restriction. Having a watermark doesn't limit how you can use the image. Your commercial usage rights depend on the terms of service for the AI tool you used to generate the image, not on the watermark itself. The watermark simply allows anyone to verify the image's AI origins—it's a transparency mechanism, not a license constraint.

Does SynthID work for non-Google AI tools like ChatGPT or Midjourney?

No. SynthID only watermarks content generated by Google's AI tools. Images from Midjourney, DALL-E, Stable Diffusion, or other non-Google AI systems won't have SynthID watermarks. The SynthID detector cannot identify whether content from these platforms is AI-generated. Each platform may have its own watermarking or authentication approaches—for instance, DALL-E adds C2PA metadata to generated images.

What happens if SynthID doesn't detect a watermark?

A "not detected" result means one of two things: either the content wasn't created with Google AI, or any watermark present has been too damaged to detect. Heavy image manipulation, extreme compression, or purposeful removal attempts can degrade watermarks below detection thresholds. The detector cannot distinguish between never-watermarked content and successfully-removed watermarks.

Is the SynthID detector available to everyone?

The Gemini app detection method is available to any signed-in user. The SynthID Detector portal is currently in early access for journalists, media professionals, and researchers, with a waitlist for broader access. Developer APIs for text watermark detection are publicly available through GitHub and Hugging Face.

How accurate is SynthID detection?

Google hasn't published precise accuracy figures, but the system uses probabilistic detection with three possible outputs: watermarked, not watermarked, or uncertain. The confidence threshold can be adjusted for different use cases. High-confidence positive detections are very reliable; uncertain results indicate the need for additional verification methods.

What should I do if I need to verify non-Google AI content?

For verifying AI content from other sources, consider complementary approaches. Check for C2PA metadata using tools like Content Credentials Verify. Look for platform-specific indicators—many AI platforms embed their own identifiers. Combine technical verification with contextual analysis. For high-stakes verification needs, services like laozhang.ai offer multi-model API access that can help with cross-platform content analysis.

Conclusion: The Future of AI Content Authentication

SynthID represents a significant step forward in addressing the challenge of AI content authentication, but it's part of a larger ecosystem still taking shape. The technology succeeds at its core mission—enabling reliable identification of Google AI-generated content at scale—while openly acknowledging its limitations regarding non-Google content and extreme manipulation scenarios.

Three key developments will shape this space going forward. First, expect watermarking technology to improve in robustness while remaining invisible. Google's ongoing research aims to create watermarks that survive increasingly aggressive modification attempts. Second, industry standardization around approaches like C2PA will likely accelerate, creating interoperable authentication across platforms. Third, detection tools will become more widely accessible, moving from specialized professional tools to mainstream features.

For content creators, the practical guidance is straightforward. When using Google AI tools, know that your generated content will carry SynthID watermarks. This supports transparency without limiting your usage rights. Consider combining SynthID with C2PA metadata for maximum provenance information. And prioritize honest disclosure about AI usage—technical measures complement but don't replace ethical practices.

For content consumers, verification tools are increasingly accessible. The Gemini app provides instant watermark checking for anyone with a Google account. As the SynthID Detector expands access, more comprehensive verification will become available. While no single tool can authenticate all AI-generated content, the combination of SynthID, C2PA, and platform-specific approaches provides meaningful verification capabilities.

The challenge of distinguishing AI-generated from human-created content will persist as AI capabilities advance. SynthID demonstrates that practical solutions are possible—not perfect, but meaningfully useful at scale. Understanding how these tools work empowers you to make informed decisions about the content you create, share, and trust.