You've carefully crafted your prompt, hit enter, and then—"This prompt may violate our content policy" or "Prompt blocked due to safety reason." If you're reading this, you've experienced one of the most frustrating aspects of working with AI: safety filters blocking seemingly harmless requests. Whether you're a developer building applications, a content creator working on educational material, or a researcher exploring AI capabilities, this comprehensive guide will help you understand why prompts get blocked and how to fix the issue across all major platforms.

The reality is that false positives are surprisingly common. Terms like "kill" (as in "kill the process"), "adult" (as in "adult education"), or "bomb" (as in "bomb calorimeter") can trigger filters even when your intent is completely legitimate. The good news? There are proven solutions for each platform, and understanding how these safety systems work is the first step to working with them effectively.

Quick Answer: Why AI Blocks Your Prompts

AI safety systems block prompts for four main reasons: keyword detection (flagging words regardless of context), pattern matching (identifying sequences that resemble harmful requests), content classification (categorizing intent based on training data), and threshold violations (when probability scores exceed platform limits). Understanding which mechanism is triggering your block helps you choose the right solution.

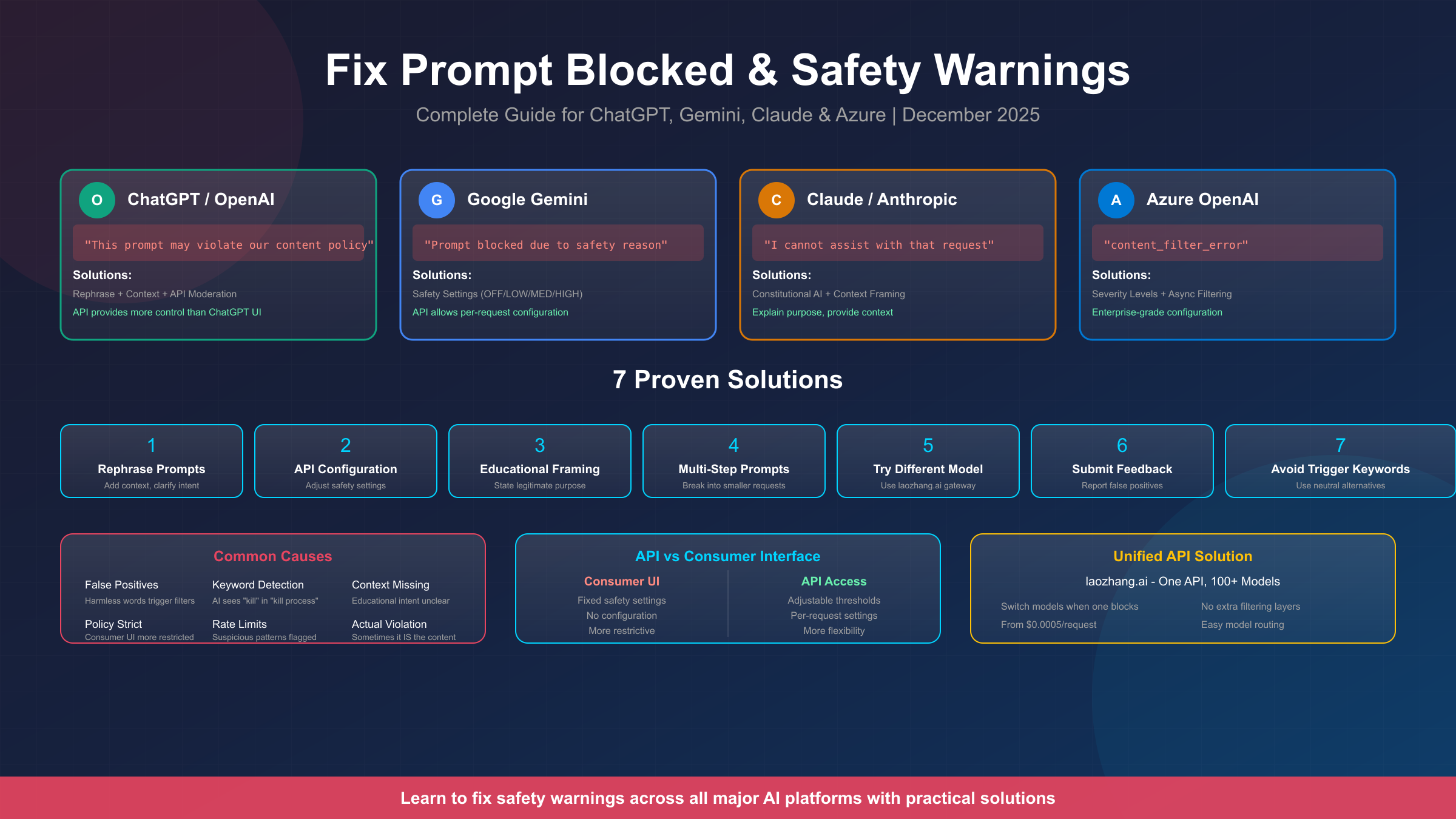

Here's a quick reference for the most common error messages and their typical causes:

| Platform | Error Message | Common Cause |

|---|---|---|

| ChatGPT | "This prompt may violate our content policy" | Keyword detection, sensitive topics |

| Gemini | "Prompt blocked due to safety reason" | HARM_CATEGORY threshold exceeded |

| Claude | "I cannot assist with that request" | Constitutional AI principles triggered |

| Azure | "content_filter_error" | Enterprise content filtering rules |

The key insight that most guides miss is this: consumer interfaces (the web apps you use directly) have fixed safety settings, while API access typically provides configuration options. If you're frequently hitting blocks, API access through services like laozhang.ai gives you more control over how filtering works.

Understanding AI Safety Systems

Before diving into platform-specific solutions, it's important to understand how modern AI safety systems actually work. This knowledge will help you craft prompts that achieve your legitimate goals while avoiding unnecessary blocks.

Modern AI platforms use multiple layers of content filtering. The first layer is pre-training: models are trained to avoid certain outputs during their initial development. The second layer is instruction tuning: additional training that reinforces safety behaviors. The third layer is real-time filtering: systems that analyze prompts and responses as they're generated.

The fundamental challenge is context: AI safety systems struggle to distinguish between someone asking "how do I kill a process in Linux" (legitimate) and someone asking about harmful activities (not legitimate). Both use similar keywords, but the intent is entirely different. This is why rephrasing with context often works—you're providing the information the safety system needs to correctly classify your intent.

Different platforms take different approaches to this challenge. Google's Gemini API offers the most configuration options, allowing you to adjust thresholds for specific harm categories. OpenAI's ChatGPT relies more heavily on pre-training and has limited real-time configuration. Claude uses "Constitutional AI," training the model with principles rather than filters. Azure OpenAI provides enterprise-grade controls with multiple severity levels.

If you're experiencing blocks in certain geographic regions, that's an additional layer—some platforms have stricter filtering in specific countries due to regulatory requirements.

The practical implication is clear: the same prompt may work on one platform but be blocked on another. This is why access to multiple models through a unified API gateway becomes valuable—if one model blocks your legitimate request, you can route to another that handles it differently.

ChatGPT & OpenAI Solutions

ChatGPT's content policy warnings are among the most common, affecting millions of users daily. OpenAI uses a combination of model training and moderation endpoints to filter content. The error "This prompt may violate our content policy" appears when the system detects potential violations, but importantly, this is often a false positive.

The most effective solution is contextual rephrasing. ChatGPT's safety system responds well to explicit context and clarified intent. Here are proven examples of prompts that were blocked and their working alternatives:

Blocked: "How do I hack into a website?" Working: "As a cybersecurity student learning about defensive measures, what are common website vulnerabilities that security professionals test for during authorized penetration testing?"

Blocked: "Write a story about someone being killed" Working: "Write a mystery novel excerpt where a detective investigates a crime scene. Focus on the investigation process and clues discovered."

Blocked: "Tell me about dangerous chemicals" Working: "For a chemistry education assignment, explain the safety protocols and hazards associated with common laboratory chemicals that students might encounter."

For developers using the OpenAI API, you have access to the Moderation endpoint, which allows you to pre-check content before sending it to the main model. This can help you understand what's triggering blocks. Here's how to use it:

pythonimport openai client = openai.OpenAI(api_key="your-api-key") response = client.moderations.create( input="Your prompt text here" ) for result in response.results: for category, flagged in result.categories.model_dump().items(): if flagged: print(f"Flagged: {category}")

If you're getting false positives consistently, submit feedback. When using GPT-4, click on the content violation warning link and report the false positive. OpenAI actively uses this feedback to refine their detection systems. Your reports help improve the system for everyone.

For those needing more flexibility, consider accessing GPT models through an OpenAI API key rather than the ChatGPT interface. The API provides more control and typically has slightly different filtering behavior than the consumer product.

Google Gemini Solutions

Gemini's safety system is the most configurable of the major platforms, which is both a blessing and a curse. The blessing: you can adjust settings for your specific use case. The curse: the default settings are often more restrictive than necessary, leading to many false positives.

Gemini categorizes content into four main harm categories:

- HARM_CATEGORY_HARASSMENT: Negative or harmful comments targeting identity

- HARM_CATEGORY_HATE_SPEECH: Rude, disrespectful, or profane content

- HARM_CATEGORY_SEXUALLY_EXPLICIT: Sexual content references

- HARM_CATEGORY_DANGEROUS_CONTENT: Content promoting harmful activities

For each category, you can set blocking thresholds:

- BLOCK_NONE: No blocking (most permissive)

- BLOCK_ONLY_HIGH: Block only high-probability harmful content

- BLOCK_MEDIUM_AND_ABOVE: Block medium and high (default for most)

- BLOCK_LOW_AND_ABOVE: Block everything flagged (most restrictive)

For most legitimate use cases, setting thresholds to BLOCK_ONLY_HIGH resolves the majority of false positives. Here's how to configure this in the Gemini API:

pythonfrom google import genai from google.genai import types client = genai.Client(api_key="your-gemini-api-key") response = client.models.generate_content( model="gemini-2.0-flash", contents=["Your prompt here"], config=types.GenerateContentConfig( safety_settings=[ types.SafetySetting( category=types.HarmCategory.HARM_CATEGORY_HARASSMENT, threshold=types.HarmBlockThreshold.BLOCK_ONLY_HIGH, ), types.SafetySetting( category=types.HarmCategory.HARM_CATEGORY_HATE_SPEECH, threshold=types.HarmBlockThreshold.BLOCK_ONLY_HIGH, ), types.SafetySetting( category=types.HarmCategory.HARM_CATEGORY_SEXUALLY_EXPLICIT, threshold=types.HarmBlockThreshold.BLOCK_ONLY_HIGH, ), types.SafetySetting( category=types.HarmCategory.HARM_CATEGORY_DANGEROUS_CONTENT, threshold=types.HarmBlockThreshold.BLOCK_ONLY_HIGH, ), ] ) )

One important limitation: Google has internal safety filters that operate beyond the user-configurable settings. Community reports on the Google AI Developers Forum confirm that even with all settings set to OFF, some prompts are still blocked. These internal filters protect against content like CSAM and cannot be adjusted.

If you're getting "BLOCKLIST" or "RECITATION" errors, these are different issues:

- BLOCKLIST: Your content triggered an internal word/phrase list

- RECITATION: The model's output resembles training data too closely

For recitation issues, increase the temperature setting or rephrase to ask for summaries rather than verbatim content. For pricing and setup details, see our Gemini API pricing guide.

Claude & Anthropic Solutions

Claude takes a fundamentally different approach to safety called "Constitutional AI." Rather than using filters, Claude is trained with a set of principles that guide its behavior. This means Claude often understands context better than filter-based systems, but it also means there are no safety settings to configure.

When Claude refuses a request, it's because the model itself has determined (based on its training) that the request conflicts with its principles. This is actually a feature, not a bug—Claude is designed to be helpful while avoiding harm through understanding rather than keyword matching.

The most effective approach with Claude is explicit context and purpose. Claude responds well to understanding why you're asking something. Here are examples:

Refused: "Explain how explosives work" Accepted: "I'm a chemistry teacher preparing a lesson on industrial applications of controlled detonation in mining and demolition. Can you explain the basic chemistry involved at a level appropriate for high school students?"

Refused: "Write code to bypass security" Accepted: "I'm a security researcher conducting authorized penetration testing on my company's systems. Can you help me understand common authentication vulnerabilities so I can test our defenses?"

The key pattern is: explain your role, your purpose, and the legitimate context for your request. Claude is sophisticated enough to distinguish between a security researcher and someone with malicious intent, but it needs that information to make the distinction.

If Claude continues to refuse after you've provided context, try:

- Being even more specific about your legitimate use case

- Breaking the request into smaller, less sensitive components

- Asking Claude to explain why it's refusing—this can reveal what aspect is triggering concern

For API access and pricing information, see our Claude API pricing guide. Note that Claude's API behaves similarly to the consumer interface in terms of safety—the principles are embedded in the model, not in configurable filters.

Azure OpenAI Enterprise Solutions

Azure OpenAI provides the most comprehensive content filtering system, designed for enterprise use cases with compliance requirements. While this can mean more blocks initially, it also means more configuration options once you understand the system.

Azure's content filtering operates on four core categories:

- Hate and Fairness: Discriminatory content

- Sexual Content: Explicit material

- Violence: Violent or threatening content

- Self-Harm: Content related to self-injury

Each category can be configured with severity levels: Safe, Low, Medium, and High. The key insight is that Azure's default settings are often overly aggressive for legitimate business use cases.

The recommended configuration for most enterprise applications:

- Set all standard filters to "High" severity (least restrictive)

- Keep Prompt Shield enabled (protects against prompt injection)

- Enable Asynchronous Filtering mode (improves performance)

The async filtering mode is particularly important. By default, Azure runs filters synchronously—checking the prompt, then generating a response, then checking the response. This creates latency. Async mode allows filters to run parallel to response streaming, significantly improving performance while still providing protection.

To configure these settings in Azure OpenAI Studio:

- Navigate to Safety + Security → Create Content Filter

- Set Input and Output filters to "High" severity for each category

- Under "Streaming mode," select "Asynchronous Filters"

- Assign the filter configuration to your model deployment

For organizations that need even more flexibility, apply for Modified Content Filtering access. This requires a business justification but can enable even fewer restrictions for legitimate use cases.

One important note: Azure content filters can flag medical content (operative notes, clinical descriptions) as "self-harm" even in purely clinical contexts. If you're building healthcare applications, prefix your prompts with context like "The following is clinical documentation for medical coding purposes and does not describe intentional self-injury."

Unified API Solutions with laozhang.ai

If you're experiencing content filter issues across multiple platforms—or if you need the flexibility to route requests to different models based on content type—a unified API gateway offers a practical solution.

The core problem with platform-specific approaches is that you're locked into one model's filtering behavior. What works for ChatGPT may be blocked by Gemini. What Claude handles well might trigger Azure's filters. This creates ongoing friction for developers and content creators who work with diverse content types.

laozhang.ai provides access to 100+ AI models through a single API endpoint. The key advantages for handling content filter issues:

- Model Switching: If one model blocks your request, route to another that handles it differently

- No Additional Filtering: laozhang.ai passes requests through without adding its own content filtering layer

- Unified Interface: One API key, one format, multiple model options

- Cost Efficiency: Starting from $0.0005/request with competitive pricing across all models

Here's a practical example using curl:

bashcurl https://api.laozhang.ai/v1/chat/completions \ -H "Content-Type: application/json" \ -H "Authorization: Bearer $LAOZHANG_API_KEY" \ -d '{ "model": "gpt-4", "messages": [{"role": "user", "content": "Your prompt here"}] }'

If GPT-4 blocks your request, switch models by changing the model parameter:

bashcurl https://api.laozhang.ai/v1/chat/completions \ -H "Content-Type: application/json" \ -H "Authorization: Bearer $LAOZHANG_API_KEY" \ -d '{ "model": "claude-3-sonnet", "messages": [{"role": "user", "content": "Your prompt here"}] }'

This approach is particularly valuable for:

- Developers building applications that need reliable completions regardless of content type

- Content creators working with educational material that triggers false positives

- Researchers exploring AI capabilities who need access to multiple models

- Businesses with diverse content needs that vary in sensitivity

The practical benefit is flexibility: rather than being stuck when one model blocks you, you can immediately try alternatives without changing your application architecture.

Decision Guide and Best Practices

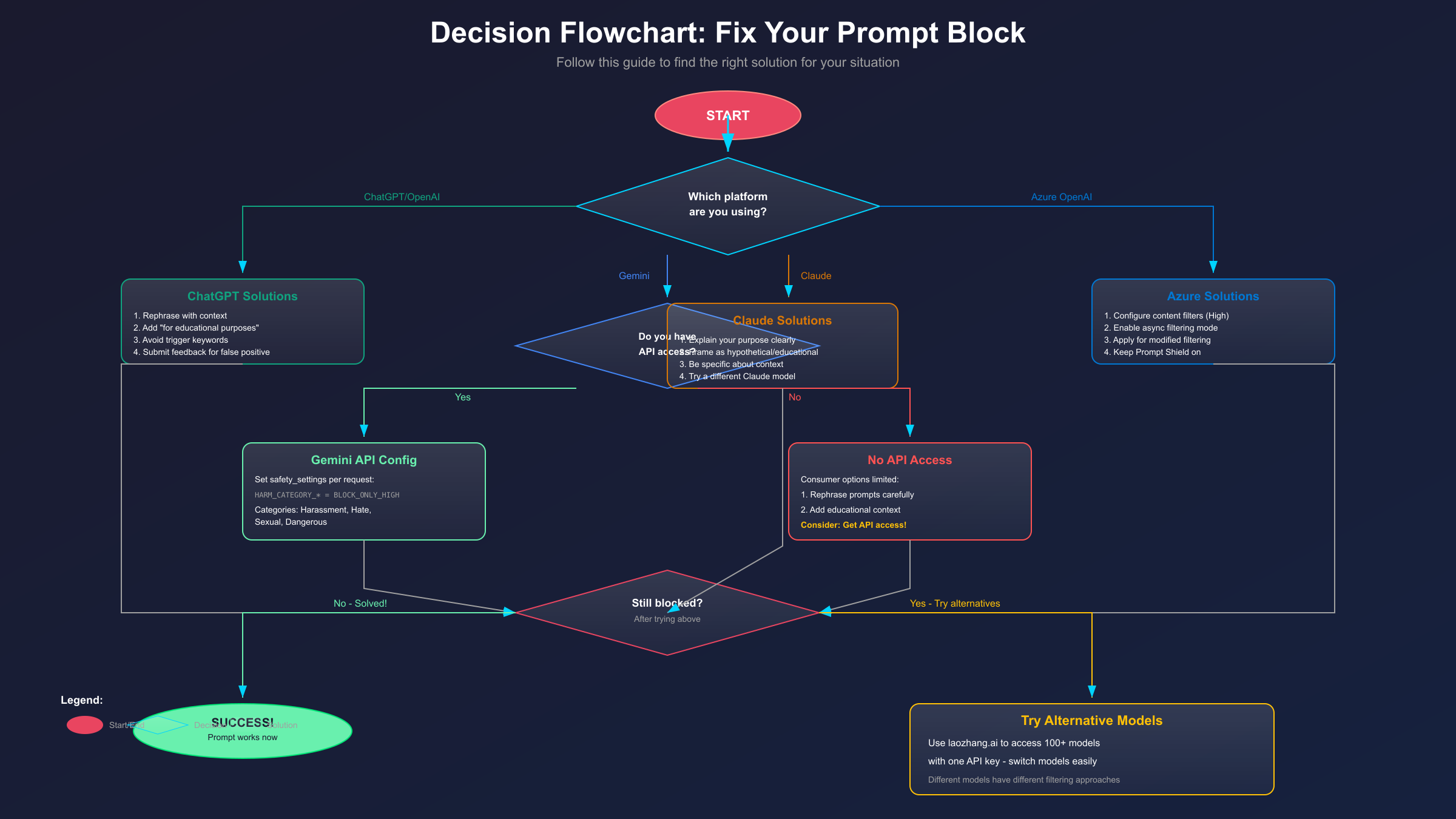

To help you quickly find the right solution, follow this decision framework:

Step 1: Identify your platform

- ChatGPT/OpenAI → Focus on rephrasing and context

- Gemini → Configure API safety settings

- Claude → Provide detailed purpose and role context

- Azure → Adjust severity levels and async mode

Step 2: Determine if you have API access

- Yes → Use API configuration options

- No → Focus on prompt engineering techniques

Step 3: Try the platform-specific solutions above

Step 4: If still blocked, consider alternatives

- Try a different model on the same platform

- Use laozhang.ai to access multiple models

- Evaluate if your request truly needs adjustment

General Best Practices That Work Across All Platforms:

-

Add explicit context: "For educational purposes," "As part of my security research," "In my role as a healthcare professional"

-

Clarify your role: Tell the AI who you are and why you need the information

-

Use neutral language: Replace loaded terms with neutral alternatives (e.g., "security testing" instead of "hacking")

-

Break complex requests into smaller parts: If a large request is blocked, decompose it

-

Avoid unnecessary trigger words: If "kill" isn't essential to your meaning, use alternatives like "terminate," "stop," or "end"

Legal and Ethical Considerations:

It's important to distinguish between legitimate workarounds and actual policy violations:

- Legitimate: Rephrasing to clarify educational, research, or professional intent

- Legitimate: Configuring API settings for your specific use case within guidelines

- Legitimate: Using alternative models that handle your content type better

- Not legitimate: Attempting to generate content that violates platform terms of service

- Not legitimate: Using "jailbreak" prompts to bypass safety for harmful purposes

Remember that repeated violations can result in account suspension. If your use case genuinely requires content that platforms consistently block, the issue may not be false positives—consider whether your request aligns with acceptable use policies.

Frequently Asked Questions

Q: Will I get banned for trying to work around content filters?

Legitimate workarounds like rephrasing prompts and configuring API settings are explicitly allowed. You won't be banned for adding context or adjusting your safety_settings in Gemini. However, persistent attempts to generate actually harmful content, or using known "jailbreak" prompts, can result in warnings or account suspension.

Q: Why does the same prompt sometimes work and sometimes get blocked?

AI systems have probabilistic elements. The same prompt can receive slightly different probability scores on different attempts. Additionally, platforms continually update their safety systems, so behavior can change over time. If a prompt works inconsistently, try adding more explicit context to push it clearly into the "safe" category.

Q: Is API access less restrictive than consumer interfaces?

Generally, yes—but not because APIs are "unfiltered." APIs provide configuration options that consumer interfaces don't expose. In Gemini, you can set safety thresholds. In Azure, you can configure severity levels. The models themselves still have built-in safety training, but you have more control over the filtering layer.

Q: Which AI platform has the least restrictive content filtering?

This varies by content type. Claude often handles nuanced topics well because it understands context. Gemini API is highly configurable. ChatGPT is relatively balanced. Azure can be configured extensively but requires enterprise access. Rather than looking for the "least restrictive," focus on which platform handles your specific content type best.

Q: How do I report false positives?

For ChatGPT, click the warning link and submit feedback. For Gemini, post on the Google AI Developers Forum. For Claude, contact Anthropic support. For Azure, open a support ticket through the Azure portal. Your feedback genuinely helps improve these systems.

Conclusion

Content filter blocks are frustrating, but they're rarely insurmountable for legitimate use cases. The key insights from this guide:

- Understand the mechanism: Different platforms use different approaches—filters, principles, or configurable thresholds

- Context is king: Most false positives resolve when you clarify your intent and purpose

- API access provides options: If you're blocked frequently, API configuration gives more control

- Flexibility matters: Services like laozhang.ai let you route to different models when one blocks you

- Stay legitimate: Workarounds for genuine use cases are fine; actual policy violations aren't

The AI safety landscape continues to evolve as companies balance user utility with responsible AI deployment. By understanding how these systems work and applying the platform-specific solutions in this guide, you can accomplish your legitimate goals while working within the boundaries these platforms have established.

If you're still experiencing persistent blocks after trying these solutions, consider whether your specific use case requires specialized access—many platforms offer enterprise tiers or researcher access programs with different filtering configurations for qualified applicants.